The table engine plays a critical part in ClickHouse. It determines the data storage and reading and the support for concurrent read and write, index, the types of queries, and the host-backup replication.

ClickHouse provides about 28 table engines for different purposes. For example, Log family for small table data analysis, MergeTree family for big-volume data analysis, and Integration for external data integration. There is also a replication table Replicated and distributed table Distributed. New users may feel confused because of all the choices.

This article explains ClickHouse table engines to help users get started with ClickHouse.

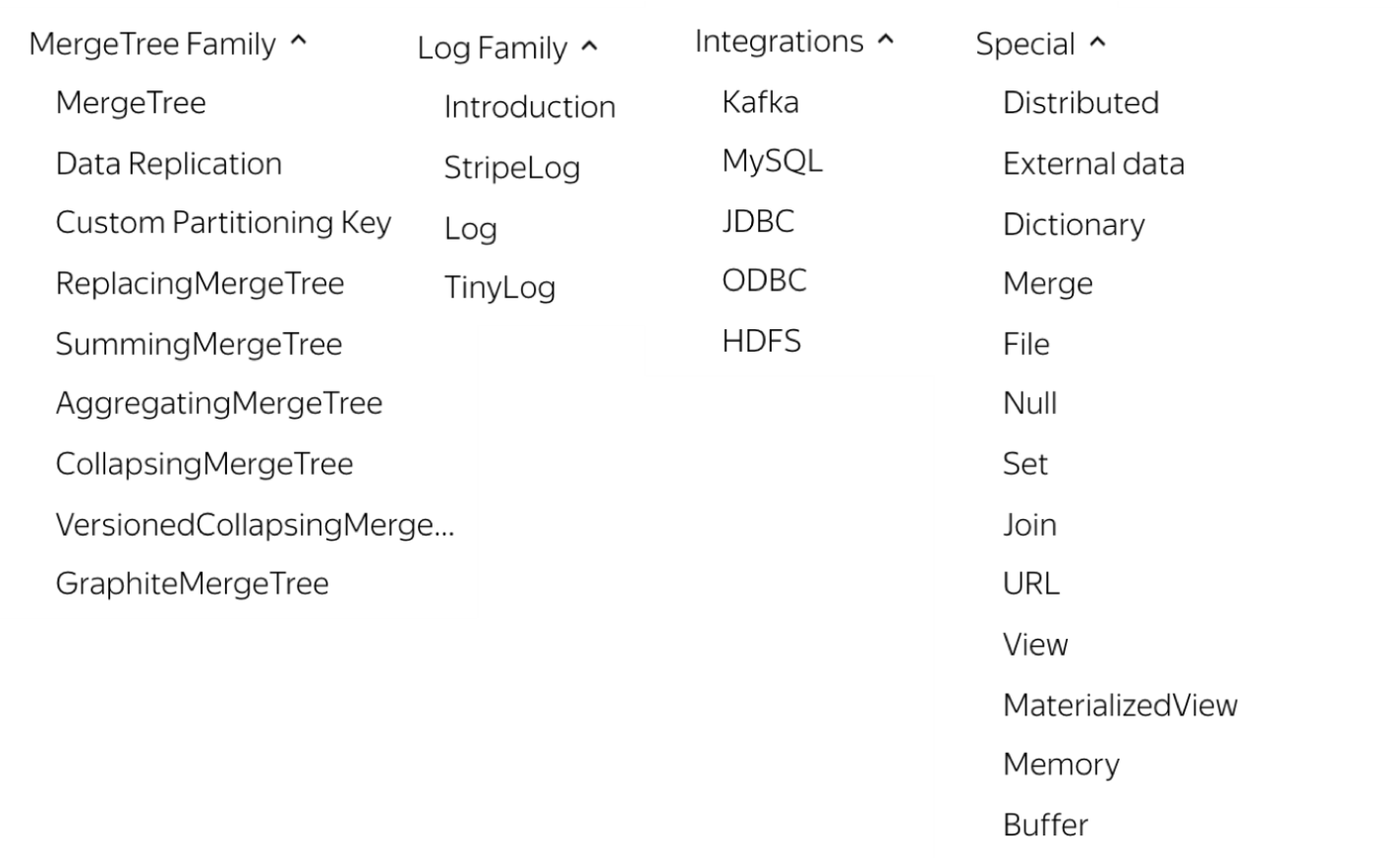

The following figure shows a summary of all the table engines provided by ClickHouse:

There are four series, Log, MergeTree, Integration, and Special. Among them, there are two special table engines, Replicated and Distributed, which are functionally orthogonal to other table engines.

Log table engines are easy in function. They are mainly used to write data quickly to small tables (containing about one million rows) and read all these tables. Log table engines have the following characteristics in common:

The differences are listed below:

The system table engines are mainly used to import external data to ClickHouse or perform operations on external data sources in ClickHouse.

The Special series table engines are mostly customized for specific scenarios. A few are introduced briefly below:

Log, Special, and Integration are mainly used for special purposes in relatively limited scenarios. The MergeTree family is the officially recommended storage engine, which supports almost all ClickHouse core functions.

The following section will focus on: MergeTree, ReplacingMergeTree, CollapsingMergeTree, VersionedCollapsingMergeTree, SummingMergeTree, and AggregatingMergeTree.

The MergeTree table engine is mainly used to analyze large amounts of data. It supports features, such as data partitioning, storage ordering, primary key indexing, sparse indexing, data TTL. MergeTree supports all ClickHouse SQL syntaxes with some features different from MySQL. For example, primary keys are not used for deduplication in MergeTree.

As shown in the following table creation DDL, the primary key of test_tbl is (id, create_time). The storage is sorted by the primary key and the data is partitioned by create_time. The data of the latest month is retained.

CREATE TABLE test_tbl (

id UInt16,

create_time Date,

comment Nullable(String)

) ENGINE = MergeTree()

PARTITION BY create_time

ORDER BY (id, create_time)

PRIMARY KEY (id, create_time)

TTL create_time + INTERVAL 1 MONTH

SETTINGS index_granularity=8192;Write Data: We have written several data records with the same primary key.

Insert into test_tbl values (0,'2019-12-12', null);

Insert into test_tbl values (0,'2019-12-12', null);

Insert into test_tbl values (1,'2019-12-13', null);

Insert into test_tbl values (1,'2019-12-13', null);

Insert into test_tbl values (2,'2019-12-14', null);Data Query: Although only three rows of data share the same id and create_time, five rows are displayed in the result.

select count(*) from test_tbl;

┌─count()─┐

│ 5 │

└─────────┘

select * from test_tbl;

┌─id─┬─create_time─┬─comment─┐

│ 2 │ 2019-12-14 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 1 │ 2019-12-13 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 0 │ 2019-12-12 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 1 │ 2019-12-13 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 0 │ 2019-12-12 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘Since MergeTree is similar to the LSM tree structure, many processing logics in the storage layer do not take effect until Compaction. Therefore, complete the execution of Compaction in the background forcibly and query after that. There are still 5 rows of data.

optimize table test_tbl final;

select count(*) from test_tbl;

┌─count()─┐

│ 5 │

└─────────┘

select * from test_tbl;

┌─id─┬─create_time─┬─comment─┐

│ 2 │ 2019-12-14 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 0 │ 2019-12-12 │ ᴺᵁᴸᴸ │

│ 0 │ 2019-12-12 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 1 │ 2019-12-13 │ ᴺᵁᴸᴸ │

│ 1 │ 2019-12-13 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘The primary key index in MergeTree is mainly used to accelerate queries rather than keeping records unique in databases like MySQL. Even after the Compaction operation is completed, the data rows with the same primary key still co-exist.

ClickHouse provides the ReplacingMergeTree engine for deduplication since MergeTree does not support primary key deduplication.

Example:

-- Create a table

CREATE TABLE test_tbl_replacing (

id UInt16,

create_time Date,

comment Nullable(String)

) ENGINE = ReplacingMergeTree()

PARTITION BY create_time

ORDER BY (id, create_time)

PRIMARY KEY (id, create_time)

TTL create_time + INTERVAL 1 MONTH

SETTINGS index_granularity=8192;

-- Write data with the same primary key.

insert into test_tbl_replacing values(0, '2019-12-12', null);

insert into test_tbl_replacing values(0, '2019-12-12', null);

insert into test_tbl_replacing values(1, '2019-12-13', null);

insert into test_tbl_replacing values(1, '2019-12-13', null);

insert into test_tbl_replacing values(2, '2019-12-14', null);

-- The query result shows that the data with the same primary key still exists before the Compaction.

Select count (*) from test_tbl_replacement;

┌─count()─┐

Last 5 minutes

└-----┘

select count(*) from test_tbl_replacing;

┌─count()─┐

│ 5 │

└─────────┘

select * from test_tbl_replacing;

┌─id─┬─create_time─┬─comment─┐

│ 0 │ 2019-12-12 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 0 │ 2019-12-12 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 1 │ 2019-12-13 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 1 │ 2019-12-13 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 2 │ 2019-12-14 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

-- Force background Compaction:

optimize table test_tbl_replacing final;

-- Query again, and the data with repetitive primary keys has disappeared.

select count(*) from test_tbl_replacing;

┌─count()─┐

│ 3 │

└─────────┘

select * from test_tbl_replacing;

┌─id─┬─create_time─┬─comment─┐

│ 2 │ 2019-12-14 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 1 │ 2019-12-13 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘

┌─id─┬─create_time─┬─comment─┐

│ 0 │ 2019-12-12 │ ᴺᵁᴸᴸ │

└────┴─────────────┴─────────┘Although ReplacingMergeTree supports primary key deduplication, it still has the following disadvantages:

Therefore, ReplacingmergeTree is usually used to ensure that data is deduplicated at the end of the query process. It cannot ensure that the primary keys are different during the query process.

ClickHouse provides CollapsingMergeTree to make up the restrictions in ReplacingMergeTree. CollapsingMergeTree requires a flag column Sign to be specified in the table creation statement. The rows with the same primary key and opposite Signs will be collapsed when Compaction is performed in the background. This is called a delete operation.

CollapsingMergeTree divides rows into two types based on the value of the Sign. The row whose Sign is 1 is called a state row, and the row whose Sign is -1 is called a cancel row.

A state row is written each time a state is added. A cancel row is written when a state is deleted.

When the Compaction operation is performed in the background, the state row and the cancel row are automatically collapsed (deleted). For data before compaction, the state row and the cancel row co-exist.

The business layer should be modified to collapse (delete) the primary key:

Example:

-- Create a table

CREATE TABLE UAct

(

UserID UInt64,

PageViews UInt8,

Duration UInt8,

Sign Int8

)

ENGINE = CollapsingMergeTree(Sign)

ORDER BY UserID;

-- Insert a state row. Note that the value of the sign column is 1.

INSERT INTO UAct VALUES (4324182021466249494, 5, 146, 1);

-- Insert a cancel row to counteract the above state row. Note that the value of the sign column is -1, and other values are consistent with the state row;

-- Insert a new state row with the same primary key to update PageViews from 5 to 6 and Duration from 146 to 185.

INSERT INTO UAct VALUES (4324182021466249494, 5, 146, -1), (4324182021466249494, 6, 185, 1);

-- Query data. It can be seen that the state row and the cancel row coexist before the Compaction.

SELECT * FROM UAct;

┌──────────────UserID─┬─PageViews─┬─Duration─┬─Sign─┐

│ 4324182021466249494 │ 5 │ 146 │ -1 │

│ 4324182021466249494 │ 6 │ 185 │ 1 │

└─────────────────────┴───────────┴──────────┴──────┘

┌──────────────UserID─┬─PageViews─┬─Duration─┬─Sign─┐

│ 4324182021466249494 │ 5 │ 146 │ 1 │

└─────────────────────┴───────────┴──────────┴──────┘

-- Rewrite the SQL statement to obtain the correct sum value:

-- sum(PageViews) => sum(PageViews * Sign)、

-- sum(Duration) => sum(Duration * Sign)

SELECT

UserID,

sum(PageViews * Sign) AS PageViews,

sum(Duration * Sign) AS Duration

FROM UAct

GROUP BY UserID

HAVING sum(Sign) > 0;

┌──────────────UserID─┬─PageViews─┬─Duration─┐

│ 4324182021466249494 │ 6 │ 185 │

└─────────────────────┴───────────┴──────────┘

-- Force background Compaction

optimize table UAct final;

-- Query again. It can be seen that the state row and the cancel row have been collapsed, and only the latest state row remains.

select * from UAct;

┌──────────────UserID─┬─PageViews─┬─Duration─┬─Sign─┐

│ 4324182021466249494 │ 6 │ 185 │ 1 │

└─────────────────────┴───────────┴──────────┴──────┘CollapsingMergeTree can delete data with the same primary key timely. However, when the state is constantly changing and data is written in multiple threads in parallel, the state row and the cancel row may be out of order, resulting in abnormal collapsing.

Example:

Example of out-of-order insertion.

-- Create a table

CREATE TABLE UAct_order

(

UserID UInt64,

PageViews UInt8,

Duration UInt8,

Sign Int8

)

ENGINE = CollapsingMergeTree(Sign)

ORDER BY UserID;

-- Insert a cancel row first

INSERT INTO UAct_order VALUES (4324182021466249495, 5, 146, -1);

-- Insert a state row after

INSERT INTO UAct_order VALUES (4324182021466249495, 5, 146, 1);

-- Force Compaction

optimize table UAct_order final;

-- It can be seen that the data with the same primary key cannot be collapsed even after the Compaction: Two rows of data still exist.

select * from UAct_order;

┌──────────────UserID─┬─PageViews─┬─Duration─┬─Sign─┐

│ 4324182021466249495 │ 5 │ 146 │ -1 │

│ 4324182021466249495 │ 5 │ 146 │ 1 │

└─────────────────────┴───────────┴──────────┴──────┘The VersionedCollapsingMergeTree table engine adds a Version column to the CREATE TABLE statement to resolve the issue when the data cannot be collapsed when written out-of-order through CollapsingMergeTree. It records the correspondence between the state row and the cancel row in case of disorder. The rows with the same primary key, the same Version, and opposite Signs will be deleted when performing the Compaction operation.

Similar to CollapsingMergeTree, the business layer needs to rewrite SQL, changing count(), sum(col) into sum(Sign), sum(col * Sign) to obtain the correct results.

Example:

Example of out-of-order insertion.

-- Create a table

CREATE TABLE UAct_version

(

UserID UInt64,

PageViews UInt8,

Duration UInt8,

Sign Int8,

Version UInt8

)

ENGINE = VersionedCollapsingMergeTree(Sign, Version)

ORDER BY UserID;

-- Insert a cancel row first. Note: Signz =-1 and Version = 1

INSERT INTO UAct_version VALUES (4324182021466249494, 5, 146, -1, 1);

-- Insert a state row (Sign = 1 and Version = 1) and a new state row (Sign = 1 and Version = 2) to update PageViews from 5 to 6, and Duration from 146 to 185.

INSERT INTO UAct_version VALUES (4324182021466249494, 5, 146, 1, 1),(4324182021466249494, 6, 185, 1, 2);

-- Query, and all rows are visible before Compaction.

SELECT * FROM UAct_version;

┌──────────────UserID─┬─PageViews─┬─Duration─┬─Sign─┐

│ 4324182021466249494 │ 5 │ 146 │ -1 │

│ 4324182021466249494 │ 6 │ 185 │ 1 │

└─────────────────────┴───────────┴──────────┴──────┘

┌──────────────UserID─┬─PageViews─┬─Duration─┬─Sign─┐

│ 4324182021466249494 │ 5 │ 146 │ 1 │

└─────────────────────┴───────────┴──────────┴──────┘

-- Rewrite the SQL statement to obtain the correct sum value:

-- sum(PageViews) => sum(PageViews * Sign)、

-- sum(Duration) => sum(Duration * Sign)

SELECT

UserID,

sum(PageViews * Sign) AS PageViews,

sum(Duration * Sign) AS Duration

FROM UAct_version

GROUP BY UserID

HAVING sum(Sign) > 0;

┌──────────────UserID─┬─PageViews─┬─Duration─┐

│ 4324182021466249494 │ 6 │ 185 │

└─────────────────────┴───────────┴──────────┘

-- Force background Compaction

optimize table UAct_version final;

-- Query again. It can be seen that the rows can be collapsed correctly even when the cancel row and the state row are out of order.

select * from UAct_version;

┌──────────────UserID─┬─PageViews─┬─Duration─┬─Sign─┬─Version─┐

│ 4324182021466249494 │ 6 │ 185 │ 1 │ 2 │

└─────────────────────┴───────────┴──────────┴──────┴─────────┘ClickHouse supports the pre-aggregation of the primary key columns in SummingMergeTree. When Compaction is performed in the background, multiple rows with the same primary key are summed with results shown in one row. This reduces the storage footprint and improves the aggregation computing performance.

Noteworthy Points:

Example:

-- Create a table

CREATE TABLE summtt

(

key UInt32,

value UInt32

)

ENGINE = SummingMergeTree()

ORDER BY key

-- Insert data

INSERT INTO summtt Values(1,1),(1,2),(2,1)

-- Query before Compaction. Multiple rows still exist.

select * from summtt;

┌─key─┬─value─┐

│ 1 │ 1 │

│ 1 │ 2 │

│ 2 │ 1 │

└─────┴───────┘

-- Use GROUP BY to perform aggregation computing.

SELECT key, sum(value) FROM summtt GROUP BY key

┌─key─┬─sum(value)─┐

│ 2 │ 1 │

│ 1 │ 3 │

└─────┴────────────┘

-- Force Compaction

optimize table summtt final;

-- Query after Compaction, and it can be seen that the data has been pre-aggregated

select * from summtt;

┌─key─┬─value─┐

│ 1 │ 3 │

│ 2 │ 1 │

└─────┴───────┘

-- After compaction, GROUP BY is still needed for aggregation computing.

SELECT key, sum(value) FROM summtt GROUP BY key

┌─key─┬─sum(value)─┐

│ 2 │ 1 │

│ 1 │ 3 │

└─────┴────────────┘AggregatingMergeTree is one of the pre-aggregation engines that improve the performance of aggregation computing. AggregatingMergeTree differs from SummingMergeTree. SummingMergeTree performs sum aggregation on non-primary-key columns, while AggregatingMergeTree can specify various aggregation functions.

The AggregatingMergeTree syntax is complex and needs to be used in combination with materialized views or AggregateFunction, a special data type of ClickHouse. There are also unique methods and requirements for insert and select operations. Use -State syntax for writing and -Merge syntax for querying.

Example One: AggregatingMergeTree syntax with materialized views.

-- Create a detail table

CREATE TABLE visits

(

UserID UInt64,

CounterID UInt8,

StartDate Date,

Sign Int8

)

ENGINE = CollapsingMergeTree(Sign)

ORDER BY UserID;

-- Create a materialized view for the detail table which pre-aggregates the detail table

-- Note that the pre-aggregation functions are sumState and uniqState, corresponding to the write syntax <agg>-State.

CREATE MATERIALIZED VIEW visits_agg_view

ENGINE = AggregatingMergeTree() PARTITION BY toYYYYMM(StartDate) ORDER BY (CounterID, StartDate)

AS SELECT

CounterID,

StartDate,

sumState(Sign) AS Visits,

uniqState(UserID) AS Users

FROM visits

GROUP BY CounterID, StartDate;

-- Insert detail data

INSERT INTO visits VALUES(0, 0, '2019-11-11', 1);

INSERT INTO visits VALUES(1, 1, '2019-11-12', 1);

-- Perform the final aggregation on materialized views

-- Note that the aggregation functions are sumMerge and uniqMerge, corresponding to the query syntax <agg>-Merge.

SELECT

StartDate,

sumMerge(Visits) AS Visits,

uniqMerge(Users) AS Users

FROM visits_agg_view

GROUP BY StartDate

ORDER BY StartDate;

-- The ordinary function sum and uniq are no longer available

-- SQL statement will report the error as follow: Illegal type AggregateFunction(sum, Int8) of argument

SELECT

StartDate,

sum(Visits),

uniq(Users)

FROM visits_agg_view

GROUP BY StartDate

ORDER BY StartDate;Example Two: AggregatingMergeTree syntax with the special data type AggregateFunction.

-- Create a detail table

CREATE TABLE detail_table

( CounterID UInt8,

StartDate Date,

UserID UInt64

) ENGINE = MergeTree()

PARTITION BY toYYYYMM(StartDate)

ORDER BY (CounterID, StartDate);

-- Insert detail data.

INSERT INTO detail_table VALUES(0, '2019-11-11', 1);

INSERT INTO detail_table VALUES(1, '2019-11-12', 1);

-- Create a pre-aggregation table,

-- Note: the type of the UserID column is AggregateFunction(uniq, UInt64)

CREATE TABLE agg_table

( CounterID UInt8,

StartDate Date,

UserID AggregateFunction(uniq, UInt64)

) ENGINE = AggregatingMergeTree()

PARTITION BY toYYYYMM(StartDate)

ORDER BY (CounterID, StartDate);

-- Read data from the detail table and insert the data into the aggregation table.

-- Note: the aggregation function used in the subquery is uniqState, corresponding to the write syntax <agg>-State

INSERT INTO agg_table

select CounterID, StartDate, uniqState(UserID)

from detail_table

group by CounterID, StartDate

-- The ordinary insert statement cannot be used to insert data into AggregatingMergeTree.

-- The SQL statement will report the error: Cannot convert UInt64 to AggregateFunction(uniq, UInt64)

INSERT INTO agg_table VALUES (1,'2019-11-12',1);

-- Query data from the aggregation table.

-- Note: the aggregation function used in select is uniqMerge, corresponding to the query syntax <agg>-Merge

SELECT uniqMerge(UserID) AS state

FROM agg_table

GROUP BY CounterID, StartDate;ClickHouse provides various table engines to meet different business requirements. This article gives an overview of the ClickHouse table engines and presents a detailed comparison and sample demonstration of the MergeTree table engines.

In addition to these table engines, ClickHouse provides advanced table engines, such as Replicated and Distributed. Further explanations about these engines will be released in the future.

Alibaba Cloud has launched the ClickHouse cloud hosting product. You can visit the product homepage for more information: ApsaraDB for ClickHouse.

ClickHouse Kernel Analysis – Storage Structure and Query Acceleration of MergeTree

ApsaraDB - July 29, 2022

ApsaraDB - July 8, 2021

ApsaraDB - March 15, 2024

ApsaraDB - May 7, 2021

ApsaraDB - July 7, 2021

ApsaraDB - May 7, 2021

ApsaraDB for ClickHouse

ApsaraDB for ClickHouse

ApsaraDB for ClickHouse is a distributed column-oriented database service that provides real-time analysis.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn More Log Management for AIOps Solution

Log Management for AIOps Solution

Log into an artificial intelligence for IT operations (AIOps) environment with an intelligent, all-in-one, and out-of-the-box log management solution

Learn More ApsaraDB for OceanBase

ApsaraDB for OceanBase

A financial-grade distributed relational database that features high stability, high scalability, and high performance.

Learn MoreMore Posts by ApsaraDB