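This article describes common Linux kernel network parameters and solutions to common problems related to them.

Use the self-service troubleshooting tool

The Alibaba Cloud self-service troubleshooting tool can quickly check your kernel parameter configuration and generate a clear diagnostic report.

Go to the self-service troubleshooting page and switch to the target region.

If the tool does not locate your problem, continue with the manual troubleshooting steps below.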

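View and modify kernel parameters

Precautions

Before you modify kernel parameters, note the following:

Base every change on actual requirements, ideally supported by relevant data. Do not adjust kernel parameters arbitrarily.

Understand what each parameter does. Kernel parameters may differ between environments of different types or versions. For more information, see Common Linux kernel parameters.

Back up important data on the ECS instance. For more information, see Create a snapshot.

Modify parameters

Both /proc/sys/ and /etc/sysctl.conf let you modify kernel parameters while the instance is running. They differ as follows:

/proc/sys/ is a virtual file system that provides access to kernel parameters. Its net subdirectory contains all network kernel parameters currently enabled on the system. You can modify them at runtime, but the changes are lost when the instance restarts, so this method is generally used to temporarily verify the effect of a change.

/etc/sysctl.conf is a configuration file. Modifying it changes the default values that kernel parameters are set to, and the changes persist after the instance restarts.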

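The files under /proc/sys/ correspond to the full parameter names used in /etc/sysctl.conf, with the dots in the name replaced by slashes. For example, the net.ipv4.tcp_tw_recycle parameter corresponds to the /proc/sys/net/ipv4/tcp_tw_recycle file, and the content of that file is the parameter value.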
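The name-to-path mapping can be expressed as a small shell helper. This is a minimal sketch; the function name sysctl_path is hypothetical, and it assumes that no component of the parameter name itself contains a dot (VLAN interface names such as eth0.100 do, and sysctl writes those with slashes instead).

```bash
#!/usr/bin/env bash
# sysctl_path (hypothetical helper): map a dotted sysctl name to its /proc/sys file.
# Assumes no name component contains a dot (e.g. VLAN interfaces like eth0.100).
sysctl_path() {
  # tr replaces every "." in the parameter name with "/".
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}
```

For example, cat "$(sysctl_path net.ipv4.tcp_fin_timeout)" prints the same value as sysctl -n net.ipv4.tcp_fin_timeout.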

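The tcp_tw_recycle setting was removed in Linux kernel 4.12. On kernel 4.12 or later, remove any net.ipv4.tcp_tw_recycle entries from sysctl.conf; the net.ipv4.tcp_tw_recycle parameter can be used only if your kernel version is earlier than 4.12.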
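To decide whether net.ipv4.tcp_tw_recycle applies to an instance, you can compare the running kernel version with 4.12. A minimal sketch, assuming GNU coreutils (for sort -V) and a uname -r string whose upstream version comes before the first "-"; the function name tw_recycle_supported is hypothetical.

```bash
#!/usr/bin/env bash
# tw_recycle_supported (hypothetical helper): succeed if the given kernel
# version is older than 4.12, i.e. net.ipv4.tcp_tw_recycle still exists.
# Usage: tw_recycle_supported "$(uname -r)"
tw_recycle_supported() {
  # Strip the distribution suffix: 3.10.0-1160.el7.x86_64 -> 3.10.0
  local kver="${1%%-*}"
  [ "$kver" = "4.12" ] && return 1
  # sort -V orders version strings; kver is older if it sorts first.
  [ "$(printf '%s\n%s\n' "$kver" 4.12 | sort -V | head -n1)" = "$kver" ]
}
```

On the instance, run tw_recycle_supported "$(uname -r)" && echo available || echo removed.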

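View and modify kernel parameters through the /proc/sys/ directory

Log on to the Linux ECS instance.

For more information, see Overview of ECS remote connection methods.

Use the cat command to view the content of the corresponding file. For example, run the following command to view the value of net.ipv4.tcp_tw_recycle:

cat /proc/sys/net/ipv4/tcp_tw_recycle

Use the echo command to write to the file that corresponds to a kernel parameter (this requires root privileges). For example, run the following command to change the value of net.ipv4.tcp_tw_recycle to 0:

echo "0" > /proc/sys/net/ipv4/tcp_tw_recycle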

View and modify kernel parameters through the /etc/sysctl.conf file

Log on to the Linux ECS instance.

For more information, see Overview of ECS remote connection methods.

Run the following command to view all parameters currently in effect on the system:

sysctl -a

Part of the output is shown below:

net.ipv4.tcp_app_win = 31
net.ipv4.tcp_adv_win_scale = 2
net.ipv4.tcp_tw_reuse = 0
net.ipv4.tcp_frto = 2
net.ipv4.tcp_frto_response = 0
net.ipv4.tcp_low_latency = 0
net.ipv4.tcp_no_metrics_save = 0
net.ipv4.tcp_moderate_rcvbuf = 1
net.ipv4.tcp_tso_win_divisor = 3
net.ipv4.tcp_congestion_control = cubic
net.ipv4.tcp_abc = 0
net.ipv4.tcp_mtu_probing = 0
net.ipv4.tcp_base_mss = 512
net.ipv4.tcp_workaround_signed_windows = 0
net.ipv4.tcp_challenge_ack_limit = 1000
net.ipv4.tcp_limit_output_bytes = 262144
net.ipv4.tcp_dma_copybreak = 4096
net.ipv4.tcp_slow_start_after_idle = 1
net.ipv4.cipso_cache_enable = 1
net.ipv4.cipso_cache_bucket_size = 10
net.ipv4.cipso_rbm_optfmt = 0
net.ipv4.cipso_rbm_strictvalid = 1

Modify kernel parameters.

Temporary modification:

/sbin/sysctl -w kernel.parameter="[$Example]"

Note: Replace kernel.parameter with the kernel parameter name and [$Example] with the parameter value. For example, run sysctl -w net.ipv4.tcp_tw_recycle="0" to change the value of net.ipv4.tcp_tw_recycle to 0.

Permanent modification:

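Run the following command to open the /etc/sysctl.conf configuration file:

vim /etc/sysctl.conf

Press i to enter edit mode and modify kernel parameters as needed, one parameter per line in the following format:

net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1

Press Esc, enter :wq to save the file and exit. Then run the following command to apply the configuration: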

/sbin/sysctl -p
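The edit-and-reload steps above can also be scripted so that rerunning them does not create duplicate entries. The following is a minimal sketch under these assumptions: GNU sed (for sed -i), a value that contains no | or & characters, and a hypothetical function name sysctl_conf_set. It rewrites an existing uncommented KEY = VALUE line or appends one.

```bash
#!/usr/bin/env bash
# sysctl_conf_set (hypothetical helper): set KEY = VALUE in a sysctl config file.
# Usage: sysctl_conf_set KEY VALUE [FILE]   (FILE defaults to /etc/sysctl.conf)
# Assumes GNU sed and a VALUE without "|" or "&" characters.
sysctl_conf_set() {
  local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
  local key_re re
  # Escape the dots so "net.ipv4.x" matches literally in the regular expression.
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  # Match an uncommented line that assigns this key, with optional spaces.
  re="^[[:space:]]*${key_re}[[:space:]]*="
  if grep -q "$re" "$file" 2>/dev/null; then
    # Rewrite the existing assignment in place.
    sed -i "s|${re}.*|${key} = ${value}|" "$file"
  else
    # No assignment yet: append a new line.
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}
```

As root on the instance, for example: sysctl_conf_set net.ipv4.tcp_fin_timeout 10 && /sbin/sysctl -p.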

Common network kernel parameter issues and solutions

What do I do if I cannot remotely connect to a Linux ECS instance and the "nf_conntrack: table full, dropping packet" error appears in /var/log/messages?

Why does the "Time wait bucket table overflow" error appear in /var/log/messages?

What do I do if I cannot remotely connect to a Linux ECS instance and the "nf_conntrack: table full, dropping packet" error appears in /var/log/messages?

Symptom

You cannot remotely connect to the ECS instance. When you ping the instance, packets are lost or the ping fails, and the following error appears frequently in the /var/log/messages system log:

Feb 6 16:05:07 i-*** kernel: nf_conntrack: table full, dropping packet.
Feb 6 16:05:07 i-*** kernel: nf_conntrack: table full, dropping packet.
Feb 6 16:05:07 i-*** kernel: nf_conntrack: table full, dropping packet.
Feb 6 16:05:07 i-*** kernel: nf_conntrack: table full, dropping packet.

Cause

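ip_conntrack (named nf_conntrack in newer kernels) is the Linux connection tracking module that NAT relies on to track connection entries. It records tracked connections, such as established TCP connections, in a hash table. When this hash table is full, packets of new connections are dropped and the "nf_conntrack: table full, dropping packet" error is logged.

The kernel allocates space to track each TCP connection. The size of this space depends on the nf_conntrack_buckets and nf_conntrack_max parameters. By default, nf_conntrack_max is 4 times nf_conntrack_buckets, so increasing nf_conntrack_max is generally recommended.

Connection tracking consumes memory. We recommend that you increase nf_conntrack_max when the system is idle and has sufficient memory.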
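Before and after raising nf_conntrack_max, it helps to see how full the table actually is. The kernel exposes the current number of entries in /proc/sys/net/netfilter/nf_conntrack_count next to nf_conntrack_max. The following minimal sketch computes the usage percentage; the function names conntrack_pct and conntrack_report are hypothetical, and the live report works only when the nf_conntrack module is loaded.

```bash
#!/usr/bin/env bash
# conntrack_pct (hypothetical helper): integer percentage COUNT / MAX.
# Usage: conntrack_pct COUNT MAX
conntrack_pct() {
  local count="$1" max="$2"
  if [ "$max" -le 0 ]; then
    echo "invalid max: $max" >&2
    return 1
  fi
  echo $(( count * 100 / max ))
}

# conntrack_report (hypothetical helper): show live table usage.
conntrack_report() {
  local dir=/proc/sys/net/netfilter count max
  if [ ! -r "$dir/nf_conntrack_count" ]; then
    echo "nf_conntrack is not loaded" >&2
    return 1
  fi
  count=$(cat "$dir/nf_conntrack_count")
  max=$(cat "$dir/nf_conntrack_max")
  echo "nf_conntrack: $count / $max ($(conntrack_pct "$count" "$max")%)"
}
```

Run conntrack_report on the instance. A percentage that stays close to 100 means that packets of new connections are about to be dropped.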

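Solution

Connect to the instance by using VNC.

For more information, see Log on to a Linux instance by using password authentication.

Modify the nf_conntrack_max parameter value.

Run the following command to open the /etc/sysctl.conf file:

vi /etc/sysctl.conf

Press i to enter edit mode and modify nf_conntrack_max. For example, set the maximum number of hash table entries to 655350:

net.netfilter.nf_conntrack_max = 655350

In the same file, modify the timeout parameter nf_conntrack_tcp_timeout_established. For example, set it to 1200 seconds. The default timeout is 432000 seconds (5 days):

net.netfilter.nf_conntrack_tcp_timeout_established = 1200

Press Esc, enter :wq to save the file and exit.

Run the following command to apply the configuration:

sysctl -p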

Why does the "Time wait bucket table overflow" error appear in /var/log/messages?

Symptom

On a Linux ECS instance, the error "kernel: TCP: time wait bucket table overflow" appears frequently in /var/log/messages:

Feb 18 12:28:38 i-*** kernel: TCP: time wait bucket table overflow
Feb 18 12:28:44 i-*** kernel: printk: 227 messages suppressed.
Feb 18 12:28:44 i-*** kernel: TCP: time wait bucket table overflow
Feb 18 12:28:52 i-*** kernel: printk: 121 messages suppressed.
Feb 18 12:28:52 i-*** kernel: TCP: time wait bucket table overflow
Feb 18 12:28:53 i-*** kernel: printk: 351 messages suppressed.
Feb 18 12:28:53 i-*** kernel: TCP: time wait bucket table overflow
Feb 18 12:28:59 i-*** kernel: printk: 319 messages suppressed.

Cause

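The net.ipv4.tcp_max_tw_buckets parameter limits the number of sockets in TIME_WAIT state that the kernel maintains. When the number of connections in TIME_WAIT state on the ECS instance, plus the number of connections about to enter TIME_WAIT, exceeds the value of net.ipv4.tcp_max_tw_buckets, the "kernel: TCP: time wait bucket table overflow" error appears in /var/log/messages, and the kernel closes the TCP connections that exceed the limit.

Solution

You can increase net.ipv4.tcp_max_tw_buckets as appropriate. We also recommend that you improve how your application uses TCP connections. The following steps describe how to modify net.ipv4.tcp_max_tw_buckets.

Connect to the instance by using VNC.

For more information, see Log on to a Linux instance by using password authentication.

Run the following command to count TCP connections by state:

netstat -antp | awk 'NR>2 {print $6}' | sort | uniq -c

Output similar to the following indicates that 6300 connections are in TIME_WAIT state: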

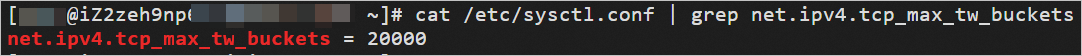

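6300 TIME_WAIT
40 LISTEN
20 ESTABLISHED
20 CONNECTED

Run the following command to view the net.ipv4.tcp_max_tw_buckets value configured in /etc/sysctl.conf:

cat /etc/sysctl.conf | grep net.ipv4.tcp_max_tw_buckets

In this example, the output shows that net.ipv4.tcp_max_tw_buckets is set to 20000.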
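To compare the TIME_WAIT count with the limit actually in effect (rather than the value written in /etc/sysctl.conf), you can count the state from netstat -ant output and read the live value with sysctl -n. A minimal sketch; count_tcp_state and tw_report are hypothetical helper names, and count_tcp_state expects the two header lines that netstat prints, which the NR>2 filter in the command above also skips.

```bash
#!/usr/bin/env bash
# count_tcp_state (hypothetical helper): count connections in STATE.
# Reads `netstat -ant` output on stdin; column 6 is the TCP state and
# the first two lines are headers.
count_tcp_state() {
  awk -v s="$1" 'NR > 2 && $6 == s { n++ } END { print n + 0 }'
}

# tw_report (hypothetical helper): TIME_WAIT count next to the live limit.
tw_report() {
  local tw limit
  tw=$(netstat -ant | count_tcp_state TIME_WAIT)
  limit=$(sysctl -n net.ipv4.tcp_max_tw_buckets)
  echo "TIME_WAIT: $tw / tcp_max_tw_buckets: $limit"
}
```

If tw_report shows the count regularly reaching the limit, the overflow messages above are expected.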

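Modify the net.ipv4.tcp_max_tw_buckets parameter value.

Run the following command to open the /etc/sysctl.conf file:

vi /etc/sysctl.conf

Press i to enter edit mode and modify net.ipv4.tcp_max_tw_buckets. For example, set it to 65535:

net.ipv4.tcp_max_tw_buckets = 65535

Press Esc, enter :wq to save the file and exit.

Run the following command to apply the configuration:

sysctl -p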

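Why are there a large number of TCP connections in FIN_WAIT2 state on a Linux ECS instance?

Symptom

A large number of TCP connections are in FIN_WAIT2 state on a Linux ECS instance.

Cause

This issue may have the following causes:

In an HTTP service, the server may actively close a connection for some reason, for example when a keep-alive timeout expires. After the client acknowledges the server's FIN, the server, as the side that actively closed the connection, enters FIN_WAIT2 state.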

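The TCP/IP protocol stack supports half-closed connections, and the TCP specification defines no timeout for FIN_WAIT2 (unlike TIME_WAIT). If the client never closes its side and nothing bounds the FIN_WAIT2 state, a connection can stay in FIN_WAIT2 until the system restarts, and an ever-growing number of FIN_WAIT2 connections may cause the kernel to crash. On Linux, how long a FIN_WAIT2 connection that the application has already closed is kept is bounded by net.ipv4.tcp_fin_timeout.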
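To see which clients leave connections in FIN_WAIT2, you can group those connections by remote address. A minimal sketch, assuming netstat -ant output on stdin (state in column 6, foreign address in column 5, two header lines); peers_in_state is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# peers_in_state (hypothetical helper): count connections in STATE per
# remote IP, busiest first. Reads `netstat -ant` output on stdin.
peers_in_state() {
  awk -v s="$1" '
    NR > 2 && $6 == s {
      ip = $5
      sub(/:[^:]*$/, "", ip)   # strip the trailing ":port"
      n[ip]++
    }
    END { for (ip in n) print n[ip], ip }
  ' | sort -rn
}
```

On the instance: netstat -ant | peers_in_state FIN_WAIT2 | head.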

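Solution

You can decrease net.ipv4.tcp_fin_timeout so that the system closes TCP connections in FIN_WAIT2 state sooner.

Connect to the instance by using VNC.

For more information, see Log on to a Linux instance by using password authentication.

Modify the net.ipv4.tcp_fin_timeout parameter value.

Run the following command to open the /etc/sysctl.conf file:

vi /etc/sysctl.conf

Press i to enter edit mode and modify net.ipv4.tcp_fin_timeout. For example, set it to 10:

net.ipv4.tcp_fin_timeout = 10

Press Esc, enter :wq to save the file and exit.

Run the following command to apply the configuration:

sysctl -p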

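Why are there a large number of TCP connections in CLOSE_WAIT state on a Linux ECS instance?

Symptom

A large number of TCP connections are in CLOSE_WAIT state on a Linux ECS instance.

Cause

The number of connections in CLOSE_WAIT state exceeds the normal range, which usually means that the local application is not closing connections after the peer has closed them.

Closing a TCP connection takes a four-way handshake, and either end can initiate it. If the peer initiates the close but the local end does not close its side, the connection stays in CLOSE_WAIT state. The connection is only half-closed, but the peer will send no more data on it, so the local application should release it promptly.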
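Because CLOSE_WAIT connections are held open by the local application, grouping them by owning process usually points to the program that is not closing its sockets. A minimal sketch that reads netstat -antp output, where column 7 is PID/Program name (shown as - when netstat cannot see the owner, for example without root); close_wait_by_process is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# close_wait_by_process (hypothetical helper): count CLOSE_WAIT connections
# per owning process, largest first. Reads `netstat -antp` output on stdin.
close_wait_by_process() {
  awk '
    NR > 2 && $6 == "CLOSE_WAIT" { n[$7]++ }   # $7 is "PID/Program name"
    END { for (p in n) print n[p], p }
  ' | sort -rn
}
```

As root on the instance: netstat -antp | close_wait_by_process | head.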

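Solution

We recommend that your application promptly detect whether a connection has been closed by the peer and close such connections in its program logic.

Connect to the ECS instance remotely.

For more information, see Overview of ECS remote connection methods.

In your program, detect and close TCP connections in CLOSE_WAIT state.

The read and write functions of most programming languages already report when the peer has closed a connection. The following describes how to close such connections in Java and C:

Java

Check the result of the read method. When read returns -1, the end of the stream has been reached, which means the peer has closed the connection. Call the close method to close the connection.

C

Check the return value of read:

If it equals 0, the peer has closed the connection, and you can close it.

If it is less than 0, check errno. If errno is not EAGAIN, you can also close the connection.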

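Why can't a client access a server-side ECS or RDS instance after NAT is configured on the client?

Symptom

After NAT is configured on the client, the client cannot access the server-side ECS or RDS instance. This also applies to ECS instances in a VPC that has SNAT configured.

Cause

This issue may occur because net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps are both set to 1 on the server.

When both net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps are 1 on the server, the server checks whether the timestamps in the TCP packets from each source address are increasing, and does not respond to packets whose timestamps are not. Behind NAT, many clients share the same source IP address but have independent timestamp clocks, so the timestamps the server sees from that address are not monotonically increasing, and some of the clients' connection requests are dropped.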
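The following minimal sketch checks whether a Linux server has the combination described above. It reads the two files under /proc/sys (the directory can be overridden, which is how the sketch can be tested against a copy), treats any non-zero tcp_timestamps value as enabled, and reports tcp_tw_recycle as absent on kernels where it has been removed. The function name check_nat_timestamps is hypothetical.

```bash
#!/usr/bin/env bash
# check_nat_timestamps (hypothetical helper): warn when tcp_tw_recycle and
# tcp_timestamps are both enabled. Usage: check_nat_timestamps [PROC_SYS_DIR]
# Returns 1 when the risky combination is found, 0 otherwise.
check_nat_timestamps() {
  local root="${1:-/proc/sys}"
  local recycle_file="$root/net/ipv4/tcp_tw_recycle"
  local ts
  ts=$(cat "$root/net/ipv4/tcp_timestamps")
  if [ ! -e "$recycle_file" ]; then
    # The parameter no longer exists (kernel 4.12 or later).
    echo "tcp_tw_recycle not present: OK"
    return 0
  fi
  # Any non-zero tcp_timestamps value enables timestamps.
  if [ "$(cat "$recycle_file")" = "1" ] && [ "$ts" != "0" ]; then
    echo "WARNING: tcp_tw_recycle=1 and tcp_timestamps=$ts; clients behind NAT may be dropped"
    return 1
  fi
  echo "OK"
}
```

Run check_nat_timestamps without arguments on the server to check the live values.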

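Solution

Choose the appropriate solution based on the cloud service on the server side:

If the remote server is an ECS instance, set net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps to 0 on the server.

If the remote server is an RDS instance, you cannot modify its kernel parameters directly. Set net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps to 0 on the client instead.

Connect to the instance by using VNC.

For more information, see Log on to a Linux instance by using password authentication.

Set net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps to 0.

Run the following command to open the /etc/sysctl.conf file:

vi /etc/sysctl.conf

Press i to enter edit mode and set net.ipv4.tcp_tw_recycle and net.ipv4.tcp_timestamps to 0 (on kernel 4.12 or later, net.ipv4.tcp_tw_recycle no longer exists, so omit that line):

net.ipv4.tcp_tw_recycle = 0
net.ipv4.tcp_timestamps = 0

Press Esc, enter :wq to save the file and exit.

Run the following command to apply the configuration:

sysctl -p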

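Common Linux kernel parameters

| Parameter | Description |
| --- | --- |
| net.core.rmem_default | Default size of the socket receive buffer (bytes). |
| net.core.rmem_max | Maximum size of the socket receive buffer (bytes). |
| net.core.wmem_default | Default size of the socket send buffer (bytes). |
| net.core.wmem_max | Maximum size of the socket send buffer (bytes). |
| net.core.netdev_max_backlog | Maximum number of packets queued on the input side of each network interface when the interface receives packets faster than the kernel can process them. |
| net.core.somaxconn | Maximum length of the listen queue for each port in the system. This is a global parameter. |
| net.core.optmem_max | Maximum ancillary buffer size allowed for each socket. |
| net.ipv4.tcp_mem | Determines how the TCP stack reacts to memory usage. It takes three values (low, pressure, high), each in memory pages (usually 4 KB). |
| net.ipv4.tcp_rmem | Memory used by sockets for receive buffer auto-tuning. It takes three values (min, default, max) in bytes. |
| net.ipv4.tcp_wmem | Memory used by sockets for send buffer auto-tuning. It takes three values (min, default, max) in bytes. |
| net.ipv4.tcp_keepalive_time | How long (seconds) a connection must be idle before TCP starts sending keepalive probes to check whether it is still valid. |
| net.ipv4.tcp_keepalive_intvl | Interval (seconds) at which a probe is resent when it receives no response. |
| net.ipv4.tcp_keepalive_probes | Maximum number of keepalive probes sent before the TCP connection is considered dead. |
| net.ipv4.tcp_sack | Enables selective acknowledgment (1 = enabled). By selectively acknowledging out-of-order segments, it lets the sender retransmit only the missing segments, which improves performance. It should be enabled for WAN communication, but it increases CPU usage. |
| net.ipv4.tcp_timestamps | Enables TCP timestamps (adds 12 bytes to the TCP header), which let RTT be calculated more precisely than with retransmission timeouts (see RFC 1323). Enable this option for better performance. |
| net.ipv4.tcp_window_scaling | Enables window scaling as defined in RFC 1323 (1 = enabled). It must be enabled to support TCP windows larger than 64 KB, allowing windows of up to 1 GB, and takes effect only when both ends of the connection enable it. |
| net.ipv4.tcp_syncookies | Whether to enable TCP SYN cookies (1 = enabled), which help the system handle SYN floods when the SYN queue overflows. |
| net.ipv4.tcp_tw_reuse | Whether sockets in TIME-WAIT state (TIME-WAIT ports) may be reused for new outgoing TCP connections. |
| net.ipv4.tcp_tw_recycle | Enables faster recycling of TIME-WAIT sockets. Removed in Linux kernel 4.12. |
| net.ipv4.tcp_fin_timeout | How long (seconds) a socket closed by the local end stays in FIN-WAIT-2 state. The peer may fail to close the connection, keep it open indefinitely, or its process may die unexpectedly. |
| net.ipv4.ip_local_port_range | Range of local port numbers that TCP and UDP may use. |
| net.ipv4.tcp_max_syn_backlog | Maximum number of connection requests in SYN_RECV state (SYN received, final ACK not yet received) that the system keeps. |
| net.ipv4.tcp_westwood | Enables the sender-side Westwood congestion control algorithm, which maintains a throughput estimate and tries to optimize overall bandwidth utilization. Enable it for WAN communication. |
| net.ipv4.tcp_bic | Enables Binary Increase Congestion control for fast long-distance networks, making better use of links operating at gigabit speeds. Enable it for WAN communication. |
| net.ipv4.tcp_max_tw_buckets | Maximum number of sockets in TIME_WAIT state. Sockets beyond this limit are destroyed immediately. The default value depends on instance memory, with a maximum of 262144. |
| net.ipv4.tcp_synack_retries | Number of times a SYN+ACK is retransmitted for a connection in SYN_RECV state. |
| net.ipv4.tcp_abort_on_overflow | When set to 1, if the system receives a burst of requests that the application cannot handle in time, it sends RST packets to terminate those connections. We recommend improving application efficiency rather than simply resetting connections. Default: 0. |
| net.ipv4.route.max_size | Maximum number of routes allowed by the kernel. |
| net.ipv4.ip_forward | Whether to forward packets between interfaces. |
| net.ipv4.ip_default_ttl | Maximum number of hops a packet can traverse (default TTL). |
| net.netfilter.nf_conntrack_tcp_timeout_established | Timeout (seconds) after which an established connection with no activity is removed from the connection tracking table. |
| net.netfilter.nf_conntrack_max | Maximum number of entries in the connection tracking hash table. |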
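The three keepalive parameters in the table combine: an idle peer that has stopped responding is declared dead after tcp_keepalive_time seconds of idleness plus tcp_keepalive_probes unanswered probes sent tcp_keepalive_intvl seconds apart. Keepalive applies only to sockets that enable SO_KEEPALIVE. The following minimal sketch computes that detection time; keepalive_detect_secs and keepalive_detect_live are hypothetical helper names, and the common Linux defaults (7200, 75, 9) are used only as example inputs.

```bash
#!/usr/bin/env bash
# keepalive_detect_secs (hypothetical helper): approximate seconds until an
# idle, unresponsive peer is detected as dead.
# Usage: keepalive_detect_secs TIME INTVL PROBES
keepalive_detect_secs() {
  echo $(( $1 + $2 * $3 ))
}

# keepalive_detect_live (hypothetical helper): same calculation with the
# values currently in effect on this system.
keepalive_detect_live() {
  keepalive_detect_secs \
    "$(sysctl -n net.ipv4.tcp_keepalive_time)" \
    "$(sysctl -n net.ipv4.tcp_keepalive_intvl)" \
    "$(sysctl -n net.ipv4.tcp_keepalive_probes)"
}
```

With the defaults, keepalive_detect_secs 7200 75 9 prints 7875, a little over two hours and eleven minutes.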