This topic describes how query optimization works and how the join reorder works.

Query optimization overview

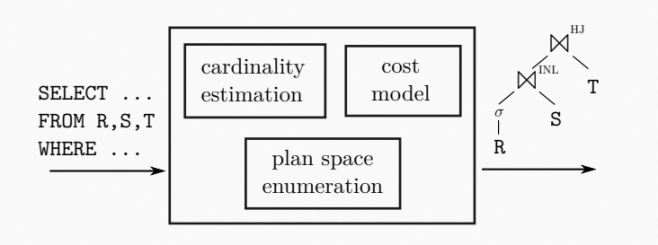

When query statements are processed, the optimizer receives query statements that you enter, performs a series of equivalent transformations, and selects the optimal execution plan from equivalent execution plans by using cardinality estimation and cost models. Because the database performance is highly dependent on of the selected execution plan, all database systems have query optimizers. The following figure shows a typical query optimizer.

A typical query optimizer includes the following components:

Plan space enumeration: generates multiple equivalent execution plans based on a series of equivalent transformation rules.

Cardinality estimation: estimates the data volume and data distribution during query execution based on the distribution of tables.

Cost model: calculates the costs for executing each execution plan based on execution plans and their states within the database.

The join order issue is widely studied in query plans in query optimizers. Selecting an incorrect join order may greatly deteriorate query performance. The join order issue also depends on the preceding components.

New requirements for the optimizer in HTAP

MySQL is a widely used OLTP database. It excels in data storage, access, and some simple or fixed-pattern queries. To achieve this, MySQL optimizers do a lot of optimization related to its execution model. For example, subqueries are executed and eliminated in the query optimization phase, and ORDER BY clauses are eliminated based on indexes. Such optimization has good results in MySQL queries. This also means that MySQL optimizers are highly related to execution models and storage. In HTAP, most solutions use row storage and IMCIs (replica) and accelerated complex queries at the execution layer. Under such conditions, most assumptions in MySQL optimizers are overturned. Because MySQL optimizers are highly related to execution models and storage, it is difficult to adapt to the query optimization capabilities in HTAP with simple modifications. If databases want to handle different storage modes, different execution models, and different data models, the optimizer must provide the following capabilities:

Less correlation with the storage layer or execution layer to facilitate the evolution of future features.

Able to process complex queries such as multi-dimensional filtering, joins, and aggregations.

Efficient query optimization to implement real-time analytics in HTAP.

IMCI query optimization

Row-based plan optimization and its limits

A MySQL optimizer has the following optimization process:

Apply rule-based optimization to prefect plans (no cost calculation is involved).

Convert some outer joins to inner joins.

Implement equivalent transformations. For example,

c1 = 5 and c1 = c2can be converted toc1 = 5 and c2 = 5.Perform partition pruning. If only some partitions are queried, other partitions are not accessed. This can significantly reduce the amount of scanned data.

Eliminate subqueries. Some subqueries that return only one row are executed in advance and replaced with the results to simplify the plan.

Use cost-based join reorders.

Accomplish subsequent optimization measures. The method to access tables is determined and ORDER BY and DISTINCT clauses are eliminated based on the indexes used.

This optimization process is very clear and is excellent in MySQL execution mode. However, when replica nodes are added, this optimization process also has the following problems:

Due to the limits of the MySQL execution mode, join reorders can only generate left deep execution plans. In complex HTAP queries, the optimal execution plan may be missed. SQL rewriting using subqueries and join order hints brings huge changes to the business.

When MySQL enumerates execution plans, it calculates the number of input rows and selectivity for each join. MySQL mainly performs index joins in join operations. MySQL uses existing secondary indexes on tables to estimate the selectivity. If no secondary indexes are created, the cost estimation may have significant error. This coincides with the original MySQL scenario: Join operations use indexes and indexes can be used to solve slow query issue. However, this method is not appropriate when you perform multi-dimensional filter, join, and aggregation operations on IMCIs because a large number of secondary indexes are required. When you create indexes, read loads are evenly allocated to write operations. A large number of secondary indexes occupy high storage space and deteriorate the write efficiency. Although histograms are introduced in MySQL 8.0, they only support filtering conditions on a single table and estimating the selectivity. Histograms do not help in complex query statements.

Separating the join reorder issue improves the search efficiency, but may cause the locally optimal plan issue.

IMCI plan optimization process

The IMCI feature introduces a new optimization process to overcome the shortcomings of MySQL optimizers in HTAP. The new optimization process dresses missing indexes, new execution models, and complex queries in HTAP. In IMCI queries, the optimizer uses rule-based rewriting and cost-based optimization.

Rule-based rewriting of plans

This step is similar to the MySQL optimizer. New rules are added to the existing rules of the MySQL optimizer. These new rules are mainly used to further optimize the efficiency of the IMCI executor and to adapt execution plans to the requirements of the execution model. Examples:

Subquery unnesting: In the absence of indexes, nested subqueries are executed similarly to nested loop joins. This causes poor execution efficiency. The IMCI feature converts nested subqueries into joins by using subquery unnesting and uses hash joins to execute queries efficiently.

Aggregate functions that contain DISTINCT in subqueries are eliminated. Example:

SELECT COUNT(DISTINCT c1) FROM t; -- is converted to SELECT COUNT(c1) FROM (SELECT c1 FROM t GROUP BY c1);Such conversion can simplify the design of the IMCI executor and supports more functions.

Predicate resorting: For example, multiple predicates are found in common table scanning:

t1.c2 LIKE '%cat%' AND t1.c1 = 5. Thet1.c1 = 5predicate brings lower execution costs and has the lower selectivity. Resorting these two predicates and first executing thet1.c1 = 5predicate can significantly reduceLIKEoperations and improve query efficiency.In fact, this step also includes some cost-based query optimization, which also optimizes queries in most cases. Applying the rules for operations such as predicate pushdown standardizes inputs of the cost-based query optimizer and executor, reduces the storage consumed in cost-based query optimization, and improves the efficiency of query optimization.

Cost-based optimization

After rule-based query rewriting, plans enter the cost-based query optimizer. The IMCI feature uses the cascade optimizer framework, which can avoid the locally optimal plan issue in query optimization. In addition to this framework, the following functional modules of the traditional optimizer are also used: plan enumeration, cardinality estimation, and cost model. The work process of these modules is discussed based on the join order issue.

Plan enumeration

Plan enumeration is a component used by the optimizer to enumerate plans. After a series of steps such as parsing and binding, an initial query plan is generated and added to the optimizer. The optimizer performs various equivalent transformations on the query plan by using rules to generate a new equivalent query plan. In IMCI, the rules for plan rewriting are divided into the following types:

Transform rules: They are similar to rewriting rules. However, these rules are optional and are used to optimize query performance. They are not widely used. Therefore, such rules are added to the cost-based optimizer in IMCI to enumerate equivalent query plans. Subsequently, the optimal query plan is selected by using the cardinality estimation and cost model.

Implement rules: They are used to select the algorithm between logical plans and physical plans. For example, hash join, index join, and sort merge join can be selected for join operations. Different algorithms are suitable for different scenarios. Therefore, such rules are also added to the cost-based optimizer in IMCI.

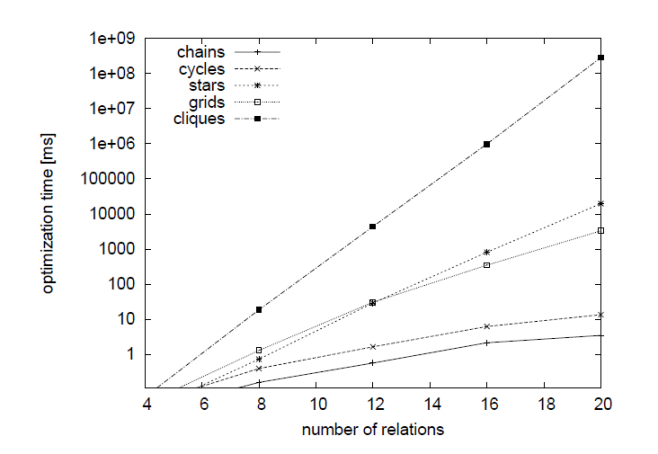

In this module, the execution plan is converted a large number of equivalent plans based on these rewriting rules. The optimal plan is selected by comparing their costs. The cascade optimizer in IMCI saves shared intermediate results by using data structures such as Memo, Group, and GroupExpr. This eliminates a lot of redundant intermediate results. However, a query statement that contains a large number of joins may still generate enormous equivalent plans. For a join of n tables, at least 2n possible join orders exist. The following figure shows the relationship between the number of join plans and the enumeration time without considering the Cartesian product:

The preceding figure shows that a join reorder that contains 12 tables takes more than 10 seconds in the worst case. Even a common star query can take more than 10 seconds to optimize plans for 20 tables. This optimization time is unacceptable for some queries involving a lot of tables. Selecting the optimal join order must solve three issues:

The optimal plan must be included in the enumerated join plans. To achieve this goal, the enumeration must have high efficiency so that the optimal join plan can be included in the search space.

If too many tables are joined, a stable heuristic algorithm is available to search for an adequate query plan within an acceptable time period.

The optimal plan can be correctly selected from all enumerated join plans.

Cardinality estimation and the cost model

The optimal plan is selected by estimating the costs of each plan. In the current optimizer, this process involves the following components:

The cardinality estimation component estimates the numbers of input and output rows for each operator and the approximate distribution of the output data for each operator. This component relies on two modules: the statistic collection and calculation and the logic for calculating the numbers of input and output rows.

The cost model component calculates the costs, adds the execution costs of each operator to obtain the total costs, and selects the execution plan with the lowest total costs.

Statistic collection and calculation in IMCI

To optimize queries in the absence of sufficient secondary indexes, the IMCI feature uses a statistic module in its optimizer. The IMCI feature estimates the numbers of output and output rows for each operator by collecting the following information from the table:

The cardinality of each column (the number of unique values in the column, which is equivalent to

COUNT(DISTINCT col)).The percentage of NULL values in each column.

The maximum and minimum values of each column.

The histogram created for each column based on the value size.

The unique key and foreign key attributes in the table.

Statistics constructed for the IMCI feature

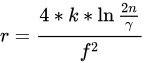

To collect these statistics, the system calculates the number of rows to be sampled based on the data volume of the table. The number of rows to be sampled is determined by using the following formula:

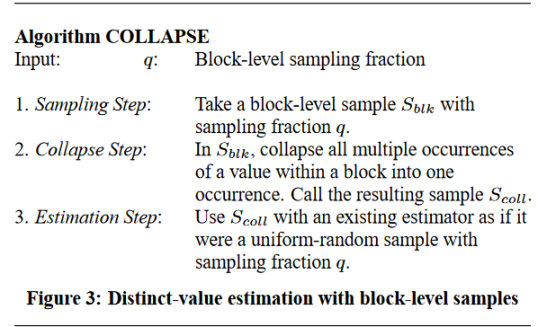

In the formula, n is the size of the table, k is the number of buckets in the histogram, f is the confidence interval of the relative error (or confidence). After the optimizer calculates the number of sampled rows based on the appropriate constants, the optimizer uses the input rows to construct a histogram to calculate the proportion of NULL values. The optimizer then calculates the cardinality of the column based on the sampled data. In IMCI, the optimizer first calculates the number of sampled rows by using the preceding formula, and then selects different cardinality estimation formulas based on sampling rates to minimize the error of cardinality estimation. Such formulas typically require uniform random sampling. However, in IMCI, data is usually not stored in pages. In IMCI, 64K rows are usually compressed and stored all together. If a row of data is read, the entire data block containing the row is read from the disk and decompressed. This causes serious read amplification. To solve this problem, the IMCI feature samples data blocks to reduce read amplification, and uses the COLLAPSE algorithm to modify the calculation formula for distinct values. If multiple identical values appear in the same block, only one value is calculated because the preceding formula is highly dependent on

How operators use statistics to estimate the number of rows in IMCI

The operators that actually need to be estimated are filter, join, and group by. For other operators such as sorting, the output is usually only related to the number of input rows. Histograms can effectively estimate the selectivity of predicates such as a > 10 AND a < 100. Joins of histograms can calculate the selectivity of equal joins and range joins. The column cardinality can be used to estimate the number of groups in the group by output, as well as to assist in estimating the selectivity of equality predicates and equal joins in cases where some histograms are not available. For example, in Selectivity(a = 1) = 1/COUNT(DISTINCT a), unique keys and foreign keys can be used to correct the results for equal joins. In the following SQL statement:

SELECT COUNT(*)

FROM t1, t2

WHERE t1.a = t2.a;t1.a is the unique key of t1 and t1.a is the foreign key of t2.a. For each row in t2, t1 must contain a row that matches it. Then the number of output rows of this join and the size of t2 can be accurately obtained.

Correlation of multiple predicates

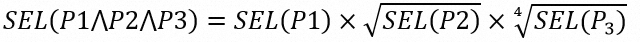

The IMCI feature uses the assumption that data columns are correlated. The exponential backoff algorithm is used to calculate the selectivity between multiple predicates. This algorithm first sorts the selectivity value of each predicate, and then calculates the selectivity by using the following formula:

The algorithm can effectively reduce the estimation error in most data sets based on real data.

Test optimization results

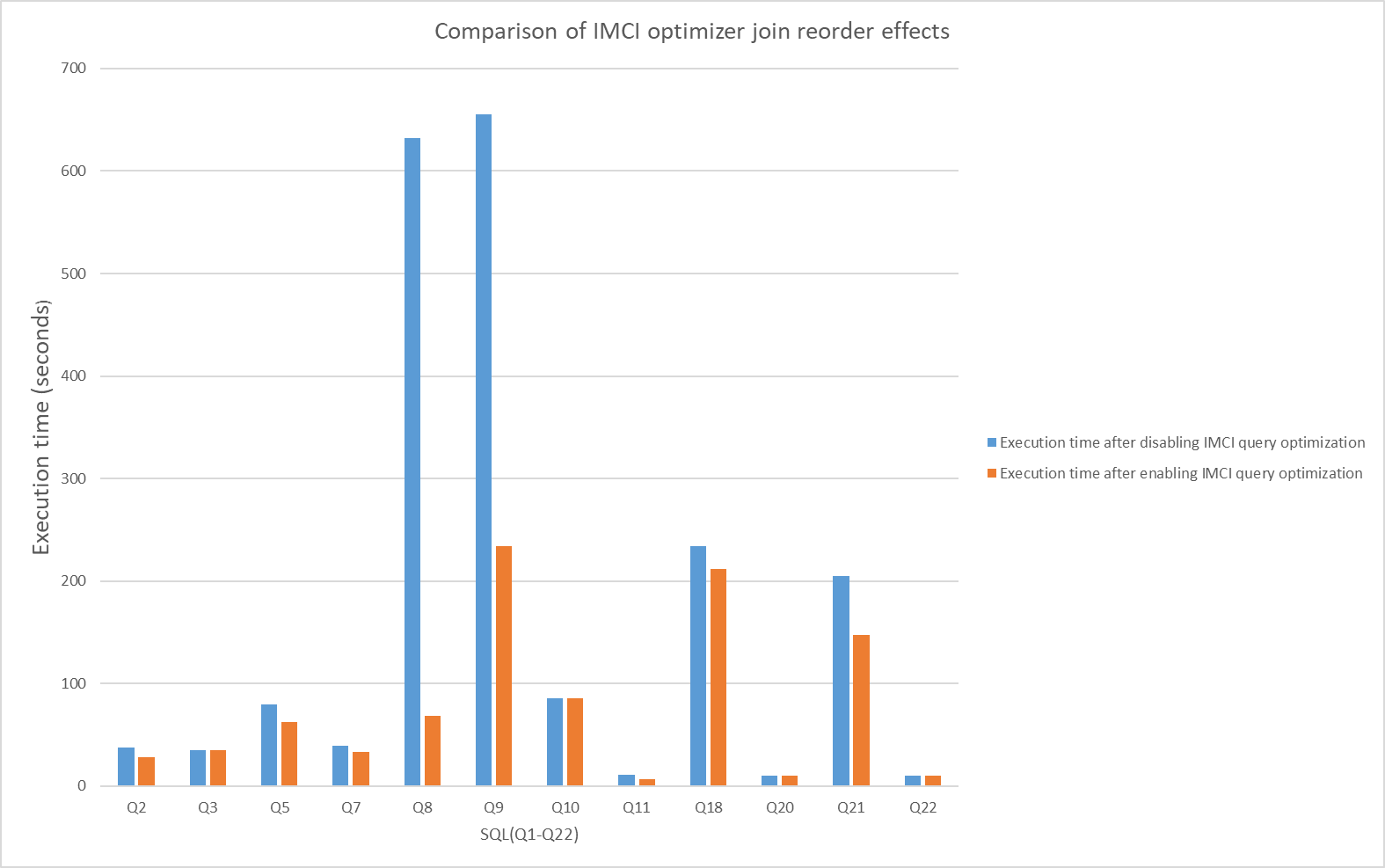

Test the performance on the TPCH 1 TB dataset when IMCI query optimization is enabled and disabled. The following figure shows the optimization results.

In the preceding figure, for the multi-table joins of Q8 and Q9, the execution efficiency is significantly improved after IMCI query optimization is enabled because better query plans are selected.

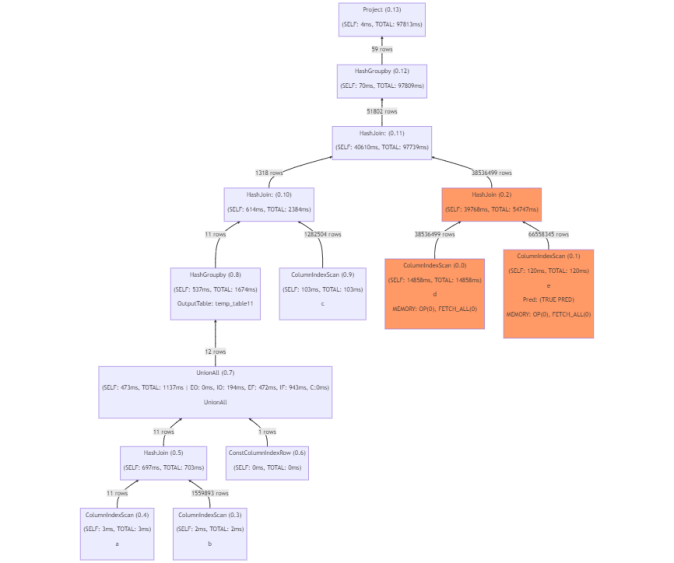

When IMCI query optimization is not enabled, joins are executed in the

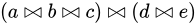

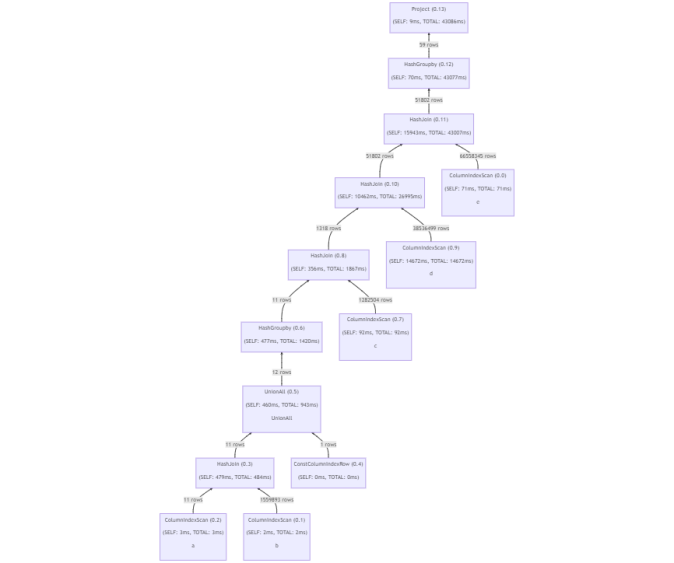

The following figure illustrates the query process when IMCI query optimization is enabled.

After IMCI query optimization is enabled, the join order is