This topic describes how to use Platform for AI (PAI) ArtLab Stable Diffusion web UI to generate images.

Background Information

Stable Diffusion is a text-to-image AI model developed by Stability AI that can generate and modify images based on text prompts. The Stable Diffusion web UI, developed by AUTOMATIC1111, provides a graphical browser interface for working with Stable Diffusion models. Even if you have no programming knowledge, you can use Stable Diffusion through the web UI.

The web UI provides an intuitive operating experience and supports customization by using different plugins and models. You can use the web UI to create more controllable visual works.

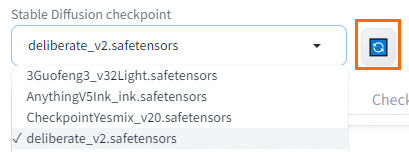

Model selection

Upload your models to a directory in Object Storage Service (OSS) and refresh the web UI. Then, you can select the model that you want to use in this section. We recommend that you download models from Civitai.

If you cannot access the preceding link, configure a proxy and then try again.

txt2img

Prompt syntax

General tips

Prompts generally include the following types of keywords: prefixes (image quality, painting style, lens effects, and lighting effects), subjects (person or object, posture, clothing, and factor), and scenes (environment and details).

If you want to increase or decrease the weight of a keyword, enclose the keyword in parentheses and append a colon (:) and a weight value. Example: (beautiful:1.3). We recommend that you use a weight value that ranges from 0.4 to 1.6. Keywords with lower weights are more likely to be ignored, and keywords with higher weights are prone to deformation due to overfitting. You can also nest multiple pairs of brackets ((), {}, or []) to compound the weight of a keyword. Each additional pair of (), {}, or [] multiplies the weight by 1.1, 1.05, or 0.952, respectively. Example: (((cute))).

Weight calculation rules:

(PromptA:weight value): You can use this format to increase or decrease the weight of a keyword. If the weight value is greater than 1, the weight is increased. If the weight value is less than 1, the weight is decreased.

(PromptB) indicates that the weight of PromptB is increased to 1.1 times, which is equivalent to (PromptB:1.1).

{PromptC} indicates that the weight of PromptC is increased to 1.05 times, which is equivalent to (PromptC:1.05).

[PromptD] indicates that the weight of PromptD is decreased to 0.952 times, which is equivalent to (PromptD:0.952).

((PromptE)) is equivalent to (PromptE:1.1*1.1).

{{PromptF}} is equivalent to (PromptF:1.05*1.05).

[[PromptG]] is equivalent to (PromptG:0.952*0.952).
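The weight calculation rules above can be sketched in a few lines of Python. This is a minimal illustration that uses only the multipliers listed in this topic (1.1, 1.05, and 0.952); the function name `effective_weight` is hypothetical, and the sketch is not the parser that the web UI itself uses.

```python
import re

# Multiplier applied by each pair of brackets, as listed in the rules above.
BRACKET_FACTORS = {"(": 1.1, "{": 1.05, "[": 0.952}
CLOSERS = {"(": ")", "{": "}", "[": "]"}


def effective_weight(token: str) -> tuple[str, float]:
    """Return (keyword, weight) for one bracketed keyword (hypothetical helper)."""
    weight = 1.0
    token = token.strip()
    # Peel matching outer bracket pairs one layer at a time.
    while token and token[0] in CLOSERS and token[-1] == CLOSERS[token[0]]:
        opener = token[0]
        inner = token[1:-1].strip()
        # "(keyword:1.3)" carries an explicit weight instead of the default 1.1.
        explicit = re.fullmatch(r"(.+):\s*([0-9]*\.?[0-9]+)", inner)
        if opener == "(" and explicit:
            return explicit.group(1).strip(), weight * float(explicit.group(2))
        weight *= BRACKET_FACTORS[opener]
        token = inner
    return token, weight


for example in ["(beautiful:1.3)", "((PromptE))", "{{PromptF}}", "[[PromptG]]"]:
    keyword, weight = effective_weight(example)
    print(f"{example} -> {keyword} at weight {weight:.4f}")
```

Nesting compounds quickly: three pairs of parentheses already give 1.1 × 1.1 × 1.1 ≈ 1.33, so deeply nested keywords can leave the recommended range of 0.4 to 1.6.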

You can use angle brackets (<>) to invoke Low-Rank Adaptation (LoRA) and Hypernetwork models. Formats: <lora:filename:multiplier> and <hypernet:filename:multiplier>.

Prompt hybrid scheduling:

In [promptA:promptB:factor], factor indicates the transition percentage from promptA to promptB, ranging from 0 to 1. For example, the prompts a girl holding an [apple:peach:0.9] and a girl holding an [apple:peach:0.2] use the same seed value but different factor values. When you generate images based on these prompts, the system generates highly similar images with a specific element fine-tuned. In this example, the object that the girl is holding changes from an apple to a peach.
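To make the effect of factor concrete, the following sketch lists which keyword is active at each sampling step. It assumes that the switch from promptA to promptB happens after factor × total steps, which is how this syntax is commonly described for the AUTOMATIC1111 web UI; the function name `scheduled_keywords` and the step count of 20 are illustrative only.

```python
def scheduled_keywords(prompt_a: str, prompt_b: str, factor: float, total_steps: int) -> list[str]:
    """Return the keyword that is active at each step, 1-indexed (illustrative sketch)."""
    if not 0.0 <= factor <= 1.0:
        raise ValueError("factor must range from 0 to 1")
    # Assumption: promptA is used up to and including this step, promptB afterwards.
    switch_after = round(factor * total_steps)
    return [prompt_a if step <= switch_after else prompt_b
            for step in range(1, total_steps + 1)]


late_switch = scheduled_keywords("apple", "peach", 0.9, 20)
early_switch = scheduled_keywords("apple", "peach", 0.2, 20)
print(late_switch.count("apple"), late_switch.count("peach"))
print(early_switch.count("apple"), early_switch.count("peach"))
```

Under this assumption, a factor of 0.9 keeps apple for 18 of 20 steps, so the result is mostly an apple, whereas a factor of 0.2 hands 16 of 20 steps to peach.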

Common prompts

Positive prompt | Description | Negative prompt | Description |

HDR, UHD, 8K, 4K | Improves the quality of the image. | mutated hands and fingers | Prevents mutated hands or fingers. |

best quality | Vivifies the image. | deformed | Prevents deformation. |

masterpiece | Makes the image look like a masterpiece. | bad anatomy | Prevents bad anatomy. |

Highly detailed | Adds details to the image. | disfigured | Prevents disfigurement. |

Studio lighting | Applies studio lighting to make the image textured. | poorly drawn face | Prevents poorly drawn faces. |

ultra-fine painting | Applies ultra-fine painting. | mutated | Prevents mutation. |

sharp focus | Brings the image into sharp focus. | extra limb | Prevents extra limbs. |

physically-based rendering | Adopts physical rendering. | ugly | Prevents ugly elements. |

extreme detail description | Focuses on the details. | poorly drawn hands | Prevents poorly drawn hands. |

Vivid Colors | Makes the image vivid in colors. | missing limb | Avoids missing limbs. |

(EOS R8, 50mm, F1.2, 8K, RAW photo:1.2) | Incorporates professional photographic styles. | floating limbs | Prevents floating limbs. |

Bokeh | Blurs the background and highlights the subject. | disconnected limbs | Prevents disconnected limbs. |

Sketch | Uses sketch as the drawing method. | malformed hands | Prevents deformed hands. |

Painting | Uses painting as the drawing method. | variant | Prevents out-of-focus. |

- | - | long neck | Prevents long necks. |

- | - | long body | Prevents long bodies. |

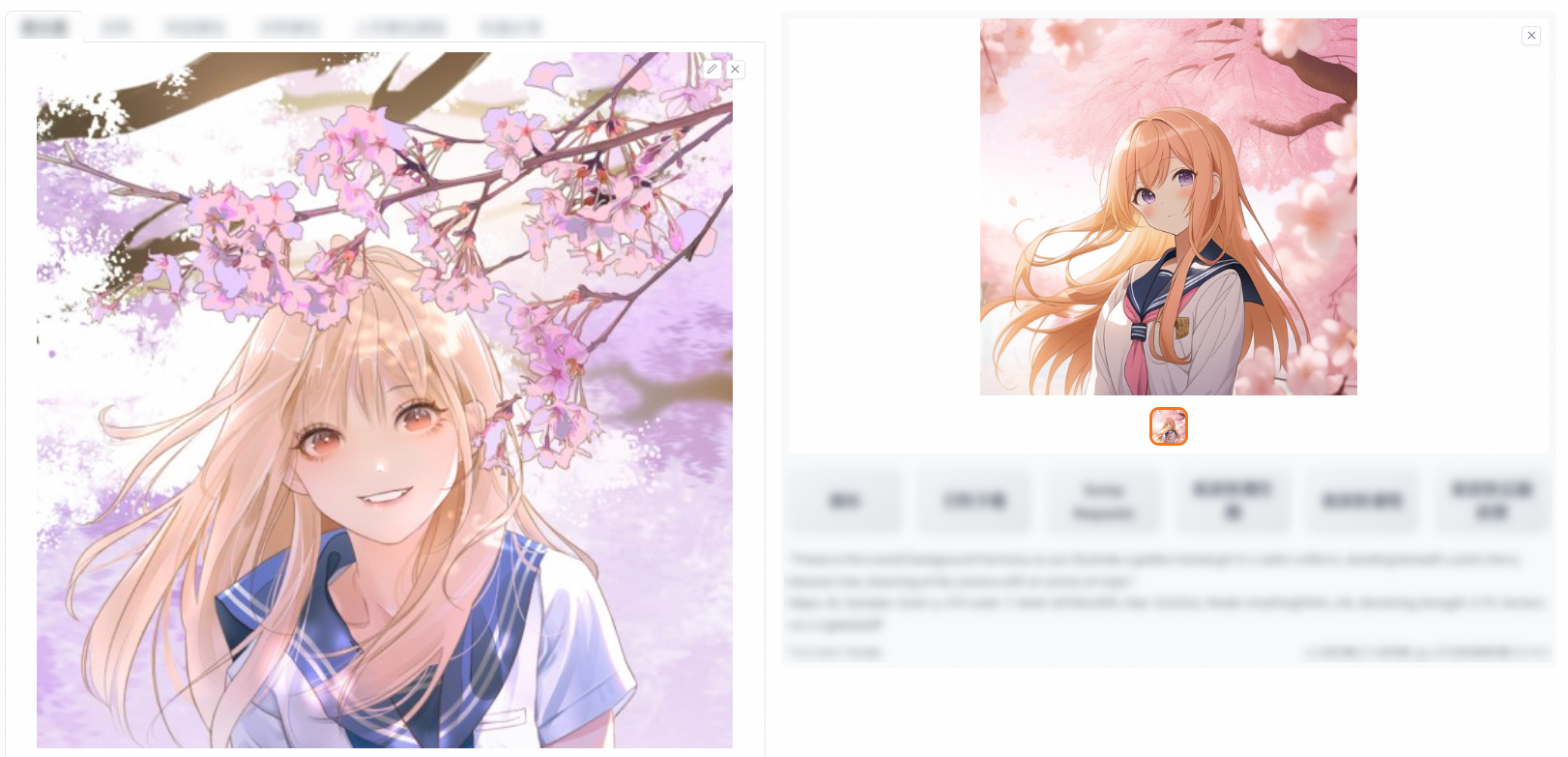

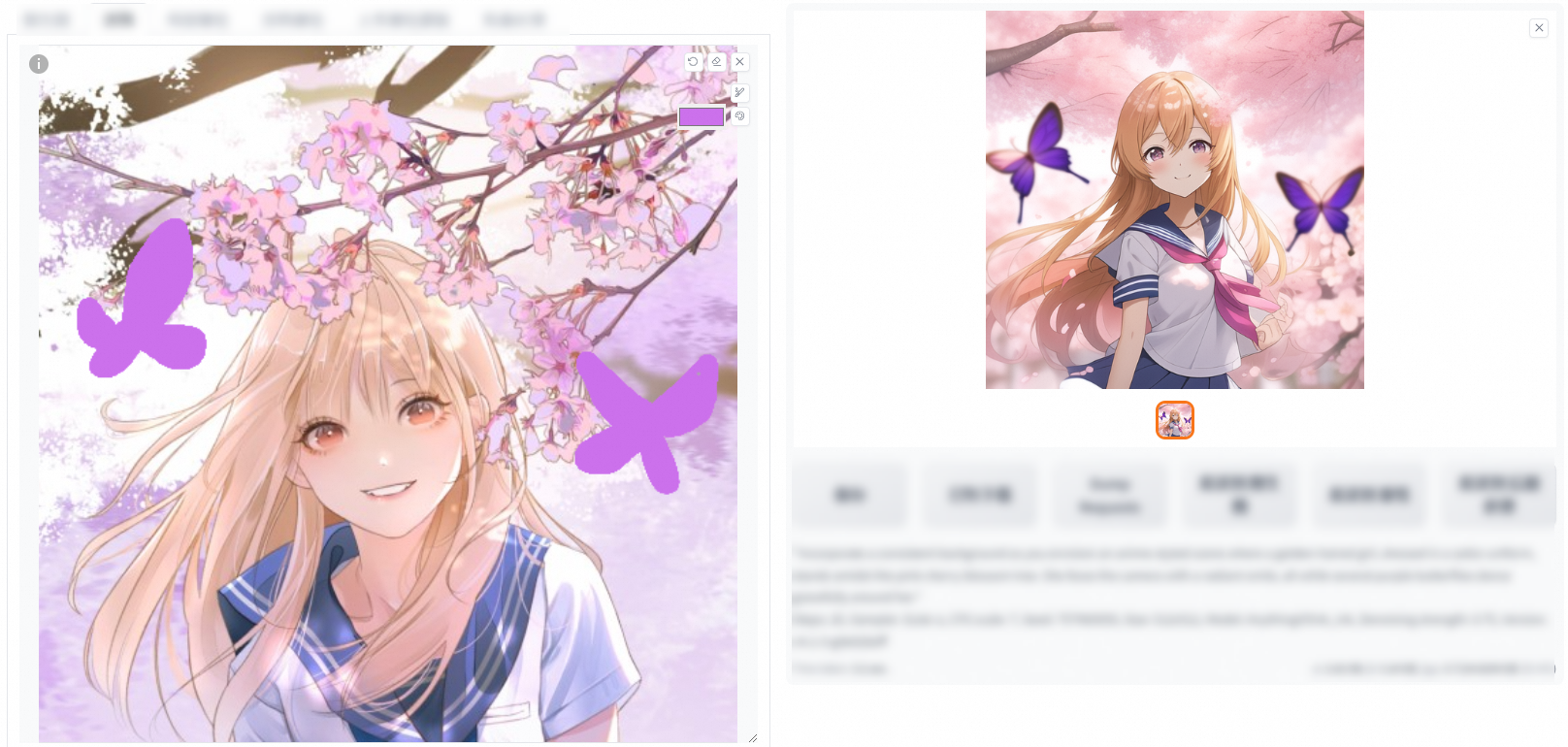

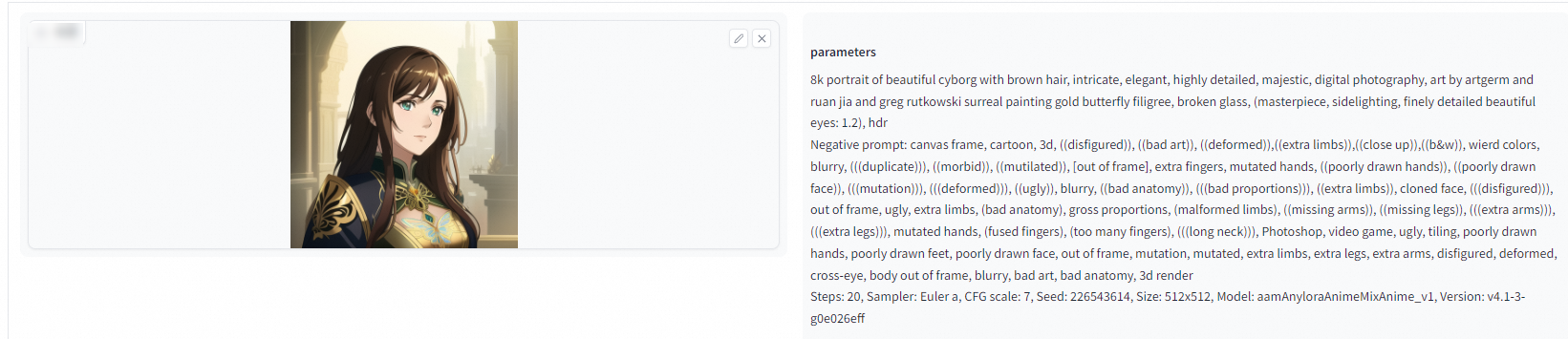

txt2img showcase

Simple prompts: Enter positive and negative prompts in the related sections. The positive prompt defines the elements that you want to appear in the generated image, whereas the negative prompt defines the elements that you want to prevent from appearing in the generated image. The more keywords you provide, the more closely the generated image will match your expectations.

Complex prompts:

Positive prompt: 8k portrait of beautiful cyborg with brown hair, intricate, elegant, highly detailed, majestic, digital photography, art by artgerm and ruan jia and greg rutkowski surreal painting gold butterfly filigree, broken glass, (masterpiece, sidelighting, finely detailed beautiful eyes: 1.2), hdr

Negative prompt: canvas frame, cartoon, 3d, ((disfigured)), ((bad art)), ((deformed)),((extra limbs)),((close up)),((b&w)), wired colors, blurry, (((duplicate))), ((morbid)), ((mutilated)), [out of frame], extra fingers, mutated hands, ((poorly drawn hands)), ((poorly drawn face)), (((mutation))), (((deformed))), ((ugly)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), out of frame, ugly, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), Photoshop, video game, ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, body out of frame, blurry, bad art, bad anatomy, 3d render

Prompt source: Civitai

Note: If you cannot access the preceding link, configure a proxy and then try again.

The earlier a keyword appears in the prompt, the higher its weight. You can adjust the order of keywords based on your needs. For example, you can arrange keywords in the following order: subject, medium, style, artist, website, resolution, additional details, color, and lighting. In actual use, you do not need to specify every type of keyword. Select and arrange the keywords based on your needs. Unlike base models, trained models often have hidden settings that apply some fixed styles or discard some keywords in the prompts.
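The ordering advice above can be applied mechanically. The following sketch assembles a prompt from whichever keyword types you provide, in the order listed in this topic; the helper name `build_prompt` and the sample keywords are hypothetical.

```python
# Keyword types in the order suggested in this topic.
KEYWORD_ORDER = [
    "subject", "medium", "style", "artist", "website",
    "resolution", "additional details", "color", "lighting",
]


def build_prompt(keywords: dict[str, str]) -> str:
    """Join the provided keywords in the suggested order (hypothetical helper)."""
    unknown = set(keywords) - set(KEYWORD_ORDER)
    if unknown:
        raise ValueError(f"unknown keyword types: {sorted(unknown)}")
    # Keyword types that are missing or empty are skipped.
    return ", ".join(keywords[kind] for kind in KEYWORD_ORDER if keywords.get(kind))


print(build_prompt({
    "lighting": "studio lighting",
    "subject": "a cyborg with brown hair",
    "style": "surreal painting",
}))
# prints: a cyborg with brown hair, surreal painting, studio lighting
```

Because earlier keywords carry more weight, the subject stays first regardless of the order in which the dictionary is written.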

Parameters

①Sampling method

The sampling mode of the diffusion denoising algorithm. Different sampling methods deliver different effects. Select an appropriate sampling method based on your needs.

Euler a, DPM++ 2s a, and DPM++ 2s a Karras are similar in the overall composition of the image. Euler, DPM++ 2m and DPM++ 2m Karras are similar in the overall composition of the image. DDIM differs from other sampling methods in the overall composition of the image.

②Sampling steps

The number of denoising iterations that are used to generate the image. This parameter determines how far the image is refined. With each iteration, the system compares the prompts with the current image and makes fine adjustments accordingly.

Increasing the number of sampling steps consumes more time and computing resources but does not guarantee better results.

In actual use, more sampling steps generally add more detail to the generated image, but the effect also depends on the sampling method. For example, Euler a typically uses 30 to 40 sampling steps. Within that range, the image tends to stabilize, and additional steps add no further detail.

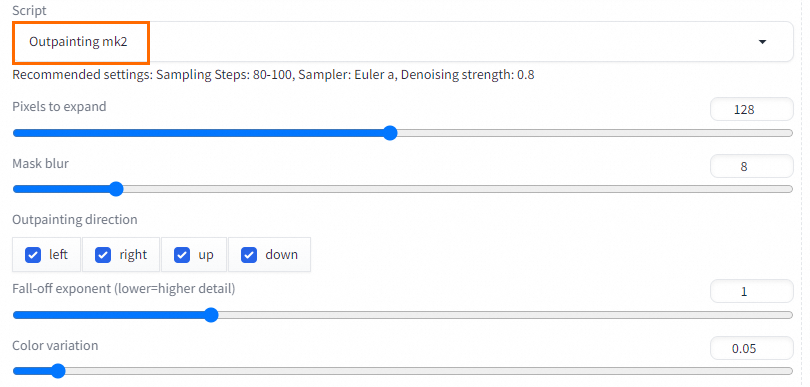

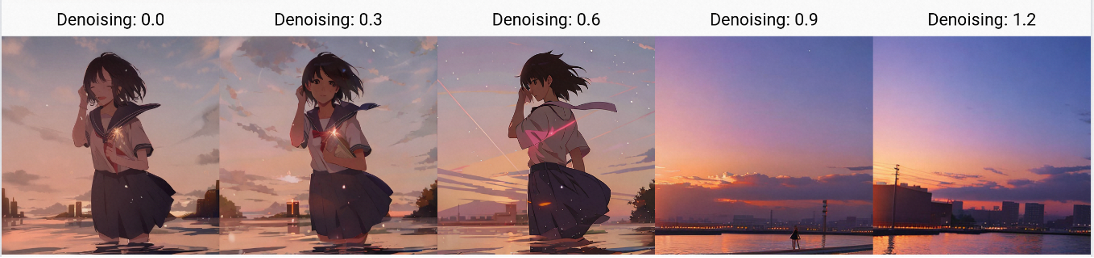

③Hires. fix

This parameter affects the resolution of the generated image. The system first creates an image at a low resolution, and then improves the details of the image without changing its composition. If you select this option, you must configure parameters such as Hires steps and Denoising strength.

For information about how to upscale images, see Three methods to upscale images by using PAI ArtLab.

④Width and Height

The size and resolution of the generated image. The higher the resolution, the more details the image has but more video memory is consumed. We recommend that you do not set an excessively high resolution. Use the default value: 512×512.

⑤Batch count

The total number of batches. The details of the images generated in different batches may vary. The larger the value, the longer it takes to calculate.

⑥Batch size

The number of images that are generated in each batch at a time. A larger value causes higher video memory consumption.

⑦CFG Scale

A larger value indicates that the AI generation is more compliant with the prompts that you provide. A smaller value indicates that the AI generation is more creative.

⑧Seed

A value of -1 indicates that each generation is random. If you set a value other than -1 (you can enter negative numbers and decimals), and do not change the seed value, model, GPU, and other parameters, the same image is generated every time.

⑨You can click this icon to read generation parameters from prompts or the previously generated image.

⑩You can click this icon to clear prompt content.
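For readers who script their generations, the parameters above can be collected into a single request body. The following sketch assumes the field names of the txt2img API that the AUTOMATIC1111 web UI exposes (`sampler_name`, `n_iter`, `enable_hr`, and so on). This topic does not state whether PAI ArtLab exposes that API, so treat the mapping as illustrative. The helper names and sample values are hypothetical, and no request is sent.

```python
import json


def build_txt2img_payload(prompt, negative_prompt="", *, sampler="Euler a", steps=20,
                          width=512, height=512, batch_count=1, batch_size=1,
                          cfg_scale=7.0, seed=-1, hires_fix=False):
    """Map the web UI parameters to a request body (field names are an assumption)."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "sampler_name": sampler,   # ① Sampling method
        "steps": steps,            # ② Sampling steps
        "enable_hr": hires_fix,    # ③ Hires. fix
        "width": width,            # ④ Width
        "height": height,          # ④ Height
        "n_iter": batch_count,     # ⑤ Batch count
        "batch_size": batch_size,  # ⑥ Batch size
        "cfg_scale": cfg_scale,    # ⑦ CFG Scale
        "seed": seed,              # ⑧ Seed (-1 means a random seed)
    }


def total_images(payload):
    """Batch count multiplied by batch size gives the number of images produced."""
    return payload["n_iter"] * payload["batch_size"]


payload = build_txt2img_payload("a girl holding an apple", "blurry",
                                batch_count=2, batch_size=4, seed=1234)
print(json.dumps(payload, indent=2))
print(total_images(payload))  # prints 8
```

Keeping the seed, model, and all other fields fixed is what makes a generation repeatable. Batch size affects video memory consumption, whereas batch count affects only the total run time.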

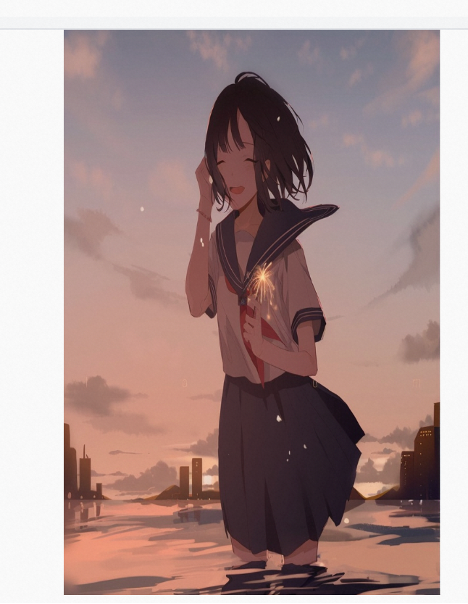

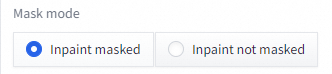

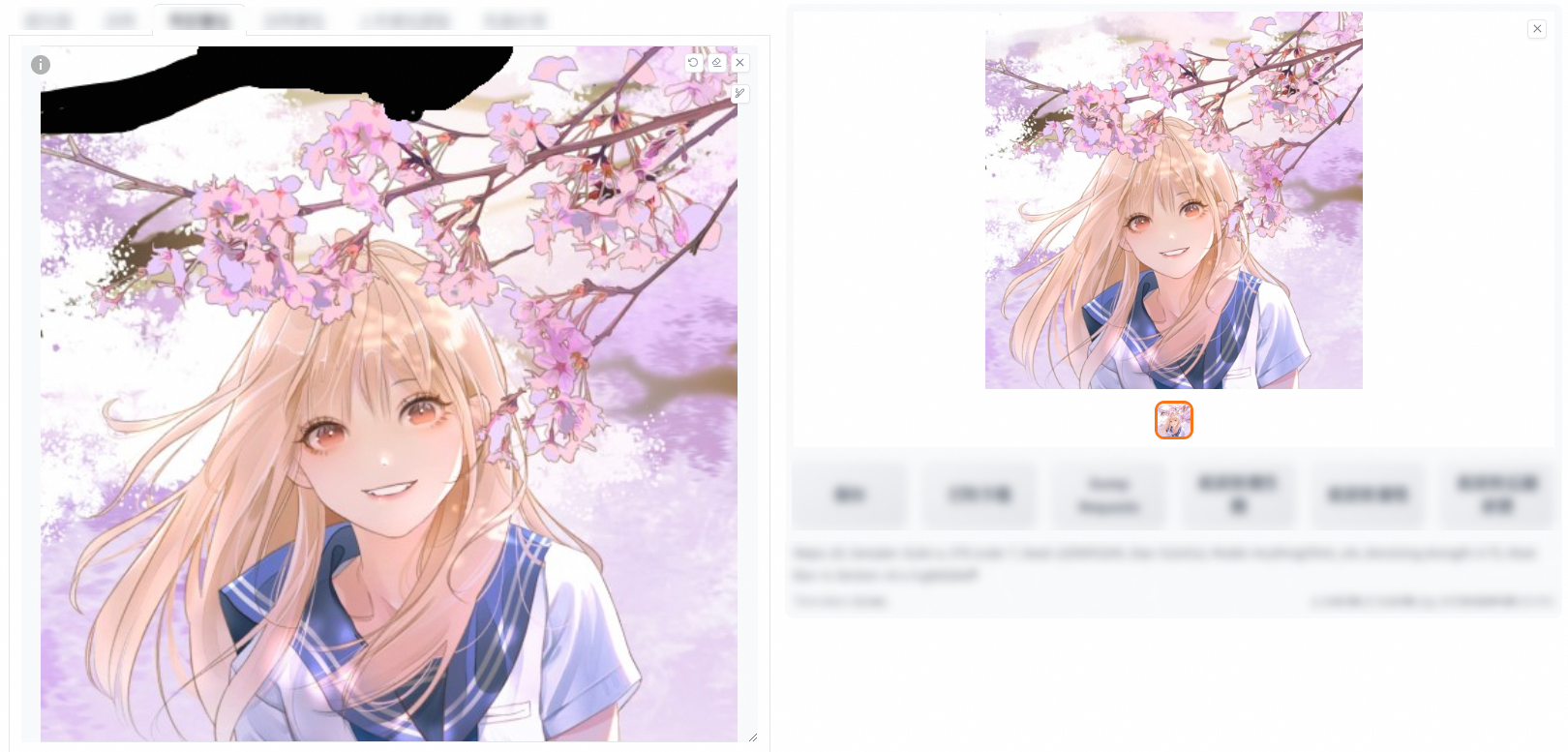

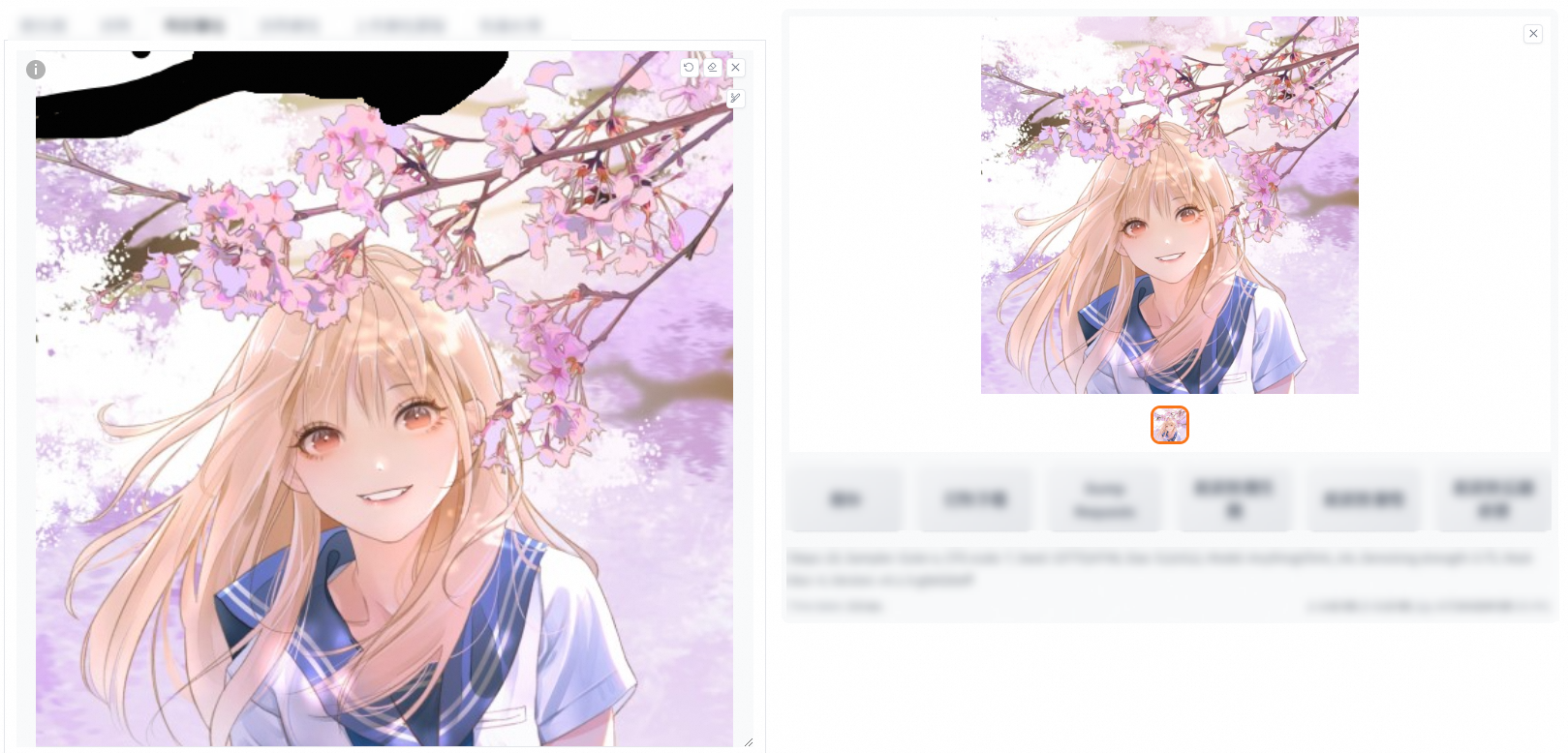

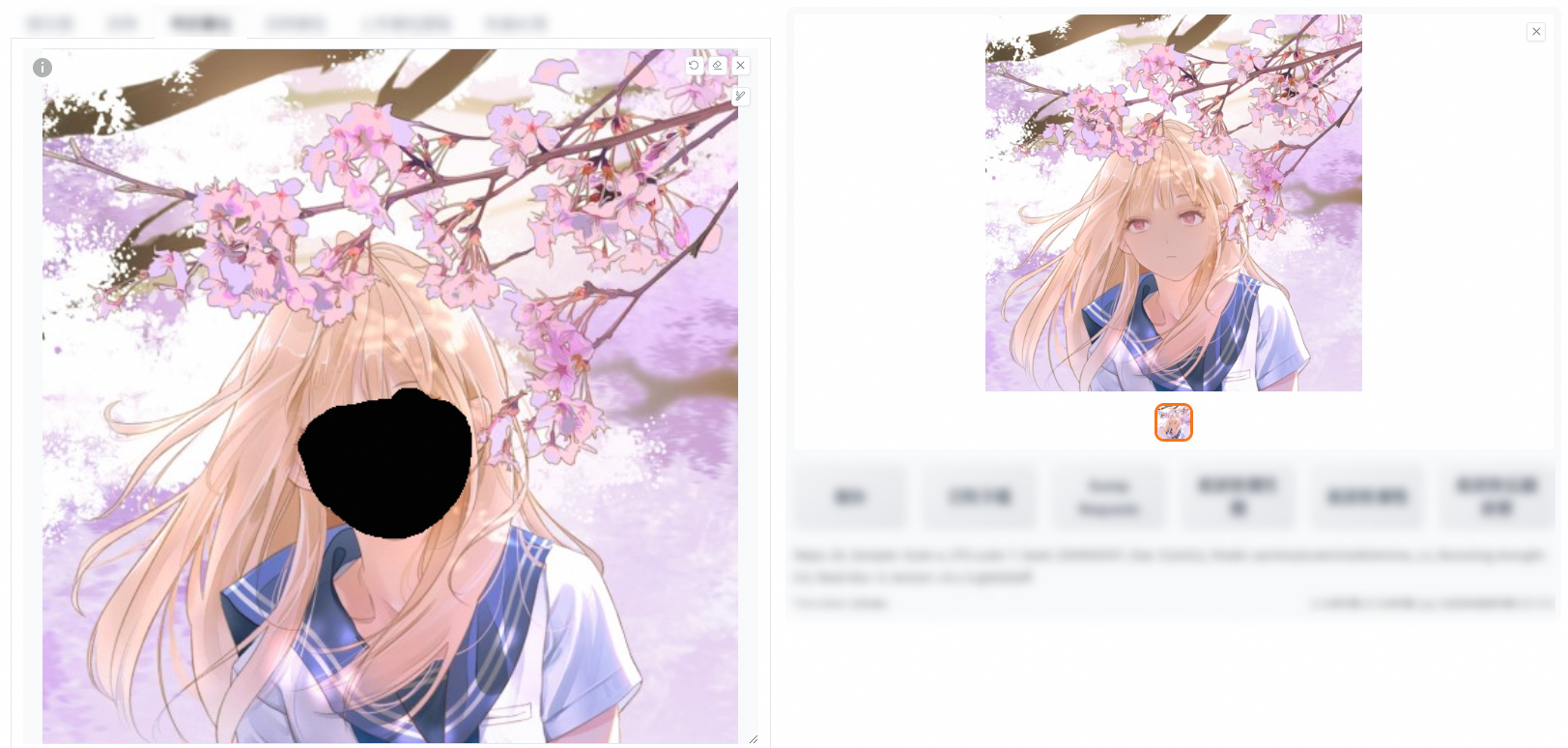

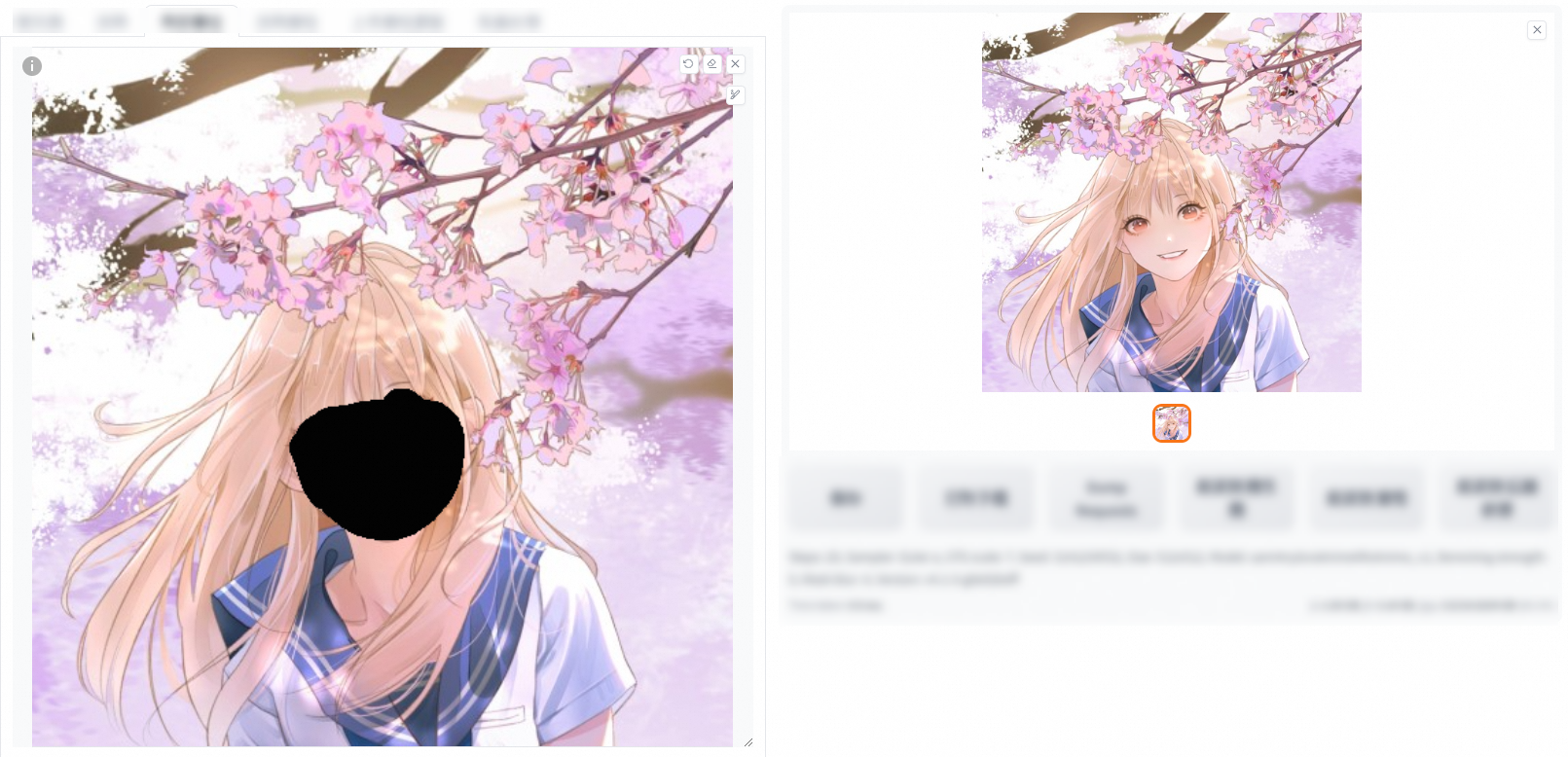

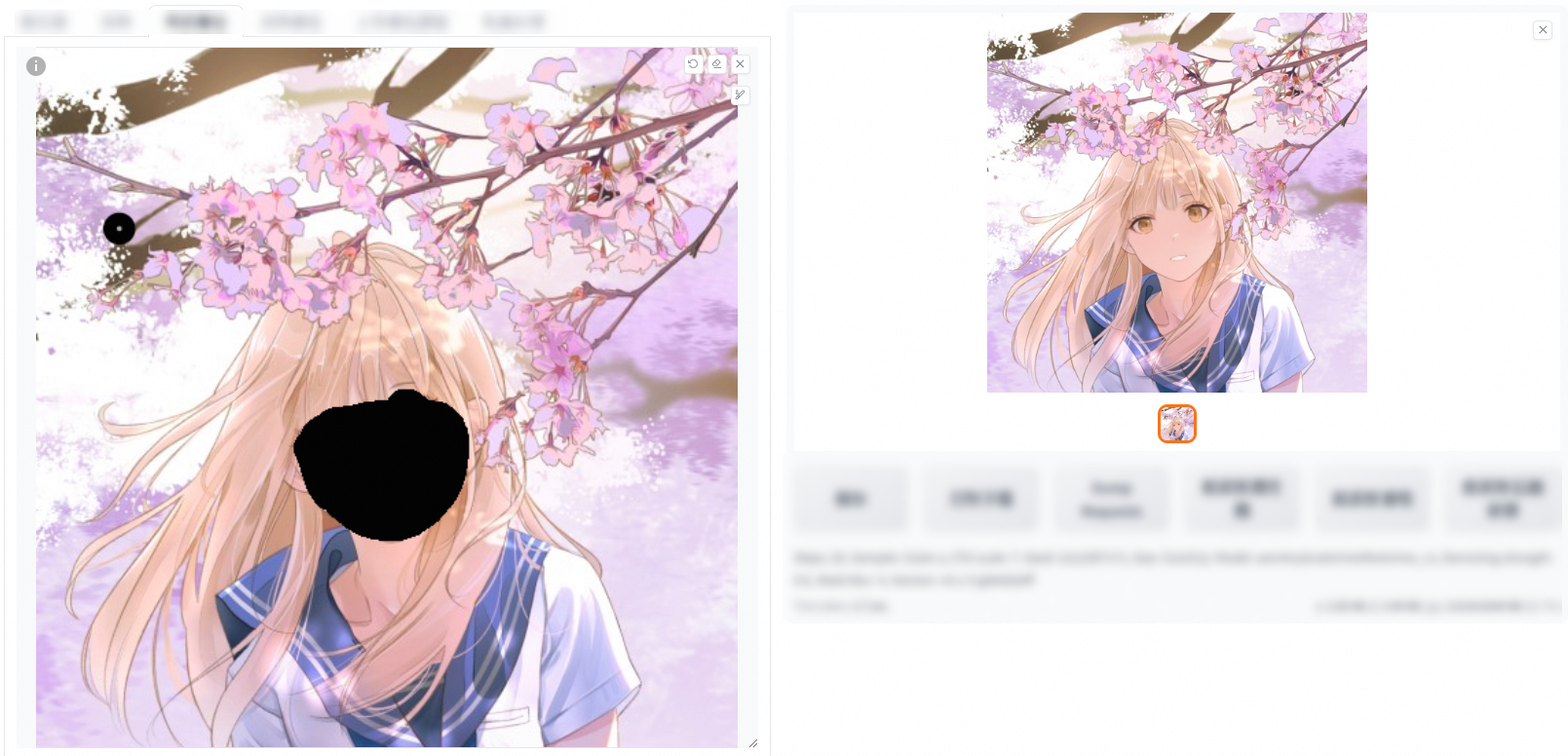

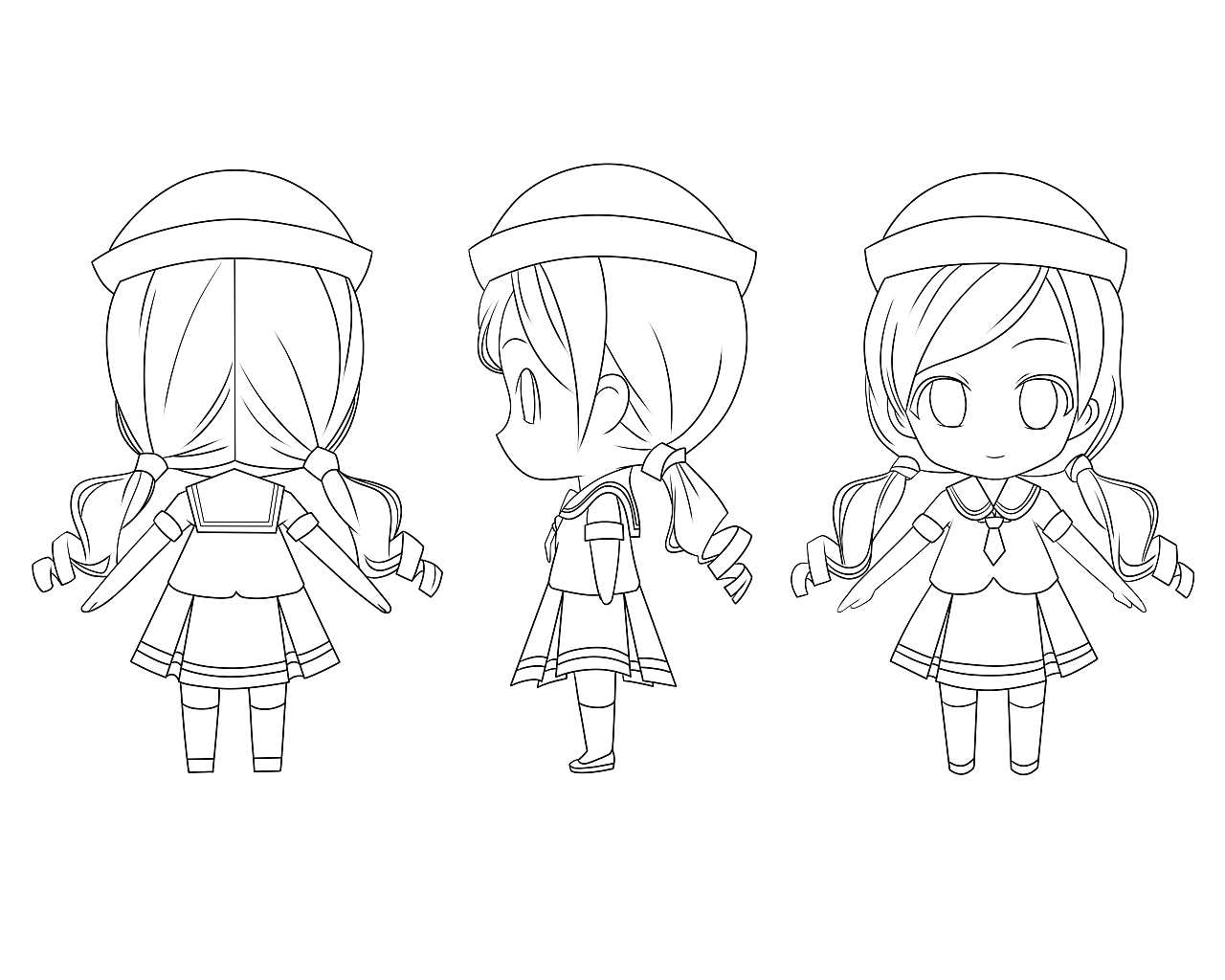

img2img

The img2img feature allows you to generate a new image from an existing image based on the prompts that you provide. For example, you can use this feature to convert a photo of a real person into an anime-style image or to color a sketch. The img2img feature also allows you to adjust parameters and to modify or redraw specific parts of an image.

The generated image can be used for the next image-to-image creation or partial re-editing, and can also be used in other features. The system can automatically generate keywords for the input image by using interrogation based on the CLIP and DeepBooru models. CLIP is suitable for realistic images and DeepBooru is suitable for cartoon images.

The following sections describe the procedures for using the img2img feature.

img2img

The following sections describe the parameters that are available for different features.

Image information

After you upload an image that is generated by Stable Diffusion, you can view the prompts and parameters of the image.

However, a screenshot of the original image, or a copy that is saved from another application, cannot be identified in Stable Diffusion.
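The generation information is plain text, so it is easy to process once you have it. The following sketch parses such a text block into a dictionary. It assumes the layout that the AUTOMATIC1111 web UI commonly writes: the prompt, an optional line that starts with "Negative prompt:", and a final line of comma-separated "key: value" settings. The function name `parse_infotext` and the sample text are hypothetical, and values that themselves contain ", " are not handled.

```python
def parse_infotext(text: str) -> dict[str, str]:
    """Split generation info into prompt, negative prompt, and settings (sketch)."""
    # Assumption: the last line holds the settings, everything before it the prompts.
    *body, settings_line = text.strip().splitlines()
    prompt_lines, negative_lines = [], []
    target = prompt_lines
    for line in body:
        if line.startswith("Negative prompt:"):
            target = negative_lines
            line = line[len("Negative prompt:"):]
        target.append(line.strip())
    info = {
        "Prompt": "\n".join(prompt_lines),
        "Negative prompt": "\n".join(negative_lines),
    }
    for part in settings_line.split(", "):
        key, separator, value = part.partition(": ")
        if separator:
            info[key] = value
    return info


sample = (
    "a girl holding an apple\n"
    "Negative prompt: blurry, deformed\n"
    "Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 1234, Size: 512x512"
)
for key, value in parse_infotext(sample).items():
    print(f"{key}: {value}")
```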

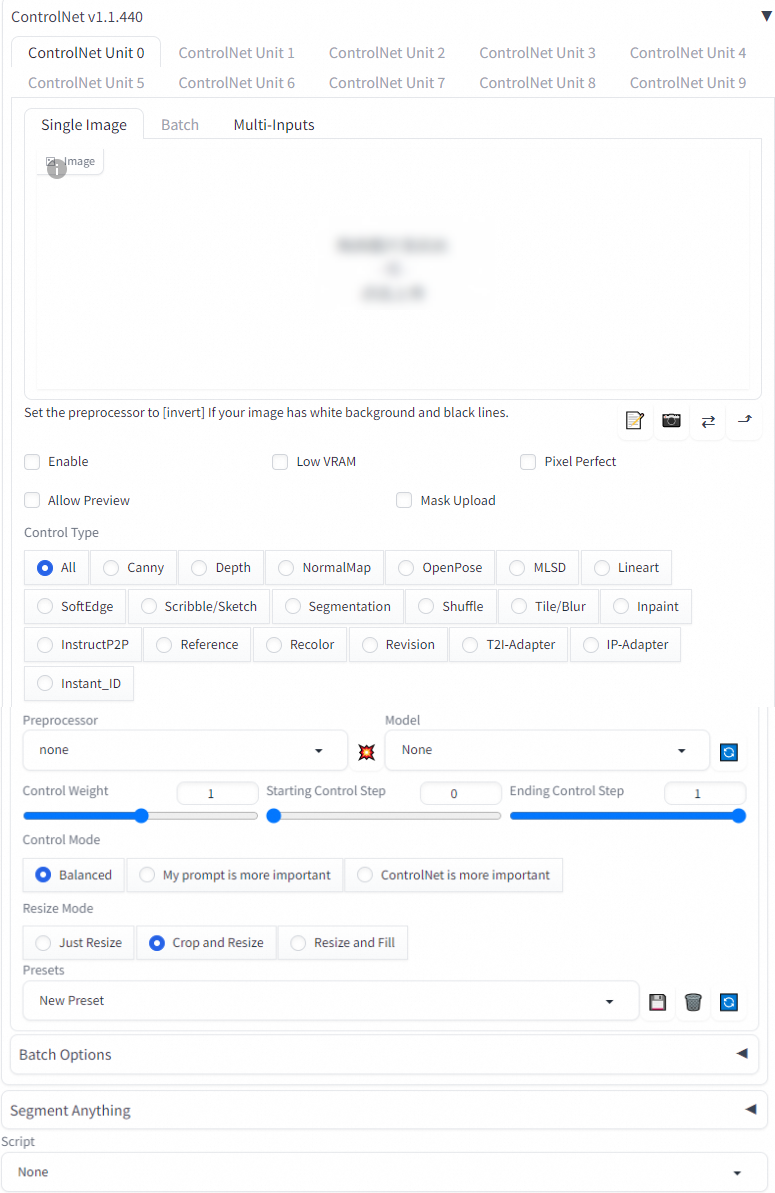

ControlNet plug-in

The MistoLine-SDXL-ControlNet plug-in developed by TheMisto.ai is provided for all versions of Stable Diffusion WebUI and ComfyUI in PAI ArtLab.

Parameters

Parameter | Description |

Enable | Specifies whether to enable ControlNet. |

Low VRAM | If the GPU has less than 4 GB of video memory, you can select this option. |

Preprocessor | The preprocessing effect varies based on the preprocessor. Each preprocessor has a corresponding model. You can use a preprocessor together with the corresponding model. |

Model | A model can be used together with a preprocessor. You must manually download a model and upload it to the corresponding directory in OSS. |

Control Weight | The weight of ControlNet in the AI generation. In the process of img2img generation, a low denoising strength in combination with a high control weight can change the filter and style of an image without changing the details of the image, and a high denoising strength in combination with a low control weight can modify the details of an image. |

Starting Control Step | The value is a percentage value that ranges from 0 to 1. This parameter indicates where ControlNet starts control. A value of 0 indicates that ControlNet starts control from the first step, and a value of 1 indicates that ControlNet starts control from the last step. A larger value indicates that ControlNet has less effect on the AI generation. For example, if you set the Sampling steps parameter to 20 and the Starting Control Step parameter to 0.3, ControlNet starts control from the sixth step based on the following formula: 20 × 0.3 = 6. |

Ending Control Step | The value is a percentage value that ranges from 0 to 1. This parameter indicates where ControlNet ends control. |

Control Type |

|
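The Starting Control Step arithmetic in the table (20 × 0.3 = 6) can be written as a small helper. The sketch below assumes that Ending Control Step is converted to a step number by the same formula, which this topic does not state explicitly; the function name `controlnet_step_window` is hypothetical.

```python
def controlnet_step_window(sampling_steps: int, start: float, end: float = 1.0) -> tuple[int, int]:
    """Convert the fractional control steps into step numbers (illustrative sketch)."""
    if not 0.0 <= start <= end <= 1.0:
        raise ValueError("start and end must satisfy 0 <= start <= end <= 1")
    # Sampling steps multiplied by the fraction, as in the example: 20 x 0.3 = 6.
    first_step = round(sampling_steps * start)
    # Assumption: the ending step follows the same formula.
    last_step = round(sampling_steps * end)
    return first_step, last_step


print(controlnet_step_window(20, 0.3))       # prints (6, 20)
print(controlnet_step_window(20, 0.0, 0.5))  # prints (0, 10)
```

A narrower window leaves more steps outside ControlNet's control, which matches the note that a larger Starting Control Step gives ControlNet less effect on the generation.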