About the model

Alibaba Cloud open-sourced the QwQ-32B reasoning model on March 6. Using large-scale reinforcement learning, QwQ-32B delivers breakthrough improvements in math, coding, and general capabilities. Its overall performance matches DeepSeek-R1 while significantly lowering deployment and usage costs.

- On AIME24 (a math benchmark) and LiveCodeBench (a coding benchmark), QwQ-32B performs on par with DeepSeek-R1 and far outperforms o1-mini and R1-distilled models of the same size.

- On LiveBench (the "hardest LLM leaderboard" led by Yann LeCun, Meta's Chief AI Scientist), IFEval (an instruction-following benchmark proposed by Google), and BFCL (a function- and tool-calling accuracy benchmark developed by UC Berkeley and others), QwQ-32B scores higher than DeepSeek-R1.

- QwQ-32B also integrates agent capabilities: it can think critically while using tools and adjust its reasoning based on environmental feedback.

The PAI Model Gallery now supports one-click deployment, fine-tuning, and evaluation of QwQ-32B. Deploying the model requires a GPU with 96 GB of VRAM. The quantized versions QwQ-32B-GGUF and QwQ-32B-AWQ are also supported and can be deployed on lower-cost GPUs, such as a single A10.
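The 96 GB requirement and the single-A10 option both follow from simple memory arithmetic. The sketch below estimates weight memory and KV-cache memory. The architecture values (32.5B parameters, 64 layers, 8 key/value heads, head dimension 128) come from the public QwQ-32B model card and should be checked against the model's config.json. The estimate ignores activations, quantization scales, and framework overhead.

```python
# Back-of-the-envelope VRAM estimate for QwQ-32B (a sketch, not a sizing tool).
# Architecture values are from the public QwQ-32B model card; verify them
# against config.json. Activations and framework overhead are ignored.

PARAMS = 32.5e9     # total parameters
NUM_LAYERS = 64     # transformer layers
NUM_KV_HEADS = 8    # key/value heads (grouped-query attention)
HEAD_DIM = 128      # hidden size 5120 / 40 query heads


def weight_gb(params: float, bits_per_param: float) -> float:
    """Memory for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


def kv_cache_gb(tokens: int, bytes_per_value: int = 2) -> float:
    """KV cache size for `tokens` cached tokens, 16-bit values by default.

    Each token stores one key and one value vector per layer and KV head.
    """
    bytes_per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value
    return tokens * bytes_per_token / 1e9


if __name__ == "__main__":
    print(f"16-bit weights: {weight_gb(PARAMS, 16):.1f} GB")
    print(f"4-bit weights:  {weight_gb(PARAMS, 4):.1f} GB")
    print(f"KV cache, 32K tokens: {kv_cache_gb(32_768):.1f} GB")
```

At 16 bits per parameter, the weights alone take about 65 GB. That leaves room for roughly 8.6 GB of KV cache per 32,768 cached tokens within 96 GB. At 4 bits, as with AWQ, the weights take roughly 16 GB before scales and any layers kept in higher precision are counted. This is why a 24 GB A10 can serve a quantized build, though with less headroom for long contexts.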

Deploy the model

- Go to the Model Gallery page.

  - Log on to the PAI console. In the top-left corner, select a region that meets your needs. Switch regions as needed to find available compute resources.

  - In the left-side navigation pane, click Workspace List, and then click the name of your workspace to open it.

  - In the left-side navigation pane, click Getting Started > Model Gallery.

- On the Model Gallery page, find the QwQ-32B model card and click it to open the model details page.

- Click Deploy in the upper-right corner. Select a deployment framework, enter a name for your inference service, and configure the required resources. Then click Deploy to launch the service on PAI-EAS, the Alibaba Cloud AI inference platform. Supported frameworks include SGLang, vLLM, and BladeLLM, a high-performance inference framework developed by Alibaba Cloud PAI.

- Use the inference service. After the service is deployed, go to the service page and click View Endpoint Information to obtain the endpoint URL and token. For invocation instructions, click the pre-trained model link to return to the model introduction page.

You can also debug the deployed QwQ-32B model online in the PAI-EAS inference platform.
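Services deployed with SGLang or vLLM typically expose an OpenAI-compatible API. The sketch below uses only the Python standard library to call a chat completions route and to separate QwQ's reasoning from its final answer. Three details are assumptions: the /v1/chat/completions path, the bare token in the Authorization header, and the model name "QwQ-32B". Confirm all three against the invocation instructions on the model introduction page. The sampling values (temperature 0.6, top_p 0.95) follow the recommendations on the public QwQ-32B model card.

```python
import json
import urllib.request

# Placeholders: copy both values from "View Endpoint Information" on the service page.
ENDPOINT = "<your-endpoint-url>"
TOKEN = "<your-token>"


def build_chat_request(endpoint: str, token: str, prompt: str,
                       model: str = "QwQ-32B") -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible chat completions route.

    Assumptions: the route is /v1/chat/completions, the EAS token is sent as-is
    in the Authorization header, and "QwQ-32B" is the served model name.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Sampling values recommended on the QwQ-32B model card.
        "temperature": 0.6,
        "top_p": 0.95,
        "max_tokens": 4096,
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": token},
        method="POST",
    )


def split_reasoning(text: str) -> tuple[str, str]:
    """Split a QwQ reply into (reasoning, answer) at the closing </think> tag.

    The chat template may already supply the opening <think> tag, so a reply
    often contains only the closing tag; both forms are handled.
    """
    head, sep, tail = text.partition("</think>")
    if not sep:  # no reasoning block in the reply
        return "", text.strip()
    return head.replace("<think>", "", 1).strip(), tail.strip()


def chat(prompt: str) -> str:
    """Send one prompt to the deployed service and return only the final answer."""
    req = build_chat_request(ENDPOINT, TOKEN, prompt)
    with urllib.request.urlopen(req, timeout=600) as resp:
        reply = json.load(resp)
    _, answer = split_reasoning(reply["choices"][0]["message"]["content"])
    return answer
```

After filling in ENDPOINT and TOKEN, call `chat("What is 17 * 23?")` to get the answer without the reasoning trace. If the server returns reasoning in a separate field instead, `split_reasoning` returns the content unchanged.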

Fine-tune the model

The PAI Model Gallery provides supervised fine-tuning (SFT) for QwQ-32B, with both LoRA fine-tuning and full-parameter fine-tuning available out of the box.

- Prepare training data. The SFT algorithm accepts training data in JSONL and other formats. For detailed format requirements, see the model documentation. In a JSONL file, each line is one training sample. Here is an example:

{"messages": [{"role": "system", "content": "You are a helpful and harmless assistant"}, {"role": "user", "content": "Who are you?"}, {"role": "assistant", "content": "I am Xiao Pai, an AI assistant trained by PAI. My goal is to provide useful, accurate, and timely information and help users communicate effectively. How can I help you today?"}]}
{"messages": [{"role": "system", "content": "You are a helpful and harmless assistant"}, {"role": "user", "content": "Who are you!"}, {"role": "assistant", "content": "Hello! I am Xiao Pai, an AI language model developed by PAI. I can answer questions, provide information, hold conversations, and help solve problems. If you have any questions or need assistance, feel free to ask!"}]}
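Before you upload the file to OSS, you may want to check that every line parses and follows the messages layout in the example. The following is a minimal sketch. The rule that each sample ends with an assistant turn is an assumption drawn from the example, not a documented PAI requirement. The file name train.jsonl in the usage note is hypothetical.

```python
import json

VALID_ROLES = {"system", "user", "assistant"}


def validate_sft_line(line: str) -> list[str]:
    """Return the problems found in one JSONL line (an empty list means it looks valid).

    Assumption: a sample must end with an assistant message, as in the example.
    """
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    messages = record.get("messages") if isinstance(record, dict) else None
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i} is not an object")
            continue
        if msg.get("role") not in VALID_ROLES:
            problems.append(f"message {i} has unexpected role {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str):
            problems.append(f"message {i} has no string 'content'")
    if isinstance(messages[-1], dict) and messages[-1].get("role") != "assistant":
        problems.append("last message is not from the assistant")
    return problems


def validate_file(path: str) -> dict[int, list[str]]:
    """Validate every non-blank line; map 1-based line numbers to their problems."""
    report = {}
    with open(path, encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if line.strip():
                problems = validate_sft_line(line)
                if problems:
                    report[lineno] = problems
    return report
```

For example, `validate_file("train.jsonl")` returns an empty dict when every line passes. Otherwise, it lists each problem under the line number where it occurs.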

- Configure training parameters. After preparing your data, upload it to an Object Storage Service (OSS) bucket. Because QwQ-32B is large, the algorithm requires GPUs with at least 96 GB of VRAM. Make sure your resource quota includes enough compute resources.

The table below lists supported hyperparameters. Adjust them based on your dataset and compute resources—or use the default values.

| Parameter | Description | Notes |
| --- | --- | --- |
| learning_rate | Learning rate. Controls how much the model weights change at each update. | A learning rate that is too high causes unstable training and large loss fluctuations; one that is too low slows convergence. Choose a value that lets the model converge quickly and stably. |
| num_train_epochs | Number of passes over the training dataset. | Too few epochs may cause underfitting; too many may cause overfitting. With small datasets, increase the number of epochs to avoid underfitting. Smaller learning rates usually require more epochs. |
| per_device_train_batch_size | Number of samples processed per GPU in one training step. | Larger batch sizes speed up training but use more VRAM. Use the largest batch size that fits in GPU memory, and check GPU memory usage on the task monitoring page. |
| gradient_accumulation_steps | Number of steps over which gradients are accumulated before the model weights are updated. | Small batch sizes increase gradient variance and slow convergence. Gradient accumulation sums the gradients of several batches before each update. Set this value to a multiple of your number of GPUs. |
| max_length | Maximum token length of the input data in one training step. | After tokenization, training data becomes a sequence of tokens. Use a token estimation tool to estimate text length. |
| lora_rank | LoRA rank. | |
| lora_alpha | LoRA alpha. | LoRA scaling coefficient: the LoRA update is scaled by lora_alpha / lora_rank. Usually set to 2 × lora_rank. |
| lora_dropout | Dropout rate for LoRA training. | Randomly drops neurons during training to prevent overfitting. |
| lorap_lr_ratio | LoRA+ learning rate ratio λ = ηB / ηA, where ηA and ηB are the learning rates of adapter matrices A and B. | Unlike LoRA, LoRA+ uses different learning rates for adapter matrices A and B. This improves performance and speeds up fine-tuning at no extra compute cost. Set lorap_lr_ratio to 0 to use standard LoRA instead of LoRA+. |
| advanced_settings | Custom arguments in the format "--key1 value1 --key2 value2". Leave blank if not needed. | See the commonly used keys below. |

Commonly used advanced_settings keys:

- save_strategy: Checkpoint save strategy. Options: "steps", "epoch", or "no". Default: "steps".

- save_steps: Number of steps between checkpoint saves. Default: 500.

- save_total_limit: Maximum number of checkpoints to keep; older checkpoints are deleted automatically. Default: 2. Set to None to keep all checkpoints.

- warmup_ratio: Fraction of training steps used for learning rate warmup. During warmup, the learning rate rises gradually from a small value to the initial learning rate. Default: 0.
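The batch-size, warmup, and LoRA hyperparameters interact through simple arithmetic. The sketch below makes that arithmetic explicit under three assumptions. First, the GPUs run data-parallel. Second, each optimizer update consumes per_device_train_batch_size × gradient_accumulation_steps samples on every GPU. Third, warmup steps are rounded up. The function names are hypothetical, and PAI's training script may round a final partial batch differently.

```python
import math


def training_schedule(num_samples: int, num_gpus: int,
                      per_device_train_batch_size: int,
                      gradient_accumulation_steps: int,
                      num_train_epochs: int,
                      warmup_ratio: float = 0.0) -> dict:
    """Approximate optimizer-step counts implied by the hyperparameters above."""
    # Samples consumed by one weight update across all data-parallel GPUs.
    effective_batch = per_device_train_batch_size * gradient_accumulation_steps * num_gpus
    steps_per_epoch = math.ceil(num_samples / effective_batch)
    total_steps = steps_per_epoch * num_train_epochs
    return {
        "effective_batch_size": effective_batch,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": total_steps,
        # The first warmup_steps updates ramp the learning rate up to learning_rate.
        "warmup_steps": math.ceil(warmup_ratio * total_steps),
    }


def lora_settings(learning_rate: float, lora_rank: int, lora_alpha: float,
                  lorap_lr_ratio: float) -> dict:
    """LoRA update scaling and LoRA+ learning rates for adapter matrices A and B."""
    return {
        # The low-rank update B @ A is multiplied by lora_alpha / lora_rank.
        "lora_scaling": lora_alpha / lora_rank,
        "lr_A": learning_rate,
        # LoRA+: lr_B = lambda * lr_A; a ratio of 0 falls back to plain LoRA.
        "lr_B": learning_rate * lorap_lr_ratio if lorap_lr_ratio > 0 else learning_rate,
    }
```

Here is an example: 1,000 samples on 8 GPUs with per_device_train_batch_size=2 and gradient_accumulation_steps=4 give an effective batch of 64. That works out to 16 steps per epoch and 48 steps over 3 epochs. With warmup_ratio=0.05, the first 3 of those steps are warmup.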

- Click Train to start training, and monitor the training job status and logs. After training finishes, deploy the fine-tuned model as an online service.

Evaluate the model

The PAI Model Gallery includes common evaluation algorithms. You can evaluate pre-trained and fine-tuned models out of the box. Evaluation helps you assess model performance and compare multiple models to choose the best fit for your use case.

Evaluation entry points:

- Evaluate a pre-trained model directly.

- Evaluate a fine-tuned model on the training job details page.

Evaluation supports both custom and public datasets:

-

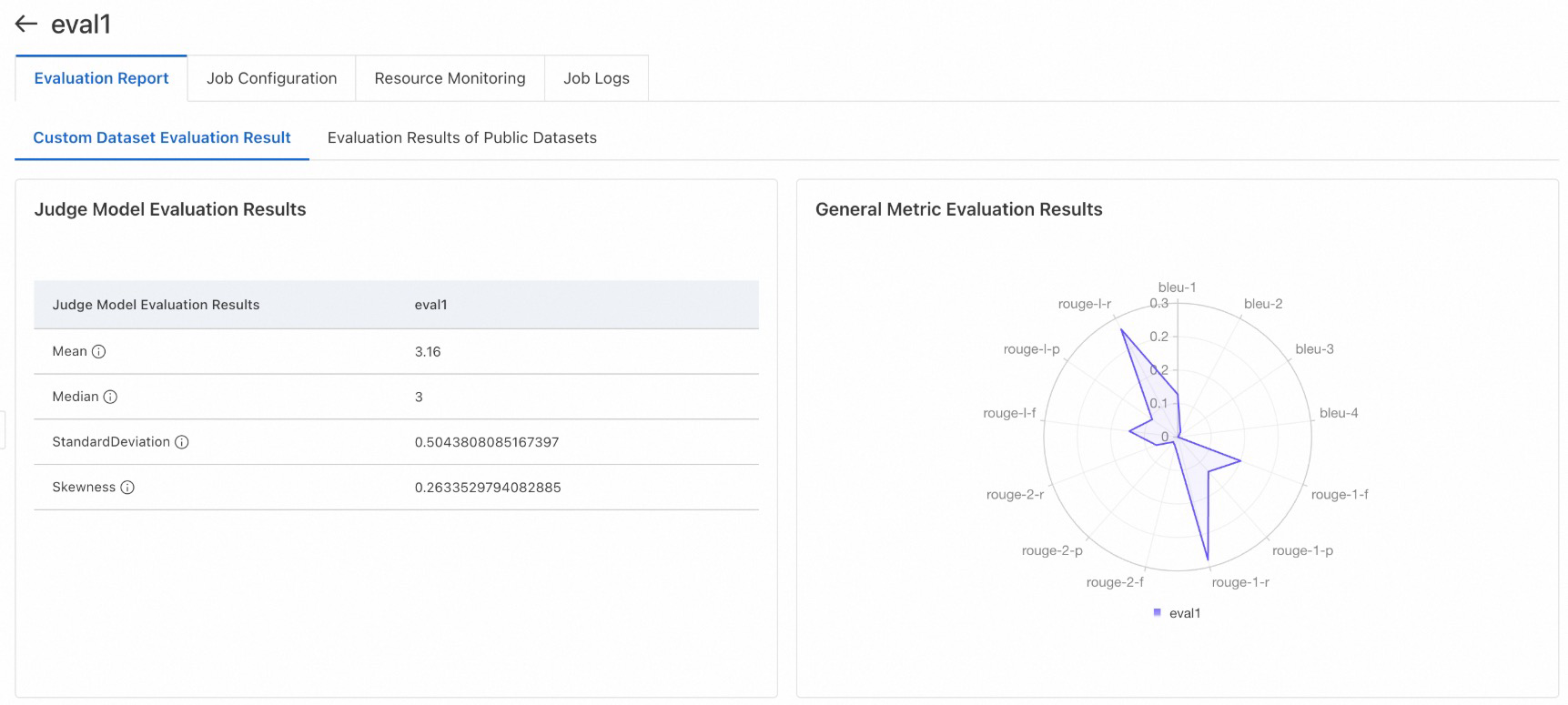

Custom dataset evaluation

Supported metrics include BLEU and ROUGE—common NLP text-matching scores—and LLM-as-a-Judge evaluation (available only in Expert Mode). LLM-as-a-Judge uses another LLM to score and explain results. Use this to test whether a model fits your unique scenario.

Provide an evaluation dataset in JSONL format. Each line must be a JSON object with question and answer fields. Example file: evaluation_test.jsonl.

- Public dataset evaluation

  This approach groups open-source evaluation datasets by domain to evaluate the capabilities of large language models (LLMs) comprehensively. PAI currently maintains datasets such as CMMLU, GSM8K, TriviaQA, MMLU, C-Eval, TruthfulQA, and HellaSwag, covering domains such as math, knowledge, and reasoning. More public datasets are being added. Note: Evaluations on GSM8K, TriviaQA, and HellaSwag take significant time, so select them only as needed.
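For custom dataset evaluation, the sketch below writes a file in the question/answer JSONL layout described above. It also shows what a ROUGE-L score measures: the longest common subsequence (LCS) between the reference answer and the model's answer, converted into precision, recall, and F1. The sketch tokenizes on whitespace, an assumption suited to English text. PAI's own implementation may differ in tokenization (especially for Chinese text) and in how scores are aggregated.

```python
import json


def write_eval_jsonl(path: str, pairs: list[tuple[str, str]]) -> None:
    """Write one {"question": ..., "answer": ...} object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            record = {"question": question, "answer": answer}
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence, via DP with two rolling rows."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference: str, candidate: str) -> float:
    """ROUGE-L F1 on whitespace tokens: LCS-based precision and recall."""
    ref, cand = reference.split(), candidate.split()
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)
```

For example, `write_eval_jsonl("evaluation_test.jsonl", [("What is 2 + 2?", "4")])` produces a one-line dataset. Scoring "the cat on the mat" against the reference "the cat sat on the mat" gives a ROUGE-L F1 of 10/11, because every candidate token appears in order in the reference but one reference token is missing.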

Select an output path for the evaluation results, choose compute resources based on the system recommendations, and submit the evaluation job. After the job completes, view the results on the job page. If you select many datasets, the model evaluates them one at a time, so the job may take a long time. Check the logs to see which dataset is currently running.

View evaluation reports.

[Figure: example evaluation reports for custom and public dataset evaluations]

Contact us

We welcome your continued use of the PAI Model Gallery, where we regularly add state-of-the-art (SOTA) models. If you have model requests, contact us through the PAI Model Gallery user group on DingTalk: search for group number 79680024618.