To integrate the capabilities of the DeepSeek series of large language models (LLMs), you need a stable and scalable backend model service. This topic explains how to deploy the DeepSeek-V3 and DeepSeek-R1 models by using Model Gallery on the Platform for AI (PAI). This method creates a standard, API-compatible model service endpoint, eliminating the need to manage underlying infrastructure such as environment configuration, model loading, and inference optimization. You can then integrate this endpoint directly into your applications.

Solution architecture

This solution is built on PAI and includes the following core components:

Model Gallery: Serves as the entry point for model distribution and deployment. It provides pre-configured DeepSeek models and their corresponding deployment configurations.

Elastic Algorithm Service (EAS): The core service that hosts model deployment and inference. It automatically manages underlying compute resources, such as GPUs, and starts model service instances based on your configuration.

Inference acceleration engines (SGLang, vLLM, or BladeLLM): These engines are used to optimize model inference performance.

SGLang/vLLM: Provides interfaces that are fully compatible with the OpenAI API, which simplifies migrating existing applications.

BladeLLM: Alibaba Cloud's proprietary high-performance inference framework that delivers superior inference performance in specific scenarios.

API gateway: EAS exposes the deployed model service through a secure API Gateway, providing a service endpoint and an authentication token.

Step 1: Plan models and resources

1. Choose an inference engine

Recommended: SGLang. It delivers high performance while being fully compatible with the OpenAI API standard, making it a great fit for the mainstream application ecosystem. In most scenarios, it supports a longer maximum context length than vLLM.

Alternative: vLLM. As a popular framework in the industry, it also offers excellent API compatibility.

For specific scenarios: BladeLLM. Choose BladeLLM, the high-performance inference framework developed by Alibaba Cloud PAI, only when you prioritize maximum inference performance and can work with an API that differs from the OpenAI standard. For example, it does not support client.models.list(), and if the max_tokens parameter is omitted, the output is truncated to 16 tokens by default.

2. Choose a model and resources

Your choice of model determines the required compute resources and deployment costs. DeepSeek models are available as "full-version" and "distilled" variants, which have vastly different resource requirements.

Development and testing: Use a distilled model, such as DeepSeek-R1-Distill-Qwen-7B. These models have a smaller resource footprint (typically a single GPU with 24 GB of GPU memory), deploy quickly, and are cost-effective, which makes them ideal for rapid feature validation.

Production environment: Balance performance against cost. The DeepSeek-R1-Distill-Qwen-32B model strikes a good balance between effectiveness and cost. If you require higher model performance, choose a full-version model. This requires multiple high-end GPUs, such as eight GPUs with 96 GB of GPU memory each, which significantly increases costs.

The following table lists the minimum configurations for different model versions and the maximum number of tokens supported by different instance types and inference engines.

Full-version models

| Model | Deployment method | Maximum token count (input + output), SGLang (recommended) | Maximum token count (input + output), vLLM | Minimum configuration |
| --- | --- | --- | --- | --- |
| DeepSeek-R1 | Single-node, NVIDIA GPU | 56,000 | 65,536 | Single-node 8 × GU120 (8 × 96 GB of GPU memory) |
| DeepSeek-R1 | Single-node, GP7V instance type | 56,000 | 16,384 | |
| DeepSeek-R1 | Distributed, Lingjun Intelligent Computing Service resources | 163,840 | 163,840 | |
| DeepSeek-V3 | Single-node, NVIDIA GPU | 56,000 | 65,536 | Single-node 8 × GU120 (8 × 96 GB of GPU memory) |
| DeepSeek-V3 | Single-node, GP7V instance type | 56,000 | 16,384 | |
| DeepSeek-V3 | Distributed, Lingjun Intelligent Computing Service resources | 163,840 | 163,840 | |

Single-node deployment instance type notes:

NVIDIA GPU:

ml.gu8v.c192m1024.8-gu120 and ecs.gn8v-8x.48xlarge: Available as public resources, but availability may be limited.

ecs.ebmgn8v.48xlarge: This instance type cannot be used as a public resource. Purchase dedicated EAS resources.

GP7V instance type:

ml.gp7vf.16.40xlarge is available as a public resource and can only be used as a spot instance. If NVIDIA GPU resources are scarce, switch to the China (Ulanqab) region to find GP7V resources. Configure a VPC when deploying.

Distributed deployment instance type descriptions (recommended when high performance is required):

Distributed deployment relies on high-speed networking and must use PAI Lingjun Intelligent Computing Service, which provides high-performance, elastic heterogeneous computing power. You must also configure a VPC during deployment. To use PAI Lingjun Intelligent Computing Service, switch the region to China (Ulanqab).

Lingjun public resources:

ml.gu7xf.8xlarge-gu108: Requires four machines for a single instance deployment and can only be used as a spot instance.

GP7V instance type: Requires two machines for a single instance deployment.

Lingjun prepaid resources: Requires whitelist approval. Contact your sales manager or submit a ticket for consultation.

Distilled models

| Model | Maximum token count (input + output), SGLang (recommended) | Maximum token count (input + output), vLLM | Maximum token count (input + output), BladeLLM | Minimum configuration |
| --- | --- | --- | --- | --- |
| DeepSeek-R1-Distill-Qwen-1.5B | 131,072 | 131,072 | 131,072 | 1 × A10 GPU (24 GB of GPU memory) |
| DeepSeek-R1-Distill-Qwen-7B | 131,072 | 32,768 | 131,072 | 1 × A10 GPU (24 GB of GPU memory) |
| DeepSeek-R1-Distill-Llama-8B | 131,072 | 32,768 | 131,072 | 1 × A10 GPU (24 GB of GPU memory) |
| DeepSeek-R1-Distill-Qwen-14B | 131,072 | 32,768 | 131,072 | 1 × GPU L (48 GB of GPU memory) |
| DeepSeek-R1-Distill-Qwen-32B | 131,072 | 32,768 | 131,072 | 2 × GPU L (2 × 48 GB of GPU memory) |
| DeepSeek-R1-Distill-Llama-70B | 131,072 | 32,768 | 131,072 | 2 × GU120 (2 × 96 GB of GPU memory) |
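As a rough cross-check of the minimum configurations above, the memory needed just to hold the weights is about parameter count × bytes per parameter. The sketch below is an approximation under stated assumptions, not PAI sizing guidance: it uses the nominal parameter counts in the model names, assumes BF16 weights (2 bytes) for the distilled models and FP8 weights (1 byte) for the 671B-parameter full-version model, and ignores the KV cache, activations, and engine overhead. The remaining headroom is what the engine has left for the KV cache, which is one reason the supported context length differs between configurations.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumptions (not from the PAI documentation): nominal parameter counts taken
# from the model names, BF16 (2 bytes) for distilled models, FP8 (1 byte) for
# the 671B full-version model. KV cache, activations, and engine overhead are
# NOT included, so real deployments need extra headroom.

BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the memory needed for the weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# (model, nominal parameters, assumed dtype, total GPU memory of the minimum config in GB)
CONFIGS = [
    ("DeepSeek-R1-Distill-Qwen-7B", 7e9, "bf16", 24),
    ("DeepSeek-R1-Distill-Qwen-32B", 32e9, "bf16", 2 * 48),
    ("DeepSeek-R1-Distill-Llama-70B", 70e9, "bf16", 2 * 96),
    ("DeepSeek-R1 (full version)", 671e9, "fp8", 8 * 96),
]

if __name__ == "__main__":
    for name, params, dtype, gpu_gb in CONFIGS:
        weights = weight_memory_gb(params, dtype)
        headroom = gpu_gb - weights
        print(f"{name}: weights ~{weights:.0f} GB of {gpu_gb} GB, "
              f"~{headroom:.0f} GB left for KV cache and overhead")
```

For example, the 7B distilled model needs about 14 GB for BF16 weights on a 24 GB A10, and the full-version model needs about 671 GB for FP8 weights on 8 × 96 GB.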

Step 2: Deploy the model service

Log on to the PAI console, select the target region in the upper-left corner, navigate to Workspaces from the left-side navigation pane, and select the target workspace.

In the workspace, navigate to QuickStart > Model Gallery.

In the model list, search for and select the target model, such as DeepSeek-R1-Distill-Qwen-7B, to go to the model details page.

Click Deploy in the upper-right corner. On the deployment configuration page, set the following parameters based on your plan from Step 1.

Inference engine: SGLang or vLLM is recommended.

Resource Information: Choose Public Resources or Dedicated Resources, and select the appropriate GPU specification based on the model's requirements.

By default, public resources are used, and a recommended specification is provided. If availability is insufficient, try switching to another region.

Important: When you deploy using public resources, billing starts as soon as the service enters the Running state and is charged based on duration, even if there are no calls. Stop the service promptly after testing.

If you select Resource Quota, you must select the inference engine and deployment template that correspond to your chosen instance type. For example, if you use a GP7V instance type, select SGLang as the inference engine and Single-Node-GP7V as the deployment template.

After confirming all configurations are correct, click Deploy. The system begins to create the service.

Note: For large-parameter models such as the full-version DeepSeek-R1, model loading may take 20 to 30 minutes.

View the status of the deployment task on the Model Gallery > Job Management > Deployment Jobs page. Click the service name to go to the service details page. You can also click More in the upper-right corner to navigate to the PAI-EAS model service details page for more information.

Step 3: Debug online

Navigate to the Model Gallery > Job Management > Deployment Jobs page and click the name of the deployed service.

Click the Online Debugging tab.

For an SGLang/vLLM deployment, you can get the service's API description file from <EAS_ENDPOINT>/openapi.json. The following steps test the chat completions endpoint POST <EAS_ENDPOINT>/v1/chat/completions.

Append the API path. The online testing tool pre-fills the endpoint URL. Append the specific API path, v1/chat/completions.

Construct the request body. For example, if the prompt is "What is 3 + 5?", the request body is as follows. The value of the model parameter is the model name returned by the <EAS_ENDPOINT>/v1/models endpoint. This example uses DeepSeek-R1-Distill-Qwen-7B.

```json
{
    "model": "DeepSeek-R1-Distill-Qwen-7B",
    "messages": [
        {
            "role": "user",
            "content": "What is 3 + 5?"
        }
    ]
}
```

Send the request.
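Outside the console, the same two values are needed: the full request URL and the served model name. The following standard-library-only sketch shows one way to get both for an SGLang/vLLM deployment. The helper names api_url, first_model_id, and fetch_model_id are hypothetical, and the sketch assumes that /v1/models returns the OpenAI list format ({"data": [{"id": ...}]}) and that the token is passed as-is in the Authorization header, as in the other examples in this topic. It does not apply to BladeLLM, which does not support listing models.

```python
import json
import urllib.request


def api_url(endpoint: str, path: str) -> str:
    """Join the EAS endpoint and an OpenAI-style path such as 'v1/models'.

    Normalizing slashes here avoids doubled '//' or a missing path, which
    would otherwise produce a 404.
    """
    return endpoint.rstrip("/") + "/" + path.lstrip("/")


def first_model_id(models_response: str) -> str:
    """Extract the first model name from a /v1/models JSON response body."""
    data = json.loads(models_response).get("data") or []
    if not data:
        raise ValueError("No models listed; check the endpoint and the engine type")
    return data[0]["id"]


def fetch_model_id(endpoint: str, token: str, timeout: float = 30.0) -> str:
    """GET <EAS_ENDPOINT>/v1/models and return the first model id (SGLang/vLLM only)."""
    req = urllib.request.Request(
        api_url(endpoint, "v1/models"),
        headers={"Authorization": token},  # token sent as-is, as in this topic's examples
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return first_model_id(resp.read().decode("utf-8"))
```

For example, fetch_model_id("<EAS_ENDPOINT>", "<EAS_TOKEN>") would return the served name (DeepSeek-R1-Distill-Qwen-7B for the deployment in this example), and api_url("<EAS_ENDPOINT>", "v1/chat/completions") gives the chat request URL.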

Step 4: Call the model service

The official usage recommendations for the DeepSeek-R1 model series are as follows:

Set temperature between 0.5 and 0.7. The recommended value is 0.6, which helps prevent repetitive or incoherent output.

Do not add a system prompt. Place all instructions in the user prompt.

For math-related questions, it is recommended to include "Please reason step by step and put the final answer in a \boxed{}." in the prompt.

To prevent unexpected output truncation when using BladeLLM, you must specify the max_tokens parameter in the request. If this parameter is omitted, the BladeLLM engine truncates the output to a default of 16 tokens.
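The recommendations above can be gathered into one helper so that every request follows them. This is a sketch, not an official client: build_request and its engine and math arguments are hypothetical names, and the default of 2048 tokens simply mirrors the SDK examples in this topic. The helper never adds a system message, sets temperature to 0.6, always sets max_tokens, and leaves out model for BladeLLM, as the BladeLLM HTTP examples in this topic do.

```python
# Suffix quoted from the usage recommendations for math questions.
MATH_SUFFIX = r"Please reason step by step and put the final answer in a \boxed{}."


def build_request(prompt: str, model: str = "", engine: str = "sglang",
                  math: bool = False, max_tokens: int = 2048,
                  stream: bool = False) -> dict:
    """Build a /v1/chat/completions body that follows the DeepSeek-R1 usage tips.

    - No system message: all instructions go into the single user message.
    - temperature 0.6, the recommended value within the 0.5-0.7 range.
    - max_tokens is always set, because BladeLLM truncates to 16 tokens otherwise.
    """
    content = f"{prompt}\n{MATH_SUFFIX}" if math else prompt
    body = {
        "messages": [{"role": "user", "content": content}],
        "temperature": 0.6,
        "max_tokens": max_tokens,
        "stream": stream,
    }
    if engine.lower() != "bladellm":
        # SGLang/vLLM expect the name returned by <EAS_ENDPOINT>/v1/models.
        body["model"] = model
    return body
```

For example, build_request("What is 3 + 5?", model="DeepSeek-R1-Distill-Qwen-7B", math=True) produces a body that can be posted with any of the HTTP examples below.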

API call

Get the service endpoint and token.

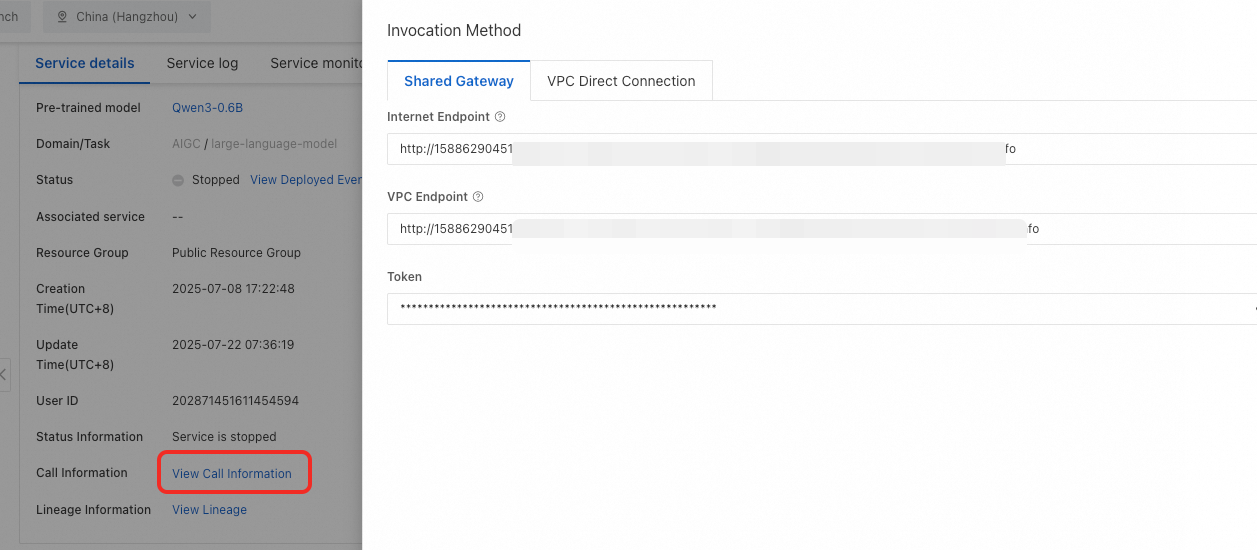

On the Model Gallery > Job Management > Deployment Jobs page, click the name of the deployed service to go to the service details page.

Click View Call Information to get the service endpoint and token.

Use a chat API call example.

Replace <EAS_ENDPOINT> with the service endpoint and <EAS_TOKEN> with the service token.

OpenAI SDK

Note:

Append /v1 to the end of the endpoint.

BladeLLM-accelerated deployments do not support client.models.list(). As a workaround, set the model parameter to an empty string ("").

SGLang/vLLM accelerated deployment

```python
import sys

from openai import OpenAI

# 1. Configure the client.
# Replace <EAS_ENDPOINT> and <EAS_TOKEN> with the actual endpoint and token of your service.
openai_api_key = "<EAS_TOKEN>"
openai_api_base = "<EAS_ENDPOINT>/v1"

client = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)

# 2. Get the model name.
try:
    model = client.models.list().data[0].id
    print(model)
except Exception as e:
    # Without a model name the request below cannot be sent, so stop here.
    sys.exit(f"Failed to get the model list. Check your endpoint and token. Error: {e}")

# 3. Construct and send the request.
stream = True
chat_completion = client.chat.completions.create(
    messages=[
        {"role": "user", "content": "Hello, please introduce yourself."}
    ],
    model=model,
    max_tokens=2048,
    stream=stream,
)

if stream:
    for chunk in chat_completion:
        # Some chunks (for example, the final one) carry no text.
        if chunk.choices and chunk.choices[0].delta.content:
            print(chunk.choices[0].delta.content, end="", flush=True)
else:
    result = chat_completion.choices[0].message.content
    print(result)
```

BladeLLM accelerated deployment

```python
from openai import OpenAI

##### API configuration #####
# Replace <EAS_ENDPOINT> with the endpoint of the deployed service and <EAS_TOKEN> with the token of the deployed service.
openai_api_key = "<EAS_TOKEN>"
openai_api_base = "<EAS_ENDPOINT>/v1"

client = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)

# BladeLLM accelerated deployment does not currently support client.models.list()
# for getting the model name. Set model to "" for compatibility.
model = ""

stream = True
chat_completion = client.chat.completions.create(
    messages=[
        {"role": "user", "content": "Hello, please introduce yourself."}
    ],
    model=model,
    max_tokens=2048,  # Required with BladeLLM; otherwise output is truncated to 16 tokens.
    stream=stream,
)

if stream:
    for chunk in chat_completion:
        # Some chunks (for example, the final one) carry no text.
        if chunk.choices and chunk.choices[0].delta.content:
            print(chunk.choices[0].delta.content, end="", flush=True)
else:
    result = chat_completion.choices[0].message.content
    print(result)
```

HTTP

SGLang/vLLM accelerated deployment

Replace <model_name> with the model name obtained from the model list API <EAS_ENDPOINT>/v1/models.

```shell
curl -X POST \
    -H "Content-Type: application/json" \
    -H "Authorization: <EAS_TOKEN>" \
    -d '{
        "model": "<model_name>",
        "messages": [
            {
                "role": "user",
                "content": "hello!"
            }
        ]
    }' \
    <EAS_ENDPOINT>/v1/chat/completions
```

```python
import json

import requests

# Replace <EAS_ENDPOINT> with the endpoint of the deployed service and <EAS_TOKEN> with the token of the deployed service.
EAS_ENDPOINT = "<EAS_ENDPOINT>"
EAS_TOKEN = "<EAS_TOKEN>"

url = f"{EAS_ENDPOINT}/v1/chat/completions"
headers = {
    "Content-Type": "application/json",
    "Authorization": EAS_TOKEN,
}

# Replace <model_name> with the model name obtained from the model list API <EAS_ENDPOINT>/v1/models.
model = "<model_name>"
stream = True
messages = [
    {"role": "user", "content": "Hello, please introduce yourself."},
]

req = {
    "messages": messages,
    "stream": stream,
    "temperature": 0.6,
    "top_p": 0.5,
    "top_k": 10,
    "max_tokens": 300,
    "model": model,
}
response = requests.post(
    url,
    json=req,
    headers=headers,
    stream=stream,
)
response.raise_for_status()

if stream:
    for chunk in response.iter_lines(chunk_size=8192, decode_unicode=False):
        msg = chunk.decode("utf-8")
        if msg.startswith("data:"):
            info = msg[len("data:"):].strip()
            if info == "[DONE]":
                break
            resp = json.loads(info)
            # The first and last chunks may carry no text.
            content = resp["choices"][0]["delta"].get("content")
            if content:
                print(content, end="", flush=True)
else:
    resp = response.json()
    print(resp["choices"][0]["message"]["content"])
```

BladeLLM accelerated deployment

```shell
curl -X POST \
    -H "Content-Type: application/json" \
    -H "Authorization: <EAS_TOKEN>" \
    -d '{
        "messages": [
            {
                "role": "user",
                "content": "hello!"
            }
        ],
        "max_tokens": 2048
    }' \
    <EAS_ENDPOINT>/v1/chat/completions
```

```python
import json

import requests

# Replace <EAS_ENDPOINT> with the endpoint of the deployed service and <EAS_TOKEN> with the token of the deployed service.
EAS_ENDPOINT = "<EAS_ENDPOINT>"
EAS_TOKEN = "<EAS_TOKEN>"

url = f"{EAS_ENDPOINT}/v1/chat/completions"
headers = {
    "Content-Type": "application/json",
    "Authorization": EAS_TOKEN,
}

stream = True
messages = [
    {"role": "user", "content": "Hello, please introduce yourself."},
]

# With BladeLLM, if max_tokens is not specified, the output is truncated to 16 tokens.
# Adjust max_tokens as needed.
req = {
    "messages": messages,
    "stream": stream,
    "temperature": 0.6,
    "top_p": 0.5,
    "top_k": 10,
    "max_tokens": 300,
}
response = requests.post(
    url,
    json=req,
    headers=headers,
    stream=stream,
)
response.raise_for_status()

if stream:
    for chunk in response.iter_lines(chunk_size=8192, decode_unicode=False):
        msg = chunk.decode("utf-8")
        if msg.startswith("data:"):
            info = msg[len("data:"):].strip()
            if info == "[DONE]":
                break
            resp = json.loads(info)
            content = resp["choices"][0]["delta"].get("content")
            if content:
                print(content, end="", flush=True)
else:
    resp = response.json()
    print(resp["choices"][0]["message"]["content"])
```

Different models and deployment frameworks behave differently when serving inference requests. For more detailed API call instructions, see the model's introduction page in Model Gallery.
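For reuse outside these scripts, the stream-parsing logic can be factored into a function that does not depend on requests. The sketch below uses only the Python standard library. It accepts the "data:" prefix with or without a trailing space, skips blank keep-alive lines, stops at [DONE], and ignores chunks whose delta carries no text, such as the first and final chunks. iter_content_deltas and stream_chat are hypothetical names, and the header format follows the examples in this topic. Note that the urllib timeout applies to each socket operation, not to the whole request; the gateway's own request timeout applies independently.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_content_deltas(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent-event lines."""
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # blank keep-alive lines, comments, or other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            return
        chunk = json.loads(payload)
        for choice in chunk.get("choices") or []:
            text = (choice.get("delta") or {}).get("content")
            if text:
                yield text


def stream_chat(endpoint: str, token: str, body: dict, timeout: float = 180.0) -> str:
    """POST a streaming chat request, print the text as it arrives, and return it."""
    req = urllib.request.Request(
        endpoint.rstrip("/") + "/v1/chat/completions",
        data=json.dumps({**body, "stream": True}).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": token},
        method="POST",
    )
    parts = []
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        for text in iter_content_deltas(resp):  # HTTPResponse iterates line by line
            print(text, end="", flush=True)
            parts.append(text)
    return "".join(parts)
```

Keeping the parser separate from the network call means it can be checked against a few sample lines without a running service.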

Build a local WebUI

Gradio is a user-friendly Python library for quickly creating interactive interfaces for machine learning models. Follow these steps to run a Gradio WebUI locally.

Download the code: Download the appropriate code based on the inference engine you selected during deployment. Use the GitHub link if you have stable network access to GitHub. Otherwise, use the OSS link.

vLLM, SGLang: vLLM/SGLang_github, vLLM/SGLang_oss

BladeLLM: BladeLLM_github, BladeLLM_oss

Prepare the environment: Python 3.10 or later is required. Install the dependencies:

```shell
pip install openai gradio
```

Start the web application: Run the following command in your terminal. Replace <EAS_ENDPOINT> and <EAS_TOKEN> with your service's endpoint and token.

```shell
python webui_client.py --eas_endpoint "<EAS_ENDPOINT>" --eas_token "<EAS_TOKEN>"
```

After the application starts successfully, a local URL (usually http://127.0.0.1:7860) is printed to your console. Open this URL in your browser to access the WebUI.

Integrate third-party applications

To connect to Chatbox, Dify, or Cherry Studio, see Integrate third-party clients.

Step 5: Clean up resources

For services deployed using Public Resources, billing starts from the moment the service is successfully created and is based on its runtime duration. To prevent further charges, stop or delete the service when it is no longer needed.

Return to the Job Management > Deployment Jobs page.

Find the service you want to stop and click Stop or Delete in the Actions column.

Stop: The service instance is released, and billing stops. The service configuration is saved, allowing you to restart it later.

Delete: Both the service configuration and the instance are permanently deleted.

Costs and risks

Cost breakdown

Services deployed using Public Resources are billed by the minute from creation (when the status becomes "Running") until they are stopped or deleted, with bills settled hourly. Billing continues even if the service is idle. Stopping the service stops the billing.

For more information, see Billing of Elastic Algorithm Service (EAS).

Cost control recommendations

Clean up promptly: After development and testing, Stop or Delete the service immediately to control costs.

Select an appropriate model: In non-production environments, prioritize using lower-cost distilled models.

Use preemptible resources: For non-production tasks, enable the preemptible mode during deployment. Note that certain conditions must be met for a successful bid, and there is a risk of resource instability.

Leverage long-term use discounts: For long-running production services, reduce costs by purchasing a savings plan or prepaid resources.

Key risks

Unexpected costs: Forgetting to stop a service results in continuous billing. Always clean up resources immediately after use.

Output truncation with BladeLLM: When using the BladeLLM engine, if max_tokens is not specified in the API request, the output is truncated to 16 tokens, which can lead to unexpected behavior or functional failure.

Incorrect API call specifications:

Including a system prompt in messages when calling DeepSeek-R1 series models may lead to unexpected behavior.

The API request URL must end with a path such as /v1/chat/completions; otherwise, the call returns a 404 error.

Resource availability: When deploying LLMs, especially full-version ones, high-end GPU resources in certain regions may have limited availability, leading to deployment failures or long waits. Try switching to other regions.

Model deployment FAQ

The service is stuck in a waiting state after I click deploy

Possible reasons include:

Insufficient machine resources in the current region.

The model is large, and loading it takes a long time. For LLMs like DeepSeek-R1 and DeepSeek-V3, this process can take 20-30 minutes.

You can wait and observe for a while. If the service still fails to start after an extended period, try the following steps:

Go to the Job Management > Deployment Jobs page to view the deployment task details. In the upper-right corner of the page, click More to navigate to the PAI-EAS model service details page and check the service instance status.

Stop the current service and switch to another region in the upper-left corner of the console to redeploy it.

Note: Ultra-large-parameter models such as DeepSeek-R1 and DeepSeek-V3 require an 8-GPU setup to start the service, and resource availability is limited. Consider deploying smaller distilled models such as DeepSeek-R1-Distill-Qwen-7B, for which resources are more readily available.

Model call FAQ

Why do I get a 404 error when calling the API?

Check if the OpenAI API suffix, such as v1/chat/completions, has been added to the URL you are calling. For details, refer to the API call instructions on the model's homepage.

If you use a vLLM accelerated deployment, check whether the model parameter in the request body of the chat API call is filled with the correct model name. You can get the model name by calling the /v1/models endpoint.

The request is too long and causes a gateway timeout

The default request timeout for the gateway used during deployment is 180 seconds. If you need to extend this timeout, configure a Dedicated Gateway and submit a ticket to adjust the request timeout for the Dedicated Gateway. The maximum adjustable timeout is 600 seconds.

Why is there no "web search" feature?

The "web search" feature cannot be implemented by only deploying a model service. You must build an AI application (Agent) based on the model service.

What should I do if the model skips the thinking process?

If you deploy the DeepSeek-R1 model and encounter situations where the model sometimes skips its thinking process, use the updated chat template from DeepSeek that forces thinking. To use it:

Modify the startup command.

As shown in the figure, edit the service configuration in the JSON Configuration editor. In the containers-script field, add "--chat-template /model_dir/template_force_thinking.jinja" (for example, after "--served-model-name DeepSeek-R1").

If the service is already deployed, go to Model Gallery > Job Management > Deployment Jobs, click the name of the deployed service, and then click Update service in the upper-right corner of the details page to access the same configuration page.

Modify the request body. In each request, append {"role": "assistant", "content": "<think>\n"} to the end of the messages array.
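The request-side half of this workaround can be wrapped in a small helper so it is applied the same way every time. A sketch, assuming the service has already been updated to use the force-thinking template as described above; with_forced_thinking is a hypothetical name. It returns a new list rather than modifying the caller's history, and it does not add a second prefill if one is already present.

```python
from typing import Dict, List

Message = Dict[str, str]

# Assistant prefill that opens the reasoning block, as described above.
THINK_PREFILL: Message = {"role": "assistant", "content": "<think>\n"}


def with_forced_thinking(messages: List[Message]) -> List[Message]:
    """Return a copy of messages that ends with the '<think>' assistant prefill."""
    if messages and messages[-1] == THINK_PREFILL:
        return list(messages)  # already prefilled; avoid stacking a second one
    return [*messages, dict(THINK_PREFILL)]
```

Pass the returned list as the messages field of the request body; keep storing the original list as the conversation history.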

Can I disable the thinking mode for DeepSeek-R1?

The DeepSeek-R1 series models do not currently support disabling the thinking process.

How do I implement a multi-turn conversation?

The model service itself does not save conversation history. The client application is responsible for storing the history and including it in subsequent requests to the model. The following is an example for a service deployed with SGLang.

```shell
curl -X POST \
    -H "Content-Type: application/json" \
    -H "Authorization: <EAS_TOKEN>" \
    -d '{
        "model": "<model_name>",
        "messages": [
            {
                "role": "user",
                "content": "Hello"
            },
            {
                "role": "assistant",
                "content": "Hello! I am glad to see you. What can I help you with?"
            },
            {
                "role": "user",
                "content": "What was my previous question?"
            }
        ]
    }' \
    <EAS_ENDPOINT>/v1/chat/completions
```
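On the client, keeping history amounts to appending each user turn and each assistant reply before the next call. The sketch below uses a hypothetical Conversation class that only builds request bodies, so any of the HTTP or SDK clients in this topic can send them. As an assumption, it keeps only the text after the last </think> in each stored reply, which shortens later requests and matches how DeepSeek's hosted API does not feed earlier reasoning back into the context; drop that step if your application needs the reasoning.

```python
from typing import Dict, List


class Conversation:
    """Client-side chat history for an OpenAI-compatible /v1/chat/completions service."""

    def __init__(self, model: str):
        self.model = model
        self.messages: List[Dict[str, str]] = []

    def request_for(self, user_text: str) -> dict:
        """Record a user turn and return a request body containing the full history."""
        self.messages.append({"role": "user", "content": user_text})
        # Copy the list so a body that was already sent is not changed later.
        return {"model": self.model, "messages": list(self.messages)}

    def record_reply(self, reply: str) -> None:
        """Store the assistant reply, keeping only the text after any '</think>'."""
        answer = reply.split("</think>")[-1].strip()
        self.messages.append({"role": "assistant", "content": answer})
```

For example, call request_for("Hello"), send the body, pass the reply text to record_reply, then call request_for("What was my previous question?") to get a body with the same three-message structure as the curl example above.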