Qwen3 is the latest large language model (LLM) series from Alibaba Cloud's Qwen team, released on April 29, 2025. The series includes two Mixture-of-Experts (MoE) models and six Dense models. Extensive training gives these models breakthrough performance in reasoning, instruction following, agent capabilities, and multilingual support. PAI-Model Gallery offers all eight model sizes, including their Base and 8-bit floating point (FP8) versions, for a total of 22 models. This topic describes how to deploy, fine-tune, and evaluate these models in the Model Gallery.

Model deployment and invocation

Model deployment

This section provides an example of deploying the Qwen3-235B-A22B model with SGLang.

- Go to the Model Gallery page.

  - Log on to the PAI console. In the upper-left corner, select a region that has available compute resources.

  - In the left navigation pane, select Workspace List, and click the name of the workspace you want to enter.

  - In the left navigation pane, choose QuickStart > Model Gallery.

- On the Model Gallery page, find and click the Qwen3-235B-A22B model card to view the model details.

- In the upper-right corner, click Deploy. Configure the following parameters and keep the default settings for the others to deploy the model to the PAI-EAS inference service platform.

  - Deployment Method: Set Inference Engine to SGLang and Deployment Template to Single Machine.

  - Resource Information: For Resource Type, select Public Resources. Recommended specifications are provided. For the minimum configuration required by the model, see Required computing power and supported token count for deployment.

  Important: If no resource specifications are available, the public resources in the region are out of stock. You can try the following solutions:

  - Switch regions. For example, the China (Ulanqab) region has a large inventory of Lingjun preemptible resources (ml.gu7ef.8xlarge-gu100, ml.gu7xf.8xlarge-gu108, ml.gu8xf.8xlarge-gu108, ml.gu8tf.8.40xlarge). Preemptible resources may be reclaimed, so monitor your bid.

  - Use an EAS resource group. You can go to EAS Subscription for Dedicated Resources to purchase dedicated EAS resources.
Online debugging

At the bottom of the Service Details page, click Online Debugging, as shown in the following figure.

API call

- Retrieve the service endpoint and token.

  - Go to Model Gallery > Task Management > Deployment. Click the name of the deployed service to view its details.

  - Click View Endpoint Information to obtain the Internet Endpoint and token.

- The following examples show how to call the /v1/chat/completions chat API for an SGLang deployment. Replace <EAS_ENDPOINT> with the service endpoint, <EAS_TOKEN> with the service token, and <MODEL_NAME> with the model name obtained from the /v1/models API.

  curl:

      curl -X POST \
        -H "Content-Type: application/json" \
        -H "Authorization: <EAS_TOKEN>" \
        -d '{
          "model": "<MODEL_NAME>",
          "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "hello!"}
          ]
        }' \
        <EAS_ENDPOINT>/v1/chat/completions

  Python:

      from openai import OpenAI

      ##### API Configuration #####
      # Replace <EAS_ENDPOINT> with the service endpoint and <EAS_TOKEN> with the service token.
      openai_api_key = "<EAS_TOKEN>"
      openai_api_base = "<EAS_ENDPOINT>/v1"

      client = OpenAI(
          api_key=openai_api_key,
          base_url=openai_api_base,
      )

      # Use the first model that the service reports.
      models = client.models.list()
      model = models.data[0].id
      print(model)

      stream = True
      chat_completion = client.chat.completions.create(
          messages=[
              {"role": "user", "content": "Hello, please introduce yourself."}
          ],
          model=model,
          max_completion_tokens=2048,
          stream=stream,
      )

      if stream:
          for chunk in chat_completion:
              # A delta may carry no text (for example, the first or last chunk).
              print(chunk.choices[0].delta.content or "", end="")
      else:
          result = chat_completion.choices[0].message.content
          print(result)

The invocation method varies based on the deployment method. For more information about API calls, see LLM API calls.
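When streaming is enabled, the reply arrives as a sequence of deltas that the client has to join. The following is a minimal sketch of that step, not part of the PAI documentation: `collect_stream` and `fake_chunk` are hypothetical helper names, and the fake chunks only imitate the `choices[0].delta.content` shape used in the example above so that the logic can be run without a deployed service.

```python
from types import SimpleNamespace


def collect_stream(chunks):
    """Join the text deltas of a streamed chat completion into one string."""
    parts = []
    for chunk in chunks:
        # Some chunks may carry no choices at all; skip them.
        if not chunk.choices:
            continue
        text = getattr(chunk.choices[0].delta, "content", None)
        # A delta without text (None or "") contributes nothing.
        if text:
            parts.append(text)
    return "".join(parts)


def fake_chunk(text):
    """Hypothetical stand-in for one streamed chunk, used only for this demo."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


demo_chunks = [
    fake_chunk(None),
    fake_chunk("Hel"),
    fake_chunk("lo"),
    SimpleNamespace(choices=[]),
]
full_text = collect_stream(demo_chunks)
print(full_text)
```

With a real service, the iterable returned by `client.chat.completions.create(..., stream=True)` would be passed in place of `demo_chunks`.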

Integrate third-party applications

To connect to Chatbox, Dify, or Cherry Studio, see Integrate third-party clients.

Advanced configuration

You can modify the service's JSON configuration to enable advanced features, such as adjusting the token limit or enabling tool calling (Function Calling).

Procedure: On the deployment page, go to the Service Configuration section and edit the JSON. If the service is already deployed, update it to access the deployment page.

Modify the token limit

Qwen3 models natively support a token length of 32,768. You can use RoPE scaling technology to support a maximum token length of 131,072, but this may cause some performance loss. To do this, modify the containers.script field in the service configuration JSON file as follows:

- vLLM:

      vllm serve ... --rope-scaling '{"rope_type":"yarn","factor":4.0,"original_max_position_embeddings":32768}' --max-model-len 131072

- SGLang:

      python -m sglang.launch_server ... --json-model-override-args '{"rope_scaling":{"rope_type":"yarn","factor":4.0,"original_max_position_embeddings":32768}}'
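The JSON arguments in the two commands above can be generated rather than typed by hand. The sketch below assumes that the YaRN `factor` is the ratio of the target length to the native length, which matches the value 4.0 used above (131,072 / 32,768). The function name `yarn_rope_scaling` is hypothetical.

```python
import json

NATIVE_CONTEXT = 32768  # native Qwen3 token length stated in this section


def yarn_rope_scaling(target_len, native_len=NATIVE_CONTEXT):
    """Build the rope-scaling dict for a target context length.

    Assumption: factor = target_len / native_len.
    """
    if target_len <= native_len:
        raise ValueError("RoPE scaling is only needed beyond the native length")
    return {
        "rope_type": "yarn",
        "factor": target_len / native_len,
        "original_max_position_embeddings": native_len,
    }


cfg = yarn_rope_scaling(131072)

# Value for vLLM's --rope-scaling flag.
vllm_arg = json.dumps(cfg, separators=(",", ":"))
# Value for SGLang's --json-model-override-args flag.
sglang_arg = json.dumps({"rope_scaling": cfg}, separators=(",", ":"))

print(vllm_arg)
print(sglang_arg)
```

The printed strings are meant to be wrapped in single quotes when pasted into the containers.script field.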

Parse tool calls

vLLM and SGLang support parsing the tool call content generated by the model into a structured message. To enable this, modify the containers.script field in the service configuration JSON file as follows:

- vLLM:

      vllm serve ... --enable-auto-tool-choice --tool-call-parser hermes

- SGLang:

      python -m sglang.launch_server ... --tool-call-parser qwen25
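Once tool-call parsing is enabled, the client receives tool calls as structured data instead of raw text. The following sketch shows one way a client might handle such a call, assuming the OpenAI-compatible function-calling message shape. The tool `get_weather`, the `REGISTRY` mapping, and the sample call are all hypothetical and are hard-coded here so the example runs without a deployed service.

```python
import json


def get_weather(city):
    """Hypothetical local tool; a real one would query a weather service."""
    return {"city": city, "forecast": "sunny"}


# Tool schema to send in the `tools` field of a chat completion request.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Return the weather forecast for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]

# Maps tool names to the Python callables that implement them.
REGISTRY = {"get_weather": get_weather}


def run_tool_call(tool_call):
    """Execute one structured tool call and build the `tool` reply message."""
    fn = REGISTRY[tool_call["function"]["name"]]
    # Arguments arrive as a JSON-encoded string.
    args = json.loads(tool_call["function"]["arguments"])
    result = fn(**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }


# Assumed example of a parsed tool call taken from an assistant message.
sample_call = {
    "id": "call_0",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Hangzhou"}'},
}
reply = run_tool_call(sample_call)
print(reply)
```

The returned message would be appended to the conversation and sent back to the model so that it can produce the final answer.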

Control the thinking mode

Qwen3 uses thinking mode by default. You can control this feature with a hard switch to completely disable thinking or a soft switch where the model follows user instructions on whether to think.

Use the soft switch /no_think

The following code provides a sample request body:

    {
      "model": "<MODEL_NAME>",
      "messages": [
        {
          "role": "user",
          "content": "/no_think Hello!"
        }
      ],
      "max_tokens": 1024
    }

Use the hard switch

-

Control with an API parameter (for vLLM and SGLang): Add the

chat_template_kwargsparameter to the API call. The following code provides an example:curl -X POST \ -H "Content-Type: application/json" \ -H "Authorization: <EAS_TOKEN>" \ -d '{ "model": "<MODEL_NAME>", "messages": [ { "role": "user", "content": "Give me a short introduction to large language models." } ], "temperature": 0.7, "top_p": 0.8, "max_tokens": 8192, "presence_penalty": 1.5, "chat_template_kwargs": {"enable_thinking": false} }' \ <EAS_ENDPOINT>/v1/chat/completionsfrom openai import OpenAI # # Replace <EAS_ENDPOINT> with the service endpoint and <EAS_TOKEN> with the service token. openai_api_key = "<<EAS_TOKEN>" openai_api_base = "<EAS_ENDPOINT>/v1" client = OpenAI( api_key=openai_api_key, base_url=openai_api_base, ) chat_response = client.chat.completions.create( model="<MODEL_NAME>", messages=[ {"role": "user", "content": "Give me a short introduction to large language models."}, ], temperature=0.7, top_p=0.8, presence_penalty=1.5, extra_body={"chat_template_kwargs": {"enable_thinking": False}}, ) print("Chat response:", chat_response)Replace <EAS_ENDPOINT> with the service endpoint, <EAS_TOKEN> with the service token, and <MODEL_NAME> with the actual model name retrieved from the

/v1/modelsAPI. -

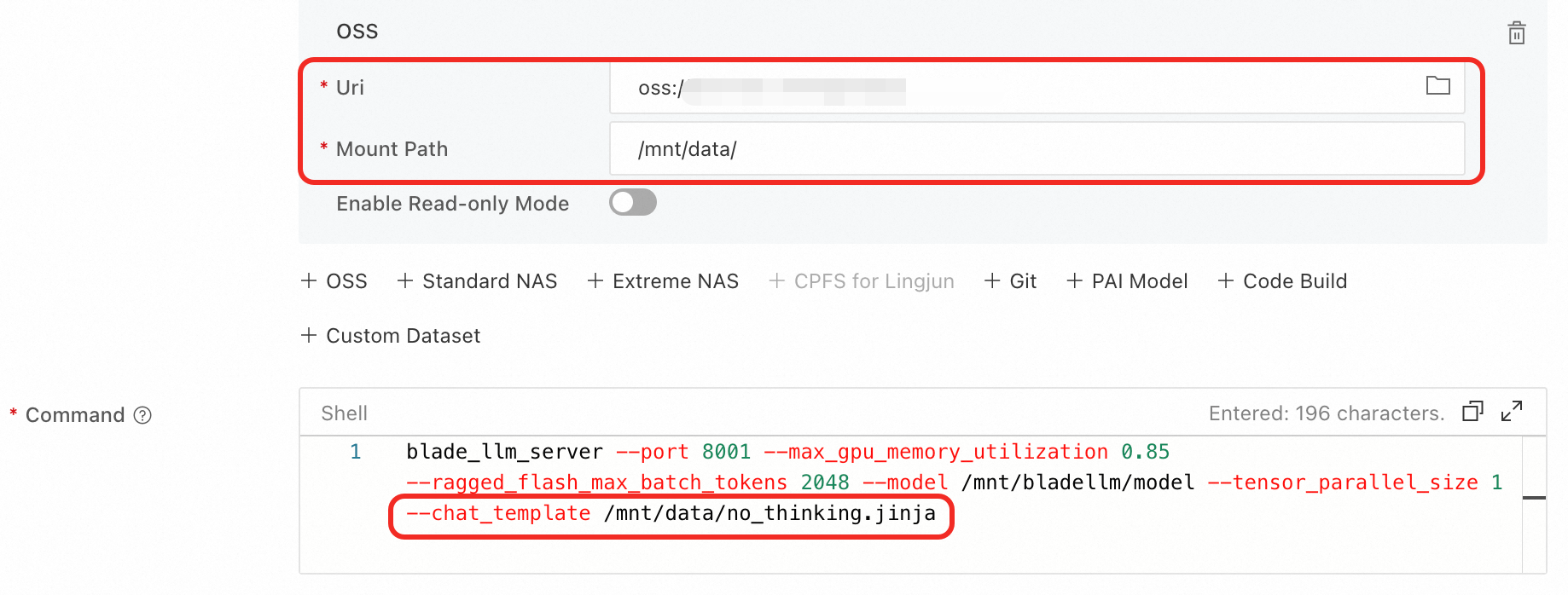

Disable by modifying the service configuration (for BladeLLM): You can use a chat template that prevents the model from generating thinking content when the model starts.

-

On the model's introduction page in the Model Gallery, check if a method is provided to disable thinking mode for BladeLLM. For example, with Qwen3-8B, you can disable thinking mode by modifying the

containers.scriptfield in the service configuration JSON file as follows:blade_llm_server ... --chat_template /model_dir/no_thinking.jinja -

You can write your own chat template, such as

no_thinking.jinja, mount it from OSS for reading, and modify thecontainers.scriptfield in the service configuration JSON file.

-
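The soft and hard switches described above differ only in where the instruction is placed in the request body. The sketch below builds a raw request body for either case. The function name `build_request` and its parameters are hypothetical; the `/no_think` prefix and the `chat_template_kwargs` field are taken from the examples in this section.

```python
def build_request(model, prompt, thinking=True, switch="hard"):
    """Build a /v1/chat/completions request body with thinking on or off.

    switch="soft": prefix the user message with /no_think.
    switch="hard": set chat_template_kwargs.enable_thinking to False
                   (for vLLM and SGLang deployments).
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking:
        # Thinking is the default, so nothing needs to be added.
        return body
    if switch == "soft":
        body["messages"][0]["content"] = "/no_think " + prompt
    elif switch == "hard":
        body["chat_template_kwargs"] = {"enable_thinking": False}
    else:
        raise ValueError("switch must be 'soft' or 'hard'")
    return body


soft = build_request("<MODEL_NAME>", "Hello!", thinking=False, switch="soft")
hard = build_request("<MODEL_NAME>", "Hello!", thinking=False, switch="hard")
print(soft)
print(hard)
```

When the OpenAI Python client is used instead of a raw HTTP request, the `chat_template_kwargs` field goes into `extra_body`, as in the Python example above.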

Parse thinking content

To output the "think" part separately, modify the containers.script field in the service configuration JSON file as follows:

- vLLM:

      vllm serve ... --enable-reasoning --reasoning-parser qwen3

- SGLang:

      python -m sglang.launch_server ... --reasoning-parser deepseek-r1

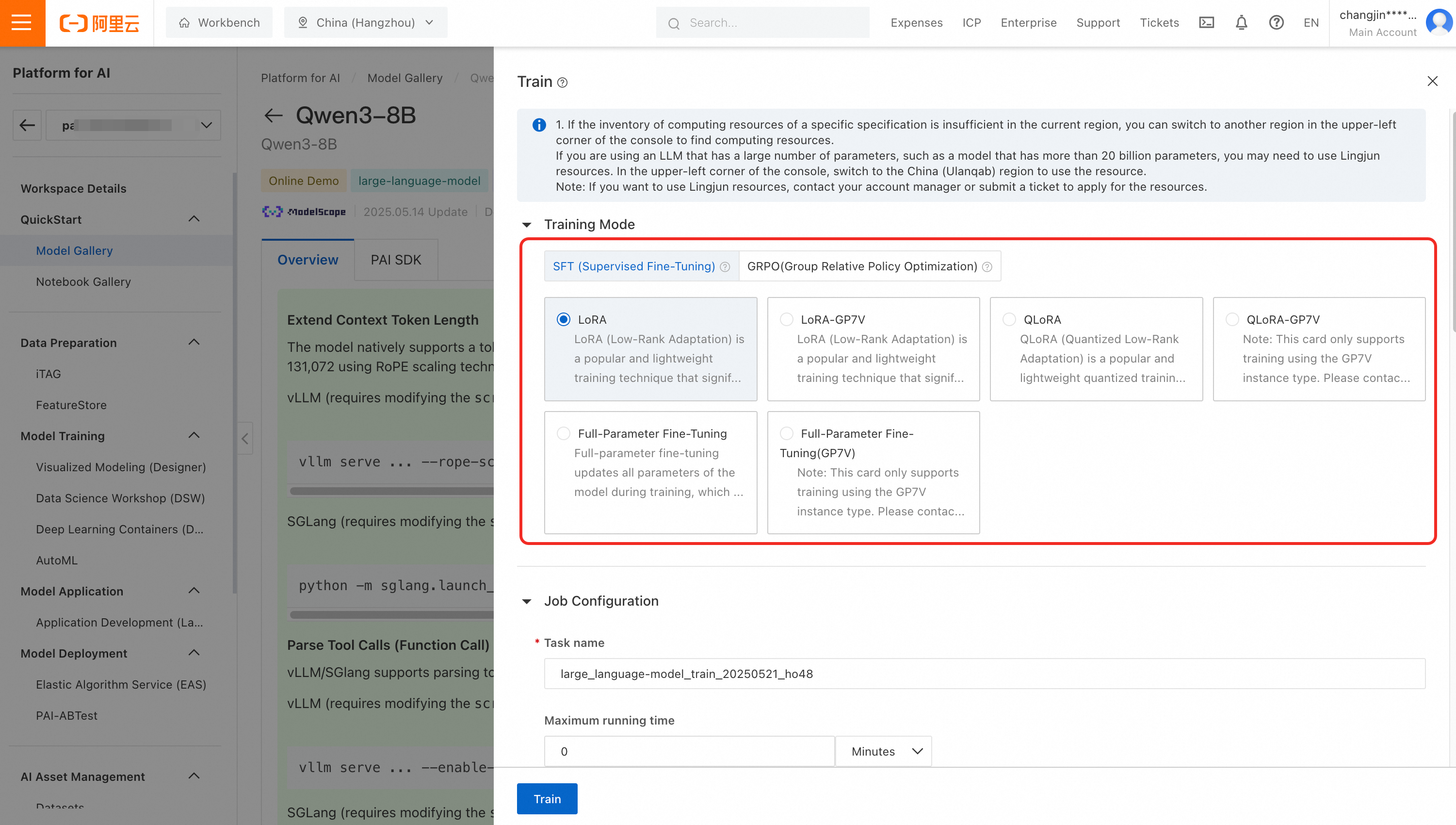
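If the reasoning parser is not enabled on the server, the thinking text arrives inline in the message content. A client can separate it as a fallback. This is a minimal sketch, assuming the model wraps its reasoning in `<think>...</think>` tags; the function name `split_thinking` and the sample text are hypothetical.

```python
import re

# Matches the first <think>...</think> block, including newlines inside it.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(text):
    """Return (thinking, answer) from a raw model reply."""
    match = THINK_RE.search(text)
    if match is None:
        # No thinking block: the whole reply is the answer.
        return "", text.strip()
    thinking = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return thinking, answer


raw = "<think>\nThe user greets me.\n</think>\n\nHello! How can I help?"
thinking, answer = split_thinking(raw)
print("thinking:", thinking)
print("answer:", answer)
```

Server-side parsing remains the simpler option when it is available, because the client then does not depend on the exact tag format.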

Model fine-tuning

- The Qwen3-32B, 14B, 8B, 4B, 1.7B, and 0.6B models support Supervised Fine-Tuning (SFT) (full-parameter, LoRA, or QLoRA) and GRPO training.

- You can submit training tasks with one click to train models specific to your business scenarios.

Model evaluation

For detailed instructions on model evaluation, see Model evaluation and Best practices for LLM evaluation.

Appendix: Required computing power and supported token count for deployment

The following table lists the minimum configuration required for Qwen3 deployment and the maximum number of tokens supported on different inference frameworks when using various instance types.

Among the FP8 models, only the Qwen3-235B-A22B model has reduced computing power requirements compared to the original model. The computing power requirements for other FP8 models are the same as their non-FP8 counterparts and are therefore not listed in the table. For example, to find the computing power required by Qwen3-30B-A3B-FP8, refer to Qwen3-30B-A3B.

| Model | Maximum number of tokens supported (input + output): SGLang accelerated deployment | Maximum number of tokens supported (input + output): vLLM accelerated deployment | Minimum configuration |
| --- | --- | --- | --- |
| Qwen3-235B-A22B | 32768 (with RoPE scaling: 131072) | 32768 (with RoPE scaling: 131072) | 8 × GPU H / GU120 (8 × 96 GB VRAM) |
| Qwen3-235B-A22B-FP8 | 32768 (with RoPE scaling: 131072) | 32768 (with RoPE scaling: 131072) | 4 × GPU H / GU120 (4 × 96 GB VRAM) |
| Qwen3-30B-A3B, Qwen3-30B-A3B-Base, Qwen3-32B | 32768 (with RoPE scaling: 131072) | 32768 (with RoPE scaling: 131072) | 1 × GPU H / GU120 (96 GB VRAM) |
| Qwen3-14B, Qwen3-14B-Base | 32768 (with RoPE scaling: 131072) | 32768 (with RoPE scaling: 131072) | 1 × GPU L / GU60 (48 GB VRAM) |
| Qwen3-8B, Qwen3-4B, Qwen3-1.7B, Qwen3-0.6B, Qwen3-8B-Base, Qwen3-4B-Base, Qwen3-1.7B-Base, Qwen3-0.6B-Base | 32768 (with RoPE scaling: 131072) | 32768 (with RoPE scaling: 131072) | 1 × A10 / GU30 (24 GB VRAM). Important: The 8B model requires 48 GB of VRAM when RoPE scaling is enabled. |
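A rough weights-only estimate helps explain why the minimum configurations differ. The sketch below is not the method used to produce the table. It assumes 2 bytes per parameter for the original models and 1 byte per parameter for FP8, and it uses the nominal parameter counts from the model names. It ignores the KV cache and activations, so it gives a lower bound only. That is consistent with the note that the 8B model needs more VRAM when RoPE scaling extends the context.

```python
def weight_memory_gb(params_billion, bytes_per_param):
    """Approximate weight size in GB (1e9 params * bytes / 1e9 bytes per GB)."""
    return params_billion * bytes_per_param


# (model, nominal params in billions, assumed bytes per param, GPUs, GB per GPU)
CASES = [
    ("Qwen3-235B-A22B", 235, 2, 8, 96),
    ("Qwen3-235B-A22B-FP8", 235, 1, 4, 96),
    ("Qwen3-32B", 32, 2, 1, 96),
    ("Qwen3-14B", 14, 2, 1, 48),
    ("Qwen3-8B", 8, 2, 1, 24),
]

report = []
for name, params_b, bytes_pp, gpus, vram_gb in CASES:
    need = weight_memory_gb(params_b, bytes_pp)
    total = gpus * vram_gb
    report.append((name, need, total, need < total))
    print(f"{name}: weights ~{need} GB, available {total} GB")
```

In each case the weights fit with room to spare; the remaining memory is what serves the KV cache, which grows with the number of tokens.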

FAQ

Q: Do model services deployed on PAI support session functionality (maintaining context between multiple requests)?

No, they do not. The model service API deployed on PAI is stateless. Each call is independent, and the server does not retain any context or session state between requests.

To implement a multi-turn conversation, the client must save the conversation history and include it in subsequent model invocation requests. For a request example, see How do I implement a multi-turn conversation?
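Because the service is stateless, the client carries the whole history. The following is a minimal sketch of that pattern, not the referenced request example. The class `Conversation` and the `echo_backend` function are hypothetical; `echo_backend` stands in for a real call to /v1/chat/completions so that the example runs offline.

```python
class Conversation:
    """Keeps the message history on the client and resends it on every turn."""

    def __init__(self, send, system_prompt=None):
        # `send` takes the full message list and returns the assistant's text.
        self.send = send
        self.messages = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def ask(self, user_text):
        self.messages.append({"role": "user", "content": user_text})
        # Pass a copy so the backend cannot mutate the stored history.
        reply = self.send(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def echo_backend(messages):
    """Hypothetical backend that reports how many messages it received."""
    return f"seen {len(messages)} message(s)"


chat = Conversation(echo_backend, system_prompt="You are a helpful assistant.")
first = chat.ask("Hello!")
second = chat.ask("What did I just say?")
print(first)
print(second)
```

With a real deployment, `send` would wrap `client.chat.completions.create(model=..., messages=messages)` and return `choices[0].message.content`.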