Machine Learning Designer supports one-click pipeline deployment. After you package a batch data-processing pipeline that implements data pre-processing, feature engineering, and model prediction as a pipeline model, you can deploy it to Elastic Algorithm Service (EAS) as an online service.

Limits

You can add only Alink algorithm components to such a pipeline. They are marked with a small purple circle.

Every paired training and prediction component involved in the model to be deployed must have run successfully. Components that ran successfully are marked with a green check. For example, to deploy a linear regression model as an online service, both the Linear Regression Training and Linear Regression Prediction components must have run successfully.

An online service accepts only a single input and produces only a single output. Therefore, you can select only one serial link from the directed acyclic graph (DAG) of the batch model that you want to deploy.
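The single-serial-link constraint can be illustrated with a short sketch. The code below is not how Designer validates a selection; it is a minimal illustration that assumes the pipeline is given as an adjacency mapping of hypothetical node names and that ignores the model inputs that training components feed into prediction components. It checks that the selected nodes form exactly one chain with one entry point and no branches.

```python
from typing import Dict, List, Set


def is_serial_link(edges: Dict[str, List[str]], selected: Set[str]) -> bool:
    """Return True if `selected` forms one linear chain inside the DAG."""
    if not selected:
        return False

    # Keep only the edges that connect two selected nodes.
    succ = {n: [m for m in edges.get(n, []) if m in selected] for n in selected}
    pred: Dict[str, List[str]] = {n: [] for n in selected}
    for n, outs in succ.items():
        for m in outs:
            pred[m].append(n)

    # A chain has no fan-out and no fan-in.
    if any(len(succ[n]) > 1 or len(pred[n]) > 1 for n in selected):
        return False

    # A chain has exactly one entry point (the single input).
    heads = [n for n in selected if not pred[n]]
    if len(heads) != 1:
        return False

    # Walking from the entry point must visit every selected node.
    visited = 0
    node = heads[0]
    while node is not None:
        visited += 1
        node = succ[node][0] if succ[node] else None
    return visited == len(selected)


# Hypothetical pipeline: the node names are illustrative only.
pipeline = {
    "normalization": ["one_hot_encoding", "statistics"],
    "one_hot_encoding": ["vector_assembler"],
    "vector_assembler": ["fm_prediction"],
    "fm_prediction": [],
    "statistics": [],
}

chain = {"normalization", "one_hot_encoding", "vector_assembler", "fm_prediction"}
print(is_serial_link(pipeline, chain))                   # serial link
print(is_serial_link(pipeline, chain | {"statistics"}))  # branch, not serial
```

In this sketch, adding the `statistics` node creates a second output from `normalization`, so the selection no longer qualifies as a single serial link.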

Prerequisites

A batch data-processing pipeline that implements data pre-processing, feature engineering, and model prediction is created and successfully run. For more information, see Build a model.

Procedure

Go to the Machine Learning Designer page.

Log on to the Machine Learning Platform for AI console.

In the left-side navigation pane, click Workspaces. On the Workspaces page, click the name of the workspace that you want to manage.

In the left-side navigation pane, navigate to the Machine Learning Designer page.

On the Pipelines tab, double-click the pipeline that you want to deploy to open it.

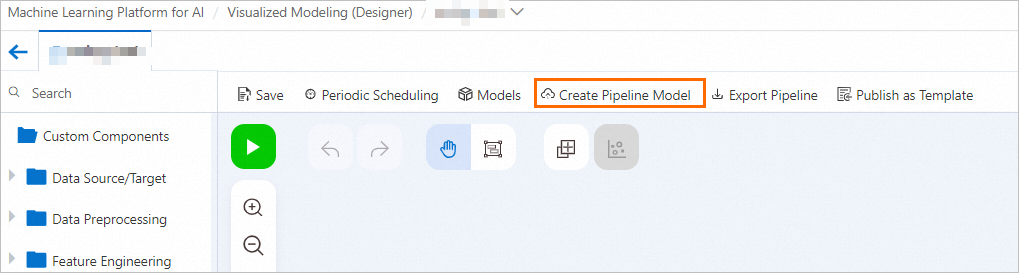

In the top navigation bar of the canvas, choose Create Pipeline Model.

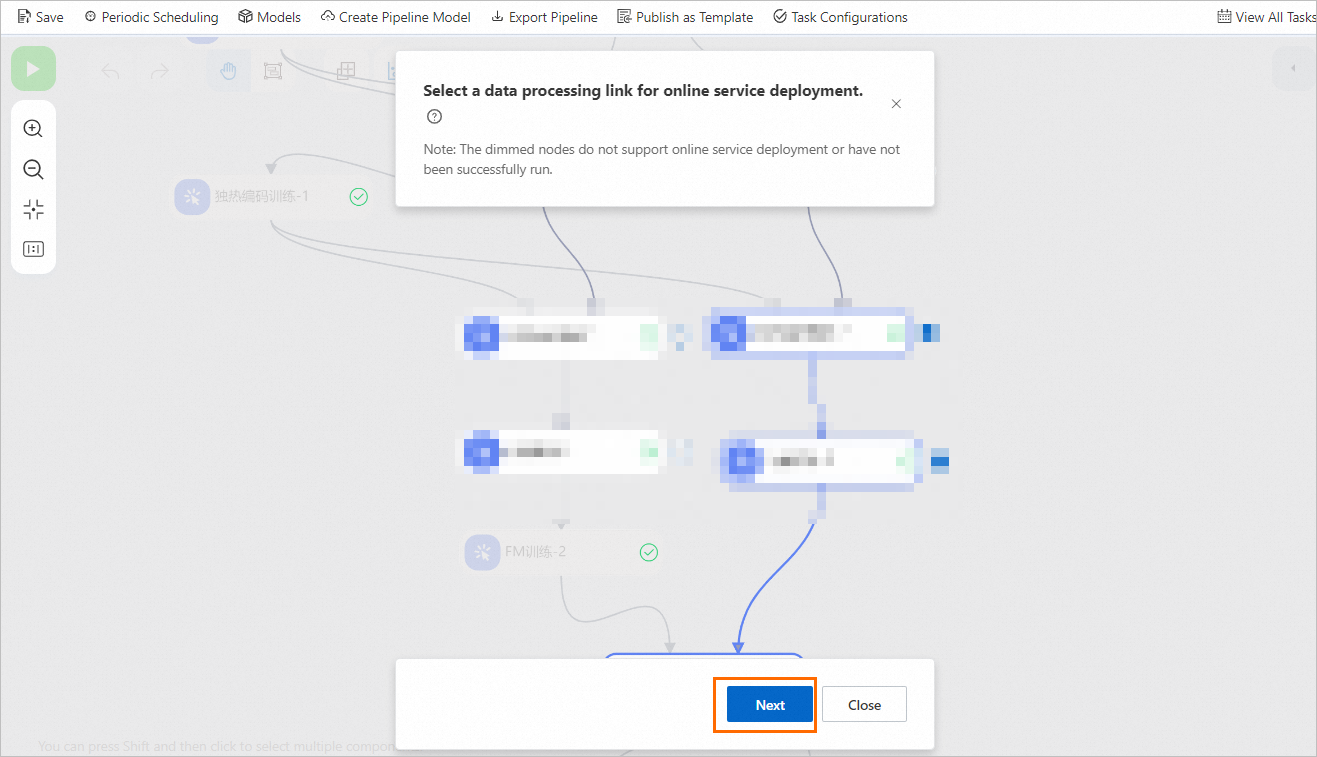

Select the nodes of the pipeline and click Next.

Select available nodes to form a serial data processing link, which usually consists of one or more prediction components. For example, data is normalized, one-hot encoded, vector-aggregated, and then submitted to the FM Prediction component for prediction.

If you select a node that can form a serial link with its downstream or upstream nodes, all the downstream or upstream nodes are automatically selected.

If you deselect a node, all the automatically selected nodes are also deselected.

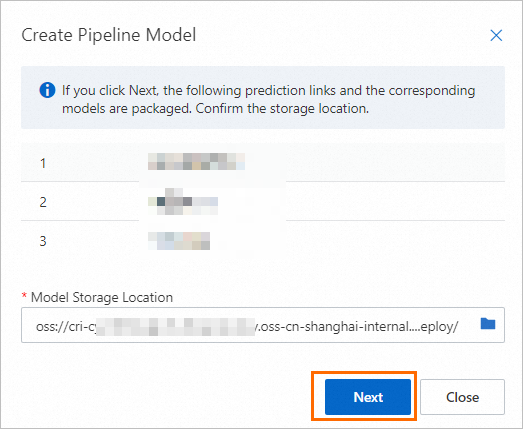
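The automatic selection behavior described above can be pictured with the following sketch. It is only one possible interpretation, not the logic that Designer actually uses: it assumes a hypothetical adjacency mapping of node names and extends the selection upstream and downstream from the clicked node for as long as the neighboring node is unambiguous.

```python
from typing import Dict, List, Set


def expand_selection(edges: Dict[str, List[str]], start: str) -> Set[str]:
    """Grow a selection from `start` along unambiguous serial connections."""
    # Build the reverse adjacency (node -> list of upstream nodes).
    preds: Dict[str, List[str]] = {}
    for src, dsts in edges.items():
        preds.setdefault(src, [])
        for dst in dsts:
            preds.setdefault(dst, []).append(src)

    selected = {start}

    # Extend downstream while there is exactly one successor
    # and that successor has exactly one predecessor.
    node = start
    while len(edges.get(node, [])) == 1:
        nxt = edges[node][0]
        if len(preds.get(nxt, [])) != 1:
            break
        selected.add(nxt)
        node = nxt

    # Extend upstream while there is exactly one predecessor
    # and that predecessor has exactly one successor.
    node = start
    while len(preds.get(node, [])) == 1:
        prv = preds[node][0]
        if len(edges.get(prv, [])) != 1:
            break
        selected.add(prv)
        node = prv

    return selected


# Hypothetical pipeline: the node names are illustrative only.
dag = {
    "normalization": ["one_hot_encoding", "statistics"],
    "one_hot_encoding": ["vector_assembler"],
    "vector_assembler": ["fm_prediction"],
    "fm_prediction": [],
    "statistics": [],
}

print(sorted(expand_selection(dag, "vector_assembler")))
```

Under these assumptions, clicking `vector_assembler` also selects `one_hot_encoding` and `fm_prediction`, but stops at `normalization` because that node has two downstream nodes.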

In the Create Pipeline Model dialog box, click Next to start packaging.

The data prediction link and the models generated by the link are automatically packaged to produce a pipeline model. During the process, a batch task whose name starts with model-combination- is launched.

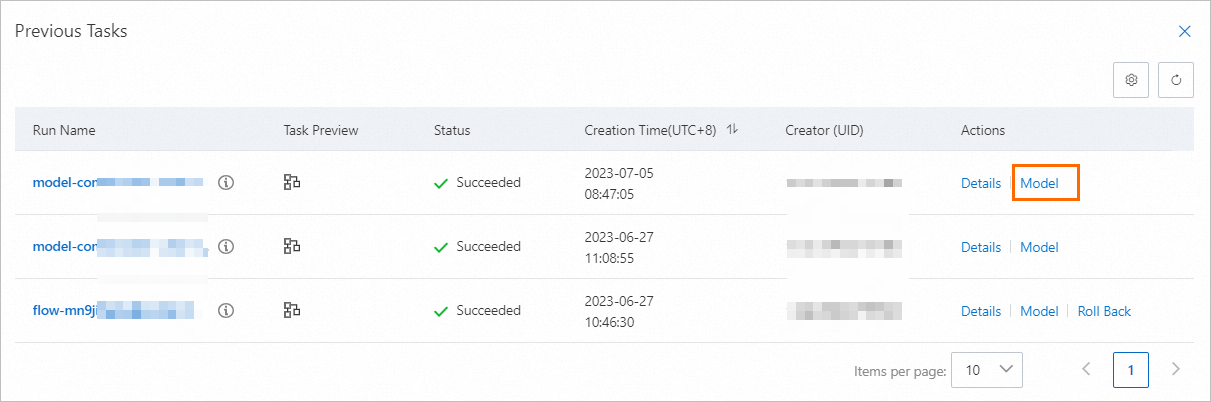

In the top navigation bar of the canvas, click View All Tasks. In the Previous Tasks dialog box, find the task and view its progress. The task takes about 3 to 5 minutes to complete. After the task state changes to Succeeded, you can continue.

In the Previous Tasks dialog box, find the task and click Model in the Actions column to deploy the pipeline model with a few clicks.

You are directed to the EAS-Online Model Services page. For more information about how to deploy a model, see Model service deployment by using the PAI console and Machine Learning Designer.