Logtail provides plug-ins for data processing to parse raw logs into structured data.

Background information

Logtail plug-ins for data processing are classified into native plug-ins and extended plug-ins.

Native plug-ins provide high performance and are suitable for most business scenarios. We recommend that you use native plug-ins.

Extended plug-ins provide more features. If you cannot process complex business logs by using native plug-ins, you can use extended plug-ins to parse the logs. However, system performance may be compromised in this case.

Limits

Limits on performance

If you use extended plug-ins to process logs, Logtail consumes more resources. Most of the resources are CPU resources. You can modify the Logtail parameter settings based on your business requirements. For more information, see Configure the startup parameters of Logtail.

If raw logs are generated at a speed that exceeds 5 MB/s, we recommend that you do not use complicated combinations of plug-ins to process logs. You can use extended plug-ins to preliminarily process logs, and then use the data transformation feature to further process the logs. For more information, see Data transformation.

Limits on log collection

Extended plug-ins use the line mode to process text logs. In this mode, the metadata of files, such as the __tag__:__path__ and __topic__ fields, is stored in each log.

If you add extended plug-ins to process logs, the following limits apply to tag-related features:

The contextual query and LiveTail features are unavailable. If you want to use the features, you must add the aggregators configuration.

The __topic__ field is renamed to __log_topic__. After you add the aggregators configuration, logs contain the __topic__ and __log_topic__ fields. If you do not require the __log_topic__ field, you can use the processor_drop plug-in to delete the field. For more information, see processor_drop.

For tag fields such as __tag__:__path__, the original field indexes no longer take effect. You must recreate indexes for the fields. For more information, see Create indexes.
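
The tag-related limits above can be illustrated with a plug-in configuration. The following Python sketch builds such a configuration as a dictionary and prints it as JSON. Only the processor_drop plug-in name and the __log_topic__ field come from the text. The `DropKeys` parameter, the `aggregator_context` type, the `processors`/`aggregators` layout, and the `build_plugin_config` helper are assumptions made for illustration and should be checked against the plug-in reference before use.

```python
import json


def build_plugin_config(drop_log_topic=True):
    """Build a hypothetical Logtail plug-in configuration as a dict."""
    config = {
        "processors": [],
        # Assumption: an aggregators entry of this shape is what restores
        # the contextual query and LiveTail features.
        "aggregators": [{"type": "aggregator_context", "detail": {}}],
    }
    if drop_log_topic:
        # Assumption: processor_drop takes the field names in "DropKeys".
        config["processors"].append(
            {"type": "processor_drop", "detail": {"DropKeys": ["__log_topic__"]}}
        )
    return config


if __name__ == "__main__":
    print(json.dumps(build_plugin_config(), indent=2))
```

Passing `drop_log_topic=False` keeps both the __topic__ and __log_topic__ fields in the collected logs.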

Limits on plug-in combinations

For plug-ins of Logtail earlier than V2.0:

You cannot add native plug-ins and extended plug-ins at the same time.

You can use native plug-ins only to collect text logs. When you add native plug-ins, take note of the following items:

You must add one of the following Logtail plug-ins for data processing as the first plug-in: Data Parsing (Regex Mode), Data Parsing (Delimiter Mode), Data Parsing (JSON Mode), Data Parsing (NGINX Mode), Data Parsing (Apache Mode), or Data Parsing (IIS Mode).

After you add the first plug-in, you can add one Time Parsing plug-in, one Data Filtering plug-in, and multiple Data Masking plug-ins.

For plug-ins of Logtail V2.0: You can add extended plug-ins only after you add native plug-ins.
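
The combination rules above can be expressed as a small check. The following Python sketch is not part of Logtail; the `check_plugins` function is hypothetical. It assumes that any name outside the native plug-in list is an extended plug-in, and it reads the V2.0 rule as "no native plug-in may follow an extended plug-in", which is one interpretation of the text.

```python
from collections import Counter

PARSERS = {
    "Data Parsing (Regex Mode)",
    "Data Parsing (Delimiter Mode)",
    "Data Parsing (JSON Mode)",
    "Data Parsing (NGINX Mode)",
    "Data Parsing (Apache Mode)",
    "Data Parsing (IIS Mode)",
}
# Maximum count of each follow-up native plug-in; None means no limit.
FOLLOW_UPS = {"Time Parsing": 1, "Data Filtering": 1, "Data Masking": None}
NATIVE = PARSERS | set(FOLLOW_UPS)


def check_plugins(names, v2):
    """Return a list of rule violations for an ordered list of plug-in names."""
    errors = []
    is_native = [name in NATIVE for name in names]
    if v2:
        # Assumed reading of the V2.0 rule: native plug-ins come first.
        seen_extended = False
        for name, native in zip(names, is_native):
            if native and seen_extended:
                errors.append(f"native plug-in {name!r} follows an extended plug-in")
            seen_extended = seen_extended or not native
        return errors
    # Rules for Logtail earlier than V2.0.
    if not any(is_native):
        return errors  # extended plug-ins only
    if not all(is_native):
        errors.append("native and extended plug-ins cannot be added together")
    if names[0] not in PARSERS:
        errors.append("the first plug-in must be a Data Parsing plug-in")
    counts = Counter(names[1:])
    for name in names[1:]:
        if name in PARSERS:
            errors.append(f"{name!r} is allowed only as the first plug-in")
    for name, limit in FOLLOW_UPS.items():
        if limit is not None and counts[name] > limit:
            errors.append(f"at most {limit} {name} plug-in is allowed")
    return errors


if __name__ == "__main__":
    print(check_plugins(["Data Parsing (JSON Mode)", "Time Parsing", "Data Masking"], v2=False))
    print(check_plugins(["Time Parsing", "Time Parsing"], v2=False))
```

An empty list means that the sequence satisfies the rules as modeled here.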

Limits on native plug-in-related parameter combinations

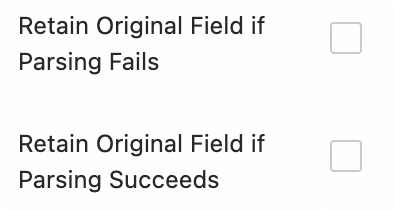

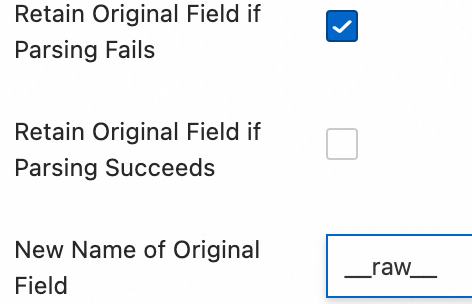

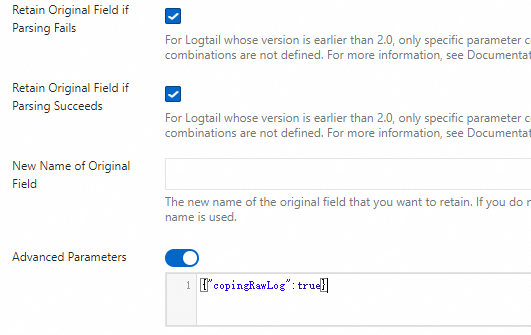

For the following native plug-ins of Logtail earlier than V2.0, we recommend that you use only the parameter combinations listed below: Data Parsing (Regex Mode), Data Parsing (JSON Mode), Data Parsing (Delimiter Mode), Data Parsing (NGINX Mode), Data Parsing (Apache Mode), and Data Parsing (IIS Mode). Simple Log Service does not guarantee the behavior of other parameter combinations.

Upload logs that are parsed.

Upload logs that are obtained after successful parsing, and raw ones if the parsing fails.

Upload logs that are obtained after parsing. If the parsing succeeds, a raw log field is added to the parsed logs. If the parsing fails, the raw logs are uploaded.

For example, if a raw log is "content": "{"request_method":"GET", "request_time":"200"}" and it is successfully parsed, the system adds a raw log field, whose name is specified by the New Name of Original Field parameter. If you do not configure the parameter, the original field name is used. The field value is {"request_method":"GET", "request_time":"200"}.

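The three recommended parameter combinations can be simulated in a few lines. The following Python sketch is an illustration only and does not reproduce how Logtail is implemented. The `parse_json_field` function and its parameters `keep_on_fail`, `keep_on_success`, and `renamed_key` are hypothetical names; `renamed_key` stands in for the New Name of Original Field parameter. The sketch also assumes that a log is dropped when parsing fails and the raw log is not retained.

```python
import json


def parse_json_field(log, keep_on_fail, keep_on_success,
                     renamed_key=None, source_key="content"):
    """Parse log[source_key] as a JSON object and merge its keys into the log."""
    raw = log[source_key]
    try:
        parsed = json.loads(raw)
        if not isinstance(parsed, dict):
            raise ValueError("not a JSON object")
    except ValueError:
        # Parsing failed: upload the raw log, or drop it (assumed behavior).
        return dict(log) if keep_on_fail else None
    result = {k: v for k, v in log.items() if k != source_key}
    result.update(parsed)
    if keep_on_success:
        # Keep the raw value under the new name, or the original field name.
        result[renamed_key or source_key] = raw
    return result


if __name__ == "__main__":
    log = {"content": '{"request_method":"GET", "request_time":"200"}'}
    # Combination 1: upload only parsed logs.
    print(parse_json_field(log, keep_on_fail=False, keep_on_success=False))
    # Combination 2: upload parsed logs, and raw logs if the parsing fails.
    print(parse_json_field({"content": "not json"}, keep_on_fail=True, keep_on_success=False))
    # Combination 3: also add a raw log field if the parsing succeeds.
    print(parse_json_field(log, keep_on_fail=True, keep_on_success=True, renamed_key="raw"))
```
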
Add plug-ins

Add plug-ins when you modify a Logtail configuration

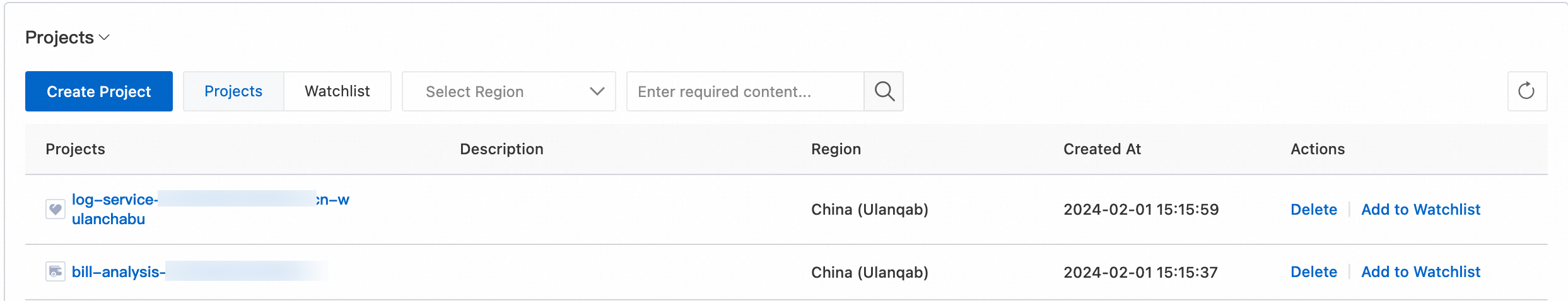

Log on to the Simple Log Service console.

In the Projects section, click the project that you want to manage.

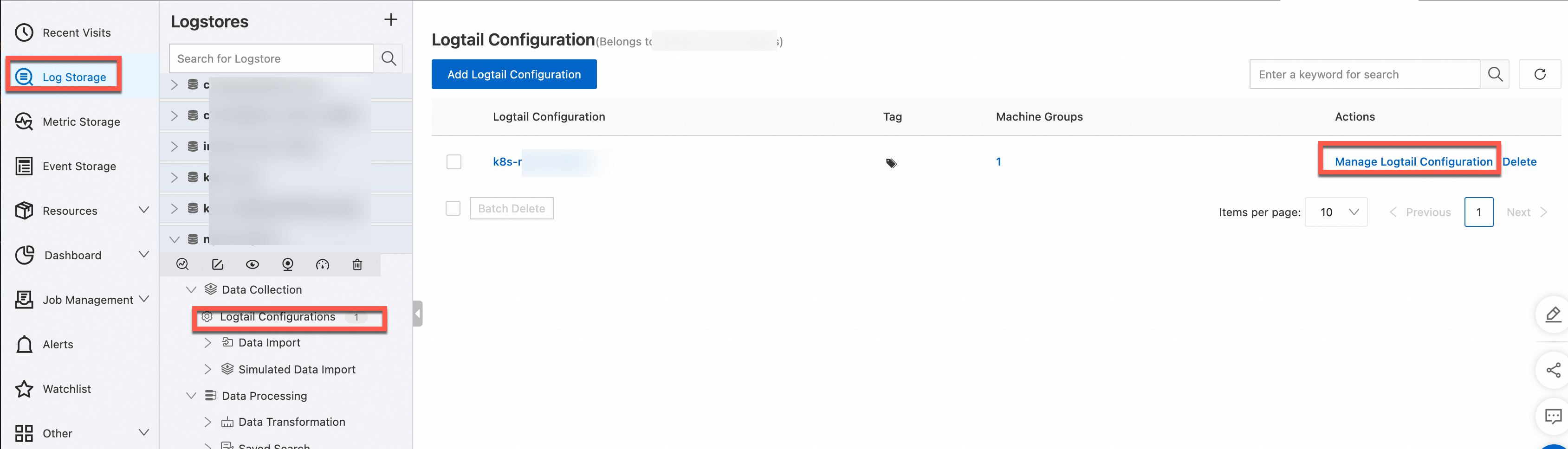

Choose . Click > of the required Logstore. Then, choose .

In the Logtail Configuration list, find the required Logtail configuration, and then click Manage Logtail Configuration in the Actions column.

Click Edit in the upper-left corner of the page. In the Processor Configurations section, add Logtail plug-ins, and then click Save.

Add plug-ins when you create a Logtail configuration

Log on to the Simple Log Service console.

On the right side of the page that appears, click the Quick Data Import card.

In the Import Data dialog box, click a card, follow the instructions to configure parameters in the wizard, and then add Logtail plug-ins in the Logtail Configuration step of the wizard. For more information, see Collect text logs from servers.

Note: The Logtail plug-in configuration that you add when you create a Logtail configuration works in the same manner as the Logtail plug-in configuration that you add when you modify the Logtail configuration.

Logtail plug-ins for data processing

Native plug-ins

| Plug-in | Description |
| --- | --- |
| Data Parsing (Regex Mode) | |
| Data Parsing (JSON Mode) | |
| Data Parsing (Delimiter Mode) | |
| Data Parsing (NGINX Mode) | |
| Data Parsing (Apache Mode) | |
| Data Parsing (IIS Mode) | |
| Time Parsing | |
| Data Filtering | |
| Data Masking | |

Extended plug-ins

| Operation | Description |
| --- | --- |
| Extract fields | |
| Add fields | |
| Drop fields | |
| Rename fields | |
| Encapsulate fields | Encapsulates one or more fields into a JSON object-formatted field. For more information, see Extended plug-in: Encapsulate Fields. |
| Expand JSON fields | |
| Filter logs | Uses regular expressions to match the values of log fields and filter logs. For more information, see processor_filter_regex. |
| Filter logs | Uses regular expressions to match the names of log fields and filter logs. For more information, see processor_filter_key_regex. |
| Extract log time | Parses the time field in raw logs and specifies the parsing result as the log time. For more information, see Go language time format. |
| Convert IP addresses | Converts IP addresses in logs to geographical locations. A geographical location includes the following information: country, province, city, longitude, and latitude. For more information, see Extended plug-in: Convert IP Addresses. |
| Mask sensitive data | Replaces sensitive data in logs with specified strings or MD5 hash values. For more information, see Extended plug-in: Data Masking. |
| Map field values | |
| Encrypt fields | |
| Encode and decode data | |
| Convert logs to metrics | Converts the collected logs to Simple Log Service metrics. For more information, see Extended plug-in: Log to Metric. |
| Convert logs to traces | Converts the collected logs to Simple Log Service traces. For more information, see Extended plug-in: Log to Trace. |