This topic describes how to use Logtail to collect MySQL binary logs.

Simple Log Service no longer supports the Logtail plug-in for collecting MySQL binary logs. New users can no longer create Logtail configurations to collect MySQL binary logs, but existing Logtail configurations remain unaffected. We recommend that you use DataWorks or Flink to collect MySQL binary logs. For more information, see MySQL connector and MySQL data source.

Principle

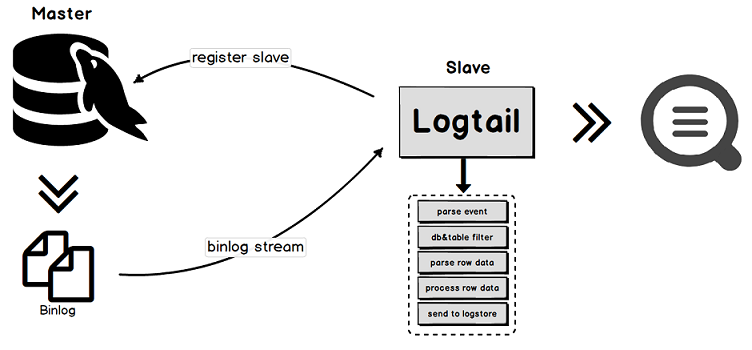

Logtail acts as a secondary MySQL node to communicate with the primary MySQL node. The following list describes the communication process:

Logtail acts as a secondary MySQL node and sends a dump request to the primary MySQL node.

After the primary MySQL node receives the dump request, the node sends binary logs to Logtail in real time.

Logtail performs operations such as event parsing, event filtering, and data parsing on the binary logs. Then, Logtail uploads the parsed data to Simple Log Service.

Features

Binary logs can be incrementally collected. This way, you can collect data related to the update operations that are performed on your databases. MySQL databases are supported, such as Relational Database Service (RDS) databases that use the MySQL protocol.

Multiple methods are provided to filter data in databases.

Binary log checkpoints can be configured.

Checkpoints can be used to synchronize the status of data storage.

Limits

Logtail V1.0.31 and later can collect binary logs from MySQL 8.0 databases.

The binary logging feature must be enabled for your MySQL database, and the binlog_format parameter must be set to ROW for the database. By default, the feature is enabled for an RDS database.

# Check whether the binary logging feature is enabled. mysql> show variables like "log_bin"; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | log_bin | ON | +---------------+-------+ 1 row in set (0.02 sec) # View the format of binary logs. mysql> show variables like "binlog_format"; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | binlog_format | ROW | +---------------+-------+ 1 row in set (0.03 sec)The ID of the secondary MySQL node as which Logtail acts must be unique for your database.

Limits on RDS databases:

You cannot install Logtail on the server that hosts your RDS instance. You must install Logtail on a server that can connect to the instance.

You cannot collect binary logs from a secondary RDS database. You must configure your primary RDS database to collect binary logs.

Operations such as database version upgrades, table schema changes, and disk changes may interrupt data synchronization. If data synchronization is interrupted, you can delete the checkpoint directory of Logtail and restart Logtail to resolve the issue. If the issue persists, we recommend that you use DataWorks or Flink to collect data. The default checkpoint directory is

/etc/ilogtail/checkpoint.

Scenarios

If you want to synchronize large amounts of data and require high performance, you can use Logtail to collect MySQL binary logs.

Query and analyze the incremental data of databases in real time.

Audit the operations that are performed on databases.

Use Simple Log Service to perform operations on the update-related data of databases. For example, you can perform custom query and analysis on the data, visualize the data, push the data to downstream nodes for stream processing, import the data to MaxCompute for batch processing, and import the data to Object Storage Service (OSS) for long-term storage.

Usage notes

We recommend that you relax the limits on resource usage for Logtail to handle traffic spikes and mitigate data risks. If the limits are exceeded, Logtail may be forcefully restarted.

You can modify the related parameters in the /usr/local/ilogtail/ilogtail_config.json file. For more information, see Configure the startup parameters of Logtail.

You can relax the limit on CPU utilization to two cores and the limit on memory usage to 2,048 MB. Example:

{

...

"cpu_usage_limit":2,

"mem_usage_limit":2048,

...

}Data reliability

We recommend that you enable the global transaction identifier (GTID) feature on your MySQL server and upgrade Logtail to V0.16.15 or later. This prevents data from being repeatedly collected after a primary/secondary switchover is triggered on your database and ensures data reliability.

Incomplete data collection: If Logtail is disconnected from your MySQL server for a long period of time, some data may not be collected.

When Logtail is disconnected from your primary MySQL node, the primary MySQL node keeps generating binary logs and deletes expired binary logs. After Logtail is re-connected to the primary MySQL node, Logtail uses a local checkpoint to request binary logs from the primary MySQL node. However, if the network disconnection lasts long, the logs that are generated after the checkpoint may be deleted. In this case, the recovery mechanism is triggered. The mechanism identifies the most recent binary log on the primary MySQL node to resume collection. The logs that are generated between the checkpoint and the most recent binary log are not collected. This leads to incomplete data collection.

Repeated data collection: If a primary/secondary switchover is triggered when the sequence numbers of binary logs are inconsistent between your primary and secondary MySQL node, binary logs may be repeatedly collected.

If you configure primary/secondary synchronization for your MySQL database, the primary MySQL node automatically synchronizes binary logs to the secondary node. The secondary node stores the logs to local binary log files. If the sequence numbers are inconsistent between the primary and secondary MySQL nodes and a primary/secondary switchover is triggered, logs may be repeatedly collected. This issue occurs because the checkpoint mechanism is based on the names of binary log files and the offsets of the files.

For example, a piece of data is in the checkpoint range from

(binlog.100, 4)to(binlog.105, 4)on the primary MySQL node and in the checkpoint range from(binlog.1000, 4)to(binlog.1005, 4)on the secondary MySQL node. Logtail has obtained the data from the primary MySQL node and updated the local checkpoint to(binlog.105, 4). If a primary/secondary switchover is triggered and no errors occur, Logtail continues to collect binary logs from the new primary MySQL node based on the local checkpoint(binlog.105, 4). However, the sequence number of the data that is in the checkpoint range from(binlog.1000, 4)to(binlog.1005, 4)on the new primary MySQL node is greater than the sequence number of the data that is requested by Logtail. The new primary MySQL node returns all data in the gap range to Logtail. This leads to repeated data collection.

Create a Logtail configuration

Log on to the Simple Log Service console.

In the Import Data section, click MySQL BinLog - Plug-in.

Select the project and Logstore. Then, click Next.

Create a machine group.

If a machine group is available, click Use Existing Machine Groups.

If no machine groups are available, perform the following steps to create a machine group. In this example, an Elastic Compute Service (ECS) instance is used.

On the ECS Instances tab, select Manually Select Instances. Then, select the ECS instance that you want to use and click Create.

For more information, see Install Logtail on ECS instances.

ImportantIf you want to collect logs from an ECS instance that belongs to a different Alibaba Cloud account than Log Service, a server in a data center, or a server of a third-party cloud service provider, you must manually install Logtail. For more information, see Install Logtail on a Linux server or Install Logtail on a Windows server.

After you manually install Logtail, you must configure a user identifier for the server. For more information, see Configure a user identifier.

After Logtail is installed, click Complete Installation.

In the Create Machine Group step, configure the Name parameter and click Next.

Log Service allows you to create IP address-based machine groups and custom identifier-based machine groups. For more information, see Create an IP address-based machine group and Create a custom identifier-based machine group.

Confirm that the machine group is displayed in the Applied Server Groups section and click Next.

ImportantIf you apply a machine group immediately after you create the machine group, the heartbeat status of the machine group may be FAIL. This issue occurs because the machine group is not connected to Simple Log Service. To resolve this issue, you can click Automatic Retry. If the issue persists, see What do I do if no heartbeat connections are detected on Logtail?

Specify the data source and click Next.

You can specify a data source by using form configuration or editor configuration in JSON. For more information, see Logtail configuration details.

Preview data, configure indexes, and then click Next.

By default, full-text indexing is enabled for Log Service. You can also configure field indexes based on collected logs in manual mode or automatic mode. To configure field indexes in automatic mode, click Automatic Index Generation. This way, Log Service automatically creates field indexes. For more information, see Create indexes.

ImportantIf you want to query and analyze logs, you must enable full-text indexing or field indexing. If you enable both full-text indexing and field indexing, the system uses only field indexes.

Click Log Query. You are redirected to the query and analysis page of your Logstore.

You must wait approximately 1 minute for the indexes to take effect. Then, you can view the collected logs on the Raw Logs tab. For more information, see Query and analyze logs.

Logtail configuration details

You can specify a data source by using form configuration or editor configuration in JSON.

Form configuration

In the Specify Data Source step, configure the following parameters.

Parameter | Description |

Config Name | The name of the Logtail configuration. |

Database Host | The address of the host on which the database resides. |

Database Port | The port number of the database. |

Database Username | The username of the account that is used to log on to the database. Make sure that the account has read permissions on the database and the MySQL REPLICATION permission. Example: |

DB Password | The password of the account that is used to log on to the database. If you have high requirements for data security, we recommend that you set the username and password to Important If you modify this parameter in the Simple Log Service console, the parameter setting in the Logtail configuration on the Logtail server is overwritten after the modification is synchronized to the server. |

ServerID | The ID of the secondary MySQL node as which Logtail acts. Important The value of the ServerID parameter must be unique for your database. Otherwise, data fails to be collected. |

Included Tables | The names of the tables from which data is collected. When you specify the exact name of a table, you must include the name of the database to which the table belongs and the name of the table. Example: test_db.test_table. You can also specify a regular expression for this parameter.

|

Ignored Tables | The names of the tables from which data is not collected. When you specify the exact name of a table, you must include the name of the database to which the table belongs and the name of the table. Example: test_db.test_table. You can also specify a regular expression for this parameter.

|

Binary Log File Name of First Collection | The name of the binary log file from which data is collected for the first time. If you do not configure this parameter, Logtail starts to collect data from the current point in time. If you want Logtail to collect data from a specific position, set the Binary Log File Name of First Collection parameter to the name of the binary log file from which you want Logtail to collect data and set the Binary Log File Offset of First Collection parameter to the offset of the file. Example: Note If you configure the Binary Log File Name of First Collection parameter, a large volume of traffic is generated the first time data is collected from the file. |

Binary Log File Offset of First Collection | The offset of the binary log file from which data is collected for the first time. |

Add GTID | Specifies whether to add GTIDs to the data that is uploaded to Simple Log Service. For more information, see GTID Format and Storage. |

Collect INSERT Events | Specifies whether to collect the data on INSERT events. |

Collect UPDATE Events | Specifies whether to collect the data on UPDATE events. |

Collect DELETE Events | Specifies whether to collect the data on DELETE events. |

Collect DDL Events | Specifies whether to collect the data on data definition language (DDL) events. Note If you select Collect DDL Events, the values of the Included Tables and Ignored Tables parameters do not take effect. The values are used for data filtering. |

Encoding Method | The encoding format of data. |

Convert Text to String | Specifies whether to convert the data of the text type to the string type. |

Compress Events into JSON | Specifies whether to pack event data in the JSON format. If you select Compress Events into JSON, Logtail packs event data into the data and old_data fields in the JSON format. The old_data field is available only for ROW_UPDATE events. For example, a table contains three columns named c1, c2, and c3. If you clear Compress Events into JSON, the data on ROW_INSERT events contains the c1, c2, and c3 fields. If you select Compress Events into JSON, Logtail packs all data in the c1, c2, and c3 columns into the data field, and the field value is in the Important This parameter is available only for Logtail V0.16.19 and later. |

Collect Event Metadata | Specifies whether to collect the metadata of events. The metadata of binary log events includes event_time, event_log_position, event_size, and event_server_id. Important This parameter is available only for Logtail V0.16.21 and later. |

Data Transformation | The configuration that is used to process data. For example, you can configure this parameter to extract fields, extract log time, mask data, and filter logs. This parameter is optional. You can specify one or more data processing methods. For more information, see Use Logtail plug-ins to process data. |

Editor configuration in JSON

In the Plug-in Configuration field, specify information about your Logtail configuration. Example:

inputs is required and is used to configure the data source settings for the Logtail configuration.

ImportantYou can specify only one type of data source in inputs.

processors is optional and is used to configure the data processing settings for the Logtail configuration to parse data. You can specify one or more processing methods.

If your logs cannot be parsed based only on the setting of inputs, you can configure processors in the Plug-in Configuration field to add plug-ins for data processing. For example, you can extract fields, extract log time, mask data, and filter logs. For more information, see Use Logtail plug-ins to process data.

{

"inputs": [

{

"type": "service_canal",

"detail": {

"Host": "************.mysql.rds.aliyuncs.com",

"Port": 3306,

"User" : "user1",

"ServerID" : 56321,

"Password": "*******",

"IncludeTables": [

"user_info\\..*"

],

"ExcludeTables": [

".*\\.\\S+_inner"

],

"TextToString" : true,

"EnableDDL" : true

}

}

]

}Parameter | Type | Required | Description |

type | string | Yes | The type of the data source. Set the value to service_canal. |

Host | string | No | The address of the host on which the database resides. Default value: 127.0.0.1. |

Port | int | No | The port number of the database. Default value: 3306. |

User | string | No | The username of the account that is used to log on to the database. Default value: root. Make sure that the account has read permissions on the database and the MySQL REPLICATION permission. Example: |

Password | string | No | The password of the account that is used to log on to the database. This parameter is empty by default. If you have high requirements for data security, we recommend that you set the username and password to Important If you modify this parameter in the Simple Log Service console, the parameter setting in the Logtail configuration on the Logtail server is overwritten after the modification is synchronized to the server. |

ServerID | int | No | The ID of the secondary MySQL node as which Logtail acts. Default value: 125. Important The value of the ServerID parameter must be unique for your database. Otherwise, data fails to be collected. |

IncludeTables | string array | Yes | The names of the tables from which data is collected. When you specify the exact name of a table, you must include the name of the database to which the table belongs and the name of the table. Example: test_db.test_table. You can also specify a regular expression for this parameter.

|

ExcludeTables | string array | No | The names of the tables from which data is not collected. When you specify the exact name of a table, you must include the name of the database to which the table belongs and the name of the table. Example: test_db.test_table. You can also specify a regular expression for this parameter.

|

StartBinName | string | No | The name of the binary log file from which data is collected for the first time. By default, Logtail starts to collect data from the current point in time. If you want Logtail to collect data from a specific position, set the StartBinName parameter to the name of the binary log file from which you want Logtail to collect data and set the StartBinlogPos parameter to the offset of the file. Example: Note If you configure the StartBinName parameter, a large volume of traffic is generated the first time data is collected from the file. |

StartBinlogPos | int | No | The offset of the binary log file from which data is collected for the first time. Default value: 0. |

EnableGTID | bool | No | Specifies whether to add GTIDs to the data that is uploaded to Simple Log Service. For more information, see GTID Format and Storage. Valid values:

|

EnableInsert | bool | No | Specifies whether to collect the data on INSERT events. Valid values:

|

EnableUpdate | bool | No | Specifies whether to collect the data on UPDATE events. Valid values:

|

EnableDelete | bool | No | Specifies whether to collect the data on DELETE events. Valid values:

|

EnableDDL | bool | No | Specifies whether to collect the data on DDL events. Valid values:

Note If you set the EnableDDL parameter to true, the values of the IncludeTables and ExcludeTables parameters do not take effect. The values are used for data filtering. |

Charset | string | No | The encoding format of data. Default value: utf8. |

TextToString | bool | No | Specifies whether to convert the data of the text type to the string type. Valid values:

|

PackValues | bool | No | Specifies whether to pack event data in the JSON format. If you set the PackValues parameter to true, Logtail packs event data into the data and old_data fields in the JSON format. The old_data field is available only for ROW_UPDATE events. Valid values:

For example, a table contains three columns named c1, c2, and c3. If you set the PackValues parameter to false, the data on ROW_INSERT events contains the c1, c2, and c3 fields. If you set the PackValues parameter to true, Logtail packs all data in the c1, c2, and c3 columns into the data field, and the field value is in the Important This parameter is available only for Logtail V0.16.19 and later. |

EnableEventMeta | bool | No | Specifies whether to collect the metadata of events. The metadata of binary log events includes event_time, event_log_position, event_size, and event_server_id.

Important This parameter is available only for Logtail V0.16.21 and later. |

Modify the configurations on a Logtail server

If you did not enter real information for parameters such as Host, User, and Password in the Plug-in Config field when you created the Logtail configuration, you can modify the parameters after the Logtail configuration is delivered to the Logtail server.

Log on to the server on which Logtail is installed.

Open the /usr/local/ilogtail/user_log_config.json file, find the service_canal keyword, and then modify the parameters such as Host, User, and Password.

Run the following command to restart Logtail:

sudo /etc/init.d/ilogtaild stop; sudo /etc/init.d/ilogtaild start

Troubleshooting

If no data is displayed on the preview page or query page after logs are collected by using Logtail, you can troubleshoot the errors based on the instructions that are provided in What do I do if errors occur when I use Logtail to collect logs?

Sample database table and logs

For example, the INSERT, UPDATE, and DELETE operations are performed on a table named specialalarm in a database named user_info. The following list describes the schema of the table, the operations that are performed on the table, and the collected logs:

Table schema

CREATE TABLE `specialalarm` ( `id` int(11) unsigned NOT NULL AUTO_INCREMENT, `time` datetime NOT NULL, `alarmtype` varchar(64) NOT NULL, `ip` varchar(16) NOT NULL, `count` int(11) unsigned NOT NULL, PRIMARY KEY (`id`), KEY `time` (`time`) USING BTREE, KEY `alarmtype` (`alarmtype`) USING BTREE ) ENGINE=MyISAM AUTO_INCREMENT=1;Database operations

Perform the INSERT, DELETE, and UPDATE operations.

insert into specialalarm (`time`, `alarmType`, `ip`, `count`) values(now(), "NO_ALARM", "10.10.**.***", 55); delete from specialalarm where id = 4829235 ; update specialalarm set ip = "10.11.***.**" where id = "4829234";Create an index for

zc.specialalarm.ALTER TABLE `zc`.`specialalarm` ADD INDEX `time_index` (`time` ASC);Collected logs

You can view the logs that are collected for each operation on the query and analysis page of the Logstore that is specified in the Logtail configuration. Examples:

INSERT statement

__source__: 10.30.**.** __tag__:__hostname__: iZbp145dd9fccu***** __topic__: _db_: zc _event_: row_insert _gtid_: 7d2ea78d-b631-11e7-8afb-00163e0eef52:536 _host_: *********.mysql.rds.aliyuncs.com _id_: 113 _table_: specialalarm alarmtype: NO_ALARM count: 55 id: 4829235 ip: 10.10.***.*** time: 2017-11-01 12:31:41DELETE statement

__source__: 10.30.**.** __tag__:__hostname__: iZbp145dd9fccu**** __topic__: _db_: zc _event_: row_delete _gtid_: 7d2ea78d-b631-11e7-8afb-00163e0eef52:537 _host_: *********.mysql.rds.aliyuncs.com _id_: 114 _table_: specialalarm alarmtype: NO_ALARM count: 55 id: 4829235 ip: 10.10.**.*** time: 2017-11-01 12:31:41UPDATE statement

__source__: 10.30.**.** __tag__:__hostname__: iZbp145dd9fccu**** __topic__: _db_: zc _event_: row_update _gtid_: 7d2ea78d-b631-11e7-8afb-00163e0eef52:538 _host_: *********.mysql.rds.aliyuncs.com _id_: 115 _old_alarmtype: NO_ALARM _old_count: 55 _old_id: 4829234 _old_ip: 10.10.22.133 _old_time: 2017-10-31 12:04:54 _table_: specialalarm alarmtype: NO_ALARM count: 55 id: 4829234 ip: 10.11.***.*** time: 2017-10-31 12:04:54DDL statement

__source__: 10.30.**.** __tag__:__hostname__: iZbp145dd9fccu**** __topic__: _db_: zc _event_: row_update _gtid_: 7d2ea78d-b631-11e7-8afb-00163e0eef52:539 _host_: *********.mysql.rds.aliyuncs.com ErrorCode: 0 ExecutionTime: 0 Query: ALTER TABLE `zc`.`specialalarm` ADD INDEX `time_index` (`time` ASC) StatusVars:

Field

Description

_host_The hostname of the database.

_db_The name of the database.

_table_The name of the table.

_event_The type of the event.

_id_The auto-increment ID. IDs start from 0 and increment by 1 each time the data on a binary log event is collected.

_gtid_The GTID.

_filename_The name of the binary log file.

_offset_The offset of the binary log file. The value is updated only when a COMMIT operation is performed.