This topic describes how to view the monitoring metrics of GPU-accelerated instances and how to configure auto scaling policies for provisioned GPU-accelerated instances based on different resource metrics.

Background

The resource utilization of GPU hardware varies across scenarios such as model training, AI inference, and audio and video transcoding. Function Compute can automatically scale provisioned GPU-accelerated instances based on the utilization of the following GPU resources: streaming multiprocessors (SMs), GPU memory, hardware decoders, and hardware encoders.

Function Compute supports two ways to configure an auto scaling policy for provisioned instances: scheduled setting modification and metric-based setting modification. To scale GPU-accelerated instances based on GPU metrics, you must use metric-based setting modification. For more information, see the "Metric-based Setting Modification" section in Configure provisioned instances and auto scaling rules.

View the metrics of GPU-accelerated instances

After the GPU function is executed, you can view the resource usage of the GPU-accelerated instance in the Function Compute console.

- Log on to the Function Compute console. In the left-side navigation pane, choose .

- In the top navigation bar, select a region. In the service list, click the name of the desired service.

- In the function list of the monitoring dashboard, click the name of the desired function.

- On the monitoring dashboards page, click the Instance Metrics tab.

You can check the resource usage of GPU-accelerated instances by viewing the following metrics: GPU-accelerated Memory Usage (Percentage), GPU-accelerated SM Usage (Percentage), GPU-accelerated Hardware Encoder Usage (Percentage), and GPU-accelerated Hardware Decoder Usage (Percentage).

Configure an auto scaling policy

Metrics for GPU resource usage

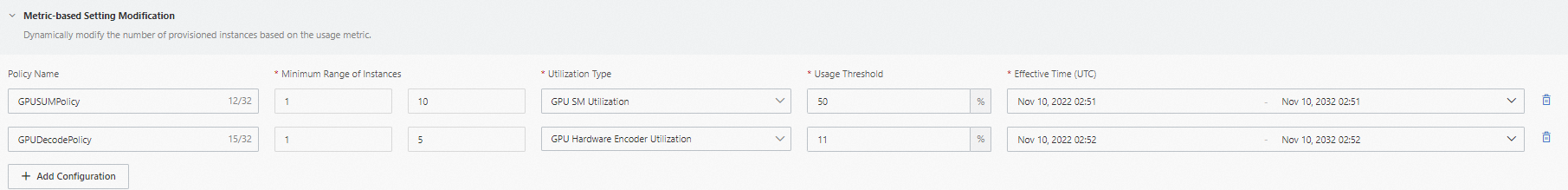

| Metric | Description | Value range |
|---|---|---|
| GPUSmUtilization | GPU SM utilization | [0, 1]. The utilization rate ranges from 0% to 100%. |
| GPUMemoryUtilization | GPU memory usage | [0, 1]. The utilization rate ranges from 0% to 100%. |
| GPUDecoderUtilization | GPU hardware decoder utilization | [0, 1]. The utilization rate ranges from 0% to 100%. |
| GPUEncoderUtilization | GPU hardware encoder utilization | [0, 1]. The utilization rate ranges from 0% to 100%. |
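Because each metric is reported as a fraction in [0, 1] rather than as a percentage, a threshold expressed in percent has to be converted before it is used as a metric target. The following Python sketch illustrates that conversion. The helper `percent_to_fraction` and the `GPU_METRICS` set are hypothetical names introduced for this example and are not part of any Function Compute SDK.

```python
# Hypothetical helper for illustration only; not a Function Compute API.
# The metric names are copied from the table above.
GPU_METRICS = {
    "GPUSmUtilization",
    "GPUMemoryUtilization",
    "GPUDecoderUtilization",
    "GPUEncoderUtilization",
}


def percent_to_fraction(metric: str, percent: float) -> float:
    """Convert a utilization percentage (0 to 100) to the [0, 1] metric scale."""
    if metric not in GPU_METRICS:
        raise ValueError(f"unknown GPU metric: {metric}")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return percent / 100


if __name__ == "__main__":
    # A 50% SM utilization threshold on the [0, 1] scale.
    print(percent_to_fraction("GPUSmUtilization", 50))
```

Rejecting unknown metric names and out-of-range values locally is a design choice of this sketch; it catches typos before a policy is submitted.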

Configure an auto scaling policy in the Function Compute console

- Log on to the Function Compute console. In the left-side navigation pane, click Services & Functions.

- In the top navigation bar, select a region. On the Services page, click the desired service.

- On the Functions page, click the name of the desired function. On the Function Details page that appears, click the Auto Scaling tab.

- On the Auto Scaling tab, click Create Rule.

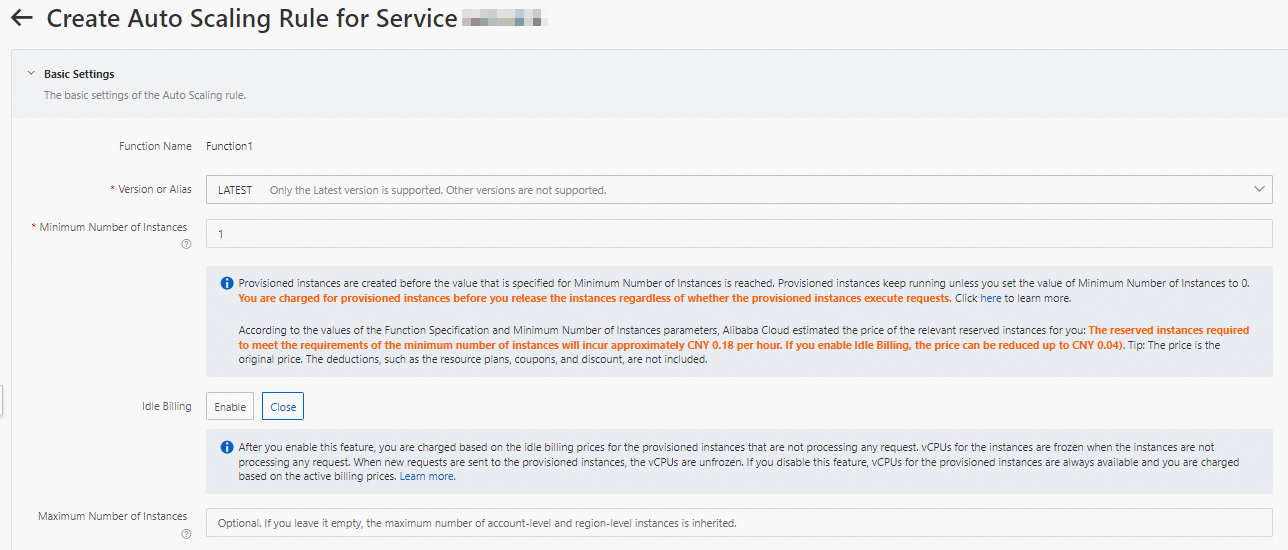

- On the page that appears, configure the following parameters and click Create.

After the configuration is complete, you can view how the Function Provisioned Instances metric changes.

Configure an auto scaling policy by using Serverless Devs

- Install Serverless Devs and Docker

- Configure Serverless Devs

- Deploy a GPU function. For more information, see Invoke GPU functions based on asynchronous tasks.

- Create a project directory.

      mkdir fc-gpu-async-job && cd fc-gpu-async-job

- In the project directory, create a file that contains the auto scaling policy, such as gpu-sm-hpa-policy.json.

Example:

      {
        "target": 1,
        "targetTrackingPolicies": [
          {
            "name": "hpa_gpu_decoder_util",
            "startTime": "2022-09-05T16:00:00.000Z",
            "endTime": "2023-07-06T16:00:00.000Z",
            "metricType": "GPUSmUtilization",
            "metricTarget": 0.01,
            "minCapacity": 1,
            "maxCapacity": 20
          }
        ]
      }

- In the project directory, run the following command to add the auto scaling policy to the desired function:

      s cli fc provision put --region ${region} --service-name ${service-name} --function-name ${function-name} --qualifier LATEST --config gpu-sm-hpa-policy.json

- Run the following command to view the auto scaling policy:

      s cli fc provision get --region ${region} --service-name ${service-name} --function-name ${function-name} --qualifier LATEST

  If the command is successfully run, the following result is returned:

      [2022-10-08 16:00:12] [INFO] [FC] - Getting provision: zh****.LATEST/zh****
      serviceName: zh****
      functionName: zh****
      qualifier: LATEST
      resource: 164901546557****#zh****#LATEST#zh****
      target: 1
      current: 1
      scheduledActions: null
      targetTrackingPolicies:
        - name: hpa_gpu_decoder_util
          startTime: 2022-09-05T16:00:00.000Z
          endTime: 2023-07-06T16:00:00.000Z
          metricType: GPUSmUtilization
          metricTarget: 0.01
          minCapacity: 1
          maxCapacity: 20
      currentError:
      alwaysAllocateCPU: true
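The policy file used in the steps above can also be generated by a script, which may help when the same rule is applied to several functions. The following Python sketch is one way to do that, assuming only the field names that appear in the example file. The function `build_policy` and its sanity checks are illustrative assumptions, not documented Function Compute behavior; the service may enforce different or additional rules.

```python
import json
from datetime import datetime, timezone


def build_policy(name, metric_type, metric_target, min_capacity, max_capacity,
                 start, end, target=1):
    """Return a dict shaped like the gpu-sm-hpa-policy.json example.

    `start` and `end` should be timezone-aware datetimes. The checks are
    local sanity checks assumed for this sketch only.
    """
    if not 0 <= metric_target <= 1:
        raise ValueError("metric_target must be within [0, 1]")
    if min_capacity > max_capacity:
        raise ValueError("min_capacity must not exceed max_capacity")
    if start >= end:
        raise ValueError("start must be earlier than end")

    def fmt(ts):
        # Match the timestamp style of the example: UTC with milliseconds.
        ts = ts.astimezone(timezone.utc)
        millis = ts.microsecond // 1000
        return ts.strftime("%Y-%m-%dT%H:%M:%S.") + f"{millis:03d}Z"

    return {
        "target": target,
        "targetTrackingPolicies": [
            {
                "name": name,
                "startTime": fmt(start),
                "endTime": fmt(end),
                "metricType": metric_type,
                "metricTarget": metric_target,
                "minCapacity": min_capacity,
                "maxCapacity": max_capacity,
            }
        ],
    }


if __name__ == "__main__":
    # Reproduces the values of the example policy file.
    policy = build_policy(
        name="hpa_gpu_decoder_util",
        metric_type="GPUSmUtilization",
        metric_target=0.01,
        min_capacity=1,
        max_capacity=20,
        start=datetime(2022, 9, 5, 16, 0, tzinfo=timezone.utc),
        end=datetime(2023, 7, 6, 16, 0, tzinfo=timezone.utc),
    )
    print(json.dumps(policy, indent=2))
```

Saving the printed JSON as gpu-sm-hpa-policy.json produces a file that can be passed to the --config option of the `s cli fc provision put` command.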

References

If you want to modify the sensitivity of auto scaling, join the DingTalk group 11721331 to contact Function Compute technical support.