This topic describes how to create an Alibaba Cloud Logstash cluster and configure a Logstash pipeline to synchronize data between Alibaba Cloud Elasticsearch clusters.

Background information

Prerequisites

- An Alibaba Cloud account is created.

To create an Alibaba Cloud account, visit the account registration page.

- A virtual private cloud (VPC) and a vSwitch are created.

For more information, see Create an IPv4 VPC.

Limits

- The source Elasticsearch cluster, Logstash cluster, and destination Elasticsearch cluster must reside in the same VPC. If they reside in different VPCs, you must configure a Network Address Translation (NAT) gateway for the Logstash cluster so that it can connect to the source and destination Elasticsearch clusters over the Internet. For more information, see Configure a NAT gateway for data transmission over the Internet.

- The versions of the source Elasticsearch cluster, Logstash cluster, and destination Elasticsearch cluster must meet compatibility requirements. For more information, see Compatibility matrixes.

Procedure

Preparations

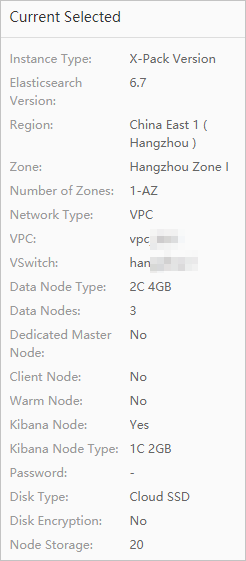

Step 1: Create a Logstash cluster

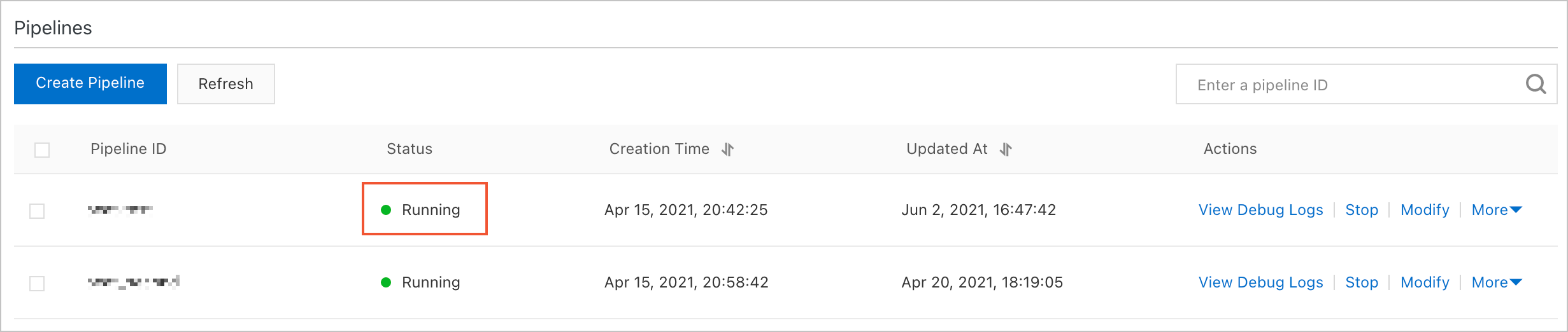

Step 2: Create and run a Logstash pipeline

After the state of the newly created Logstash cluster changes to Active, you can create and run a Logstash pipeline to synchronize data.

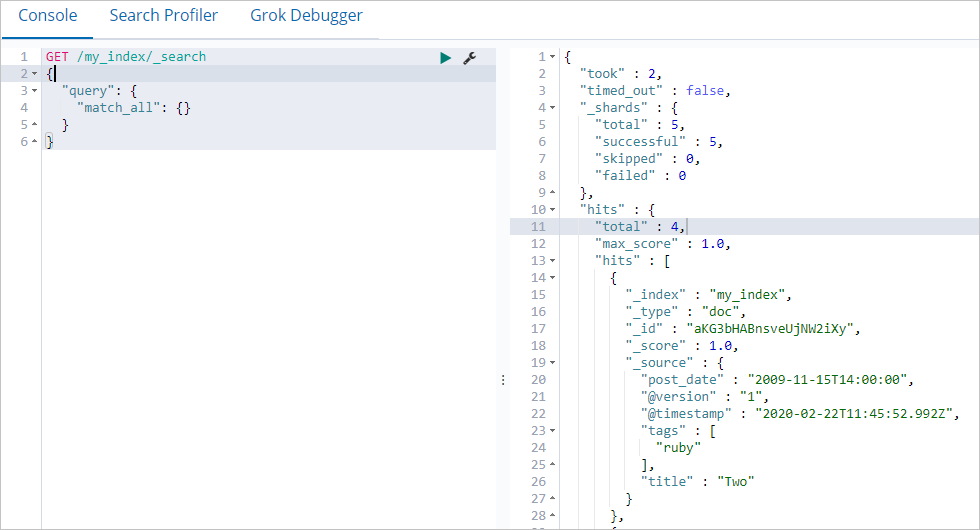
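
A pipeline for this kind of cluster-to-cluster synchronization might look like the following minimal sketch. It is not the exact configuration from the console. The endpoints, usernames, and passwords are hypothetical placeholders, and the `[@metadata]` field references assume that `docinfo` is enabled on the input and that its default metadata target is in effect, which can differ across plugin versions and ECS compatibility modes.

```
input {
  elasticsearch {
    # Hypothetical endpoint and credentials of the source cluster.
    hosts    => ["http://<source-cluster-endpoint>:9200"]
    user     => "elastic"
    password => "<source-password>"
    # Read all indexes; narrow this pattern to sync specific indexes only.
    index    => "*"
    # Keep each document's original index name and ID in @metadata.
    docinfo  => true
  }
}

filter {
  # No transformation: documents are copied as-is.
}

output {
  elasticsearch {
    # Hypothetical endpoint and credentials of the destination cluster.
    hosts       => ["http://<destination-cluster-endpoint>:9200"]
    user        => "elastic"
    password    => "<destination-password>"
    # Write each document to an index with the same name and ID as in the source.
    index       => "%{[@metadata][_index]}"
    document_id => "%{[@metadata][_id]}"
  }
}
```

Reusing the source document ID in `document_id` means that rerunning the pipeline overwrites existing documents in the destination cluster instead of creating duplicates.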

Step 3: View synchronization results

After the Logstash pipeline is created and starts to run, you can log on to the Kibana console of the destination Elasticsearch cluster to view data synchronization results.

References

- Configure monitoring for a Logstash cluster:

- Migrate third-party Elasticsearch data to Alibaba Cloud Elasticsearch:

If the data in the destination Elasticsearch cluster is the same as the data in the source Elasticsearch cluster, data is successfully synchronized. You can also run the
