Network Interface Controller (NIC) multi-queue enables you to configure multiple transmit (Tx) and receive (Rx) queues on a NIC, with each queue processed by a separate CPU core. This improves network I/O throughput and reduces latency by distributing packet processing across multiple CPU cores.

Benefits

Traditional single-queue NICs use only one CPU core to process all network packets, creating a bottleneck. The single core becomes overloaded while other cores remain idle, causing increased latency and packet loss.

Multi-queue NICs distribute network traffic across multiple CPU cores, fully utilizing your multi-core architecture. Test results show performance improvements of 50-100% with two queues and significantly higher gains with four queues.

Key benefits:

Better CPU utilization: Distribute network traffic across multiple cores

Higher throughput: Process multiple packets simultaneously, especially under high loads

Lower latency: Reduce congestion by distributing packets across queues

Fewer packet drops: Prevent packet loss in high-traffic scenarios

Improper configuration (incorrect queue count or CPU affinity settings) can degrade performance. Follow the guidance in this topic to optimize your setup.

How it works

Queue architecture

An Elastic Network Interface (ENI) supports multiple Combined queues. Each Combined queue consists of one Rx queue (receive) and one Tx queue (transmit), processed by an independent CPU core.

Rx queue: Handles incoming packets, distributed based on rules such as round-robin or flow-based distribution

Tx queue: Handles outgoing packets, sent based on factors like order or priority

IRQ Affinity

Each queue has an independent interrupt. Interrupt Request (IRQ) Affinity distributes interrupts for different queues to specific CPU cores, preventing any single core from becoming overloaded.

IRQ Affinity is enabled by default in all images except Red Hat Enterprise Linux (RHEL). For RHEL configuration, see Configure IRQ Affinity.
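To see which CPU cores a queue's interrupts are currently assigned to, one option is to read the kernel's procfs files. The following is a minimal sketch rather than an official tool: irqs_for is a hypothetical helper name, and the virtio0 pattern assumes that the ENI's queues appear under virtio-style names in /proc/interrupts, which may differ on your instance.

```shell
#!/bin/sh
# irqs_for PATTERN: read /proc/interrupts-style text on stdin and print the
# IRQ numbers whose last column (the device/queue name) matches PATTERN.
# Hypothetical helper for illustration; not part of any Alibaba Cloud tool.
irqs_for() {
    awk -v pat="$1" '$NF ~ pat { sub(/:$/, "", $1); print $1 }'
}

# Usage on a live system (the "virtio0" pattern is an assumption):
#   for irq in $(irqs_for 'virtio0' < /proc/interrupts); do
#       printf 'IRQ %s -> CPUs %s\n' "$irq" "$(cat /proc/irq/"$irq"/smp_affinity_list)"
#   done
```

If every queue IRQ reports the same CPU list, interrupts are probably not being spread across cores.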

Prerequisites

Before you configure multi-queue settings, ensure that you have:

An ECS instance that supports the NIC multi-queue feature (see Instance family overview).

The appropriate permissions to modify ENI settings.

(Optional) For IRQ Affinity configuration on RHEL: public network access to download the ecs_mq script.

Instance type support

To check if an instance type supports multi-queue:

View the NIC queues column in the instance type tables.

Values greater than 1 indicate multi-queue support

The value indicates the maximum number of queues per ENI

Call the DescribeInstanceTypes API operation to query queue metrics:

Parameter | Description |

| Default queues for the primary ENI |

| Default queues for secondary ENIs |

| Maximum queues allowed per ENI |

| Total queue quota for the instance |

Early-version public images with kernel versions earlier than 2.6 may not support multi-queue. Use the latest public images for best compatibility.

View queue configuration

View in the console

Go to the ENI page in the ECS console.

In the top navigation bar, select your region and resource group.

Click the ENI ID to view its details.

In the Basic Information section, check the Queues parameter:

If you've modified the queue count, the new value appears here

If you've never modified the queue count:

No value if the ENI is unbound

Default queue count if the ENI is bound to an instance

View using the API

Call the DescribeNetworkInterfaceAttribute API operation and check the QueueNumber parameter in the response.
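If you call the operation from a terminal, the QueueNumber value can be pulled out of the JSON response with standard tools. The sketch below assumes the aliyun command-line tool is installed and configured; the region and ENI ID are placeholders, and queue_number is a hypothetical helper name.

```shell
#!/bin/sh
# queue_number: read a JSON API response on stdin and print the first
# QueueNumber value found. A plain sed match keeps the sketch free of extra
# dependencies; a real JSON parser is more robust for production use.
queue_number() {
    sed -n 's/.*"QueueNumber"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Usage, assuming the aliyun CLI is available (placeholder region and ENI ID):
#   aliyun ecs DescribeNetworkInterfaceAttribute \
#       --RegionId cn-hangzhou --NetworkInterfaceId eni-xxxxxxxx | queue_number
```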

View on a Linux instance

Connect to your Linux instance using Workbench.

NoteQueue configuration can only be viewed on Linux instances. For Windows instances, use the console or API.

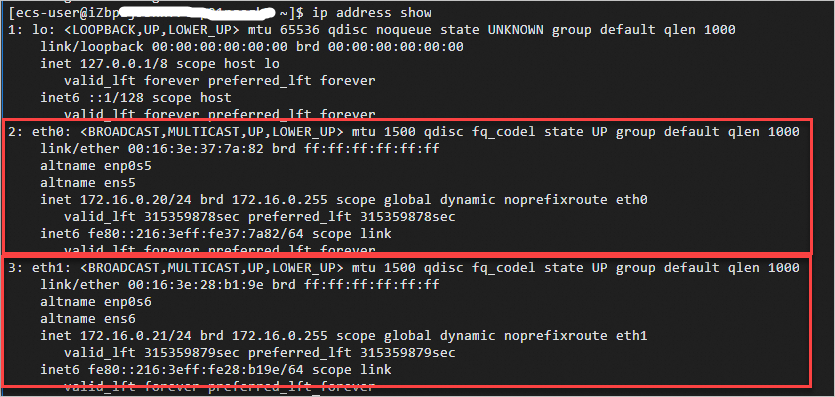

Run

ip address showto view your network interfaces.

Check if multi-queue is enabled on an ENI (example uses

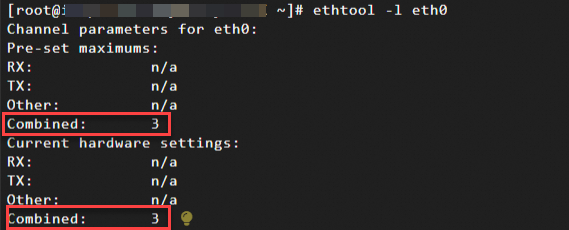

eth0):ethtool -l eth0In the output:

Pre-set maximums - Combined: Maximum queues supported by the ENI

Current hardware settings - Combined: Number of queues currently in use

Example output showing 3 queues supported and 3 queues in use:
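For scripting, the two Combined values can be extracted from the ethtool -l output. This is a minimal sketch that relies on the usual layout, in which the Pre-set maximums section is printed before Current hardware settings; combined_queues is a hypothetical helper name.

```shell
#!/bin/sh
# combined_queues: read `ethtool -l <iface>` output on stdin and print
# "<max> <current>". The first "Combined:" line belongs to the
# "Pre-set maximums" section, the second to "Current hardware settings".
# Exits with status 1 if fewer than two Combined lines are found.
combined_queues() {
    awk '/^Combined:/ { v[++n] = $2 }
         END { if (n >= 2) print v[1], v[2]; else exit 1 }'
}

# Usage on a live instance:
#   ethtool -l eth0 | combined_queues
```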

Change queue configuration

After you bind an ENI to an instance, the ENI's queue count is automatically set to the default for that instance type. You can manually change this configuration if needed.

Change the maximum queues (console or API)

You can change the maximum number of queues supported by an ENI using the console or API.

Requirements:

Requirement | Description |

ENI state | The ENI must be in the Available state, or the instance it is bound to must be in the Stopped state |

Queue limit | Cannot exceed the |

Total quota | Total queues across all ENIs cannot exceed the |
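The two numeric limits can be checked before you submit a change. The sketch below assumes you have already looked up the per-ENI maximum, the instance's total queue quota, and the number of queues used by the instance's other ENIs; the function name and argument order are hypothetical.

```shell
#!/bin/sh
# can_set_queues REQUESTED PER_ENI_MAX OTHER_ENIS_TOTAL INSTANCE_QUOTA
# Succeeds when REQUESTED respects both limits:
#   - it is at least 1 and does not exceed the per-ENI maximum, and
#   - added to the queues of the instance's other ENIs, it does not exceed
#     the instance's total queue quota.
# All four arguments are integers that you supply.
can_set_queues() {
    [ "$1" -ge 1 ] && [ "$1" -le "$2" ] && [ $(( $1 + $3 )) -le "$4" ]
}

# Example: per-ENI maximum 8, other ENIs already use 8, instance quota 12.
#   can_set_queues 4 8 8 12 && echo "ok"          # 4 + 8 = 12, within quota
#   can_set_queues 6 8 8 12 || echo "over quota"  # 6 + 8 = 14, exceeds quota
```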

Using the console:

Go to the ENI page.

Find your ENI and click its ID to view details.

In the Basic Information section, click the edit icon next to Queues.

Enter the new queue count and click OK.

If the ENI is bound to an instance, the new queue count takes effect after you start the instance.

Using the API:

Call the ModifyNetworkInterfaceAttribute API operation and specify the QueueNumber parameter.

Change queue usage in the OS (Linux only)

You can adjust the number of queues that an ENI actively uses in the operating system. This number must be less than or equal to the maximum queues supported by the ENI.

Changes made at the OS level:

Don't affect the queue count displayed in the console or returned by API operations.

Don't persist after instance restart - the OS reverts to using the maximum available queues.

Example using Alibaba Cloud Linux 3:

Connect to your Linux instance using Workbench.

Check the current queue configuration:

ethtool -l eth0

Change the number of queues in use (this example changes it to 2):

sudo ethtool -L eth0 combined 2

Replace 2 with your desired queue count (must not exceed the Pre-set maximums value).

Verify the change:

ethtool -l eth0

The Current hardware settings - Combined value should now show your new queue count.
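The check and the change can be combined so that an out-of-range value is rejected before ethtool -L runs. This is a sketch under stated assumptions: set_combined and the ETHTOOL override variable are hypothetical names introduced here, and the script is expected to run with root privileges.

```shell
#!/bin/sh
# set_combined IFACE COUNT: change the number of in-use queues only if COUNT
# is within 1..Pre-set maximum. ETHTOOL may be overridden (for example with a
# stub when testing); by default the real ethtool binary is used.
ETHTOOL=${ETHTOOL:-ethtool}

set_combined() {
    iface=$1
    count=$2
    case $count in
        ''|*[!0-9]*) echo "refusing: '$count' is not a positive integer" >&2
                     return 1 ;;
    esac
    # The first "Combined:" line of `ethtool -l` is the pre-set maximum.
    max=$("$ETHTOOL" -l "$iface" |
          awk '/^Combined:/ { if ($2 ~ /^[0-9]+$/) print $2; exit }')
    if [ -z "$max" ] || [ "$count" -lt 1 ] || [ "$count" -gt "$max" ]; then
        echo "refusing: $count is outside 1..${max:-unknown} for $iface" >&2
        return 1
    fi
    "$ETHTOOL" -L "$iface" combined "$count"
}

# Usage (as root): set_combined eth0 2
```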

Configure IRQ Affinity

IRQ Affinity assigns interrupts for different queues to specific CPUs, improving network performance by reducing CPU contention.

IRQ Affinity is enabled by default in all images except RHEL. Configuration is only required for RHEL instances.

Requirements for RHEL instances

Red Hat Enterprise Linux 9.2 or later

Public network access to download the ecs_mq script

The irqbalance service disabled (conflicts with ecs_mq)
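The version requirement can be verified from the standard os-release file before you install anything. The sketch below assumes that RHEL reports ID="rhel" and a VERSION_ID such as "9.2" in /etc/os-release; rhel_meets_minimum is a hypothetical helper name.

```shell
#!/bin/sh
# rhel_meets_minimum: read os-release-style text on stdin and succeed only if
# ID is rhel and VERSION_ID is 9.2 or later.
rhel_meets_minimum() {
    awk -F= '
        { gsub(/"/, "", $2) }
        $1 == "ID"         { id = $2 }
        $1 == "VERSION_ID" { split($2, v, "."); major = v[1] + 0; minor = v[2] + 0 }
        END { exit !(id == "rhel" && (major > 9 || (major == 9 && minor >= 2))) }
    '
}

# Usage on a live system:
#   rhel_meets_minimum < /etc/os-release && echo "version OK"
#   systemctl is-active irqbalance   # should not report "active"
```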

Configure IRQ Affinity on RHEL

Connect to your RHEL instance using Workbench.

(Optional) Disable the irqbalance service to prevent conflicts:

systemctl stop irqbalance.service

Download the ecs_mq script package:

wget https://ecs-image-tools.oss-cn-hangzhou.aliyuncs.com/ecs_mq/ecs_mq_2.0.5.tgz

Extract the package:

tar -xzf ecs_mq_2.0.5.tgz

Navigate to the script directory:

cd ecs_mq/

Install the script environment (replace redhat and 9 with your OS name and major version):

bash install.sh redhat 9

Start the ecs_mq service:

systemctl start ecs_mq

IRQ Affinity is now enabled.

Benefits of ecs_mq 2.0.5

The new version of the ecs_mq script provides several improvements:

Preferentially binds interrupts to CPUs on the NUMA (Non-Uniform Memory Access) node associated with the ENI's PCIe interface

Optimizes logic for tuning multiple network devices

Binds interrupts based on the ratio of queues to CPUs

Optimizes binding based on CPU sibling positions

Resolves high latency issues during memory access across NUMA nodes

Performance improvements: Network performance tests show 5–30% improvement in most PPS (packets per second) and bps (bits per second) metrics compared to the older version.

Optimize performance

Configure queue count and IRQ Affinity based on your workload to achieve optimal network performance. To ensure load balancing:

Assign an appropriate number of queues per CPU core based on your network load

Configure IRQ Affinity according to your system's actual throughput and latency requirements

Test various configurations to find the optimal setup for your specific workload

Monitor CPU utilization and network metrics (throughput, latency, packet loss) to evaluate the effectiveness of your configuration.
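One rough way to judge whether interrupts are balanced is to total the per-CPU counts for the ENI's queue IRQs. The sketch below reads /proc/interrupts-style text; per_cpu_irq_totals is a hypothetical helper name, and the virtio0 pattern is an assumption about how the queues are named on your instance.

```shell
#!/bin/sh
# per_cpu_irq_totals PATTERN: read /proc/interrupts-style text on stdin and
# print, for each CPU column, the total interrupt count over all rows whose
# last column matches PATTERN. A heavily skewed result suggests that queue
# interrupts are concentrated on a few cores.
per_cpu_irq_totals() {
    awk -v pat="$1" '
        NR == 1 { ncpu = NF; for (i = 1; i <= NF; i++) name[i] = $i; next }
        $NF ~ pat { for (i = 1; i <= ncpu; i++) total[i] += $(i + 1) }
        END { for (i = 1; i <= ncpu; i++) print name[i], total[i] + 0 }
    '
}

# Usage on a live system (the "virtio0" pattern is an assumption):
#   per_cpu_irq_totals 'virtio0' < /proc/interrupts
```

Comparing two samples taken a few seconds apart shows where new interrupts are landing, which is more informative than the totals accumulated since boot.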