This topic describes how to run the Weather Research and Forecasting (WRF) model in an Elastic High Performance Computing (E-HPC) cluster to perform meteorological simulations.

Background information

WRF is an open source mesoscale numerical weather prediction system that is widely used for weather forecasting. It supports a wide range of atmospheric research and simulation tasks, including historical data reconstruction and future weather forecasting, on a variety of computing platforms. For more information, visit the official WRF website.

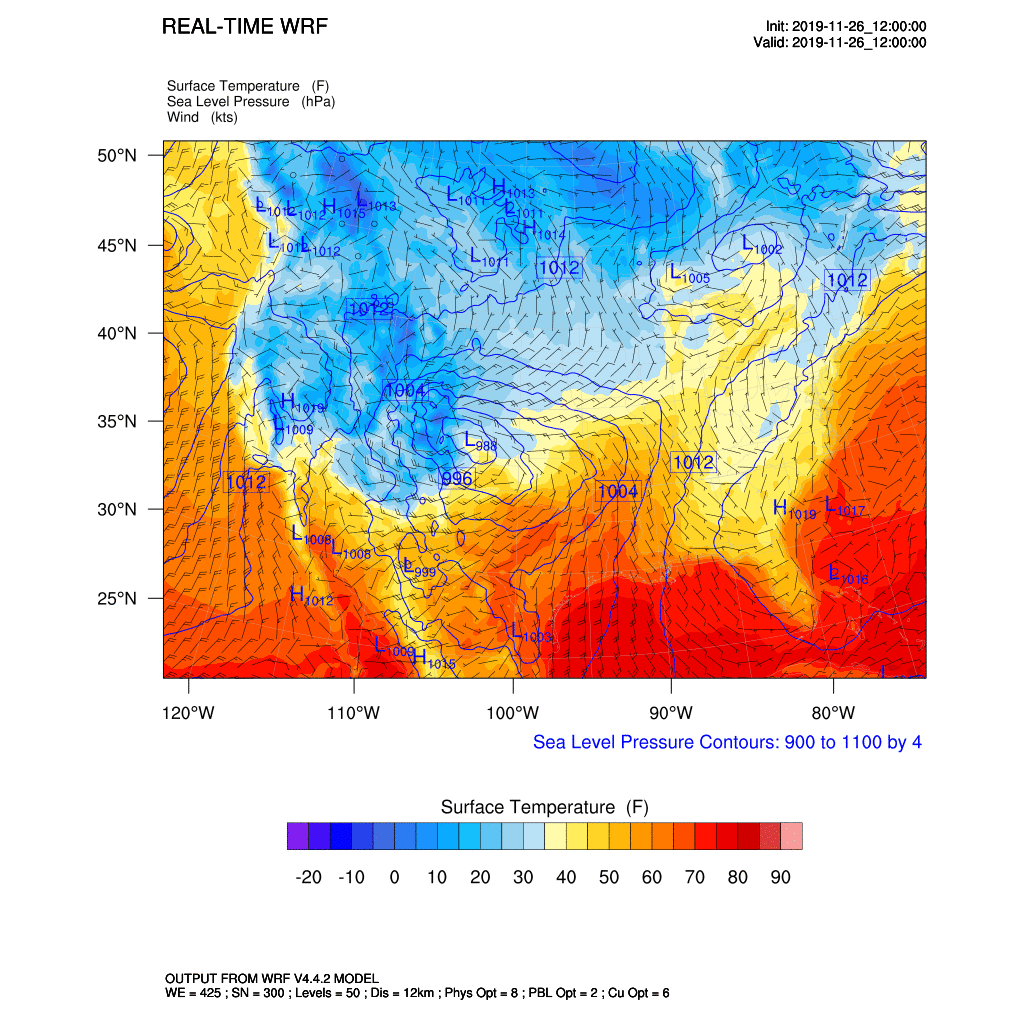

This topic uses a simulation at a 12-km resolution to show how WRF works with E-HPC. A winter storm that occurred in the United States in 2019 is used as the example. The simulation analyzes key meteorological factors such as precipitation, temperature, and wind speed, and illustrates their changes and distribution over a 12-hour period.

Preparations

Use one of the following methods to create an E-HPC cluster:

Create a cluster by using a template. For more information, see Create a cluster by using a template.

Important: If you want to create a cluster by using a template, you must modify the node configuration on the Compute Node and Queue page before you create the cluster.

Manually create a cluster. For more information, see Create a standard cluster.

In this example, the following configurations are used for the cluster:

| Item | Configuration |
| --- | --- |
| Series | Standard Edition |
| Deployment Mode | Public cloud cluster |
| Cluster Type | SLURM |
| Node configurations | One management node, one logon node, and four compute nodes. Management node: Elastic Compute Service (ECS) instance of the ecs.c8ae.xlarge type with 4 vCPUs and 8 GiB of memory. Logon node: ECS instance of the ecs.c8ae.xlarge type with 4 vCPUs and 8 GiB of memory. Compute nodes: ECS instances of the ecs.c8ae.16xlarge type, each with 64 vCPUs and 128 GiB of memory. Compute nodes are interconnected over the eRDMA network. |
| Image | Alibaba Cloud Linux 2.1903 LTS 64-bit |

Create a cluster user. For more information, see Manage users.

The user is used to log on to the cluster, compile software, and submit jobs. The following settings are used in this example:

Username: testuser

User group: sudo permissions group

(Conditionally required) If you manually create the cluster, you must install the wrf-aocc software. If you create the cluster by using a template, skip this step. For more information, see Install and uninstall software in a cluster.

Note: After the wrf-aocc software is installed in the cluster, all of the software that it depends on is automatically installed.

Step 1: Verify and configure the environment

Log on to E-HPC Portal. For more information, see Log on to E-HPC Portal.

Click the icon in the upper-right corner to use Workbench to connect to the cluster.

Run the following command to check whether the software is installed. This confirms that the required versions of the environment modules are available to load.

    module avail

Sample output:

Configure the conus12km workload.

Run the following commands to download and decompress the workload file, and then link the WRF run files into the workload directory:

    cd ~
    wget https://ehpc-perf.oss-cn-hangzhou.aliyuncs.com/yt710/WRFV4/input-data/v4.4_bench_conus12km.tar.gz
    tar -zxvf v4.4_bench_conus12km.tar.gz
    cd v4.4_bench_conus12km
    ln -s /opt/ehpc_common_softwares/nwp/wrf-aocc/4.4.2/aliyun/2/x86_64/run/* .

Run the following commands to download and decompress the NCAR Command Language (NCL) package:

    cd ~
    wget https://ehpc-perf.oss-cn-hangzhou.aliyuncs.com/AMD-Genoa/WRFV4/ncl_draw.tar.gz
    tar -zxvf ncl_draw.tar.gz
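An interrupted download often surfaces only when tar fails partway through extraction. As a minimal sketch, the archive can be tested before it is unpacked. The helper name extract_verified is hypothetical and is not part of E-HPC or WRF.

```shell
# Hypothetical helper: unpack a .tar.gz only after it passes integrity checks.
# Usage: extract_verified <archive.tar.gz> <destination-directory>
extract_verified() {
    archive=$1
    dest=$2
    # Reject a missing or empty file (for example, an interrupted wget).
    if [ ! -s "$archive" ]; then
        echo "missing or empty archive: $archive" >&2
        return 1
    fi
    # gzip -t checks the compressed stream; tar -tzf reads the archive listing.
    if ! gzip -t "$archive" 2>/dev/null || ! tar -tzf "$archive" >/dev/null 2>&1; then
        echo "corrupt archive: $archive" >&2
        return 1
    fi
    # Extract only after both checks have passed.
    mkdir -p "$dest" && tar -xzf "$archive" -C "$dest"
}
```

For example, `extract_verified ~/v4.4_bench_conus12km.tar.gz ~` would unpack the workload into the home directory only if the archive is intact.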

Run the following command to modify the ~/.bashrc file:

    vim ~/.bashrc

Add the following code to the file:

    module load aocc/4.0.0 aocl/4.0.1 gcc/12.3.0 hdf5/1.10.5 libfabric/1.16.0 mpich-aocc/4.0.3 netcdf/4.8.0 szip/2.0.0 wrf-aocc/4.4.2 zlib/1.2.13
    export NCARG_ROOT=~/ncl
    export PATH=$NCARG_ROOT/bin:$PATH

Run the following command on the four compute nodes to install NCL and ImageMagick:

Note: You can send a remote command to all four nodes in the console for quick execution. For more information, see Send commands to nodes.

    sudo yum install -y ncl ImageMagick

Run the following commands to create a job execution file named png2gif.sh:

    cd ~
    vim png2gif.sh
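Before you move on to Step 2, it can help to confirm that the tools configured in this step are reachable from the shell. The following is a minimal sketch, not an official E-HPC check. The helper name missing_cmds is hypothetical, and the command names ncl, convert (ImageMagick), and mpirun (MPICH) are assumptions about what the packages and modules above place on PATH.

```shell
# Hypothetical helper: print each command from the argument list that
# cannot be found on PATH. Returns 0 if all were found, 1 otherwise.
missing_cmds() {
    status=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "$cmd"
            status=1
        fi
    done
    return "$status"
}

# Assumed usage on a compute node, in a new shell so that ~/.bashrc is applied:
#   missing_cmds ncl convert mpirun || echo "environment incomplete" >&2
```

If the helper prints a name, the corresponding package or module is likely not installed or not loaded on that node.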

Step 2: Submit a job

After you verify and configure the environment, close the Workbench dialog box and perform the following steps:

In the top navigation bar, click Task Management.

In the upper part of the page, click submitter.

On the Create Job page, specify the parameters. The following tables describe the parameters:

Note: Retain the default settings for other parameters.

Basic parameters

| Parameter | Example | Description |
| --- | --- | --- |
| Job Name | wrf-conus12 | The name of the job. If you need to automatically download and decompress job files, the decompression directory uses the name of the job. |
| Output File | Variable name: out. Variable value: test.gif | The output file of the job. |
| Queue | comp | The queue in which you want to run the job. If you added compute nodes to a queue when you created the cluster, submit the job to that queue. Otherwise, the job fails to run. If you did not add compute nodes to a queue, the job is submitted to the default queue of the scheduler. |
| Command | Edit Online | The job execution command that you want to submit to the scheduler. You can enter a command or the relative path of a script file. E-HPC Portal supports the following methods: Edit Online, Use Local File, and Use Uploaded File. |
| Number of Nodes | 4 | The number of compute nodes that are used to run the job. |
| Number of Tasks | 4 | The number of tasks (processes) that each compute node uses to run the job. |
| Number of Threads | 2 | The number of threads that are used by a task. If you do not specify this parameter, the number of threads is 1. |

Advanced parameters

| Parameter | Example | Description |
| --- | --- | --- |
| MPI Profile | Enable | Specifies whether to enable MPI performance profiling. |
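Because the cluster uses the SLURM scheduler, the example values above correspond roughly to standard sbatch options. The following sketch shows that assumed mapping and the process and thread counts that result from it. It does not describe what E-HPC Portal generates internally, and the file name wrf-conus12.sbatch is illustrative only.

```shell
# Example values from the parameter tables above.
job_name=wrf-conus12
queue=comp
nodes=4
tasks_per_node=4
threads_per_task=2

# Derived totals: MPI processes across the job and the threads they run.
total_tasks=$((nodes * tasks_per_node))
threads_per_node=$((tasks_per_node * threads_per_task))
total_threads=$((total_tasks * threads_per_task))

# Write an sbatch header with the assumed equivalent options
# (illustrative file name, created in the current directory).
cat > "${job_name}.sbatch" <<EOF
#!/bin/bash
#SBATCH --job-name=${job_name}
#SBATCH --partition=${queue}
#SBATCH --nodes=${nodes}
#SBATCH --ntasks-per-node=${tasks_per_node}
#SBATCH --cpus-per-task=${threads_per_task}
EOF

echo "MPI processes: ${total_tasks}, threads per node: ${threads_per_node}, total threads: ${total_threads}"
```

With the example values, the script prints `MPI processes: 16, threads per node: 8, total threads: 32`. Each compute node runs 4 processes with 2 threads each, so it runs 8 threads per node.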

Click Submit.

After you complete the operation, you can see that the job is in the RUNNING status.
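The job status can also be checked from a shell on the logon node with the standard SLURM client tools. The following is a minimal sketch. The helper name job_state is hypothetical, and it assumes that you know the SLURM job ID and that SLURM accounting is enabled for the sacct fallback.

```shell
# Hypothetical helper: print the scheduler state of a job
# (for example PENDING, RUNNING, or COMPLETED).
# Usage: job_state <slurm-job-id>
job_state() {
    # squeue lists only jobs that are still pending or running.
    state=$(squeue -h -j "$1" -o "%T" 2>/dev/null) || state=""
    if [ -z "$state" ]; then
        # Fall back to accounting records for jobs that have finished.
        state=$(sacct -n -X -j "$1" -o State 2>/dev/null | head -n 1 | tr -d ' ') || state=""
    fi
    echo "${state:-UNKNOWN}"
}
```

For example, `job_state 42` prints the state of job 42, or `UNKNOWN` if neither tool returns a record.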

Step 3: View the job details

On the Task Management page, find the submitted job and click View in the Actions column.

On the job details page, you can view the details of the job, as shown in the following figure:

When the job is in the COMPLETED status, click the Input and Output Files tab and select Output File from the drop-down list in the upper-right corner to view the running result.

The following GIF figure shows a sample result: