This topic describes how to migrate data from an Amazon RDS for Oracle instance to an ApsaraDB RDS for MySQL instance by using Data Transmission Service (DTS). DTS supports schema migration, full data migration, and incremental data migration. When you configure a data migration task, you can select all of the supported migration types to ensure service continuity.

Prerequisites

The Public accessibility option of the Amazon RDS for Oracle instance is set to Yes. The setting ensures that DTS can access the Amazon RDS for Oracle instance over the Internet.

The database version of the Amazon RDS for Oracle instance is 9i, 10g, 11g, or 12c or later (non-multitenant architecture).

The database version of the ApsaraDB RDS for MySQL instance is 5.6 or 5.7.

The available storage space of the ApsaraDB RDS for MySQL instance is at least twice the total size of the data in the Amazon RDS for Oracle instance.

Note: The binary log files that are generated during data migration occupy some storage space. They are automatically cleared after data migration is complete.
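The storage prerequisite is a simple calculation. The following Python sketch checks it. It assumes that you have already measured the total size of the source data and the free space of the destination instance in bytes. The function names are hypothetical and are not part of any DTS or RDS SDK.

```python
# Hypothetical helpers for the "at least twice the source data size" prerequisite.
GIB = 1024 ** 3


def required_free_bytes(source_data_bytes: int, factor: int = 2) -> int:
    """Smallest free space on the destination that satisfies the prerequisite."""
    if source_data_bytes < 0:
        raise ValueError("source_data_bytes must be non-negative")
    return factor * source_data_bytes


def has_enough_storage(source_data_bytes: int, dest_free_bytes: int, factor: int = 2) -> bool:
    """True if the destination free space is at least factor x the source data size."""
    return dest_free_bytes >= required_free_bytes(source_data_bytes, factor)


if __name__ == "__main__":
    source = 120 * GIB  # example: 120 GiB of data in the Oracle source
    print(required_free_bytes(source) // GIB)  # 240
    print(has_enough_storage(source, 200 * GIB))  # False
```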

You are familiar with the capabilities and limits of DTS for migrating data from an Oracle database. You can use Advanced Database & Application Migration (ADAM) to evaluate the source database before migration, which helps you migrate data to the cloud smoothly. For more information, see Prepare an Oracle database and Overview.

Limits

DTS uses the read and write resources of the source and destination databases during full data migration. This may increase the loads on the database servers. If the database performance is poor, the specifications are low, or the data volume is large, the database services may become unavailable. For example, DTS occupies a large amount of read and write resources in the following cases: a large number of slow SQL queries are performed on the source database, the tables have no primary keys, or a deadlock occurs in the destination database. Before you migrate data, evaluate the impact of data migration on the performance of the source and destination databases. We recommend that you migrate data during off-peak hours, for example, when the CPU utilization of both the source and destination databases is less than 30%.
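To pick an off-peak window, you can compare hourly CPU utilization of the two databases against the 30% example threshold. The following Python sketch does this. It assumes that you have collected 24 hourly averages (in percent) for each database from your own monitoring. The function names are hypothetical.

```python
from typing import List, Sequence


def off_peak_hours(source_cpu: Sequence[float], dest_cpu: Sequence[float],
                   threshold: float = 30.0) -> List[int]:
    """Hours (0-23) in which both databases stay below the CPU threshold (percent)."""
    if len(source_cpu) != len(dest_cpu):
        raise ValueError("source_cpu and dest_cpu must cover the same hours")
    return [hour for hour, (src, dst) in enumerate(zip(source_cpu, dest_cpu))
            if src < threshold and dst < threshold]


def longest_window(hours: List[int]) -> List[int]:
    """Longest run of consecutive hours; does not wrap around midnight."""
    best: List[int] = []
    current: List[int] = []
    for hour in hours:
        if current and hour == current[-1] + 1:
            current.append(hour)
        else:
            current = [hour]
        if len(current) > len(best):
            best = list(current)
    return best


if __name__ == "__main__":
    # Example data: busy from 06:00 to 18:00, quiet otherwise.
    source = [20.0] * 6 + [55.0] * 12 + [25.0] * 6
    dest = [10.0] * 24
    quiet = off_peak_hours(source, dest)
    print(longest_window(quiet))  # [0, 1, 2, 3, 4, 5]
```

Choosing the longest contiguous window matters because full data migration of a large database can run for several hours.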

The tables to be migrated in the source database must have PRIMARY KEY or UNIQUE constraints, and the values in the constrained fields must be unique. Otherwise, the destination database may contain duplicate data records.
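Before migration, you can list the tables that lack a PRIMARY KEY or UNIQUE constraint. The following Python sketch assumes that you have exported the table names of a schema and the (TABLE_NAME, CONSTRAINT_TYPE) pairs from Oracle's ALL_CONSTRAINTS view, for example with `SELECT table_name, constraint_type FROM all_constraints WHERE owner = 'YOUR_SCHEMA';`. The schema name and the helper are hypothetical. In ALL_CONSTRAINTS, P denotes a primary key and U denotes a unique key.

```python
from typing import Iterable, List, Tuple

# CONSTRAINT_TYPE codes in Oracle's ALL_CONSTRAINTS view: P = primary key, U = unique key.
KEY_CONSTRAINT_TYPES = {"P", "U"}


def tables_without_keys(tables: Iterable[str],
                        constraints: Iterable[Tuple[str, str]]) -> List[str]:
    """Return the tables that have neither a PRIMARY KEY nor a UNIQUE constraint."""
    keyed = {table for table, ctype in constraints if ctype in KEY_CONSTRAINT_TYPES}
    return sorted(table for table in tables if table not in keyed)


if __name__ == "__main__":
    tables = ["ORDERS", "ORDER_ITEMS", "AUDIT_LOG"]
    constraints = [("ORDERS", "P"), ("ORDER_ITEMS", "U"), ("ORDER_ITEMS", "R"), ("AUDIT_LOG", "C")]
    print(tables_without_keys(tables, constraints))  # ['AUDIT_LOG']
```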

DTS uses the ROUND(COLUMN,PRECISION) function to retrieve values from columns of the FLOAT or DOUBLE data type. If you do not specify a precision, DTS sets the precision for the FLOAT data type to 38 digits and the precision for the DOUBLE data type to 308 digits. Check whether these precision settings meet your business requirements.

DTS automatically creates a destination database in the ApsaraDB RDS for MySQL instance. However, if the name of the source database does not comply with the database naming conventions of ApsaraDB RDS for MySQL, you must manually create a database in the ApsaraDB RDS for MySQL instance before you configure the data migration task.
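To judge whether a precision setting is acceptable, it can help to preview how values look after rounding. The following Python sketch approximates Oracle's ROUND(n, integer) for NUMBER-based values, which rounds ties away from zero, by using `decimal.ROUND_HALF_UP`. It is an illustration under that assumption, not the code that DTS runs, and the function name is hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP, localcontext


def preview_round(value: str, precision: int) -> Decimal:
    """Round `value` to `precision` decimal places, with ties rounded away from zero."""
    with localcontext() as ctx:
        ctx.prec = 1000  # room for precision values as large as 308 digits
        quantum = Decimal(1).scaleb(-precision)  # for example, 0.01 when precision is 2
        return Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    print(preview_round("3.14159", 2))  # 3.14
    print(preview_round("-2.5", 0))  # -3
```

Values are passed as strings so that the preview is not affected by binary floating-point representation in Python itself.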

Note: For more information about the database naming conventions of ApsaraDB RDS for MySQL databases and how to create a database, see Manage databases.

If a data migration task fails, DTS automatically resumes the task. Before you switch your workloads to the destination instance, stop or release the data migration task. Otherwise, the data in the source database overwrites the data in the destination instance after the task is resumed.

Billing rules

| Migration type | Task configuration fee | Internet traffic fee |
| --- | --- | --- |
| Schema migration and full data migration | Free of charge. | Charged only when data is migrated from Alibaba Cloud over the Internet. For more information, see Billing overview. |
| Incremental data migration | Charged. For more information, see Billing overview. | Charged only when data is migrated from Alibaba Cloud over the Internet. For more information, see Billing overview. |

Migration types

Schema migration

DTS supports schema migration for the following types of objects: table, index, constraint, and sequence. DTS does not support schema migration for the following types of objects: view, synonym, trigger, stored procedure, function, package, and user-defined type.

Full data migration

DTS migrates historical data of the required objects from the source database in the Amazon RDS for Oracle instance to the destination database in the ApsaraDB RDS for MySQL instance.

Incremental data migration

DTS retrieves redo log files from the source database in the Amazon RDS for Oracle instance. Then, DTS synchronizes incremental data from the source database in the Amazon RDS for Oracle instance to the destination database in the ApsaraDB RDS for MySQL instance. Incremental data migration allows data to be migrated smoothly without interrupting the services of self-managed applications when you migrate data from an Oracle database.

SQL operations that can be migrated during incremental data migration

INSERT, DELETE, and UPDATE

CREATE TABLE

Note: If a CREATE TABLE operation creates a partitioned table or a table that contains functions, DTS does not synchronize the operation.

ALTER TABLE (only ADD COLUMN, DROP COLUMN, RENAME COLUMN, and ADD INDEX)

DROP TABLE

RENAME TABLE, TRUNCATE TABLE, and CREATE INDEX

Permissions required for database accounts

| Database | Schema migration | Full data migration | Incremental data migration |
| --- | --- | --- | --- |
| Amazon RDS for Oracle instance | Permissions of the schema owner | Permissions of the schema owner | Permissions of the master user |
| ApsaraDB RDS for MySQL instance | Read and write permissions on the destination database | Read and write permissions on the destination database | Read and write permissions on the destination database |

For more information about how to create a database account and grant permissions to the database account, see the following topics:

Amazon RDS for Oracle instance: CREATE USER and GRANT

ApsaraDB RDS for MySQL instance: Create databases and accounts for an ApsaraDB RDS for MySQL instance

Data type mappings

For more information, see Data type mappings between heterogeneous databases.

Before you begin

Log on to the Amazon RDS Management Console.

Go to the basic information page of the Amazon RDS for Oracle instance.

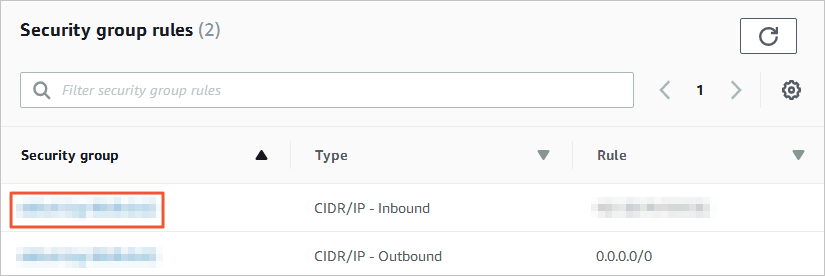

In the Security group rules section, click the name of the security group to which the existing inbound rule belongs.

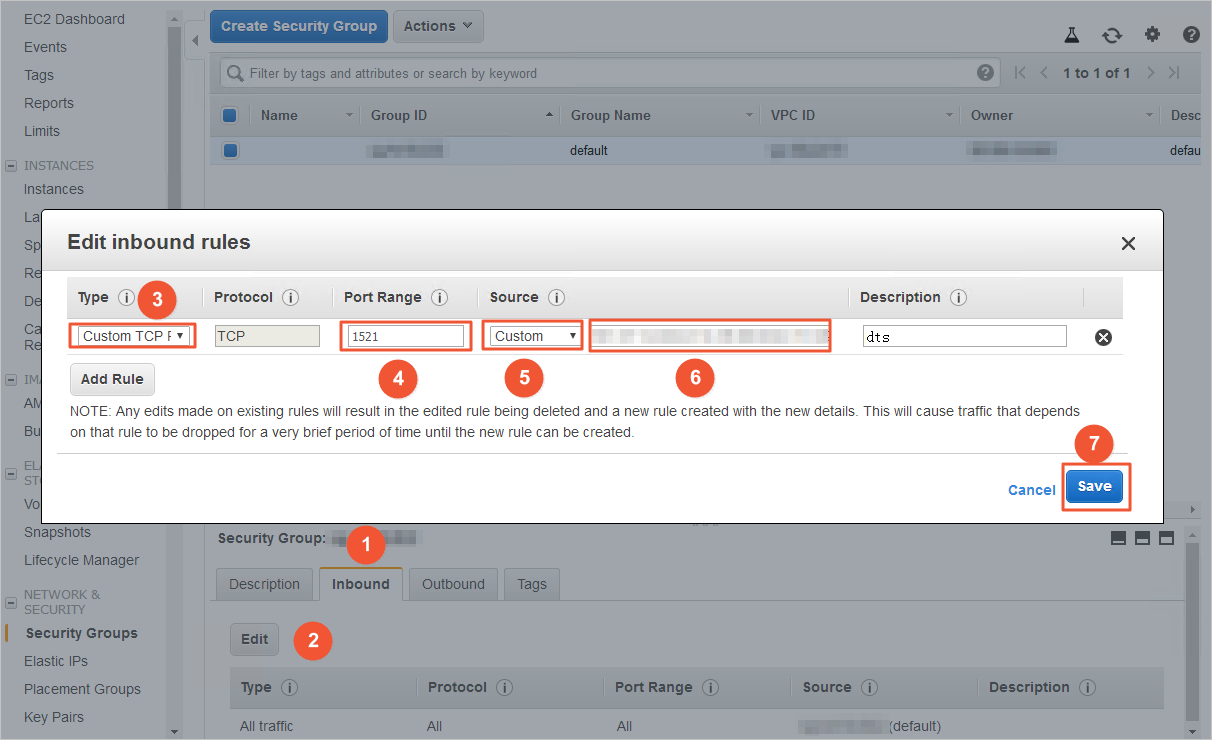

On the Security Groups page, click the Inbound tab in the Security Group section. On the Inbound tab, click Edit. In the Edit inbound rules dialog box, add the CIDR blocks of DTS servers that reside in the corresponding region to the inbound rule. For more information, see Add the CIDR blocks of DTS servers.

Note: You need to add only the CIDR blocks of DTS servers that reside in the same region as the destination database. For example, if the source database resides in the Singapore region and the destination database resides in the China (Hangzhou) region, you need to add only the CIDR blocks of DTS servers that reside in the China (Hangzhou) region.

You can add all of the required CIDR blocks to the inbound rule at once.

If you have other questions, see the official documentation of Amazon or contact technical support.
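After you edit the inbound rule, you can check whether every required DTS CIDR block is fully covered by a configured rule. The following Python sketch uses the standard `ipaddress` module. The CIDR values in the example are placeholder documentation ranges from RFC 5737, not real DTS server blocks, and the function name is hypothetical.

```python
import ipaddress
from typing import Iterable, List


def missing_cidr_blocks(required: Iterable[str], configured: Iterable[str]) -> List[str]:
    """Return the required CIDR blocks that no configured inbound rule fully covers."""
    rules = [ipaddress.ip_network(cidr, strict=False) for cidr in configured]
    missing = []
    for block in required:
        net = ipaddress.ip_network(block, strict=False)
        # subnet_of() requires both networks to use the same IP version.
        if not any(net.version == rule.version and net.subnet_of(rule) for rule in rules):
            missing.append(block)
    return missing


if __name__ == "__main__":
    # Placeholder ranges from RFC 5737, NOT real DTS CIDR blocks.
    required = ["203.0.113.0/26", "198.51.100.0/24"]
    configured = ["203.0.113.0/24"]
    print(missing_cidr_blocks(required, configured))  # ['198.51.100.0/24']
```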

Modify the log settings of the Amazon RDS for Oracle instance. Skip this step if you do not need to perform incremental data migration.

If the database version of the Amazon RDS for Oracle instance is 12c or later (non-multitenant architecture), perform the following steps to configure the log settings:

Use the master user account and the SQL*Plus tool to connect to the Amazon RDS for Oracle instance.

Enable archive logging and supplemental logging.

Archive logging

Execute the following statement to check whether archive logging is enabled:

SELECT LOG_MODE FROM v$database;

If the returned value is ARCHIVELOG, archive logging is enabled.

View and set the retention period for archived logs.

Note: We recommend that you set the retention period of archived logs to at least 72 hours. In this example, the retention period is set to 72 hours.

set serveroutput on
exec rdsadmin.rdsadmin_util.show_configuration;
exec rdsadmin.rdsadmin_util.set_configuration('archivelog retention hours', 72);
commit;

Supplemental logging

Enable supplemental logging at the database or table level:

Enable database-level supplemental logging

Execute the following statement to check whether database-level supplemental logging is enabled:

SELECT supplemental_log_data_min, supplemental_log_data_pk, supplemental_log_data_ui FROM v$database;

Enable primary key and unique key supplemental logging at the database level:

exec rdsadmin.rdsadmin_util.alter_supplemental_logging('ADD', 'PRIMARY KEY');
exec rdsadmin.rdsadmin_util.alter_supplemental_logging('ADD', 'UNIQUE');

Enable table-level supplemental logging by using one of the following methods:

Enable table-level supplemental logging for all columns:

exec rdsadmin.rdsadmin_util.alter_supplemental_logging('ADD', 'ALL');

Enable primary key supplemental logging at the table level:

exec rdsadmin.rdsadmin_util.alter_supplemental_logging('ADD', 'PRIMARY KEY');

Grant fine-grained permissions to the database account of the Amazon RDS for Oracle instance.

If the database version of the Amazon RDS for Oracle instance is 9i, 10g, or 11g, perform the following steps to configure the log settings:

Use the master user account and the SQL*Plus tool to connect to the Amazon RDS for Oracle instance.

Run the following command to check whether the Amazon RDS for Oracle instance is running in ARCHIVELOG mode:

archive log list;

Note: If the instance is running in NOARCHIVELOG mode, switch the mode to ARCHIVELOG. For more information, see Managing Archived Redo Logs.

Enable force logging.

exec rdsadmin.rdsadmin_util.force_logging(p_enable => true);

Enable supplemental logging for primary keys.

begin
  rdsadmin.rdsadmin_util.alter_supplemental_logging(p_action => 'ADD', p_type => 'PRIMARY KEY');
end;
/

Enable supplemental logging for unique keys.

begin
  rdsadmin.rdsadmin_util.alter_supplemental_logging(p_action => 'ADD', p_type => 'UNIQUE');
end;
/

Set a retention period for archived logs.

begin
  rdsadmin.rdsadmin_util.set_configuration(name => 'archivelog retention hours', value => '24');
end;
/

Note: We recommend that you set the retention period of archived logs to at least 24 hours.

Commit the changes.

commit;
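After you run the statements above, you can review the results in one place. The following Python sketch assumes that you have copied the LOG_MODE, SUPPLEMENTAL_LOG_DATA_MIN, SUPPLEMENTAL_LOG_DATA_PK, and SUPPLEMENTAL_LOG_DATA_UI values from v$database into a dictionary, and the archivelog retention hours value reported by rdsadmin.rdsadmin_util.show_configuration. The helper is hypothetical. Pass 72 as the minimum for 12c or later and 24 for 9i, 10g, or 11g, which matches the recommendations in this topic.

```python
from typing import Dict, List


def missing_log_settings(v_database: Dict[str, str], retention_hours: int,
                         min_retention_hours: int) -> List[str]:
    """List log settings that do not yet meet the requirements for incremental migration."""
    problems: List[str] = []
    if v_database.get("LOG_MODE") != "ARCHIVELOG":
        problems.append("archive logging is not enabled")
    # Minimal supplemental logging shows IMPLICIT when it is enabled through PK/UI logging.
    if v_database.get("SUPPLEMENTAL_LOG_DATA_MIN") not in ("YES", "IMPLICIT"):
        problems.append("minimal supplemental logging is not enabled")
    if v_database.get("SUPPLEMENTAL_LOG_DATA_PK") != "YES":
        problems.append("primary key supplemental logging is not enabled")
    if v_database.get("SUPPLEMENTAL_LOG_DATA_UI") != "YES":
        problems.append("unique key supplemental logging is not enabled")
    if retention_hours < min_retention_hours:
        problems.append(
            f"archived logs are retained for {retention_hours} hours; "
            f"at least {min_retention_hours} hours is recommended"
        )
    return problems


if __name__ == "__main__":
    row = {"LOG_MODE": "ARCHIVELOG", "SUPPLEMENTAL_LOG_DATA_MIN": "IMPLICIT",
           "SUPPLEMENTAL_LOG_DATA_PK": "YES", "SUPPLEMENTAL_LOG_DATA_UI": "NO"}
    for problem in missing_log_settings(row, retention_hours=24, min_retention_hours=72):
        print(problem)
```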

Procedure (in the new DTS console)

Use one of the following methods to go to the Data Migration page and select the region in which the data migration instance resides.

DTS console

Log on to the DTS console.

In the left-side navigation pane, click Data Migration.

In the upper-left corner of the page, select the region in which the data migration instance resides.

DMS console

Note: The actual operation may vary based on the mode and layout of the DMS console. For more information, see Simple mode and Customize the layout and style of the DMS console.

Log on to the DMS console.

In the top navigation bar, move the pointer over .

From the drop-down list to the right of Data Migration Tasks, select the region in which the data migration instance resides.

Click Create Task to go to the task configuration page.

Configure the source and destination databases. The following table describes the parameters.

Warning: After you configure the source and destination databases, we recommend that you read the limits that are displayed in the upper part of the page. Otherwise, the task may fail or data inconsistency may occur.

Section

Parameter

Description

N/A

Task Name

The name of the DTS task. DTS automatically generates a task name. We recommend that you specify an informative name that makes it easy to identify the task. You do not need to specify a unique task name.

Source Database

Select a DMS database instance.

The instance that you want to use. You can choose whether to use an existing instance based on your business requirements.

If you select an existing instance, DTS automatically populates the parameters for the database.

If you do not use an existing instance, you must configure the database information below.

Note: In the DMS console, you can click Add DMS Database Instance to register a database with DMS. For more information, see Register an Alibaba Cloud database instance and Register a database hosted on a third-party cloud service or a self-managed database.

In the DTS console, you can register a database with DTS on the Database Connections page or the new configuration page. For more information, see Manage database connections.

Database Type

The type of the source database. Select Oracle.

Access Method

The access method of the source database. Select Public IP Address.

Instance Region

The region in which the Amazon RDS for Oracle instance resides.

Note: If the region in which the Amazon RDS for Oracle instance resides is not displayed in the drop-down list, select a region that is geographically closest to the Amazon RDS for Oracle instance.

Domain Name or IP

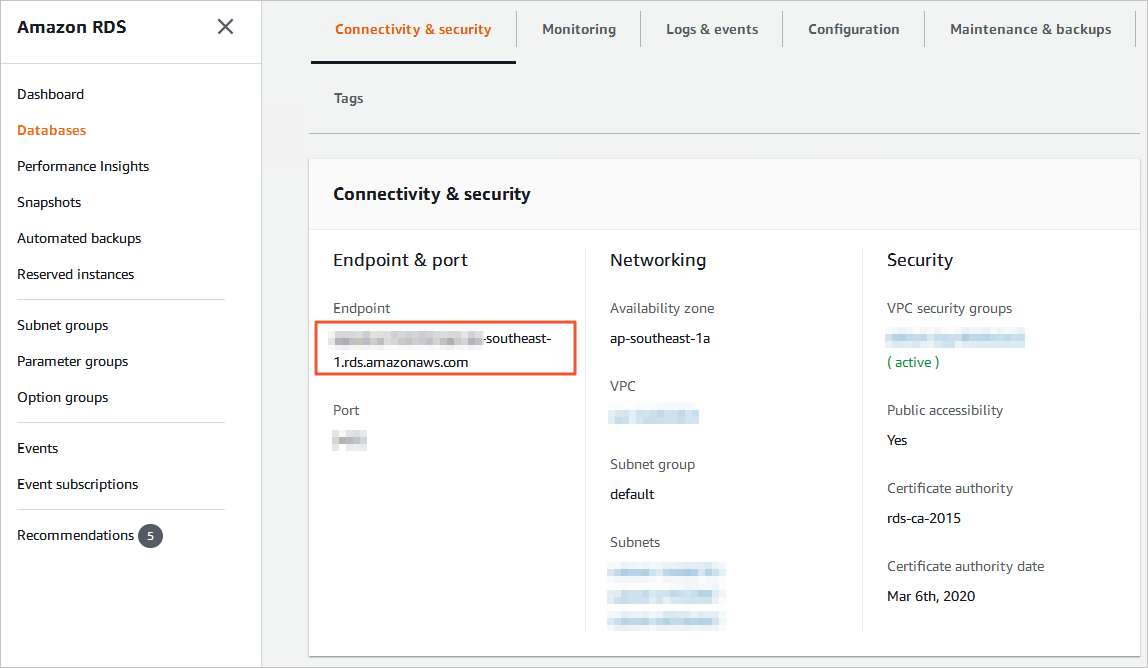

The endpoint that is used to access the Amazon RDS for Oracle instance.

Note: You can obtain the endpoint on the basic information page of the Amazon RDS for Oracle instance.

Port Number

The service port number of the Amazon RDS for Oracle instance. Default value: 1521.

Oracle Type

The architecture of the source database. If you select Non-RAC Instance, you must configure the SID parameter.

If you select RAC or PDB Instance, you must configure the Service Name parameter.

In this example, Non-RAC Instance is selected.

Database Account

The database account of the Amazon RDS for Oracle instance. For information about the permissions that are required for the account, see the Permissions required for database accounts section of this topic.

Database Password

The password that is used to access the database instance.

Destination Database

Select a DMS database instance.

The instance that you want to use. You can choose whether to use an existing instance based on your business requirements.

If you select an existing instance, DTS automatically populates the parameters for the database.

If you do not use an existing instance, you must configure the database information below.

Note: In the DMS console, you can click Add DMS Database Instance to register a database with DMS. For more information, see Register an Alibaba Cloud database instance and Register a database hosted on a third-party cloud service or a self-managed database.

In the DTS console, you can register a database with DTS on the Database Connections page or the new configuration page. For more information, see Manage database connections.

Database Type

The type of the destination database. Select MySQL.

Access Method

The access method of the destination database. Select Alibaba Cloud Instance.

Instance Region

The region in which the destination ApsaraDB RDS for MySQL instance resides.

RDS instance ID

The ID of the destination ApsaraDB RDS for MySQL instance.

Database Account

The database account of the ApsaraDB RDS for MySQL instance. For information about the permissions that are required for the account, see the Permissions required for database accounts section of this topic.

Database Password

The password that is used to access the database instance.

Encryption

Specifies whether to encrypt the connection to the destination database instance. Select Non-encrypted or SSL-encrypted based on your business requirements. If you set this parameter to SSL-encrypted, you must enable SSL encryption for the ApsaraDB RDS for MySQL instance before you configure the DTS task. For more information, see Use a cloud certificate to enable SSL encryption.
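The Oracle Type parameter for the source database determines whether the database is identified by a SID (Non-RAC Instance) or by a service name (RAC or PDB Instance). If you want to confirm the same values with your own Oracle client before you test connectivity in DTS, the following Python sketch builds the corresponding Oracle Net connect descriptor. The host name is a placeholder and the helper is hypothetical.

```python
from typing import Optional


def connect_descriptor(host: str, port: int = 1521, *, sid: Optional[str] = None,
                       service_name: Optional[str] = None) -> str:
    """Build an Oracle Net connect descriptor that uses either a SID or a service name."""
    if (sid is None) == (service_name is None):
        raise ValueError("specify exactly one of sid or service_name")
    target = f"(SID={sid})" if sid is not None else f"(SERVICE_NAME={service_name})"
    return (f"(DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST={host})(PORT={port}))"
            f"(CONNECT_DATA={target}))")


if __name__ == "__main__":
    # Non-RAC Instance: identify the database by SID (placeholder values).
    print(connect_descriptor("oracle-source.example.com", sid="ORCL"))
```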

In the lower part of the page, click Test Connectivity and Proceed, and then click Test Connectivity in the CIDR Blocks of DTS Servers dialog box that appears.

Note: Make sure that the CIDR blocks of DTS servers can be automatically or manually added to the security settings of the source and destination databases to allow access from DTS servers. For more information, see Add the CIDR blocks of DTS servers.

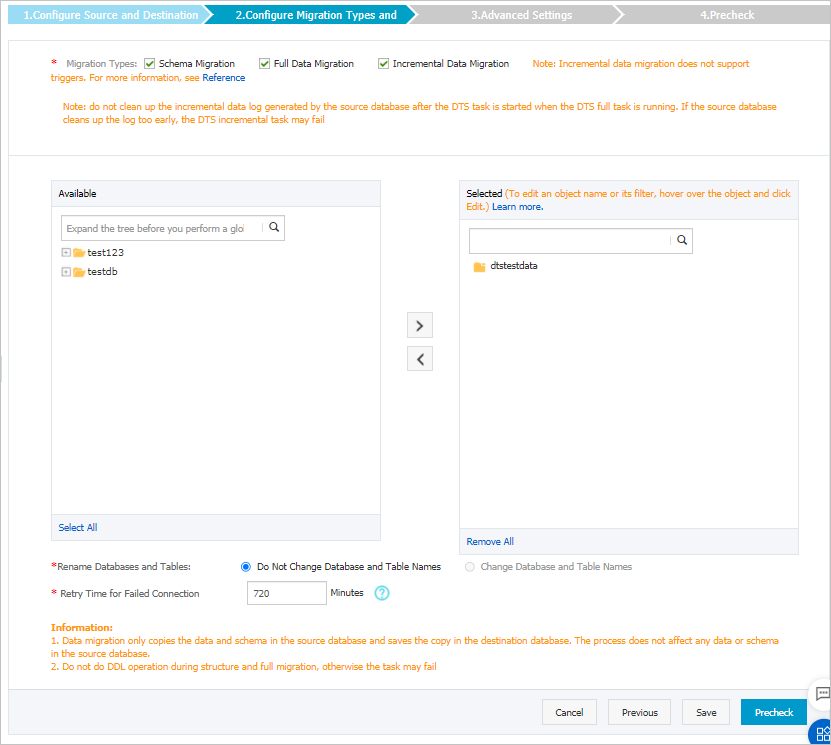

Configure the objects to be migrated.

On the Configure Objects page, configure the objects that you want to migrate.

Parameter

Description

Migration Types

To perform only full data migration, select Schema Migration and Full Data Migration.

To ensure service continuity during data migration, select Schema Migration, Full Data Migration, and Incremental Data Migration.

Note: If you do not select Schema Migration, make sure that a database and tables have been created in the destination database to receive data and that the object name mapping feature is enabled in the Selected Objects section.

If you do not select Incremental Data Migration, we recommend that you do not write data to the source database during data migration. This ensures data consistency between the source and destination databases.

Processing Mode of Conflicting Tables

Precheck and Report Errors: checks whether the destination database contains tables that use the same names as tables in the source database. If the source and destination databases do not contain tables that have identical table names, the precheck is passed. Otherwise, an error is returned during the precheck and the data migration task cannot be started.

Note: If the source and destination databases contain tables with identical names and the tables in the destination database cannot be deleted or renamed, you can use the object name mapping feature to rename the tables that are migrated to the destination database. For more information, see Map object names.

Ignore Errors and Proceed: skips the precheck for identical table names in the source and destination databases.

Warning: If you select Ignore Errors and Proceed, data inconsistency may occur and your business may be exposed to the following potential risks:

If the source and destination databases have the same schema, and a data record has the same primary key as an existing data record in the destination database, the following scenarios may occur:

During full data migration, DTS does not migrate the data record to the destination database. The existing data record in the destination database is retained.

During incremental data migration, DTS migrates the data record to the destination database. The existing data record in the destination database is overwritten.

If the source and destination databases have different schemas, only specific columns are migrated or the data migration task fails. Proceed with caution.

Source Objects

Select one or more objects from the Source Objects section and click the icon to add the objects to the Selected Objects section.

Note: You can select columns, tables, or databases as the objects to be migrated.

Selected Objects

To rename an object that you want to migrate to the destination instance, right-click the object in the Selected Objects section. For more information, see Map the name of a single object.

To rename multiple objects at a time, click Batch Edit in the upper-right corner of the Selected Objects section. For more information, see Map multiple object names at a time.

Note: If you use the object name mapping feature to rename an object, other objects that depend on the object may fail to be migrated.

To specify WHERE conditions to filter data, right-click a table in the Selected Objects section. In the dialog box that appears, specify the conditions. For more information, see Specify filter conditions.

To migrate only specific SQL operations performed on a database or table, right-click the object in the Selected Objects section. In the dialog box that appears, select the SQL operations that you want to migrate. For more information about the SQL operations that can be migrated, see the SQL operations that can be migrated during incremental data migration section of this topic.
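The Ignore Errors and Proceed behavior described under Processing Mode of Conflicting Tables can be modeled in a few lines. The following Python sketch is a simplified, hypothetical model, not DTS code. It assumes that the source and destination tables have the same schema and that the destination table is represented as a mapping from primary key to row.

```python
from typing import Any, Dict

Row = Dict[str, Any]


def apply_record(dest: Dict[Any, Row], pk: Any, row: Row, phase: str) -> None:
    """Write one source record into `dest` (a primary key -> row mapping)."""
    if phase not in ("full", "incremental"):
        raise ValueError("phase must be 'full' or 'incremental'")
    if phase == "full" and pk in dest:
        return  # full data migration: the existing destination record is retained
    dest[pk] = row  # new key, or incremental migration: the record is written (overwritten)


if __name__ == "__main__":
    dest = {1: {"name": "existing"}}
    apply_record(dest, 1, {"name": "from source"}, "full")
    print(dest[1])  # {'name': 'existing'}
    apply_record(dest, 1, {"name": "from source"}, "incremental")
    print(dest[1])  # {'name': 'from source'}
```

The model shows why the same conflicting key can end up with different values depending on when the conflicting write reaches DTS.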

Click Next: Advanced Settings to configure advanced settings.

Parameter

Description

Dedicated Cluster for Task Scheduling

By default, DTS schedules the task to the shared cluster if you do not specify a dedicated cluster. You can also purchase a dedicated cluster of the required specifications to run the data migration task. For more information, see What is a DTS dedicated cluster.

Retry Time for Failed Connections

The retry time range for failed connections. If the source or destination database fails to be connected after the data migration task is started, DTS immediately retries a connection within the retry time range. Valid values: 10 to 1,440. Unit: minutes. Default value: 720. We recommend that you set the parameter to a value greater than 30. If DTS is reconnected to the source and destination databases within the specified retry time range, DTS resumes the data migration task. Otherwise, the data migration task fails.

Note: If you specify different retry time ranges for multiple data migration tasks that share the same source or destination database, the value that is specified later takes precedence.

When DTS retries a connection, you are charged for the DTS instance. We recommend that you specify the retry time range based on your business requirements. You can also release the DTS instance at the earliest opportunity after the source database and destination instance are released.

Retry Time for Other Issues

The retry time range for other issues. For example, if DDL or DML operations fail to be performed after the data migration task is started, DTS immediately retries the operations within the retry time range. Valid values: 1 to 1,440. Unit: minutes. Default value: 10. We recommend that you set the parameter to a value greater than 10. If the failed operations are successfully performed within the specified retry time range, DTS resumes the data migration task. Otherwise, the data migration task fails.

Important: The value of the Retry Time for Other Issues parameter must be smaller than the value of the Retry Time for Failed Connections parameter.

Enable Throttling for Full Data Migration

Specifies whether to enable throttling for full data migration. During full data migration, DTS uses the read and write resources of the source and destination databases. This may increase the loads of the database servers. You can enable throttling for full data migration based on your business requirements. To configure throttling, you must configure the Queries per second (QPS) to the source database, RPS of Full Data Migration, and Data migration speed for full migration (MB/s) parameters. This reduces the loads of the destination database server.

Note: You can configure this parameter only if you select Full Data Migration for the Migration Types parameter.

Enable Throttling for Incremental Data Migration

Specifies whether to enable throttling for incremental data migration. To configure throttling, you must configure the RPS of Incremental Data Migration and Data migration speed for incremental migration (MB/s) parameters. This reduces the loads of the destination database server.

Note: You can configure this parameter only if you select Incremental Data Migration for the Migration Types parameter.

Environment Tag

The environment tag that is used to identify the data migration instance. You can select an environment tag based on your business requirements.

Actual Write Code

The encoding format in which data is written to the destination database. Select an encoding format based on your business requirements. In this example, you do not need to configure this parameter.

Configure ETL

Specifies whether to enable the extract, transform, and load (ETL) feature. For more information, see What is ETL? Valid values:

Yes: configures the ETL feature. You can enter data processing statements in the code editor. For more information, see Configure ETL in a data migration or data synchronization task.

No: does not configure the ETL feature.

Monitoring and Alerting

Specifies whether to configure alerting for the data migration task. If the task fails or the migration latency exceeds the specified threshold, the alert contacts receive notifications. Valid values:

No: does not configure alerting.

Yes: configures alerting. In this case, you must also configure the alert threshold and alert notification settings. For more information, see the Configure monitoring and alerting when you create a DTS task section of the Configure monitoring and alerting topic.
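The constraints on the two retry parameters can be checked before you submit the task. The following Python sketch encodes the valid ranges, the ordering rule, and the recommended values stated above. The function is hypothetical and does not call any DTS API.

```python
from typing import List, Tuple


def check_retry_settings(failed_connection_minutes: int,
                         other_issues_minutes: int) -> Tuple[List[str], List[str]]:
    """Return (errors, warnings) for the two retry parameters, in minutes."""
    errors: List[str] = []
    warnings: List[str] = []
    if not 10 <= failed_connection_minutes <= 1440:
        errors.append("Retry Time for Failed Connections must be 10 to 1,440 minutes")
    if not 1 <= other_issues_minutes <= 1440:
        errors.append("Retry Time for Other Issues must be 1 to 1,440 minutes")
    if other_issues_minutes >= failed_connection_minutes:
        errors.append("Retry Time for Other Issues must be smaller than "
                      "Retry Time for Failed Connections")
    # Recommendations rather than hard limits.
    if failed_connection_minutes <= 30:
        warnings.append("a value greater than 30 is recommended for failed connections")
    if other_issues_minutes <= 10:
        warnings.append("a value greater than 10 is recommended for other issues")
    return errors, warnings


if __name__ == "__main__":
    print(check_retry_settings(720, 10))
```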

Click Next Step: Data Verification to configure the data verification task.

For more information about how to use the data verification feature, see Configure a data verification task.

Save the task settings and run a precheck.

To view the parameters to be specified when you call the relevant API operation to configure the DTS task, move the pointer over Next: Save Task Settings and Precheck and click Preview OpenAPI parameters.

If you do not need to view or have viewed the parameters, click Next: Save Task Settings and Precheck in the lower part of the page.

Note: Before you can start the data migration task, DTS performs a precheck. You can start the data migration task only after the task passes the precheck.

If the task fails to pass the precheck, click View Details next to each failed item. After you analyze the causes based on the check results, troubleshoot the issues. Then, run a precheck again.

If an alert is triggered for an item during the precheck:

If an alert item cannot be ignored, click View Details next to the failed item and troubleshoot the issues. Then, run a precheck again.

If the alert item can be ignored, click Confirm Alert Details. In the View Details dialog box, click Ignore. In the message that appears, click OK. Then, click Precheck Again to run a precheck again. If you ignore the alert item, data inconsistency may occur, and your business may be exposed to potential risks.

Purchase an instance.

Wait until Success Rate becomes 100%. Then, click Next: Purchase Instance.

On the Purchase Instance page, configure the Instance Class parameter for the data migration instance. The following table describes the parameters.

Section

Parameter

Description

New Instance Class

Resource Group

The resource group to which the data migration instance belongs. Default value: default resource group. For more information, see What is Resource Management?

Instance Class

DTS provides instance classes that vary in the migration speed. You can select an instance class based on your business scenario. For more information, see Instance classes of data migration instances.

Read and agree to Data Transmission Service (Pay-as-you-go) Service Terms by selecting the check box.

Click Buy and Start. In the message that appears, click OK.

You can view the progress of the task on the Data Migration page.

Procedure (in the old DTS console)

Log on to the DTS console.

Note: If you are redirected to the Data Management (DMS) console, you can click the icon to go to the previous version of the DTS console.

In the left-side navigation pane, click Data Migration.

At the top of the Migration Tasks page, select the region in which the destination instance resides.

In the upper-right corner of the page, click Create Migration Task.

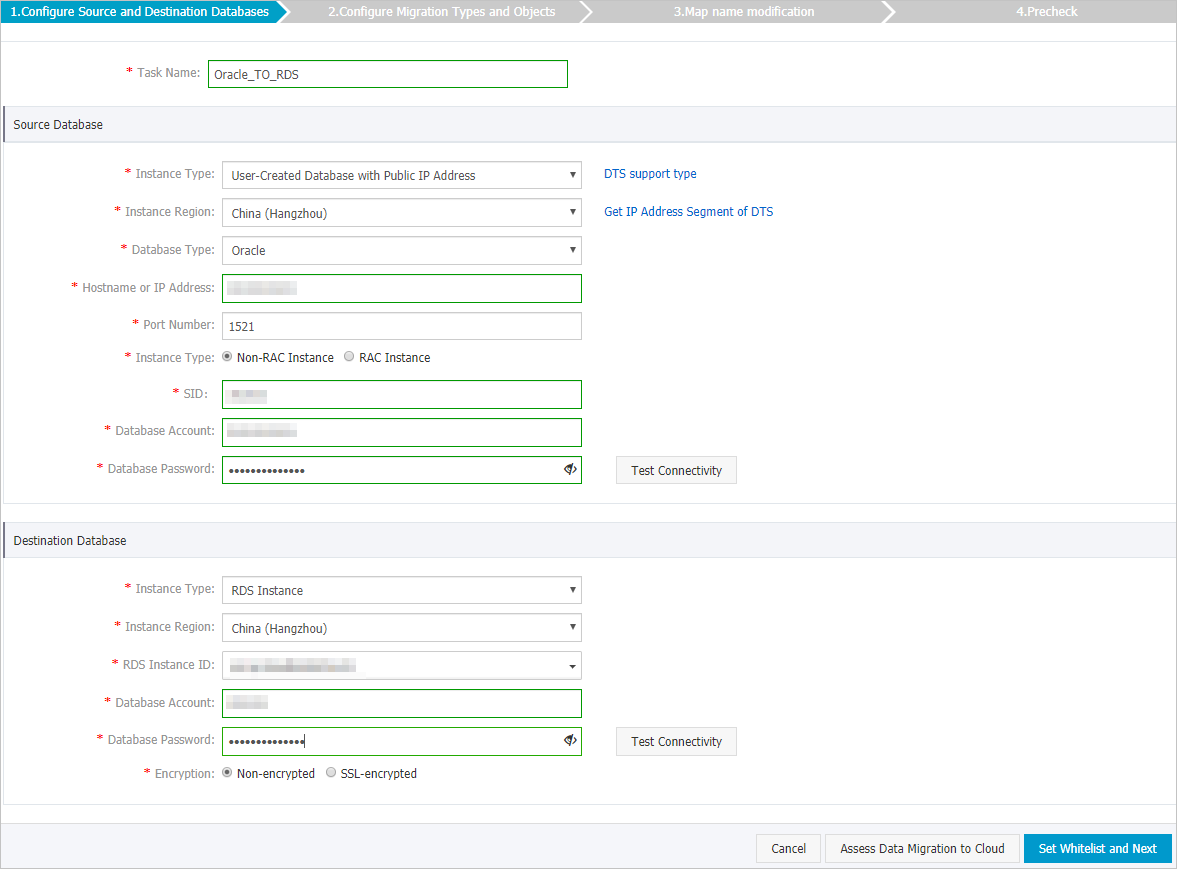

Configure the source and destination databases.

Section

Parameter

Description

N/A

Task Name

The task name that DTS automatically generates. We recommend that you specify a descriptive name that makes it easy to identify the task. You do not need to specify a unique task name.

Source Database

Instance Type

The instance type of the source database. Select User-Created Database with Public IP Address.

Instance Region

The region in which the source instance resides. If you select User-Created Database with Public IP Address as the instance type of the source database, you do not need to configure the Instance Region parameter.

Database Type

The type of the source database. Select Oracle.

Hostname or IP Address

The endpoint that is used to access the Amazon RDS for Oracle instance.

Note: You can obtain the endpoint on the basic information page of the Amazon RDS for Oracle instance.

Port Number

The service port number of the Amazon RDS for Oracle instance. Default value: 1521.

Instance Type

If you select Non-RAC Instance, you must configure the SID parameter.

If you select RAC Instance, you must configure the Service Name parameter.

In this example, Non-RAC Instance is selected. Then, configure the SID parameter.

Database Account

The database account of the Amazon RDS for Oracle instance. For information about the permissions that are required for the account, see the Permissions required for database accounts section of this topic.

Database Password

The password of the database account.

Note: After you configure the source database parameters, click Test Connectivity next to Database Password to verify whether the configured parameters are valid. If the configured parameters are valid, the Passed message is displayed. If the Failed message is displayed, click Check next to Failed and modify the source database parameters based on the check results.

Destination Database

Instance Type

The instance type of the destination database. Select RDS Instance.

Instance Region

The region in which the ApsaraDB RDS for MySQL instance resides.

RDS Instance ID

The ID of the ApsaraDB RDS for MySQL instance.

Database Account

The database account of the ApsaraDB RDS for MySQL instance. For information about the permissions that are required for the account, see the Permissions required for database accounts section of this topic.

Database Password

The password of the database account.

Note: After you configure the destination database parameters, click Test Connectivity next to Database Password to verify whether the configured parameters are valid. If the configured parameters are valid, the Passed message is displayed. If the Failed message is displayed, click Check next to Failed and modify the destination database parameters based on the check results.

In the lower-right corner of the page, click Set Whitelist and Next.

If the source or destination database instance is an Alibaba Cloud database instance, such as an ApsaraDB RDS for MySQL or ApsaraDB for MongoDB instance, or is a self-managed database hosted on Elastic Compute Service (ECS), DTS automatically adds the CIDR blocks of DTS servers to the whitelist of the database instance or ECS security group rules. If the source or destination database is a self-managed database on data centers or is from other cloud service providers, you must manually add the CIDR blocks of DTS servers to allow DTS to access the database. For more information about the CIDR blocks of DTS servers, see the "CIDR blocks of DTS servers" section of the Add the CIDR blocks of DTS servers to the security settings of on-premises databases topic.

Warning: If the CIDR blocks of DTS servers are automatically or manually added to the whitelist of the database or instance, or to the ECS security group rules, security risks may arise. Therefore, before you use DTS to migrate data, you must understand and acknowledge the potential risks and take preventive measures, including but not limited to the following measures: enhance the security of your username and password, limit the ports that are exposed, authenticate API calls, regularly check the whitelist or ECS security group rules and forbid unauthorized CIDR blocks, or connect the database to DTS by using Express Connect, VPN Gateway, or Smart Access Gateway.

Select the objects to be migrated and the migration types.

Setting

Description

Select the migration types

To perform only full data migration, select Schema Migration and Full Data Migration.

To ensure service continuity during data migration, select Schema Migration, Full Data Migration, and Incremental Data Migration.

Note: If Incremental Data Migration is not selected, we recommend that you do not write data to the source database during data migration. This ensures data consistency between the source and destination databases.

Select the objects to be migrated

Select one or more objects from the Available section and click the icon to move the objects to the Selected section.

Note: You can select columns, tables, or databases as the objects to be migrated.

By default, after an object is migrated to the destination database, the name of the object remains unchanged in the destination database. You can use the object name mapping feature to rename the objects that are migrated to the ApsaraDB RDS for MySQL instance. For more information, see Object name mapping.

If you use the object name mapping feature to rename an object, other objects that depend on the object may fail to be migrated.

Specify whether to rename objects

You can use the object name mapping feature to rename the objects that are migrated to the destination instance. For more information, see Object name mapping.

Specify the retry time range for a failed connection to the source or destination database

By default, if DTS fails to connect to the source or destination database, DTS retries within the next 12 hours. You can specify the retry time range based on your business requirements. If DTS reconnects to the source and destination databases within the specified retry time range, DTS resumes the data migration task. Otherwise, the data migration task fails.

Note: When DTS retries a connection, you are charged for the DTS instance. We recommend that you specify the retry time range based on your business requirements. You can also release the DTS instance at the earliest opportunity after the source and destination instances are released.

In the lower-right corner of the page, click Precheck.

Note: Before you can start the data migration task, DTS performs a precheck. You can start the data migration task only after the task passes the precheck.

If the task fails to pass the precheck, click the icon next to each failed item to view details. Troubleshoot the issues based on the causes and run a precheck again.

If you do not need to troubleshoot the issues, you can ignore failed items and run a precheck again.

After the task passes the precheck, click Next.

In the Confirm Settings dialog box, specify the Channel Specification parameter and select Data Transmission Service (Pay-As-You-Go) Service Terms.

Click Buy and Start to start the data migration task.

Schema migration and full data migration

We recommend that you do not manually stop the task during full data migration. Otherwise, the data migrated to the destination database may be incomplete. You can wait until the data migration task automatically stops.

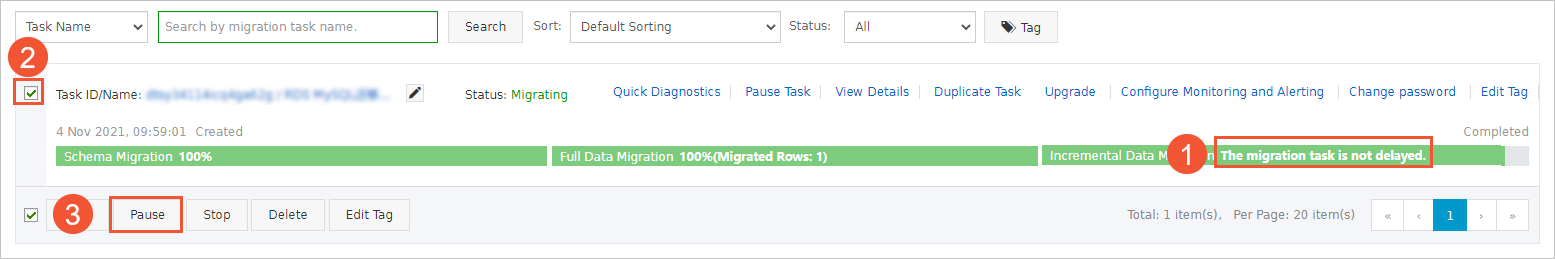

Schema migration, full data migration, and incremental data migration

An incremental data migration task does not automatically stop. You must manually stop the task.

Important: We recommend that you select an appropriate time to manually stop the data migration task, for example, during off-peak hours or before you switch your workloads to the destination instance.

Wait until Incremental Data Migration and The migration task is not delayed appear in the progress bar of the migration task. Then, stop writing data to the source database for a few minutes. The latency of incremental data migration may be displayed in the progress bar.

Wait until the status of incremental data migration changes to The migration task is not delayed again. Then, manually stop the migration task.

Switch your workloads to the ApsaraDB RDS for MySQL instance.
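The wait-for-no-latency checks in the cutover steps above can be scripted if you have a way to read the migration latency, for example from your own monitoring. The following Python sketch is hypothetical. `get_latency_seconds` is a callable that you supply, and no DTS API is assumed. The function returns True only after the latency has been zero for several consecutive checks, which is a conservative reading of "The migration task is not delayed". You still stop writes, recheck, and stop the task as described above.

```python
import time
from typing import Callable


def wait_until_not_delayed(get_latency_seconds: Callable[[], float], *,
                           consecutive_checks: int = 3,
                           interval_seconds: float = 30.0,
                           timeout_seconds: float = 3600.0,
                           sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll the latency until it is zero for `consecutive_checks` polls in a row."""
    waited = 0.0
    streak = 0
    while waited <= timeout_seconds:
        if get_latency_seconds() <= 0:
            streak += 1
            if streak >= consecutive_checks:
                return True
        else:
            streak = 0  # any delay resets the count
        sleep(interval_seconds)
        waited += interval_seconds
    return False


if __name__ == "__main__":
    readings = iter([12.0, 3.0, 0.0, 0.0, 0.0])  # simulated latency readings
    print(wait_until_not_delayed(lambda: next(readings), sleep=lambda _: None))  # True
```

Injecting `sleep` keeps the function testable without real waiting; in practice you would leave the default `time.sleep`.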