This topic provides answers to some frequently asked questions about real-time synchronization.

Overview

Category | Question or issue |

Real-time synchronization task configuration |

|

Errors for real-time synchronization of data from MySQL | |

Errors for real-time synchronization from Oracle, PolarDB, and MySQL | |

Error messages |

|

Real-time synchronization task configuration

What types of data sources support real-time synchronization?

For information about the types of data sources that support real-time synchronization, see Data source types that support real-time synchronization.

Why does my real-time synchronization task have high latency?

If some data that is synchronized by your real-time synchronization task cannot be queried, the task may have latency. You can go to the Real-time Computing Nodes page in Operation Center to check whether the value that indicates the latency is excessively large. For more information, see Solutions to latency on a real-time synchronization node.

The following table describes the possible causes of high latency.

Problem description | Cause | Solution |

High latency occurs on the source. | A large number of data changes are made on the source. If the latency spikes, the amount of data in the source increases at a specific point in time. | If the source contains a large amount of data and high data synchronization latency is caused by frequent data updates in the source, you can use one of the following solutions to resolve the issue:

|

The offset from which data starts to be synchronized is much earlier than the current time. | If the offset from which data starts to be synchronized is much earlier than the current time, an extended period of time is required to read the historical data before data can be read in real time. | |

High latency occurs on the destination. | The performance of the destination is poor, or the loads on the destination are high. | If the loads on the destination are high, you must contact the related database administrator. This issue cannot be resolved only by adjusting the number of parallel threads. |

High latency occurs on the source and destination. | Data is synchronized over the Internet. The poor network connection causes the latency of the data synchronization task. | If you synchronize data over the Internet, the timeliness of data synchronization cannot be ensured. We recommend that you establish network connections between the resource group that you use and your data sources and synchronize data over an internal network. Note Real-time synchronization over the Internet has the following disadvantages: Packet loss may occur and the performance of data synchronization may be affected due to unstable network connection. Data security is not high. |

If the performance of the source and that of the destination have large differences or the loads on the source or destination are high, data synchronization latency may be excessively high. | If the loads on the destination are high, you must contact the related database administrator. This issue cannot be resolved only by adjusting the number of parallel threads. |

Why is the Internet not recommended for real-time synchronization?

Real-time synchronization over the Internet has the following disadvantages:

Packet loss may occur, and the performance of data synchronization may be affected due to unstable network connection.

The security of data synchronization is low.

What operation does DataWorks perform on the data records that are synchronized in real time?

When Data Integration synchronizes data from a data source, such as a MySQL, Oracle, LogHub, or PolarDB data source, to a DataHub or Kafka data source in real time, Data Integration adds five fields to the data records in the destination. These fields are used for operations such as metadata management, sorting, and deduplication. For more information, see Fields used for real-time synchronization.

How do I deal with the TRUNCATE statement during real-time synchronization?

Real-time synchronization supports the TRUNCATE statement. The TRUNCATE statement takes effect when full data and incremental data are merged. If you do not execute the TRUNCATE statement, excessive data may be generated during data synchronization.

How do I improve the speed and performance of real-time synchronization?

If data is written to the destination at a low speed, you can increase the number of parallel threads for the destination and modify the values of the Java Virtual Machine (JVM) parameters. The values of the JVM parameters affect only the frequency of full heap garbage collection (Full GC). A large JVM heap memory size reduces the frequency of Full GC and improves the performance of real-time synchronization.

Can I directly run a real-time synchronization task on the codeless UI?

You cannot directly run a real-time synchronization task on the codeless user interface (UI). After you complete the configuration of a real-time synchronization task, you must commit and deploy the task and run the task in the production environment. For more information, see O&M for real-time synchronization nodes.

Why does my real-time synchronization task that is used to synchronize data from MySQL slow down?

One of the possible reasons is that binary logs generated for the MySQL data source increase. The binary logging feature can be enabled only at the instance level. After you enable this feature for the MySQL data source, binary logs are generated for changes made on all tables in the MySQL data source. As a result, binary logs generated for changes made on the tables that are not specified in the real-time synchronization task slow down the running of the task.

Why do differences exist between the amount of resources that are consumed when I synchronize data from a single database and the amount of resources that are consumed when I synchronize data from multiple databases?

When you run a real-time synchronization task to synchronize data from two or more databases, the synchronization task enters the whole-instance synchronization mode. In this case, the amount of resources that are consumed by the synchronization task is greater than the amount of resources that are consumed by two real-time synchronization tasks used to respectively synchronize data from a single database.

Which types of DDL processing policies do real-time synchronization tasks support?

Supported DDL processing policies

Normal | Ignoring | Alerting | Error reporting |

A DDL message is normally sent to and processed by the destination. The processing policy may vary based on the destination type. | A DDL message is discarded, and the destination does not perform processing operations. | A DDL message is discarded, and an alert notification is sent. Note If your real-time synchronization task is not configured with the related alert rule, no alert notification is sent. | The status of the real-time synchronization task is set to failed, and the task stops running. Note If your real-time synchronization task is configured with the related alert rule, an alert notification is sent. |

DDL operation types

Create a table |

|

Delete a table |

|

Add a field |

|

Delete a field | This type of DDL operation does not support a normal processing policy. You can configure only an ignoring, alerting, or error reporting policy for this type of DDL operation. |

Rename a table | This type of DDL operation does not support a normal processing policy. You can configure only an ignoring, alerting, or error reporting policy for this type of DDL operation. |

Rename a field | This type of DDL operation does not support a normal processing policy. You can configure only an ignoring, alerting, or error reporting policy for this type of DDL operation. |

Change a field type |

|

Clear a table |

|

What are the precautions that I must practice on data synchronization from a source table on which a DDL or DML operation is performed?

If new fields are added to a source table, the related real-time synchronization task works in the following ways:

If a field named DEFAULT VALUE is added to the source table, the field is also added to the related destination table after the real-time synchronization task is run. The value of the field in the destination table is NULL. If data is written to the field in the source table in subsequent operations, the real-time synchronization task synchronizes the data to the field in the destination table.

If a field named VIRTUAL is added to the source table, the field is also added to the related destination table after the real-time synchronization task is run. The value of the field in the destination table is NULL. If data is written to the field in the source table in subsequent operations, the real-time synchronization task synchronizes the data to the field in the destination table.

If you run a real-time synchronization task to synchronize data from a MySQL or PolarDB for MySQL data source and you want to add a field to a source table in the data source, we recommend that you add the field to the end rather than the middle of the source table. If a field must be added to the middle of a source table, you must take note of the following limits:

For a synchronization task used to synchronize both full and incremental data, do not add a field to the middle of a source table during synchronization of full data. Otherwise, a data exception occurs during synchronization of incremental data in real time.

During synchronization of incremental data in real time, if you want to reset the offset from which you start to synchronize incremental data and you want to perform a DDL operation to add a field to the middle of a source table, you must make sure that the new offset is later than the time when you add the field to the middle of the source table. Otherwise, a data exception occurs during synchronization of incremental data in real time.

If a data exception occurs, you can perform data initialization again to restore data to a normal state. You need to only remove the table to the middle of which you add a field and perform data initialization. You do not need to initialize data in all tables.

Does the system retain information of a source table, such as not-null properties and the default values of fields of the source table, in the mapped destination table that is automatically created?

When the system creates a destination table, the system retains only information such as the field names, data types, and remarks of the mapped source table in the destination table, but not the default values of fields and constraints, such as not-null constraints and constraints on indexes, of the mapped source table.

Why does my real-time synchronization task used to synchronize data from PostgreSQL have high latency after a failover occurs on the task?

The latency occurs due to the characteristic of a PostgreSQL database. If the latency affects your business, you can stop the real-time synchronization task and then restart the real-time synchronization task to synchronize full data and incremental data from PostgreSQL.

How do I run an existing real-time synchronization task to perform full synchronization?

You can find the real-time synchronization task in the Tasks section of the Synchronization Task page in Data Integration, click the More icon in the Operation column, and then select Rerun to rerun the synchronization task.

Errors for real-time synchronization of data from MySQL

When I run a real-time synchronization task to synchronize data from MySQL, data can be read at the beginning but cannot be read after a period of time. What do I do?

Run the following command on the related MySQL database to view the binary log files that record the data write operation in the database:

show master statusSearch for

journalName=mysql-bin.xx,position=xxin the binary log files of the MySQL database to check whether the binary log files contain data records about the offset specified by the position parameter. For example, you can search for journalName=mysql-bin.000001,position=50.Contact the database administrator if data is being written to the MySQL database but no data write operations are recorded in binary logs.

Errors for real-time synchronization from Oracle, PolarDB, and MySQL

Why are errors repeatedly reported when a real-time synchronization node is run to synchronize data from Oracle, PolarDB, or MySQL?

Problem description: When a real-time synchronization task is run to synchronize data from Oracle, PolarDB, or MySQL, errors are repeatedly reported.

By default, if a real-time synchronization task is run to synchronize data from an Oracle, PolarDB, or MySQL data source, the related destination does not support the synchronization of data changes generated by DDL operations performed on the source. If the data changes that are generated by DDL operations other than table creation on the source are synchronized, the system reports an error for the real-time synchronization task and the node fails. In a resumable upload scenario, the following situation may exist: No DDL operations are performed on the source, but the system still reports an error for the real-time synchronization task.

NoteTo prevent data loss or disorder within a specific period of time, we recommend that you do not use the rename command to exchange the name of one column with the name of another column. For example, if you use the rename command to exchange the name of Column A and that of Column B, data loss or disorder may occur.

Cause: Real-time synchronization supports resumable uploads. To ensure data integrity, after the real-time synchronization task is started, the synchronization task may read the data changes that are generated by previous DDL operations again. As a result, the error is reported again.

Solution:

If data changes are generated by DDL operations on the source, manually make the same changes in the destination.

Start the real-time synchronization task and change the processing rule for DDL messages from error reporting to ignoring.

NoteIn a resumable upload scenario, the real-time synchronization task also subscribes to the DDL events. To ensure that the synchronization task can run as expected, you must temporarily change the processing rule for DDL messages from error reporting to ignoring.

Stop the real-time synchronization task, change the processing rule for DDL messages from ignoring back to error reporting, and then restart the real-time synchronization task.

Error messages

When I run a real-time synchronization task to synchronize data from Kafka, the following error message is returned: Startup mode for the consumer set to timestampOffset, but no begin timestamp was specified. What do I do?

Specify an offset from which you want to synchronize data.

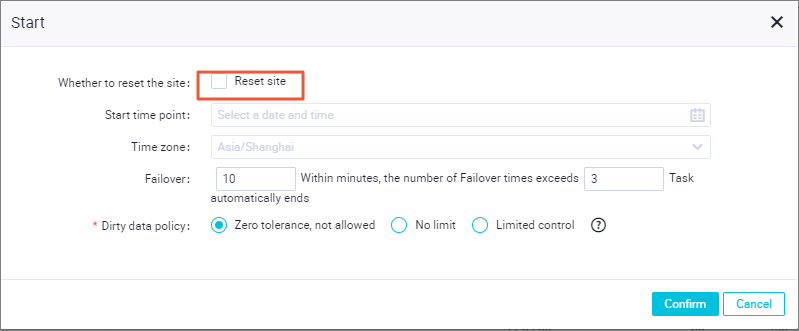

Data Integration allows you to reset the offset for a synchronization task to specify the start position of data synchronization. If you want to run your synchronization task to start synchronizing data from a specific point in time or specific data position again, you can reset the offset for the synchronization task. For example, if an error occurs or some data needs to be synchronized again during data synchronization, you can reset the offset for the related synchronization task to synchronize data from a specified position again. This ensures the consistency and integrity of data.

When I run a real-time synchronization task to synchronize data from MySQL, the following error message is returned: Cannot replicate because the master purged required binary logs. What do I do?

If the Cannot replicate because the master purged required binary logs. Replicate the missing transactions from elsewhere, or provision a new slave from backup error message is returned, Data Integration may fail to find the binary logs generated for the offset from which you want to synchronize data. You must check the retention duration of the binary logs of your MySQL data source and specify an offset within the retention duration before you start your synchronization task.

If Data Integration cannot find the binary logs, you can reset the offset to the current time.

When I run a real-time synchronization task to synchronize data from MySQL, the MySQLBinlogReaderException error message is returned. What do I do?

If the following error message is returned: MySQLBinlogReaderException: The database you are currently syncing is the standby database, but the current value of log_slave_updates is OFF, you need to enable the binlog log update of the standby database first, the binary logging feature is disabled for the secondary ApsaraDB RDS for MySQL instance. If you want to synchronize data from the secondary ApsaraDB RDS for MySQL instance, you must enable this feature for the instance. To enable the feature, contact the related database administrator.

For more information, see the Enable the binary logging feature step in the Configure data sources for data synchronization from MySQL topic.

When I run a real-time synchronization task to synchronize data from MySQL, an error message that contains show master status' has an error! is returned. What do I do?

If the detailed error message is Caused by: java.io.IOException: message=Access denied; you need (at least one of) the SUPER, REPLICATION CLIENT privilege(s) for this operation, with command: show master status, the account that you specified when you add the MySQL data source to DataWorks is not granted permissions on the related MySQL database.

The account must be granted the SELECT, REPLICATION SLAVE, and REPLICATION CLIENT permissions on the MySQL database. For more information about how to grant an account the required permissions on a database, see Create an account and grant the required permissions to the account.

When I run a real-time synchronization task to synchronize data from MySQL, the following error message is returned: parse.exception.PositionNotFoundException: can't find start position forxxx. What do I do?

Data Integration cannot find the binary logs generated for the offset from which you want to synchronize data. You must reset an offset for the node.

When I run a real-time synchronization task to synchronize data from Hologres, the following error message is returned: permission denied for database xxx. What do I do?

Before you run a real-time synchronization task to synchronize data from Hologres, you must obtain the permissions that belong to the <db>_admin user group in the Hologres console for your account. After you obtain the permissions, you can use your account to create schemas. For more information, see Hologres permission models.

When I run a real-time synchronization task to synchronize data to MaxCompute, the following error message is returned: ODPS-0410051:invalid credentials-accessKeyid not found. What do I do?

If you run a real-time synchronization task to synchronize data to MaxCompute and a temporary AccessKey pair is used for data synchronization, the temporary AccessKey pair is valid for only seven days. After the period elapses, the temporary AccessKey pair automatically expires, and the real-time synchronization task fails. If the system detects that the temporary AccessKey pair is expired, the system restarts the real-time synchronization task. If a related alert rule is configured for the synchronization task, the system reports an error.

When I run a real-time synchronization task to synchronize data to Oracle, the following error message is returned: logminer doesn't init, send HeartbeatRecord. What do I do?

When a real-time synchronization task that is used to synchronize data to Oracle is being initialized to find an appropriate offset for data synchronization, the node needs to load the previous archived log file. If the size of the archived log file is large, 3 to 5 minutes may be required to complete the initialization.