This topic describes how to use DataWorks to create and configure external tables. This topic also lists the data types supported in external tables.

Overview

The following table describes the concepts that you need to know before you use external tables.

Concept | Description |

Object Storage Service (OSS) | OSS supports storage classes, including Standard, Infrequent Access, and Archive, and is applicable to various data storage scenarios. In addition, OSS can deeply integrate with the Hadoop open-source community and other products such as E-MapReduce (EMR), Batch Compute, MaxCompute, Machine Learning Platform for AI (PAI), and Function Compute. |

MaxCompute | MaxCompute is an efficient and fully managed data warehousing solution. It can be used together with OSS to allow you to analyze and process large amounts of data in an efficient and cost-effective manner. |

External table feature of MaxCompute | The next-generation computing framework of MaxCompute V2.0 allows you to use the external table feature to directly query numerous files in OSS, without the need to load data into MaxCompute tables. This reduces the time and labor costs required for data migration and lowers storage costs. |

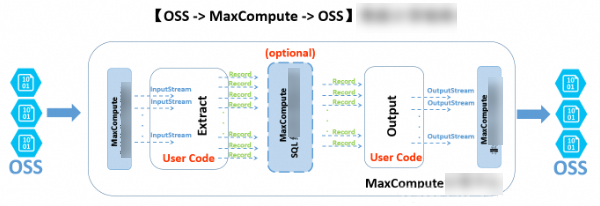

The following figure shows the overall architecture of external tables of MaxCompute.

MaxCompute allows you to create external tables for unstructured data, such as data in OSS and Tablestore. The unstructured data processing framework allows MaxCompute to import data from and export data to OSS or Tablestore based on the data transmission and processing logic. The following content uses OSS external tables as an example to describe the processing logic:

The external data from an OSS bucket is converted based on the unstructured framework and passed to the InputStream class of Java. You can write code for the EXTRACT logic. This indicates that you need to read, parse, convert, and compute data from input streams. The format of extracted data records must be supported by MaxCompute.

The extracted data records can be further processed by the built-in structured SQL compute engine of MaxCompute. More data records may be generated during the processing.

The generated data records are passed to the user-defined output logic for further computing and conversion. Finally, the system uses the OutputStream Java class to export the required data in the data records to OSS.

You can use DataWorks together with MaxCompute to create, search for, and configure external tables by using a visual interface in the DataWorks console. You can also query, process, and analyze data in external tables.

Network and access authorization

MaxCompute is separate from OSS. Therefore, network connectivity between MaxCompute and OSS on different clusters may affect the ability of MaxCompute to access the data stored in OSS. We recommend that you use an internal endpoint that ends with -internal.aliyuncs.com when you access the data stored in OSS from MaxCompute.

MaxCompute requires a secure authorized channel to access data stored in OSS. MaxCompute uses Resource Access Management (RAM) and Security Token Service (STS) of Alibaba Cloud to secure data access. MaxCompute applies for data access permissions from STS as the table creator. The permission settings for Tablestore are the same as those for OSS.

Perform STS authorization.

To access OSS data by using the external table feature of MaxCompute, you must grant OSS access permissions to the account that is used to run MaxCompute jobs. STS is a token management service provided by the RAM service of Alibaba Cloud. Based on STS, authorized RAM users and cloud services can issue tokens with custom validity and permissions. Applications can use tokens to call Alibaba Cloud API operations to manipulate resources.

You can use one of the following methods to grant OSS access permissions:

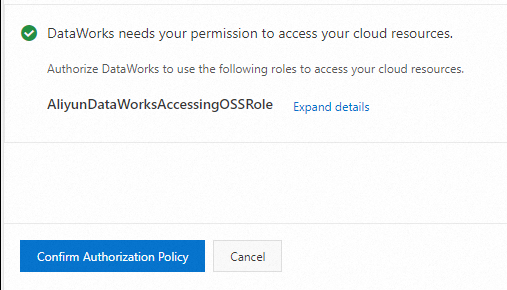

If your MaxCompute project and the OSS bucket you want to access belong to the same Alibaba Cloud account, log on to the DataWorks console with the account and perform one-click authorization.

Open the configuration tab of a newly created table and find the Physical Model section.

Set Table Type to External Table.

Configure the Storage Address parameter and click Authorize.

On the Cloud Resource Access Authorization page, click Confirm Authorization Policy.

Grant MaxCompute the permissions to access data stored in OSS in the RAM console.

Log on to the RAM console.

NoteIf your MaxCompute project and the OSS bucket you want to access belong to different Alibaba Cloud accounts, you must use the account to which the OSS bucket belongs to log on to the RAM console and perform the following operations.

In the left-side navigation pane, choose .

On the Roles page, click Create Role. In the Create Role panel, set Select Trusted Entity to Alibaba Cloud Account and click Next.

Configure the RAM Role Name and Note parameters.

NoteThe role name must be set to AliyunODPSDefaultRole or AliyunODPSRoleForOtherUser.

Select Current Alibaba Cloud Account or Other Alibaba Cloud Account for Select Trusted Alibaba Cloud Account.

NoteIf you select Other Alibaba Cloud Account, enter the account ID.

- Click OK.

Configure the role details.

On the Roles page, find the desired role and click the name of the role in the Role Name column. On the Trust Policy tab of the page that appears, click Edit Trust Policy and enter policy document based on your business requirements.

--If the MaxCompute project and OSS bucket belong to the same account, enter the following content: { "Statement": [ { "Action": "sts:AssumeRole", "Effect": "Allow", "Principal": { "Service": [ "odps.aliyuncs.com" ] } } ], "Version": "1" }-- If the MaxCompute project and OSS bucket belong to different accounts, enter the following content: { "Statement": [ { "Action": "sts:AssumeRole", "Effect": "Allow", "Principal": { "Service": [ "ID of the Alibaba Cloud account that owns the MaxCompute project@odps.aliyuncs.com" ] } } ], "Version": "1" }After the configuration is complete, click Save trust policy document.

Click Grant Permission in the Actions column of the role on the Roles page. In the Grant Permission panel, search for the AliyunODPSRolePolicy policy that is required for OSS access and attach the policy to the role. If you cannot find the policy in this way, click the name of the role in the Role Name column. On the Permissions tab of the page that appears, click Precise Permission to attach the policy to the role.

{ "Version": "1", "Statement": [ { "Action": [ "oss:ListBuckets", "oss:GetObject", "oss:ListObjects", "oss:PutObject", "oss:DeleteObject", "oss:AbortMultipartUpload", "oss:ListParts" ], "Resource": "*", "Effect": "Allow" }, { "Action": [ "ots:ListTable", "ots:DescribeTable", "ots:GetRow", "ots:PutRow", "ots:UpdateRow", "ots:DeleteRow", "ots:GetRange", "ots:BatchGetRow", "ots:BatchWriteRow", "ots:ComputeSplitPointsBySize" ], "Resource": "*", "Effect": "Allow" }, { "Action": [ "pvtz:DescribeRegions", "pvtz:DescribeZones", "pvtz:DescribeZoneInfo", "pvtz:DescribeVpcs", "pvtz:DescribeZoneRecords" ], "Resource": "*", "Effect": "Allow" }, { "Action": [ "dlf:CreateFunction", "dlf:BatchGetPartitions", "dlf:ListDatabases", "dlf:CreateLock", "dlf:UpdateFunction", "dlf:BatchUpdateTables", "dlf:DeleteTableVersion", "dlf:UpdatePartitionColumnStatistics", "dlf:ListPartitions", "dlf:DeletePartitionColumnStatistics", "dlf:BatchUpdatePartitions", "dlf:GetPartition", "dlf:BatchDeleteTableVersions", "dlf:ListFunctions", "dlf:DeleteTable", "dlf:GetTableVersion", "dlf:AbortLock", "dlf:GetTable", "dlf:BatchDeleteTables", "dlf:RenameTable", "dlf:RefreshLock", "dlf:DeletePartition", "dlf:UnLock", "dlf:GetLock", "dlf:GetDatabase", "dlf:GetFunction", "dlf:BatchCreatePartitions", "dlf:ListPartitionNames", "dlf:RenamePartition", "dlf:CreateTable", "dlf:BatchCreateTables", "dlf:UpdateTableColumnStatistics", "dlf:ListTableNames", "dlf:UpdateDatabase", "dlf:GetTableColumnStatistics", "dlf:ListFunctionNames", "dlf:ListPartitionsByFilter", "dlf:GetPartitionColumnStatistics", "dlf:CreatePartition", "dlf:CreateDatabase", "dlf:DeleteTableColumnStatistics", "dlf:ListTableVersions", "dlf:BatchDeletePartitions", "dlf:ListCatalogs", "dlf:UpdateTable", "dlf:ListTables", "dlf:DeleteDatabase", "dlf:BatchGetTables", "dlf:DeleteFunction" ], "Resource": "*", "Effect": "Allow" } ] }

Use an OSS data source.

If you have added an OSS data source to DataWorks, find the workspace that you created on the Workspaces page of the DataWorks console and click Manage in the Actions column to go to the SettingCenter page. Then, view and use the data source on the Data Sources page in SettingCenter.

Create an external table

Create an external table by executing DDL statements.

Go to the DataStudio page and execute DDL statements to create an external table. For more information, see Create and manage MaxCompute tables. The DDL statements must comply with the MaxCompute syntax. If STS authorization is complete, you do not need to include the odps.properties.rolearn property in the DDL statements.

The following code provides a sample DDL statement, in which EXTERNAL indicates an external table:

CREATE EXTERNAL TABLE IF NOT EXISTS ambulance_data_csv_external( vehicleId int, recordId int, patientId int, calls int, locationLatitute double, locationLongtitue double, recordTime string, direction string ) STORED BY 'com.aliyun.odps.udf.example.text.TextStorageHandler' -- Required. The STORED BY clause specifies the name of a custom storage handler class or another file format for the external table. with SERDEPROPERTIES ( 'delimiter'='\\|', -- Optional. The SERDEPROPERTIES clause specifies the parameters used when data is serialized or deserialized. These parameters can be passed into the EXTRACT logic through DataAttributes. 'odps.properties.rolearn'='acs:ram::xxxxxxxxxxxxx:role/aliyunodpsdefaultrole' ) LOCATION 'oss://oss-cn-shanghai-internal.aliyuncs.com/oss-odps-test/Demo/SampleData/CustomTxt/AmbulanceData/' -- Required. The LOCATION parameter specifies the location of the external table. USING 'odps-udf-example.jar'; -- Required if you use a custom format class. The USING parameter specifies the JAR package where the custom format class resides.STORED BY can be followed by a parameter indicating the built-in storage handler for a CSV or TSV file.

com.aliyun.odps.CsvStorageHandler: defines how to read data from and write data to CSV files. With this specification, the column delimiter is a comma (,) and the line feed is \n. Example:STORED BY'com.aliyun.odps.CsvStorageHandler'.com.aliyun.odps.TsvStorageHandler: defines how to read data from and write data to TSV files. With this specification, the column delimiter is \t and the line feed is \n.

STORED BY can also be followed by a parameter indicating an external table of which data is in one of the following open source formats: ORC, PARQUET, SEQUENCEFILE, RCFILE, AVRO, and TEXTFILE. For example, you can specify the

org.apache.hive.hcatalog.data.JsonSerDeclass to save the table as a text file.org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe -> stored as textfile

org.apache.hadoop.hive.ql.io.orc.OrcSerde -> stored as orc

org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe -> stored as parquet

org.apache.hadoop.hive.serde2.avro.AvroSerDe -> stored as avro

org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe -> stored as sequencefile

DDL statement used to create an external table of which data is in an open source format:

CREATE EXTERNAL TABLE [IF NOT EXISTS] (<column schemas>) [PARTITIONED BY (partition column schemas)] [ROW FORMAT SERDE ''] STORED AS [WITH SERDEPROPERTIES ( 'odps.properties.rolearn'='${roleran}' [,'name2'='value2',...] ) ] LOCATION 'oss://${endpoint}/${bucket}/${userfilePath}/';The following table describes the properties of the SERDEPROPERTIES clause.

Property

Valid value

Default value

Description

odps.text.option.gzip.input.enabled

true and false

false

Specifies whether to compress a file whose data you want to read.

odps.text.option.gzip.output.enabled

true and false

false

Specifies whether to compress a file whose data you want to write.

odps.text.option.header.lines.count

A non-negative integer

0

Skips the first N lines of a text file.

odps.text.option.null.indicator

A string

Empty string

The string that represents NULL.

odps.text.option.ignore.empty.lines

true and false

true

Specifies whether to ignore blank lines.

odps.text.option.encoding

UTF-8/UTF-16/US-ASCII

UTF-8

Specifies the encoding format of a text file.

NoteMaxCompute allows you to use only a built-in extractor to read CSV or TSV files in the GZIP format from OSS. You can select whether a file is GZIP compressed, which determines the properties that you need to specify.

The LOCATION parameter is in the oss://oss-cn-shanghai-internal.aliyuncs.com/Bucket name/Directory name format. You can obtain an OSS directory in the graphical user interface (GUI). You do not need to add a file name next to the directory.

You can find tables created by using DDL statements in the Workspace Tables pane. You can modify level-1 folders and level-2 folders of tables to change the table locations.

Create a Tablestore external table.

Statement used to create a Tablestore external table:

CREATE EXTERNAL TABLE IF NOT EXISTS ots_table_external( odps_orderkey bigint, odps_orderdate string, odps_custkey bigint, odps_orderstatus string, odps_totalprice double ) STORED BY 'com.aliyun.odps.TableStoreStorageHandler' WITH SERDEPROPERTIES ( 'tablestore.columns.mapping'=':o_orderkey,:o_orderdate,o_custkey, o_orderstatus,o_totalprice', -- (3) 'tablestore.table.name'='ots_tpch_orders' 'odps.properties.rolearn'='acs:ram::xxxxx:role/aliyunodpsdefaultrole' ) LOCATION 'tablestore://odps-ots-dev.cn-shanghai.ots-internal.aliyuncs.com';Parameter description:

com.aliyun.odps.TableStoreStorageHandler specifies the built-in storage handler of MaxCompute for processing data stored in Tablestore.

SERDEPROPERTIES provides options for parameters. You must specify tablestore.columns.mapping and tablestore.table.name when you use TableStoreStorageHandler.

tablestore.columns.mapping: Required. This parameter specifies the columns of the Tablestore table that MaxCompute wants to access. The columns include the primary key columns and property columns. A primary key column is indicated with the colon sign (:) at the beginning of the column name. In this example, primary key columns are

:o_orderkeyand:o_orderdate. The others are property columns.A Tablestore table supports a maximum of four primary key columns. The data types of these columns must be STRING, INTEGER, or BINARY. The first primary key column is also the partition key column. You must specify all primary key columns of the table in Tablestore when you specify a mapping. For other columns, you need to specify only the property columns that MaxCompute needs to access.

tablestore.table.name: the name of the Tablestore table. If the table name does not exist in Tabletore, an error is reported, and MaxCompute does not create a table in Tablestore.

LOCATION: specifies the name and Endpoints of the Tablestore instance.

Create a table in the GUI.

Go to the DataStudio page and create a table in the GUI. For more information, see Create and manage MaxCompute tables. An external table has the following properties:

General

Table Name (entered when you create a table)

Display Name

Level-1 Theme and Level-2 Theme

Description

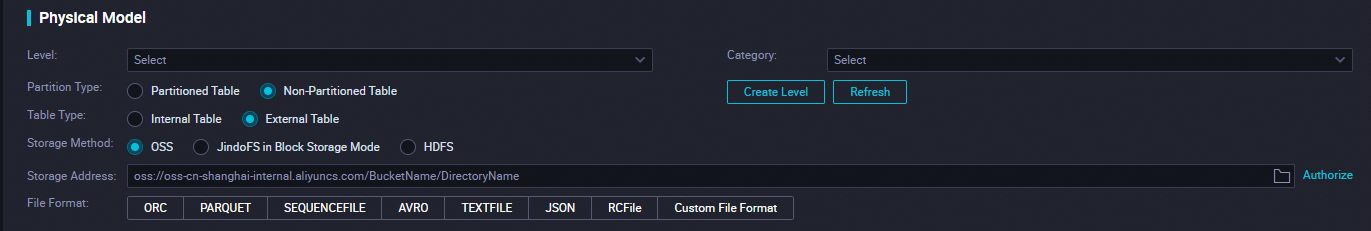

Physical Model

Table Type: Select External Table.

Partition Type: Tablestore external tables do not support partitioning.

Storage Address: corresponds to the LOCATION parameter. In the Physical Model section, you can enter the value of the LOCATION parameter as the storage address. You can also click Select to select a storage address. Then, click Authorize.

File Format: Select the file format based on your business requirements. The following formats are supported: CSV, TSV, ORC, PARQUET, SEQUENCEFILE, RCFILE, AVRO, TEXTFILE, and a custom file format. If you select a custom file format, you need to select the corresponding resource. After you commit a resource, DataWorks automatically parses out included class names and displays them in the Class Name drop-down list.

rolearn: If you have completed STS authorization, you can leave it empty.

Schema

Parameter

Description

Data Type

MaxCompute 2.0 supports fields of TINYINT, SMALLINT, INT, BIGINT, VARCHAR, and STRING types.

Actions

The add, modify, and delete operations are supported.

Definition or Maximum Value Length

You can set the maximum length for fields of the VARCHAR type. For complex data types, enter the definition.

Supported data types

The following table describes basic data types that are supported in external tables.

Data type | New | Example | Description |

TINYINT | Yes | 1Y and -127Y | The 8-bit signed integer type. Valid values: -128 to 127. |

SMALLINT | Yes | 32767S and -100S | The 16-bit signed integer type. Valid values: -32768 to 32767. |

INT | Yes | 1000 and -15645787 | The 32-bit signed integer type. Valid values: -2^31 to 2^31-1. |

BIGINT | No | 100000000000L and -1L | The 64-bit signed integer type. Valid values: -2^63+1 to 2^63-1. |

FLOAT | Yes | None | The 32-bit binary floating point type. |

DOUBLE | No | 3.1415926 1E+7 | An eight-byte double precision floating-point number (the 64-bit binary floating point type). |

DECIMAL | No | 3.5BD and 99999999999.9999999BD | A decimal exact number. The integer part is in the range of -1036 + 1 to 1036 - 1, and the fractional part is accurate to 10 to 18 decimal places. |

VARCHAR(n) | Yes | None | The variable-length character type, in which n specifies the length. Valid values: 1 to 65535. |

STRING | No | "abc", 'bcd', and "alibaba" | The string type. The maximum size is 8 MB. |

BINARY | Yes | None | A binary number. The maximum size is 8 MB. |

DATETIME | No | DATETIME '2017-11-11 00:00:00' | The DATETIME type. UTC+8 is used as the standard time zone. Valid values: 0000-01-01 to 9999-12-31, accurate to the millisecond. |

TIMESTAMP | Yes | TIMESTAMP '2017-11-11 00:00:00.123456789' | The TIMESTAMP data type, which is independent of time zones. Valid values: 0000-01-01 00:00:00.000000000 to 9999-12-31 23:59:59.999999999, accurate to the nanosecond. |

BOOLEAN | No | TRUE and FALSE | The BOOLEAN type. Valid values: TRUE and FALSE. |

The following table lists complex data types that are supported in external tables.

Data type | Custom method | Construction method |

ARRAY | array< int >; array< struct< a:int, b:string >> | array(1, 2, 3); array(array(1, 2); array(3, 4)) |

MAP | map< string, string >; map< smallint, array< string>> | map("k1", "v1", "k2", "v2"); map(1S, array('a', 'b'), 2S, array('x', 'y)) |

STRUCT | struct< x:int, y:int>; struct< field1:bigint, field2:array< int>, field3:map< int, int>> | named_struct('x', 1, 'y', 2); named_struct('field1', 100L, 'field2', array(1, 2), 'field3', map(1, 100, 2, 200)) |

If you want to use data types newly supported by MaxCompute 2.0, such as TINYINT, SMALLINT, INT, FLOAT, VARCHAR, TIMESTAMP, BINARY, and complex data types, you must include set odps.sql.type.system.odps2=true; before the statement used to create a table, and commit the set statement together with the table creation statement. If compatibility with Hive is required, we recommend that you include the odps.sql.hive.compatible=true; statement.

View and process external tables

To view external tables, perform the following operations: Go to the DataStudio page of your workspace. In the left-side navigation pane, click Workspace Tables. For more information, see Manage tables. External tables are processed in the same way as internal tables.