This topic describes how to migrate data from a PolarDB for PostgreSQL cluster to a self-managed Oracle database by using Data Transmission Service (DTS). You can use this migration in scenarios such as data backflow tests and functional tests.

Prerequisites

- The tables to be migrated from the source PolarDB for PostgreSQL cluster must contain primary keys or UNIQUE NOT NULL indexes.

- The self-managed Oracle database runs version 9i, 10g, 11g, 12c, 18c, or 19c.

- The schemas of the objects to be migrated, such as tables, have been created in the self-managed Oracle database.

- The available storage space of the self-managed Oracle database is larger than the total size of the data in the PolarDB for PostgreSQL cluster.
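Several of the prerequisites above can be checked before you create the task. The following Python sketch assumes that you have already collected, with your own tooling, whether each source table has a primary key or a UNIQUE NOT NULL index, the Oracle version, the total size of the source data, and the free space in the Oracle database. The `SourceTable` class and the `check_prerequisites` function are hypothetical helpers, not part of DTS.

```python
from dataclasses import dataclass
from typing import List

# Oracle versions listed as supported in the prerequisites.
SUPPORTED_ORACLE_VERSIONS = {"9i", "10g", "11g", "12c", "18c", "19c"}


@dataclass
class SourceTable:
    """Hypothetical summary of one source table, collected by your own tooling."""
    name: str
    has_primary_key: bool
    has_unique_not_null_index: bool


def check_prerequisites(tables: List[SourceTable], oracle_version: str,
                        source_total_bytes: int, oracle_free_bytes: int) -> List[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if oracle_version not in SUPPORTED_ORACLE_VERSIONS:
        problems.append(f"unsupported Oracle version: {oracle_version}")
    for table in tables:
        # Each migrated table needs a primary key or a UNIQUE NOT NULL index.
        if not (table.has_primary_key or table.has_unique_not_null_index):
            problems.append(f"{table.name}: no primary key or UNIQUE NOT NULL index")
    # Oracle storage must be strictly larger than the source data size.
    if oracle_free_bytes <= source_total_bytes:
        problems.append(
            f"insufficient Oracle storage: {oracle_free_bytes} bytes available, "
            f"more than {source_total_bytes} bytes required")
    return problems
```

An empty result only means that the inputs you supplied satisfy these prerequisites. It does not replace the precheck that DTS runs before the task starts.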

Limits

- In this scenario, DTS supports only full data migration and incremental data migration. DTS does not support schema migration.

- During full data migration, DTS uses the read and write resources of the source and destination databases, which may increase the load on the database servers. Before you migrate data, evaluate the impact of data migration on the performance of the source and destination databases. We recommend that you migrate data during off-peak hours.

- If the self-managed Oracle database is deployed in an Oracle Real Application Clusters (RAC) architecture and is connected to DTS over an Alibaba Cloud virtual private cloud (VPC), you must connect the Single Client Access Name (SCAN) IP address of the Oracle RAC and the virtual IP address (VIP) of each node to the VPC and configure routes. These settings ensure that your DTS task can run as expected. For more information, see Connect an on-premises data center to DTS by using VPN Gateway. Important When you configure the destination Oracle database in the DTS console, you can specify the SCAN IP address of the Oracle RAC as the database endpoint or IP address.

- A data migration task can migrate data from only a single database. To migrate data from multiple databases, you must create a data migration task for each database.

- If you select a schema as the object to be migrated and create a table in the schema or execute the RENAME statement to rename a table in the schema, you must execute the

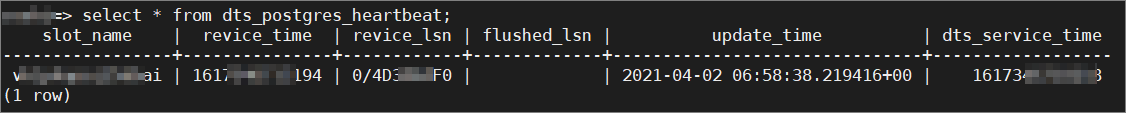

ALTER TABLE schema.table REPLICA IDENTITY FULL;statement before you write data to the table.Note Replace theschemaandtablevariables in the preceding statement with your schema name and table name. - To ensure that the latency of data migration is accurate, DTS adds a heartbeat table named

dts_postgres_heartbeatto the source database. The following figure shows the schema of the heartbeat table.

- If the source database has long-running transactions and the task includes incremental data migration, the write-ahead log (WAL) files that are generated before the long-running transactions are committed may not be cleared. These WAL files can accumulate and exhaust the storage space of the source database.
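When many tables are created or renamed in a migrated schema, you may prefer to generate the `ALTER TABLE ... REPLICA IDENTITY FULL` statements rather than type them. The sketch below quotes each name as a PostgreSQL identifier (wrapped in double quotes, with embedded double quotes doubled). Quoted identifiers are case-sensitive, so pass names exactly as they are stored in the catalog; unquoted names are stored in lowercase. The function names are illustrative. If you use psycopg2, its `sql.Identifier` composable performs the same kind of quoting.

```python
def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier: wrap it in double quotes and double any embedded quote."""
    return '"' + name.replace('"', '""') + '"'


def replica_identity_full_sql(schema: str, table: str) -> str:
    """Build the ALTER TABLE ... REPLICA IDENTITY FULL statement for one table."""
    return f"ALTER TABLE {quote_ident(schema)}.{quote_ident(table)} REPLICA IDENTITY FULL;"


# Hypothetical tables that were just created or renamed in a migrated schema.
for schema_name, table_name in [("public", "orders"), ("public", "order_items")]:
    print(replica_identity_full_sql(schema_name, table_name))
```

Execute each generated statement on the source database before the application writes data to the new or renamed table.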
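To catch the long-running transactions that can hold back WAL cleanup, you can query the PostgreSQL `pg_stat_activity` view, whose `xact_start` column records when each backend's current transaction started (it is null when no transaction is open). The following sketch filters rows that you have already fetched with that query. The one-hour threshold and the function name are assumptions; choose a threshold that fits the WAL volume your source cluster can hold.

```python
from datetime import datetime, timedelta, timezone

# Real PostgreSQL query; run it with your own client and pass the rows below.
LONG_TXN_QUERY = "SELECT pid, xact_start FROM pg_stat_activity WHERE xact_start IS NOT NULL;"


def long_running_transactions(rows, now, threshold):
    """Return the PIDs whose open transaction started more than `threshold` ago.

    rows: iterable of (pid, xact_start) pairs; xact_start is None when a
    backend has no open transaction.
    """
    return [pid for pid, xact_start in rows
            if xact_start is not None and now - xact_start > threshold]


now = datetime.now(timezone.utc)
sample_rows = [(101, now - timedelta(hours=3)), (102, now - timedelta(minutes=5)), (103, None)]
print(long_running_transactions(sample_rows, now, timedelta(hours=1)))
```

Commit or terminate the reported transactions, as appropriate for your workload, before the WAL backlog grows too large.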

Billing

| Migration type | Task configuration fee | Internet traffic fee |
|---|---|---|
| Full data migration | Free of charge. | Charged only when data is migrated from Alibaba Cloud over the Internet. For more information, see Billing overview. |
| Incremental data migration | Charged. For more information, see Billing overview. | Charged only when data is migrated from Alibaba Cloud over the Internet. For more information, see Billing overview. |

SQL operations that can be synchronized during incremental data migration

INSERT, UPDATE, and DELETE
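Based on the list above, only INSERT, UPDATE, and DELETE operations are carried over during incremental data migration, and schema migration is not supported in this scenario. If you want to screen a batch of planned source-side statements for changes that incremental migration will not carry over, a rough keyword check like the following sketch can help. `is_migrated_incrementally` is a hypothetical helper, not a SQL parser.

```python
# DML statement types that are migrated incrementally in this scenario.
INCREMENTAL_OPERATIONS = {"INSERT", "UPDATE", "DELETE"}


def is_migrated_incrementally(statement: str) -> bool:
    """Return True if the statement's leading keyword is INSERT, UPDATE, or DELETE.

    This rough check looks only at the first word; it does not handle WITH
    clauses, leading comments, or other SQL constructs.
    """
    words = statement.split()
    return bool(words) and words[0].upper() in INCREMENTAL_OPERATIONS


for stmt in ["INSERT INTO t VALUES (1)", "ALTER TABLE t ADD COLUMN c int"]:
    print(stmt, "->", is_migrated_incrementally(stmt))
```

Statements that fail this check, such as ALTER TABLE, need to be applied to the Oracle database by other means.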

Permissions required for database accounts

| Database | Required permission |
|---|---|
| PolarDB for PostgreSQL | Permissions of a privileged account |
| Self-managed Oracle database | Permissions of the schema owner |

Procedure

- Log on to the DTS console. Note If you are redirected to the Data Management (DMS) console, you can click the icon in the lower-right corner to go to the previous version of the DTS console.

- In the left-side navigation pane, click Data Migration.

- At the top of the Migration Tasks page, select the region where the destination cluster resides.

- In the upper-right corner of the page, click Create Migration Task.

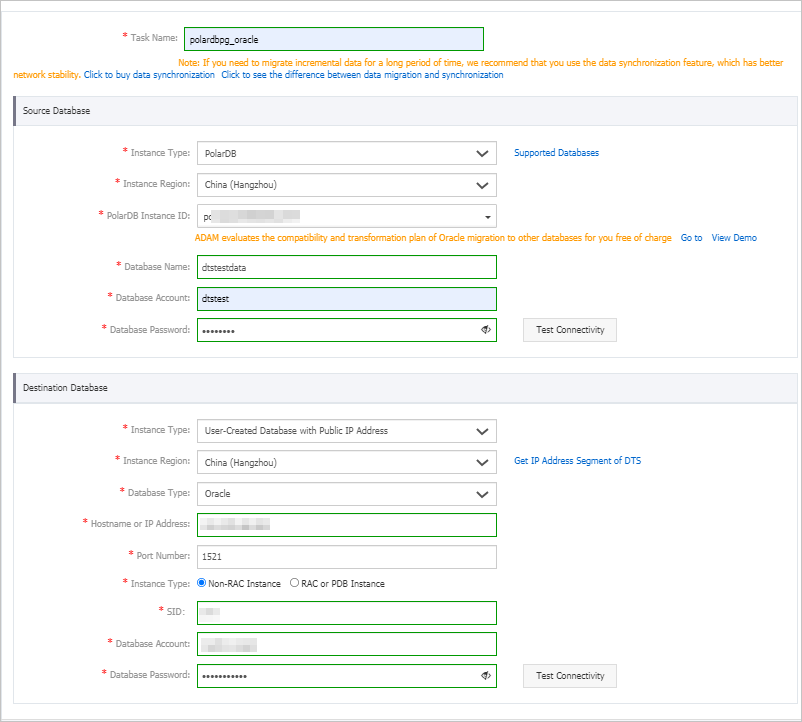

- Configure the source and destination databases.

| Section | Parameter | Description |
|---|---|---|
| N/A | Task Name | The task name that DTS automatically generates. We recommend that you specify a descriptive name that makes it easy to identify the task. You do not need to specify a unique task name. |
| Source Database | Instance Type | The type of the source database. Select PolarDB. |
| Source Database | Instance Region | The region in which the source PolarDB cluster resides. |
| Source Database | PolarDB Instance ID | The ID of the source PolarDB for PostgreSQL cluster. |
| Source Database | Database Account | The database account of the source PolarDB cluster. For more information about the permissions that are required for the account, see Permissions required for database accounts. |
| Source Database | Database Password | The password of the database account. Note After you set the source database parameters, click Test Connectivity next to Database Password to check whether the information is valid. If the information is valid, the Passed message is displayed. If the Failed message is displayed, click Check next to Failed to modify the information based on the check results. |
| Destination Database | Instance Type | The access method of the destination database. In this example, User-Created Database with Public IP Address is selected. Note If the destination self-managed database is of another type, you must set up the environment that is required for the database. For more information, see Preparation overview. |
| Destination Database | Instance Region | You do not need to specify this parameter. |
| Destination Database | Database Type | The type of the destination database. Select Oracle. |
| Destination Database | Hostname or IP Address | The IP address that is used to access the self-managed Oracle database. In this example, the public IP address is used. |
| Destination Database | Port Number | The service port number of the self-managed Oracle database. In this example, 1521 is used. |
| Destination Database | Instance Type | If you select Non-RAC Instance, you must specify the SID parameter. If you select RAC or PDB Instance, you must specify the Service Name parameter. |
| Destination Database | SID | The system ID (SID) of the destination database. |
| Destination Database | Database Account | The database account of the self-managed Oracle database. For information about the permissions that are required for the account, see Permissions required for database accounts. |
| Destination Database | Database Password | The password of the database account. Note After you set the destination database parameters, click Test Connectivity next to Database Password to check whether the information is valid. If the information is valid, the Passed message is displayed. If the Failed message is displayed, click Check next to Failed to modify the information based on the check results. |

- In the lower-right corner of the page, click Set Whitelist and Next.

Warning If the CIDR blocks of DTS servers are automatically or manually added to the whitelist of the database or instance, or to the ECS security group rules, security risks may arise. Therefore, before you use DTS to migrate data, you must understand and acknowledge the potential risks and take preventive measures, including but not limited to the following measures: enhance the security of your username and password, limit the ports that are exposed, authenticate API calls, regularly check the whitelist or ECS security group rules and forbid unauthorized CIDR blocks, or connect the database to DTS by using Express Connect, VPN Gateway, or Smart Access Gateway.

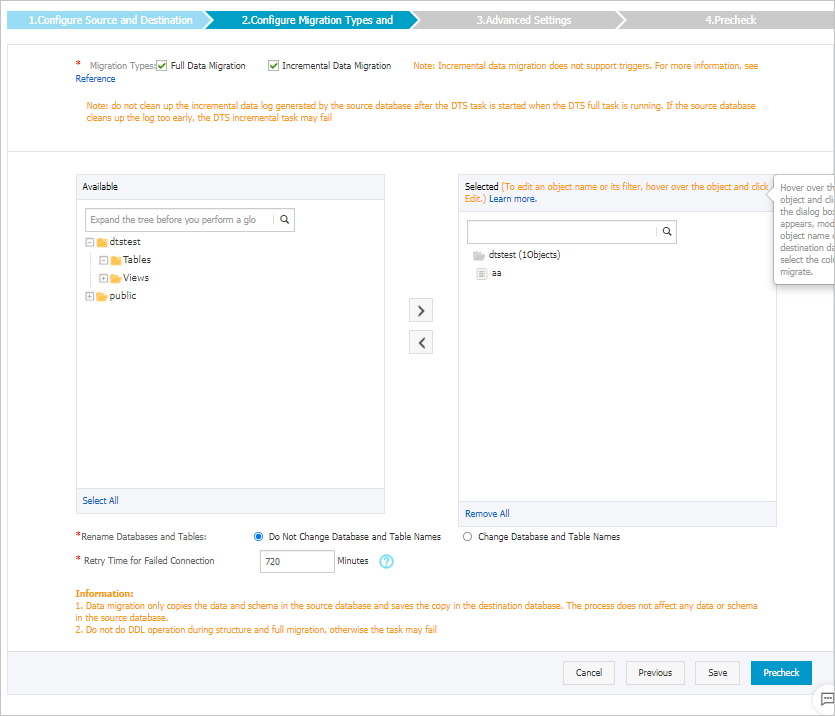
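Before you rely on Test Connectivity, you may want to confirm from your own client that the Oracle endpoint accepts connections with the same settings. The sketch below maps the destination Instance Type choice to an Oracle JDBC thin URL: a SID uses the `host:port:SID` form, and a service name uses the `//host:port/service_name` form. The function name and the sample host and SID values are illustrative; DTS builds its own connection from the console fields.

```python
from typing import Optional


def oracle_jdbc_url(host: str, port: int, instance_type: str,
                    sid: Optional[str] = None,
                    service_name: Optional[str] = None) -> str:
    """Build an Oracle JDBC thin URL that mirrors the DTS Instance Type choice.

    Non-RAC Instance connects by SID; RAC or PDB Instance connects by service name.
    """
    if instance_type == "Non-RAC Instance":
        if not sid:
            raise ValueError("Non-RAC Instance requires a SID")
        return f"jdbc:oracle:thin:@{host}:{port}:{sid}"
    if instance_type == "RAC or PDB Instance":
        if not service_name:
            raise ValueError("RAC or PDB Instance requires a service name")
        return f"jdbc:oracle:thin:@//{host}:{port}/{service_name}"
    raise ValueError(f"unknown instance type: {instance_type}")


print(oracle_jdbc_url("203.0.113.10", 1521, "Non-RAC Instance", sid="ORCL"))
```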

- Select the migration types, the migration policy, and the objects to migrate.

| Setting | Description |
|---|---|
| Specify whether to rename objects | You can use the object name mapping feature to rename the objects that are migrated to the destination database. For more information, see Object name mapping. |
| Specify the retry time range for failed connections to the source or destination database | By default, if DTS fails to connect to the source or destination database, DTS retries within the next 720 minutes (12 hours). You can specify the retry time range based on your business requirements. If DTS is reconnected to the source and destination databases within the specified time range, DTS resumes the data migration task. Otherwise, the data migration task fails. Note Within the time range in which DTS attempts to reconnect to the source and destination databases, you are charged for the DTS instance. We recommend that you specify the retry time range based on your business requirements. You can also release the DTS instance at the earliest opportunity after the source and destination databases are released. |

- In the lower-right corner of the page, click Precheck. Note

- Before you can start the data migration task, DTS performs a precheck. You can start the data migration task only after the task passes the precheck.

- If the task fails to pass the precheck, you can click the icon next to each failed item to view details.

- You can troubleshoot the issues based on the causes and run a precheck again.

- If you do not need to troubleshoot the issues, you can ignore failed items and run a precheck again.

- After the task passes the precheck, click Next.

- In the Confirm Settings dialog box, specify the Channel Specification parameter and select the Data Transmission Service (Pay-As-You-Go) Service Terms check box.

- Click Buy and Start to start the data migration task.

- Full data migration

We recommend that you do not manually stop the task during full data migration. Otherwise, the data migrated to the destination database may be incomplete. You can wait until the data migration task automatically stops.

- Full data migration and incremental data migration

An incremental data migration task does not automatically stop. You must manually stop the task.

Important We recommend that you select an appropriate time to manually stop the data migration task. For example, you can stop the task during off-peak hours or before you switch your workloads to the destination database.

- Wait until Incremental Data Migration and The migration task is not delayed are displayed in the progress bar of the migration task. Then, stop writing data to the source database for a few minutes. During this period, the latency of Incremental Data Migration may be displayed in the progress bar.

- Wait until the status of Incremental Data Migration changes to The data migration task is not delayed again. Then, manually stop the migration task.
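The stopping procedure above follows a fixed order: wait until the task is not delayed, stop writes to the source, wait until the task is not delayed again, and only then stop the task. The sketch below expresses that order as a polling loop. `get_status`, `stop_source_writes`, and `stop_task` are hypothetical callables that you would back with the DTS console, the DTS API, or your own application; the phase string mirrors the progress-bar label quoted above.

```python
import time
from typing import Callable, Tuple


def wait_until_not_delayed(get_status: Callable[[], Tuple[str, bool]],
                           poll_seconds: float, sleep=time.sleep) -> None:
    """Block until the task reports the Incremental Data Migration phase with no delay."""
    while True:
        phase, delayed = get_status()
        if phase == "Incremental Data Migration" and not delayed:
            return
        sleep(poll_seconds)


def cut_over(get_status, stop_source_writes, stop_task,
             quiet_seconds: float, poll_seconds: float = 30.0, sleep=time.sleep) -> None:
    """Not delayed -> stop source writes -> not delayed again -> stop the task."""
    wait_until_not_delayed(get_status, poll_seconds, sleep)
    stop_source_writes()
    sleep(quiet_seconds)  # keep source writes stopped for a few minutes
    wait_until_not_delayed(get_status, poll_seconds, sleep)
    stop_task()
```

Injecting `sleep` keeps the sketch testable without real waiting; in practice you would pass a quiet period of a few minutes and a polling interval that suits your monitoring.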
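The retry time range setting in the object-selection step behaves like a deadline-bounded retry loop: DTS keeps trying to reconnect until the range (720 minutes by default) elapses, resumes the task if a connection succeeds, and fails the task otherwise. The sketch below models only that described behavior. `reconnect_within`, the 60-second retry interval, and the injected clock are assumptions, not DTS internals.

```python
import time

DEFAULT_RETRY_MINUTES = 720  # default retry time range described above (12 hours)


def reconnect_within(connect, retry_minutes: float = DEFAULT_RETRY_MINUTES,
                     interval_seconds: float = 60.0,
                     clock=time.monotonic, sleep=time.sleep) -> bool:
    """Retry `connect` until it returns True or the retry window elapses.

    Returns True if a connection succeeded (the task resumes) and False if
    the window elapsed first (the task fails).
    """
    deadline = clock() + retry_minutes * 60
    while True:
        if connect():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_seconds)
```

A longer window tolerates longer outages, but as the note in that step says, you are charged for the DTS instance while it keeps retrying.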
