If performance metrics such as memory usage, CPU utilization, and bandwidth usage are much higher on specific data nodes of a Tair (Redis OSS-compatible) cluster instance than on other data nodes, the cluster instance may have data skew issues. If the instance data is severely skewed, exceptions such as key evictions, out of memory (OOM) errors, and prolonged responses may occur even when the overall memory usage of the instance is low.

Quick handling

This section describes how to confirm whether data skew exists and how to identify and handle large keys.

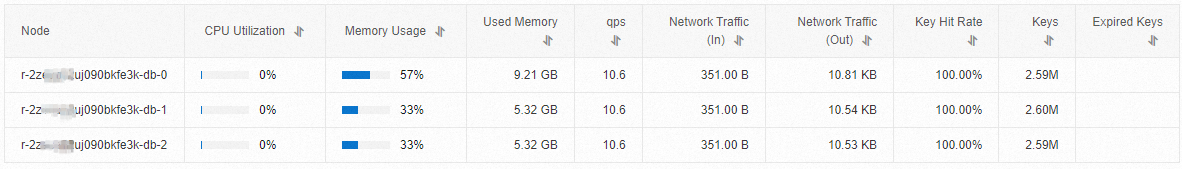

In the left-side navigation pane of the instance details page, choose CloudDBA > Real-time Performance to check whether the Memory Usage metric is balanced across the data nodes.

In this example, the Memory Usage metric of the db-0 data node is significantly higher than that of other data nodes. This indicates that data skew occurs on the instance.

Note: You can also use the instance diagnostics feature to check whether data skew exists on the current instance. For more information, see Perform diagnostics on an instance.

View the total number of keys for each data node.

In this example, the total number of keys is 2.59 million in db-0, 2.60 million in db-1, and 2.59 million in db-2. The keys are evenly distributed across the data nodes. In this case, it is highly likely that the db-0 data node has large keys.

NoteIf the keys are unevenly distributed across the data nodes, it may indicate an issue with the key name design in your business logic. For example, the use of hash tags can cause the client to route all keys to the same data node when the Cyclic Redundancy Check (CRC) algorithm is used for distribution. In this case, you must make adjustments at the application level to avoid using

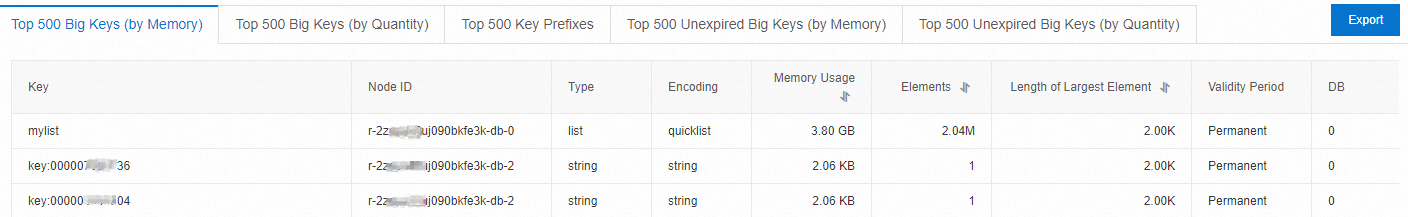

{}in key names.Use the offline key analysis feature to quickly identify large keys.

This feature analyzes the backup files of the instance without affecting online business. After the analysis is complete, you can view the top 500 large keys. In this example, the key named mylist is identified as a large key.

(Optional) Connect to a specific data node for query and analysis.

For a cluster instance in proxy mode, you can run the SCAN command on the specified data node by using the ISCAN command developed in-house by Alibaba Cloud. For more information, see In-house commands for instances in proxy mode.

For a cluster instance in direct connection mode, you can call the DescribeDBNodeDirectVipInfo operation to obtain the virtual IP address (VIP) of each data node, and then connect the client to the data node for analysis.

Use one of the following solutions:

Provisional solution: Upgrade the instance specifications.

Long-term solution (recommended): Restructure the business logic by appropriately splitting large keys.
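The optional per-node analysis step above can be sketched in Python. This is a minimal illustration, not an official tool: it assumes a client object that is connected to a single data node and that exposes `scan_iter()` and `memory_usage()` the way the redis-py `Redis` class does. The function name `find_large_keys` and the 1 MB default threshold are hypothetical choices made for this example.

```python
import heapq
from typing import Any, List, Tuple

# Hypothetical threshold for this sketch: treat keys of 1 MB or more as large.
DEFAULT_THRESHOLD_BYTES = 1024 * 1024


def find_large_keys(client: Any,
                    threshold_bytes: int = DEFAULT_THRESHOLD_BYTES,
                    top_n: int = 10,
                    scan_count: int = 100) -> List[Tuple[int, Any]]:
    """Return up to top_n (size_in_bytes, key) pairs, largest first.

    `client` is assumed to be connected to one data node and to provide
    scan_iter(count=...) and memory_usage(key), as redis-py clients do.
    """
    candidates = []
    # SCAN walks the keyspace incrementally instead of blocking like KEYS.
    for key in client.scan_iter(count=scan_count):
        size = client.memory_usage(key)  # None if the key no longer exists
        if size is not None and size >= threshold_bytes:
            candidates.append((size, key))
    # Keep only the largest entries, compared by size.
    return heapq.nlargest(top_n, candidates, key=lambda item: item[0])
```

With redis-py installed, the function could be called as `find_large_keys(redis.Redis(host=node_vip, port=6379))`, where `node_vip` is a placeholder for the address of the data node. Because the scan issues one extra command per key, it is best run during off-peak hours; the offline key analysis feature remains the option that does not touch online traffic.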

For more information about the causes of and solutions to data skew issues, see the following section. This topic is also suitable for troubleshooting issues related to high performance metrics such as memory usage, CPU utilization, bandwidth usage, and latency in standard instances.

Why does data skew occur?

Tair (Redis OSS-compatible) cluster instances use a distributed architecture. The storage space of a cluster instance is split into 16,384 slots. Each data node stores and handles the data in specific slots. For example, in a 3-shard cluster instance, slots are distributed among the three shards in the following way: the first shard handles slots in the [0,5460] range, the second shard handles slots in the [5461,10922] range, and the third shard handles slots in the [10923,16383] range. When you write a key to a cluster instance or update a key of a cluster instance, the client determines the slot to which the key belongs by using the following formula: Slot=CRC16(key)%16384. Then, the client writes the key to the slot. In theory, this mechanism evenly distributes keys among data nodes, and is sufficient to maintain the values of metrics such as memory usage and CPU utilization at almost the same level across all data nodes.
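The slot formula above can be reproduced with a short Python sketch. It assumes the CRC16 variant defined by the open source Redis Cluster specification (CRC16-XMODEM, which `binascii.crc_hqx` computes when the initial value is 0) and the hash tag rule from the same specification: if a key contains a non-empty `{...}` section, only that section is hashed. The function names and the `SHARD_RANGES` constant are illustrative; the ranges are the ones from the 3-shard example above.

```python
import binascii

TOTAL_SLOTS = 16384

# Inclusive slot ranges from the 3-shard example in the text.
SHARD_RANGES = [(0, 5460), (5461, 10922), (10923, 16383)]


def hashed_part(key: bytes) -> bytes:
    """Return the part of the key that is fed to CRC16.

    If the key contains '{' followed later by '}' with at least one
    character in between, only that content (the hash tag) is hashed.
    Otherwise the whole key is hashed.
    """
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        if end != -1 and end > start + 1:
            return key[start + 1:end]
    return key


def key_slot(key: str) -> int:
    """Compute Slot = CRC16(key) % 16384."""
    return binascii.crc_hqx(hashed_part(key.encode("utf-8")), 0) % TOTAL_SLOTS


def shard_for_slot(slot: int) -> int:
    """Map a slot to a 0-based shard index by using SHARD_RANGES."""
    for index, (low, high) in enumerate(SHARD_RANGES):
        if low <= slot <= high:
            return index
    raise ValueError(f"slot {slot} is out of range")


if __name__ == "__main__":
    for key in ("foo", "{user1000}.following", "{user1000}.followers"):
        slot = key_slot(key)
        print(f"{key}: slot {slot}, shard index {shard_for_slot(slot)}")
```

The two `{user1000}` keys always map to the same slot because only `user1000` is hashed. This is why heavy use of one hash tag concentrates keys on a single data node.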

However, in actual practice, data skew may occur due to a lack of advance planning, unusual data writes, or data access spikes.

Typically, data skew occurs when the resources of specific data nodes are in higher demand compared with those of other data nodes. You can view the metrics of data nodes on the Data Node tab of the Performance Monitor page in the console. If the metrics of a data node are consistently 20% higher than those of other data nodes, data skew may be present. The larger the difference between the metrics of the abnormal data node and those of the normal data nodes, the more severe the data skew.

When the memory usage of a single data node reaches 100%, the data node triggers data eviction. The default eviction policy is volatile-lru.
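The 20% rule of thumb and the per-node eviction trigger described above can be sketched as follows. This is an illustration under stated assumptions: "20% higher" is interpreted as a relative difference against the average of the other data nodes, all data nodes are assumed to have the same capacity, and the sample figures are invented for the example.

```python
from statistics import mean
from typing import Dict, List

SKEW_THRESHOLD = 0.20  # the 20% rule of thumb from the text


def find_skewed_nodes(usage_by_node: Dict[str, float],
                      threshold: float = SKEW_THRESHOLD) -> List[str]:
    """Return nodes whose metric exceeds the mean of the other nodes
    by more than `threshold` (relative difference)."""
    skewed = []
    for node, value in usage_by_node.items():
        others = [v for name, v in usage_by_node.items() if name != node]
        if not others:
            continue  # a single node cannot be skewed relative to others
        baseline = mean(others)
        if baseline > 0 and (value - baseline) / baseline > threshold:
            skewed.append(node)
    return skewed


def nodes_at_eviction(usage_by_node: Dict[str, float]) -> List[str]:
    """Return nodes whose memory usage has reached 100%."""
    return [node for node, value in usage_by_node.items() if value >= 100.0]


if __name__ == "__main__":
    # Invented memory usage percentages for a 3-node instance.
    memory_usage = {"db-0": 100.0, "db-1": 35.0, "db-2": 34.0}
    print("skewed nodes:", find_skewed_nodes(memory_usage))
    print("nodes evicting data:", nodes_at_eviction(memory_usage))
    # With equal node capacity, instance-level usage is the plain average.
    print("instance usage: %.1f%%" % mean(memory_usage.values()))
```

In the sample data, one data node has reached 100% and starts evicting keys while the instance-level usage is still well below full. This is the situation in which evictions or OOM errors occur even though the instance as a whole appears to have free memory.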

The following figure shows two typical data skew scenarios. Although keys are evenly distributed across the cluster instance (two keys per data node), data skew still occurs.

The number of queries per second (QPS) for key1 on the Shard 1 data node is much higher than that of other keys. This is a case of data access skew, which can lead to high CPU utilization and bandwidth usage on the data node. This affects the performance of all keys on the data node.

The size of key5 on the Shard 2 data node is 1 MB, which is much larger than that of other keys. This is considered a case of data volume skew, which can lead to high memory and bandwidth usage on the data node. This affects the performance of all keys on the data node.

Provisional solutions

If data skew occurs on an instance, you can implement a provisional solution to mitigate the issue in the short term.

Issue | Possible cause | Provisional solution |

Memory usage skew | Large keys and hash tags | Upgrade the instance specifications. For more information, see Change the configurations of an instance. |

Bandwidth usage skew | Large keys, hotkeys, and resource-intensive commands | Increase the bandwidth of one or more data nodes. For more information, see Manually increase the bandwidth of an instance. Note: The bandwidth of a data node can be increased by up to six times the default bandwidth of the instance, but the increase in bandwidth cannot exceed 192 Mbit/s. If this measure still does not resolve the data skew issue, we recommend that you make adjustments at the application level. |

CPU utilization skew | Large keys, hotkeys, and resource-intensive commands | Note: Scale the instance during off-peak hours because the data migration process that occurs during scaling can consume significant CPU resources. |

To temporarily address data skew, you can also reduce requests for large keys and hotkeys. However, issues related to large keys and hotkeys can be resolved only at the application level. We recommend that you promptly identify the cause of data skew in your instance and handle it in your application to optimize instance performance. For more information, see the Causes and solutions section of this topic.

Causes and solutions

To resolve the root cause of data skew, we recommend that you evaluate your business growth and make the necessary preparations for future growth. You can take measures to split large keys and write data in a manner that conforms to the expected usage.

Cause | Description | Solution |

Large keys | A large key is identified based on the size of the key and the number of members in the key. Typically, large keys are common in key-value data structures such as Hash, List, Set, and Zset. Large keys occur when these structures store a large number of fields or fields that are excessively large. Large keys are one of the main culprits in data skew. For more information about how to gracefully delete large keys or hotkeys without affecting your business, see Identify and handle large keys and hotkeys. |

|

Hotkeys | Hotkeys are keys that have a much higher QPS value than other keys. Hotkeys are commonly found during stress testing on a single key, or in flash sale scenarios involving popular product IDs. For more information about hotkeys, see Identify and handle large keys and hotkeys. |

|

Resource-intensive commands | Each command has a metric called time complexity that measures its resource and time consumption. In most cases, the higher the time complexity of a command, the more resources the command consumes. For example, the time complexity of the |

|

Hash tags | Tair distributes keys to specific data nodes based on the content contained in the |

|

FAQ

In a cluster instance with large keys, can I upgrade the specifications of the data node where a large key resides?

No. Tair (Redis OSS-compatible) does not allow you to separately upgrade the specifications of individual data nodes. It only allows for simultaneous upgrades of specifications for all data nodes in the instance.

Can I add data nodes and redistribute keys to eliminate large keys in a cluster instance?

No. While adding data nodes can help relieve some of the memory pressure from existing data nodes, Tair (Redis OSS-compatible) distributes data at the key level, which means that large keys cannot be automatically split. As a result, after the keys are redistributed, one data node may still have higher memory usage than others, resulting in data skew. The recommended approach is to manually split large keys into smaller ones.
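As an illustration of manually splitting a large key, the following Python sketch spreads the fields of one large hash across several smaller hashes. It is one possible approach, not a prescribed method: the `base_key:index` naming scheme, the use of CRC32 on the field name, and the bucket count are all assumptions made for this example.

```python
import zlib
from collections import defaultdict
from typing import Dict


def sub_key(base_key: str, field: str, buckets: int) -> str:
    """Return the name of the smaller hash that stores `field`.

    The bucket index is derived from the field name, so readers and
    writers can locate a field without any lookup table.
    """
    index = zlib.crc32(field.encode("utf-8")) % buckets
    return f"{base_key}:{index}"


def split_hash(base_key: str, fields: Dict[str, str],
               buckets: int) -> Dict[str, Dict[str, str]]:
    """Group the fields of one large hash into up to `buckets` smaller hashes."""
    parts: Dict[str, Dict[str, str]] = defaultdict(dict)
    for field, value in fields.items():
        parts[sub_key(base_key, field, buckets)][field] = value
    return dict(parts)


if __name__ == "__main__":
    # Invented data that stands in for the fields of one large hash.
    big_hash = {f"field{i}": str(i) for i in range(10000)}
    for name, part in sorted(split_hash("myhash", big_hash, 8).items()):
        print(name, len(part))
```

Each resulting key name is hashed to its own slot, so the smaller hashes can land on different data nodes. The sub-key names must not share a hash tag in `{}`. Otherwise, all of them map to the same slot and the skew remains.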