If the resources allocated to your cluster are insufficient to schedule application pods during periods of high demand, you can enable auto scaling for an ACK One registered cluster. Auto scaling automatically adds nodes and increases the resources available for scheduling. Two elasticity solutions are available: node auto scaling and node instant scaling. Compared with node auto scaling, node instant scaling offers faster scaling, higher delivery efficiency, and lower operational complexity.

Prerequisites

You have created a node pool.

You have read node scaling to understand its working principles and features.

Step 1: Configure RAM permissions

Create a RAM user and grant the following custom policy to the user. For more information, see Use RAM to authorize access to clusters and cloud resources.

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left navigation pane, choose .

On the Secrets page, click Create from YAML. Enter the following sample code to create a Secret named alibaba-addon-secret.

Note: Components use the AccessKey ID and AccessKey secret stored in this Secret to access cloud services. Skip this step if a Secret named alibaba-addon-secret already exists.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: alibaba-addon-secret
  namespace: kube-system
type: Opaque
stringData:
  # Replace the placeholders with the AccessKey pair of the RAM user created above.
  access-key-id: <AccessKeyID of the RAM user>
  access-key-secret: <AccessKeySecret of the RAM user>
```

Step 2: Configure the node scaling solution

Enable node auto scaling

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster to manage and click its name. In the left navigation pane, choose .

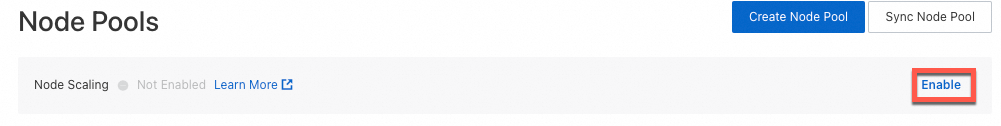

On the Node Pools page, click Enable next to Node Scaling.

If this is the first time you use the node auto scaling feature, follow the on-screen instructions to activate the Auto Scaling service and complete the authorization. Otherwise, skip this step.

For an ACK managed cluster, authorize ACK to use the AliyunCSManagedAutoScalerRole for accessing your cloud resources.

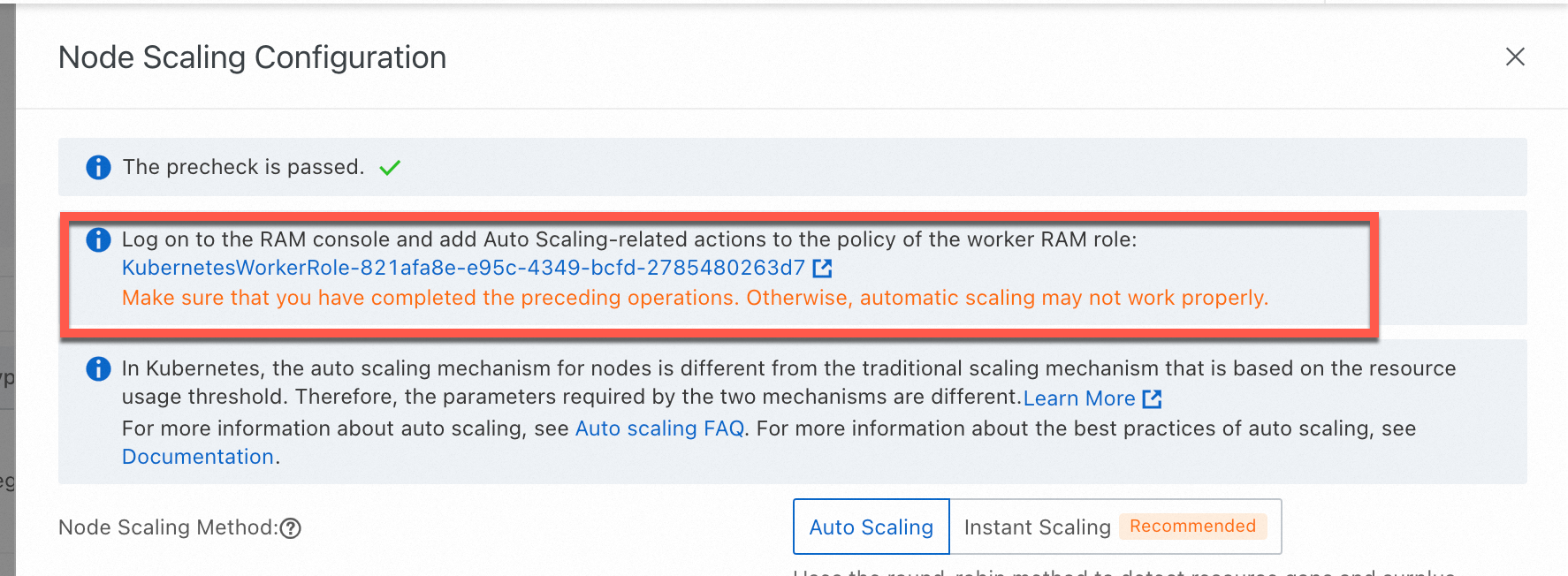

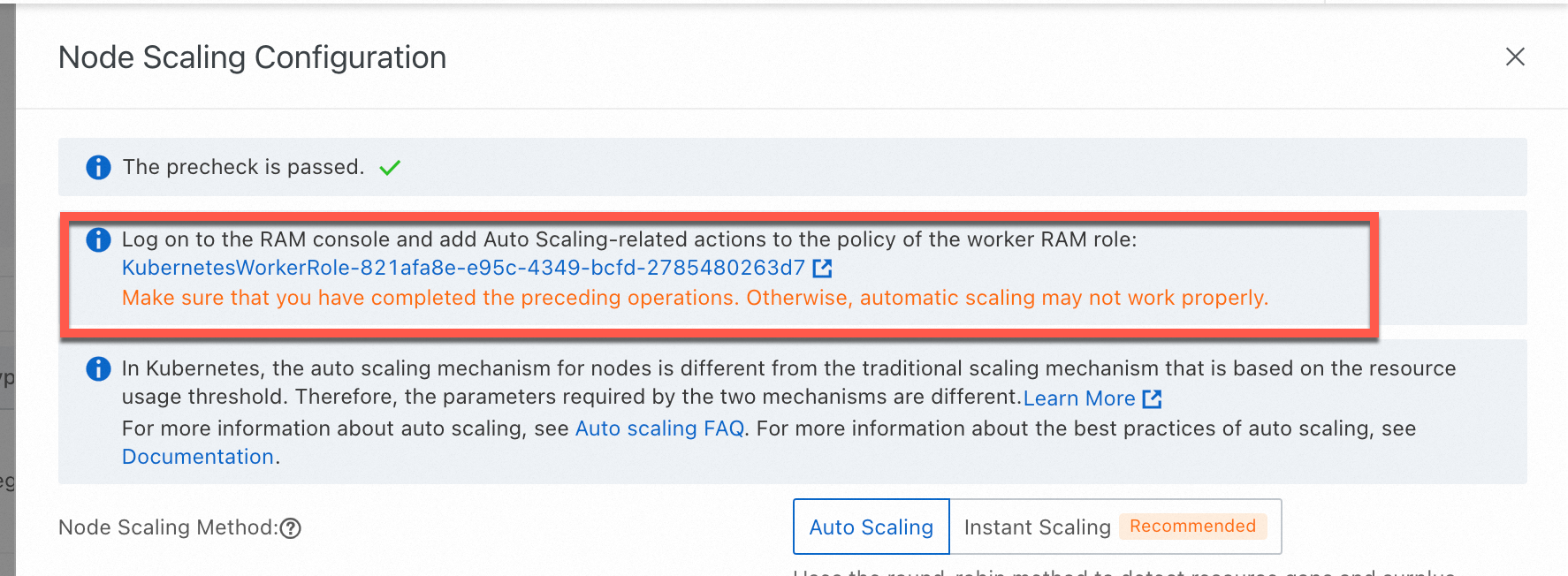

For an ACK dedicated cluster, authorize ACK to use the KubernetesWorkerRole and AliyunCSManagedAutoScalerRolePolicy for scaling management. The following figure shows the console page on which you can make the authorization when you enable Node Scaling.

In the Node Scaling Configuration panel, set Node Scaling Method to Auto Scaling, configure scaling parameters, and click OK.

Enable node instant scaling

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster to manage and click its name. In the left navigation pane, choose .

On the Node Pools page, click Enable next to Node Scaling.

If this is the first time you use the node scaling feature, follow the on-screen instructions to activate the Auto Scaling service and grant the required permissions. If you have already activated the service and granted the permissions, skip this step.

ACK managed clusters: Grant permissions to the AliyunCSManagedAutoScalerRole role.

ACK dedicated clusters: Grant permissions to the KubernetesWorkerRole role and the AliyunCSManagedAutoScalerRolePolicy system policy. The following figure shows the entries.

On the Node Scaling Configuration page, set Node Scaling Method to Instant Scaling, configure the scaling parameters, and then click OK.

After node scaling is enabled, the scaling component automatically triggers a scale-out based on the scheduling status of pods, for example, when pods remain pending because no existing node has enough resources for them.
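To see the scale-out trigger in practice, you can create a workload whose total resource requests exceed the free capacity of the existing nodes. Some of its pods then stay Pending, and the scaling component adds nodes. The following manifest is a minimal sketch under stated assumptions: the name scale-out-demo, the replica count, and the per-pod requests are hypothetical and should be sized to your own node pool. The pause image is used only because it consumes almost no real resources. Any image that your cluster can pull works.

```yaml
# Hypothetical demo workload: size it so that its total requests exceed the free
# capacity of the current nodes, which leaves some pods Pending.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: scale-out-demo          # hypothetical name
  namespace: default
spec:
  replicas: 10                  # hypothetical; raise it until some pods stay Pending
  selector:
    matchLabels:
      app: scale-out-demo
  template:
    metadata:
      labels:
        app: scale-out-demo
    spec:
      containers:
        - name: pause
          image: registry.k8s.io/pause:3.9   # placeholder container that does almost no work
          resources:
            requests:
              # Each pod's request must fit on a single node of the auto-scaled
              # node pool; otherwise adding nodes cannot make the pod schedulable.
              cpu: "1"
              memory: 1Gi
```

After the new nodes join the cluster, the Pending pods are scheduled to them. If you delete the Deployment afterwards, the requested resources on the added nodes drop. The nodes can then become scale-in candidates once the scale-in conditions are met.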

You can switch the node scaling solution after you select it. To switch the solution, change the Node Scaling Method to Auto Scaling. Carefully read the on-screen messages and follow the instructions. This feature is available only to users on the whitelist. To use it, submit a ticket.

| Configuration item | Description |
| --- | --- |
| Scale-in Threshold | The ratio of the resources requested on a single node to the resource capacity of that node, for nodes in a node pool that has node autoscaling enabled. A node can be scaled in only when this ratio is lower than the configured threshold. In other words, both the CPU utilization and the memory utilization of the node (requested resources divided by capacity) must be lower than the Scale-in Threshold. |
| GPU Scale-in Threshold | The scale-in threshold for GPU-accelerated instances. A GPU-accelerated node can be scaled in only when the ratio is lower than the configured threshold. In other words, the CPU, memory, and GPU utilization of the node must all be lower than the GPU Scale-in Threshold. |
| Defer Scale-in For | The interval between when a scale-in is required and when the scale-in is performed. Unit: minutes. Default value: 10. |

Important: The scaling component performs a scale-in only after the conditions specified by both Scale-in Threshold and Defer Scale-in For are met.
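The scale-in conditions above can be written compactly. This is a sketch in notation introduced here: R is the resources requested on node n, C is its capacity, θ is the Scale-in Threshold, θ_gpu is the GPU Scale-in Threshold, and T_defer is Defer Scale-in For. The last line assumes that the condition must hold continuously for the whole deferral window, which is how the open-source cluster-autoscaler treats unneeded nodes. Treat that line as an assumption about this component rather than a confirmed detail.

```latex
\begin{aligned}
u_{\mathrm{cpu}}(n) &= \frac{R_{\mathrm{cpu}}(n)}{C_{\mathrm{cpu}}(n)}, \qquad
u_{\mathrm{mem}}(n) = \frac{R_{\mathrm{mem}}(n)}{C_{\mathrm{mem}}(n)} \\
\text{candidate: } & \max\bigl(u_{\mathrm{cpu}}(n),\, u_{\mathrm{mem}}(n)\bigr) < \theta \\
\text{GPU candidate: } & \max\bigl(u_{\mathrm{cpu}}(n),\, u_{\mathrm{mem}}(n),\, u_{\mathrm{gpu}}(n)\bigr) < \theta_{\mathrm{gpu}} \\
\text{scale in at } t \text{ if: } & \text{the condition has held on } [t_0, t] \text{ and } t - t_0 \ge T_{\mathrm{defer}}
\end{aligned}
```

Consider a hypothetical example with θ = 0.5 and a node with 4 vCPUs and 8 GiB of memory whose pods request 1.5 vCPUs and 2 GiB. Then u_cpu = 1.5/4 = 0.375 and u_mem = 2/8 = 0.25. Both values are below 0.5, so the node becomes a scale-in candidate. With the default setting, it is removed only after it stays below the threshold for 10 minutes.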

Step 3: Configure a node pool with auto scaling enabled

You can either modify existing node pools by switching their Scaling Mode to Auto, or create new node pools with auto scaling enabled. Key configurations are as follows:

| Parameter | Description |
| --- | --- |
| Scaling Mode | Manual and Auto scaling are supported. In Auto mode, computing resources are automatically adjusted based on demand and the configured policies to reduce cluster costs. To use Auto mode, you must enable Node Scaling on the Node Pools page and set the Scaling Mode of the node pool to Auto. |
| Instances | The Min. Instances and Max. Instances defined for a node pool exclude your existing instances. |
| Instance-related parameters | Select the ECS instances used by the worker node pool based on instance types or attributes. You can filter instance families by attributes such as vCPU, memory, instance family, and architecture. For more information about the instance specifications not supported by ACK and how to configure nodes, see ECS instance type recommendations. When the node pool is scaled out, ECS instances of the selected instance types are created. The scaling policy of the node pool determines which instance types are used to create new nodes during scale-out activities. If you select only one instance type, fluctuations in ECS instance stock affect the scaling success rate. We recommend that you select multiple instance types to improve the success rate of node pool scale-out operations. |
| Operating System | When you enable auto scaling, you can select an image based on Alibaba Cloud Linux, Windows, or Windows Core. If you select an image based on Windows or Windows Core, the system automatically adds the |
| Node Labels | Node labels are automatically added to nodes that are added to the cluster by scale-out activities. Important: Auto scaling can recognize node labels and taints only after they are mapped to node pool tags. A node pool can have only a limited number of tags. Therefore, the total number of ECS tags, taints, and node labels of a node pool that has auto scaling enabled must be less than 12. |
| Scaling Policy | |
| Use Pay-as-you-go Instances When Preemptible Instances Are Insufficient | You must set the Billing Method parameter to Preemptible Instance. After this feature is enabled, if enough preemptible instances cannot be created due to price or inventory constraints, ACK automatically creates pay-as-you-go instances to meet the required number of ECS instances. |
| Enable Supplemental Spot Instances | You must set the Billing Method parameter to Spot Instance. After this feature is enabled, when the system receives a message that spot instances will be reclaimed (5 minutes before reclamation), ACK attempts to scale out new instances as compensation. If compensation succeeds, ACK drains the old nodes and removes them from the cluster. If compensation fails, ACK does not drain the old nodes, and the reclamation of the spot instances may cause service interruptions. After a compensation failure, ACK automatically purchases instances to maintain the expected node count once inventory becomes available or price conditions are met. For details, see Best practices for preemptible instance-based node pools. To improve the compensation success rate, we recommend that you also enable Use Pay-as-you-go Instances When Spot Instances Are Insufficient. |
| Taints | After you add taints to a node, ACK no longer schedules pods that do not tolerate those taints to the node. |
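Nodes added by scale-out activities inherit the node pool's labels and taints. A workload that should run on an auto-scaled node pool therefore typically selects one of the pool's labels and tolerates its taints. The following manifest is a minimal sketch under stated assumptions. The label workload-type=batch, the taint dedicated=batch:NoSchedule, and the workload name are hypothetical placeholders for whatever you configure on your own node pool.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: autoscaled-app                 # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: autoscaled-app
  template:
    metadata:
      labels:
        app: autoscaled-app
    spec:
      # Hypothetical node label configured in the node pool's Node Labels.
      nodeSelector:
        workload-type: batch
      # Hypothetical taint configured in the node pool's Taints; without this
      # toleration, the pods are not scheduled to the tainted nodes.
      tolerations:
        - key: dedicated
          operator: Equal
          value: batch
          effect: NoSchedule
      containers:
        - name: app
          image: registry.k8s.io/pause:3.9     # placeholder image
          resources:
            requests:
              cpu: 500m
              memory: 512Mi
```

Suppose these pods stay Pending because the matching nodes are full. Auto scaling can then scale out this node pool, provided the label and taint are mapped to node pool tags as described in the Node Labels row.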

Step 4: (Optional) Verify the result

After you complete the preceding operations, you can use the node auto scaling feature. The node pool list shows that auto scaling is enabled, and the cluster-autoscaler component is automatically installed in the cluster.

Auto scaling is enabled for the node pool

On the Node Pools page, the node pools that have auto scaling enabled are displayed in the node pool list.

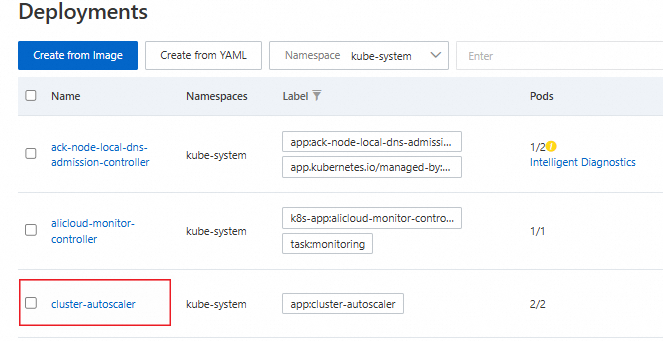

The cluster-autoscaler component is installed

In the left-side navigation pane of the details page, choose .

Select the kube-system namespace to view the cluster-autoscaler component.