Dify workflows often require scheduling to automate tasks in use cases such as risk monitoring, data analytics, content generation, and data synchronization. However, Dify does not natively support scheduling. This guide explains how to integrate XXL-JOB, a distributed task scheduling system, to schedule and monitor your workflow applications and ensure reliable operation.

XXL-JOB scheduling core features

| Feature | Overview |
| --- | --- |
| Task support | Supports scheduling self-hosted Dify workflows accessed over the public network or within an Alibaba Cloud internal network. |
| Flexible time configuration | |
| Enterprise-level alerts and monitoring | |
| Observability and scheduling dashboard | Provides an enterprise-grade scheduling dashboard that visualizes scheduling status at the instance and application levels, including key metrics such as scheduling trends, success rate, and failure rate. |
| Execution history and event tracking | |

Solution overview

Configure XXL-JOB to schedule a Dify workflow application in just three steps:

Create a Dify environment: Create a Container Service for Kubernetes (ACK) cluster, install the ack-dify component in the cluster, then access the Dify service and create a workflow application.

Create and configure a scheduling instance: Create an XXL-JOB scheduling instance, create an application within the instance to group tasks, then configure task parameters to connect to the Dify workflow application.

Test the integration: Verify that the distributed task scheduling function runs correctly and review the details of a successful scheduled execution.

1. Create a Dify environment

1. Deploy the Dify environment

Use one-click deployment to set up a Dify environment. It installs the ack-dify component and enables public network access for the Dify service.

Note: This guide uses public network access for demonstration purposes. For production environments, we recommend enabling Resource Access Management (RAM) to protect your application data.

If you are new to ACK clusters, click One-click deployment to create the runtime environment for the Dify service. This solution uses the ack-dify application template from the ACK App Marketplace to deploy Dify with Helm, which meets development and testing needs.

Important: Before you use one-click deployment, make sure that you have activated and authorized Container Service for Kubernetes (ACK). For details, see Quick creation of an ACK managed cluster.

Once the ack-dify component is installed, proceed to the next step.

2. View the external IP address

After the deployment is complete, go to Network > Services in the cluster, set the namespace to dify-system, and find the ack-dify service. The service's External IP is displayed there. To access the Dify service, enter this IP address in your browser's address bar.

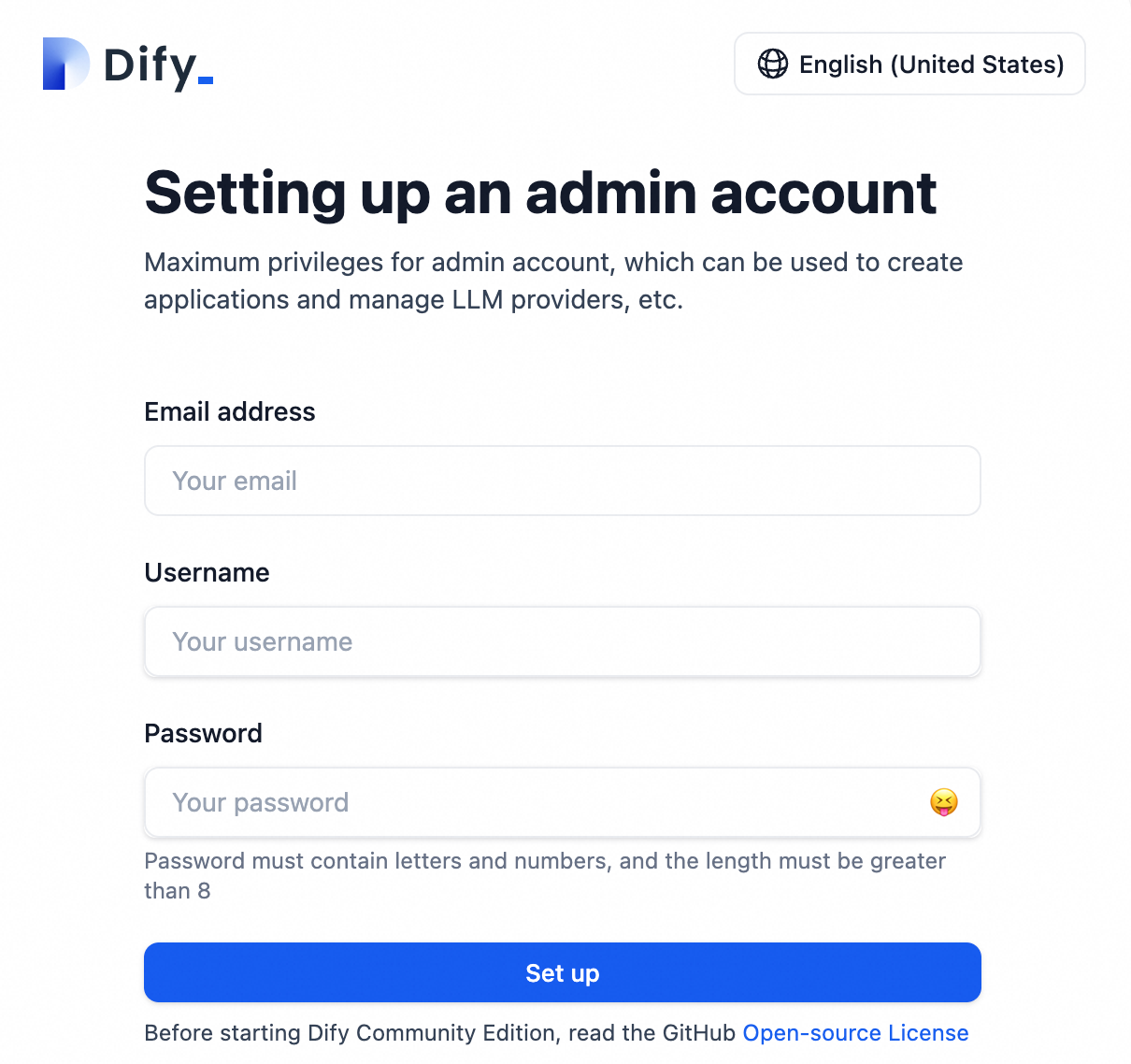

3. Register with the Dify service

Open the External IP in your browser. To register and use the Dify platform, follow the on-screen instructions to set up an admin account (email address, username, and password).

4. Create a Dify workflow application

For the scheduling tests that follow, create a simple Dify workflow. To quickly build and publish a workflow application, import the provided dify-flow.yml file into Dify.

Important: XXL-JOB can schedule only Dify workflow applications. Chat-type applications are not currently supported.

2. Create and configure a scheduling instance

1. Create an instance

Log on to the XXL-JOB console, select a Region in the top menu bar, and set the Instance Name and VPC ID. Make sure that the engine version is 2.2.0 or later. Then click Buy Now at the bottom of the page.

2. Create an application

Before you can use XXL-JOB tasks, you must create an application. Applications logically group tasks: each application acts as an independent task execution unit, which simplifies viewing, configuring, and scheduling its tasks.

On the XXL-JOB instance page, find the target instance and click Task Management in the Actions column. In the left navigation pane, select Application Management, then click Create Application.

3. Create a task

A task is the specific unit of business logic to be scheduled. After you create an application, bind it to a task executor. Once the executor registers with the application by using the application's AppName, it can run the tasks under that application.

Note: If you do not create an application first, the executor cannot register and its tasks cannot run.

4. Configure task parameters

After you publish the Dify workflow application, open its API Access page in Dify to view the values required for the task parameters.
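The values on the API Access page belong to Dify's workflow run API: a `POST` to `/workflows/run` under the API server address, authenticated with the app's API key as a Bearer token. As a rough sketch of what a single scheduled trigger amounts to, the Python snippet below builds that request with the standard library only. It is an illustration under these assumptions, not XXL-JOB's implementation; the address `http://203.0.113.10/v1`, the key `app-xxxx`, the input variable `query`, and the `user` value are hypothetical placeholders to replace with your own.

```python
import json
import urllib.request


def build_workflow_run_request(api_base: str, api_key: str, inputs: dict,
                               user: str = "xxl-job-scheduler") -> urllib.request.Request:
    """Build (but do not send) a POST request to Dify's workflow run endpoint.

    api_base is the API server address shown on the API Access page,
    for example "http://<External IP>/v1" (placeholder).
    """
    url = api_base.rstrip("/") + "/workflows/run"
    body = {
        "inputs": inputs,             # values for the workflow's start-node variables
        "response_mode": "blocking",  # wait for the run to finish and return the result
        "user": user,                 # caller identifier; hypothetical default value
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder address, key, and input variable name.
    req = build_workflow_run_request("http://203.0.113.10/v1", "app-xxxx",
                                     {"query": "daily report"})
    print(req.get_method(), req.full_url)
    # To actually trigger the workflow (requires a reachable Dify service):
    # with urllib.request.urlopen(req, timeout=60) as resp:
    #     print(json.load(resp))
```

Blocking mode makes the call return only after the workflow finishes, which fits a scheduler that records one outcome per run; Dify's streaming mode returns server-sent events instead.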

3. Test the integration

1. Run a test task

To verify that scheduling works, go to Task Management, find the task, and click Run Once.

2. View scheduling details

Choose Execution List and click Details to view the scheduling information for the task. You can confirm that the test task succeeded and review its details.
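To cross-check a run from Dify's side as well, note that a blocking-mode response from the workflow run API reports the result under `data`: `status` (for example `succeeded` or `failed`), `error`, `outputs`, and `elapsed_time`, alongside a top-level `workflow_run_id`. The hypothetical helper below extracts those fields so a run can be judged programmatically. It is a sketch for cross-checking, not part of XXL-JOB's Execution List, and the sample payload is invented for illustration.

```python
import json
from typing import Any, Dict


def summarize_workflow_run(raw_body: str) -> Dict[str, Any]:
    """Pull status fields out of a blocking-mode /workflows/run response body."""
    payload = json.loads(raw_body)
    data = payload.get("data") or {}
    status = data.get("status")  # e.g. "succeeded", "failed", "stopped"
    return {
        "workflow_run_id": payload.get("workflow_run_id"),
        "status": status,
        "succeeded": status == "succeeded",
        "error": data.get("error"),
        "elapsed_time": data.get("elapsed_time"),
        "outputs": data.get("outputs") or {},  # empty dict when the run produced none
    }


if __name__ == "__main__":
    # Illustrative payload shaped like a Dify blocking response; values are made up.
    sample = json.dumps({
        "workflow_run_id": "run-001",
        "task_id": "task-001",
        "data": {
            "status": "succeeded",
            "outputs": {"text": "ok"},
            "error": None,
            "elapsed_time": 1.2,
        },
    })
    summary = summarize_workflow_run(sample)
    print(summary["status"], summary["succeeded"])
```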

3. View details


Disclaimer

Dify on ACK is a Helm deployment solution that adapts the open source Dify project for the Alibaba Cloud ACK environment, enabling rapid deployment. ACK does not guarantee the operation of the Dify application itself or its compatibility with other ecosystem components, such as plug-ins and databases. ACK does not provide compensation or other commercial services for business losses caused by defects in Dify or its ecosystem components. We recommend that you follow updates from the open source community and proactively fix issues in the open source software to ensure the stability and security of your Dify deployment.