After logs are shipped from Simple Log Service to Object Storage Service (OSS), they can be stored in various formats. This topic describes the Parquet format.

The earlier version of the feature that ships logs to OSS has been discontinued. Use the new version instead.

Parameters

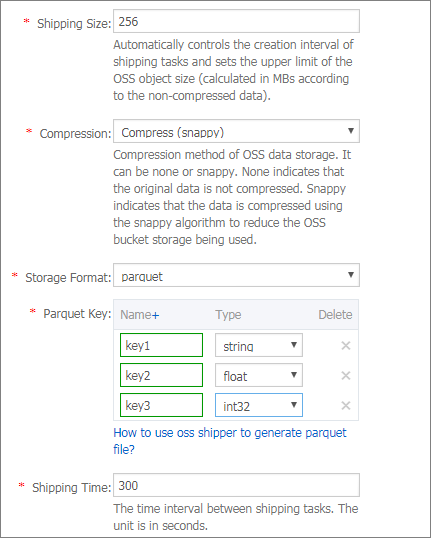

The preceding figure shows the parameters that you must configure when you set [Storage Format] to parquet in a shipping rule. For more information, see Ship log data from Simple Log Service to OSS.

The following table describes the parameters.

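Parameter | Description |
--- | --- |
Key name | The names of the log fields that you want to ship to OSS. You can view log fields on the [Raw Logs] tab of the Logstore. We recommend that you add log fields one at a time. When log fields are shipped to OSS, they are stored in the Parquet file in the order in which you added them, and each log field name is used as a column name in the Parquet file. The log fields that you can ship include the fields in log content and reserved fields such as __time__, __topic__, and __source__. For more information about reserved fields, see Reserved fields. Column values in the Parquet file are null in the following scenarios: |
Type | Parquet supports six data types: string, boolean, int32, int64, float, and double. When logs are shipped, string data is stored in Parquet as the byte_array type, and the system … the Parquet data … |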
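The column rules in the table (column names come from the key names, column order follows the order in which the keys were added, and string is stored as byte_array) can be sketched in Python. This is a minimal illustration, not how Simple Log Service writes its files: build_columns and the sample keys are hypothetical, and the sketch assumes that the other five types map to the Parquet physical types of the same name, which this topic does not state.

```python
from typing import List, Tuple

# Parquet physical type for each type that a shipping rule can specify.
# string -> BYTE_ARRAY comes from the parameter table.
# Assumption: the other five map to the physical types of the same name.
PHYSICAL_TYPES = {
    "string": "BYTE_ARRAY",
    "boolean": "BOOLEAN",
    "int32": "INT32",
    "int64": "INT64",
    "float": "FLOAT",
    "double": "DOUBLE",
}


def build_columns(keys: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return (column name, physical type) pairs for hypothetical key settings.

    Columns keep the order in which the keys are listed (the order in which
    they were added), and each column is named after its log field.
    """
    columns = []
    for name, type_name in keys:
        if type_name not in PHYSICAL_TYPES:
            raise ValueError(f"unsupported type for {name!r}: {type_name!r}")
        columns.append((name, PHYSICAL_TYPES[type_name]))
    return columns


# Illustrative key settings, listed in the order they were added.
print(build_columns([("remote_addr", "string"), ("body_bytes_sent", "string")]))
# [('remote_addr', 'BYTE_ARRAY'), ('body_bytes_sent', 'BYTE_ARRAY')]
```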

Sample URLs of OSS objects

After logs are shipped to OSS, they are stored in an OSS bucket. The following table shows sample URLs of the OSS objects that store the logs.

Compression type | Object suffix | Sample URL | Description |
--- | --- | --- | --- |
No compression | .parquet | oss://oss-shipper-shenzhen/ecs_test/2016/01/26/20/54_1453812893059571256_937.parquet | Download the OSS object to your computer and use the data in the object. For more information, see Use the data. |
Snappy | .snappy.parquet | oss://oss-shipper-shenzhen/ecs_test/2016/01/26/20/54_1453812893059571256_937.snappy.parquet | |
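If you process shipped objects in bulk, the suffixes in the table are enough to tell the two variants apart. A minimal Python sketch, assuming object URLs have the oss://bucket/key form of the samples above; split_oss_url and compression_of are hypothetical helpers, and the sketch does not interpret the date path or the object name.

```python
from urllib.parse import urlparse

# Object suffixes from the table above. The longer suffix is checked first
# so that ".snappy.parquet" is not classified as plain ".parquet".
SUFFIXES = [(".snappy.parquet", "Snappy"), (".parquet", "none")]


def split_oss_url(url: str) -> tuple:
    """Split an oss://bucket/key URL (assumed form) into (bucket, key)."""
    parsed = urlparse(url)
    if parsed.scheme != "oss":
        raise ValueError(f"not an OSS URL: {url}")
    return parsed.netloc, parsed.path.lstrip("/")


def compression_of(url: str) -> str:
    """Return the compression type implied by the object suffix."""
    _, key = split_oss_url(url)
    for suffix, compression in SUFFIXES:
        if key.endswith(suffix):
            return compression
    raise ValueError(f"not a shipped Parquet object: {url}")


url = "oss://oss-shipper-shenzhen/ecs_test/2016/01/26/20/54_1453812893059571256_937.snappy.parquet"
print(split_oss_url(url)[0])  # oss-shipper-shenzhen
print(compression_of(url))    # Snappy
```

Parquet readers take the codec from each file's own metadata (the compression field that parquet-tools inspect reports), so the suffix is only useful for listing, filtering, or routing objects.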

Use the data

Use E-MapReduce, Spark, or Hive to consume the data that is shipped to OSS. For more information, see LanguageManual DDL.

Use an inspection tool to consume the data.

parquet-tools is a Python package that you can use to inspect Parquet files, view file details, and read data. Run the following command to install it, or install it in another way:

    pip3 install parquet-tools

View the data in specific columns of a Parquet file

Command

View the data in the remote_addr and body_bytes_sent columns. The -n 2 option limits the output to two rows.

    parquet-tools show -n 2 -c remote_addr,body_bytes_sent 44_1693464263000000000_2288ff590970d092.parquet

Response

    +----------------+-------------------+
    | remote_addr    | body_bytes_sent   |
    |----------------+-------------------|
    | 61.243.1.63    | b'1904'           |
    | 112.235.74.182 | b'4996'           |
    +----------------+-------------------+
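The b'...' notation in the response is Python's representation of a bytes value: these columns are stored as BYTE_ARRAY, so numeric-looking fields such as body_bytes_sent still arrive as raw bytes. A minimal sketch of converting such rows, assuming your Parquet reader returns BYTE_ARRAY values as Python bytes objects (None for null); CONVERTERS and decode_row are hypothetical, and which columns to treat as numbers is your decision.

```python
from typing import Callable, Dict, Optional


def _as_text(raw: bytes) -> str:
    """Default converter: decode the raw BYTE_ARRAY value as UTF-8 text."""
    return raw.decode("utf-8")


# Hypothetical per-column converters. The file stores these columns as
# BYTE_ARRAY, so treating body_bytes_sent as an integer is your choice.
CONVERTERS: Dict[str, Callable[[bytes], object]] = {
    "body_bytes_sent": int,  # int() accepts bytes such as b"1904"
}


def decode_row(row: Dict[str, Optional[bytes]]) -> Dict[str, object]:
    """Convert one row of raw bytes values; None (null) stays None."""
    decoded = {}
    for name, raw in row.items():
        convert = CONVERTERS.get(name, _as_text)
        decoded[name] = None if raw is None else convert(raw)
    return decoded


# The two rows from the response above, as Python bytes values.
rows = [
    {"remote_addr": b"61.243.1.63", "body_bytes_sent": b"1904"},
    {"remote_addr": b"112.235.74.182", "body_bytes_sent": b"4996"},
]
decoded_rows = [decode_row(r) for r in rows]
print(sum(r["body_bytes_sent"] for r in decoded_rows))  # 6900
```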

View the content of a Parquet file (convert the file to CSV format)

Command

    parquet-tools csv -n 2 44_1693464263000000000_2288ff590970d092.parquet

Response

    remote_addr,body_bytes_sent,time_local,request_method,request_uri,http_user_agent,remote_user,request_time,request_length,http_referer,host,http_x_forwarded_for,upstream_response_time,status
    b'61.**.**.63',b'1904',b'31/Aug/2023:06:44:01',b'GET',b'/request/path-0/file-7',"b'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_5_8) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.801.0 Safari/535.1'",b'uh2z',b'49',b'4082',b'www.kwm.mock.com',b'www.ap.mock.com',b'222.**.**.161',b'2.63',b'200'
    b'112.**.**.182',b'4996',b'31/Aug/2023:06:44:01',b'GET',b'/request/path-1/file-5',b'Mozilla/5.0 (Windows NT 6.1; de;rv:12.0) Gecko/20120403211507 Firefox/12.0',b'tix',b'71',b'1862',b'www.gx.mock.com',b'www.da.mock.com',b'36.**.**.237',b'2.43',b'200'
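Each cell of the CSV response is also a Python bytes literal, so a plain CSV reader returns strings such as b'1904' rather than 1904. A minimal sketch that parses this output with the standard csv module and evaluates each cell with ast.literal_eval, which accepts only literals and does not run code. The function names are hypothetical, and treating empty cells as null is an assumption about how parquet-tools prints missing values.

```python
import ast
import csv
import io
from typing import Dict, List, Optional


def decode_cell(cell: str) -> Optional[str]:
    """Turn one cell such as b'1904' into text.

    ast.literal_eval evaluates the bytes literal (including escaped
    non-ASCII bytes), and the result is decoded as UTF-8.
    """
    if cell == "":
        return None  # Assumption: an empty cell represents null.
    value = ast.literal_eval(cell)
    if isinstance(value, bytes):
        return value.decode("utf-8")
    return None if value is None else str(value)


def parse_parquet_tools_csv(text: str) -> List[Dict[str, Optional[str]]]:
    """Parse `parquet-tools csv` output into one dict per row."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader)
    return [dict(zip(header, map(decode_cell, record))) for record in reader]


# A trimmed sample in the same shape as the response above (three columns).
sample = (
    "remote_addr,body_bytes_sent,http_user_agent\n"
    "b'61.**.**.63',b'1904',\"b'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_5_8) "
    "AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.801.0 Safari/535.1'\"\n"
)
for row in parse_parquet_tools_csv(sample):
    print(row["remote_addr"], row["body_bytes_sent"])  # 61.**.**.63 1904
```

For anything beyond a quick look, reading the Parquet object directly with a Parquet library is more robust than parsing this text output.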

View the details of a Parquet file

Command

    parquet-tools inspect 44_1693464263000000000_2288ff590970d092.parquet

Response

    ############ file meta data ############
    created_by: SLS version 1
    num_columns: 14
    num_rows: 4661
    num_row_groups: 1
    format_version: 1.0
    serialized_size: 2345

    ############ Columns ############
    remote_addr
    body_bytes_sent
    time_local
    request_method
    request_uri
    http_user_agent
    remote_user
    request_time
    request_length
    http_referer
    host
    http_x_forwarded_for
    upstream_response_time
    status

    ############ Column(remote_addr) ############
    name: remote_addr
    path: remote_addr
    max_definition_level: 1
    max_repetition_level: 0
    physical_type: BYTE_ARRAY
    logical_type: None
    converted_type (legacy): NONE
    compression: UNCOMPRESSED (space_saved: 0%)
    ......
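parquet-tools inspect reads the metadata stored in the file footer. If a downloaded object cannot be opened, a standard-library check of the file framing can show whether the object is complete before you look elsewhere for the cause. This minimal sketch relies only on the published Parquet file layout and does not parse the metadata itself; the function name read_footer_length is hypothetical.

```python
import os
import struct
import tempfile

MAGIC = b"PAR1"


def read_footer_length(path: str) -> int:
    """Validate Parquet framing and return the footer metadata length.

    Per the Parquet file format, a file starts with b"PAR1" and ends with
    the serialized footer metadata, a 4-byte little-endian length of that
    metadata, and b"PAR1" again.
    """
    size = os.path.getsize(path)
    if size < 12:  # leading magic + length field + trailing magic
        raise ValueError(f"{path} is too small to be a Parquet file")
    with open(path, "rb") as f:
        head = f.read(4)
        f.seek(-8, os.SEEK_END)  # last 8 bytes: length + trailing magic
        tail = f.read(8)
    if head != MAGIC or tail[4:] != MAGIC:
        raise ValueError(f"{path} does not have Parquet magic bytes")
    (length,) = struct.unpack("<I", tail[:4])
    if length > size - 12:
        raise ValueError(f"{path}: footer length exceeds file size (truncated?)")
    return length


# Self-check on a fake file that has only the Parquet framing (it is not a
# readable Parquet file): 4 bytes of "metadata" before the footer.
with tempfile.NamedTemporaryFile(suffix=".parquet", delete=False) as tmp:
    tmp.write(MAGIC + b"data" + b"meta" + struct.pack("<I", 4) + MAGIC)
demo_length = read_footer_length(tmp.name)
os.remove(tmp.name)
print(demo_length)  # 4
```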