By Zhouyang Lv

Hello everyone. I am Zhouyang Lv, a Platform Architect at the YKC Charging R&D Center. Today, I would like to share YKC Charging's journey toward cloud-native stability.

YKC Charging was established in 2016 with charging services and energy management at its core, and it now has nine lines of business. As of November 2022, its business covers 370 cities, with 7,400 charging pile operators and 310,000 charging terminals connected to the platform. It also cooperates with 640 charging pile companies. While keeping pace with rapid business growth and serving more customers, YKC Charging attaches great importance to the stability of its online services in order to improve users' charging experience in the new energy industry.

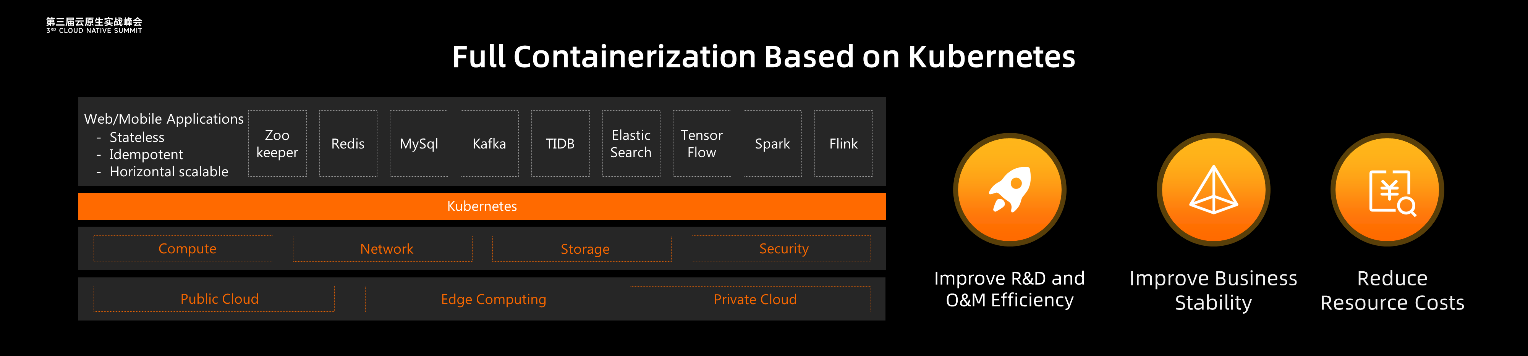

In 2019, YKC Charging set a technology roadmap of 100% containerization for its business systems to ensure stable business operation. Other container platforms were available at the time, but Kubernetes had clearly become the technology trend and the infrastructure foundation of the cloud-native era. Kubernetes brings value on multiple fronts, including higher R&D and O&M efficiency and lower resource costs.

Given the nature of its business, YKC Charging cares most about how Kubernetes improves business stability. In a large distributed IT architecture, any link can fail. For example, ECS instances on the cloud are not guaranteed to run normally 100% of the time, and any application process may go down after running for a long time. We need to make sure that the business is not affected at all when these failures occur. The Kubernetes scheduling and health check mechanisms give the IT architecture self-healing capabilities: when a fault occurs, business pods are automatically rescheduled to healthy nodes without human intervention. With Kubernetes clusters deployed across zones and a same-zone-first microservice access policy, business systems can keep serving even in the rare event of a data center-level failure.
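
The same-zone-first access policy can be illustrated as a client-side selection rule: prefer healthy endpoints in the caller's own zone and fall back to other zones only when none are left. This is a minimal sketch under assumptions, not how ACK or the microservice framework actually implements the policy; the `Endpoint` type, zone names, and addresses are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    address: str        # hypothetical pod address
    zone: str           # hypothetical zone name
    healthy: bool = True


def pick_endpoint(endpoints, local_zone, rng=random):
    """Prefer healthy endpoints in the caller's zone; fall back to any healthy zone."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        raise RuntimeError("no healthy endpoints available")
    same_zone = [e for e in healthy if e.zone == local_zone]
    # An empty same-zone list means the local zone is down, so use the other zones.
    candidates = same_zone or healthy
    return rng.choice(candidates)
```

If every endpoint in `cn-a` is marked unhealthy, callers in `cn-a` transparently fail over to `cn-b`, which is the behavior the cross-zone deployment relies on.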

We have proven this in practice. During peak hours, Kubernetes auto scaling scales out automatically based on business workloads, covering both the horizontal scaling of workloads and the scaling of compute resources. Before fully adopting Kubernetes, we had considered a similar solution but never used it at scale for fear of affecting business stability. Before full containerization, our team also built its own Kubernetes cluster to verify the capabilities of Kubernetes, and in practice we ran into many challenges.
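
Horizontal workload scaling in Kubernetes is typically driven by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that rule; it assumes a single metric, ignores stabilization windows, pod readiness, and missing metrics, and the min/max bounds are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=100, tolerance=0.1):
    """Simplified HPA calculation for a single metric."""
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target scale out to `ceil(4 × 1.5) = 6`, while 63% against 60% stays at 4 because the ratio is inside the tolerance band.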

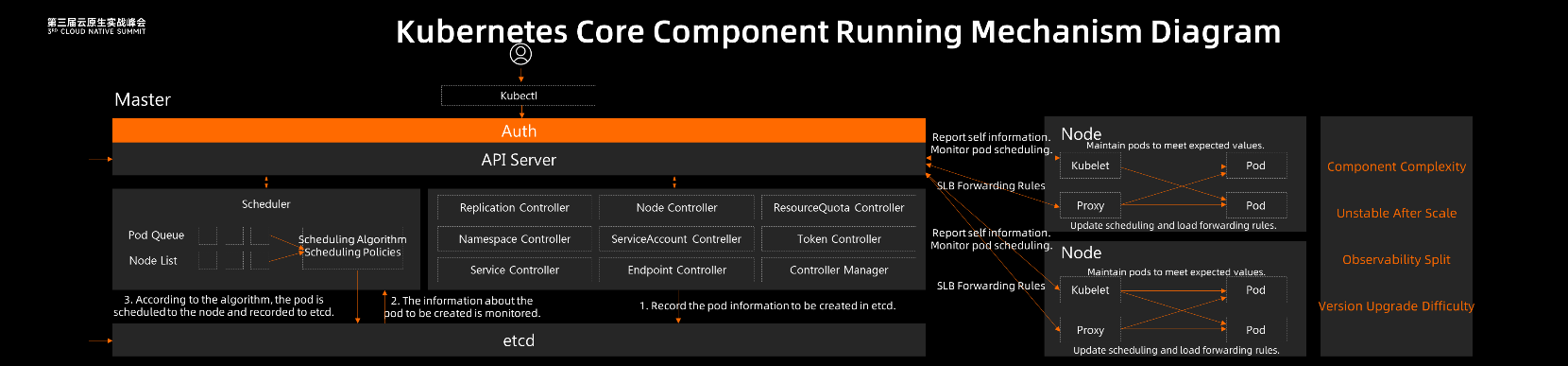

Kubernetes is a large and complex distributed system with more than ten core components, and integrating those components with the IaaS layer on the cloud makes it even more complicated. Troubleshooting network plug-ins alone takes considerable effort. We also lacked professional technical support to quickly resolve problems such as node exceptions, pod exceptions, and network disconnections. In particular, we were helpless whenever we hit a bug in Kubernetes itself, and open-source Kubernetes has many bugs: the community currently has more than 1,600 open issues. As the cluster grows, each system component becomes more likely to run into performance problems. An etcd performance bottleneck, for example, triggers a chain of problems across the cluster, and on the business side it shows up as users being unable to charge.

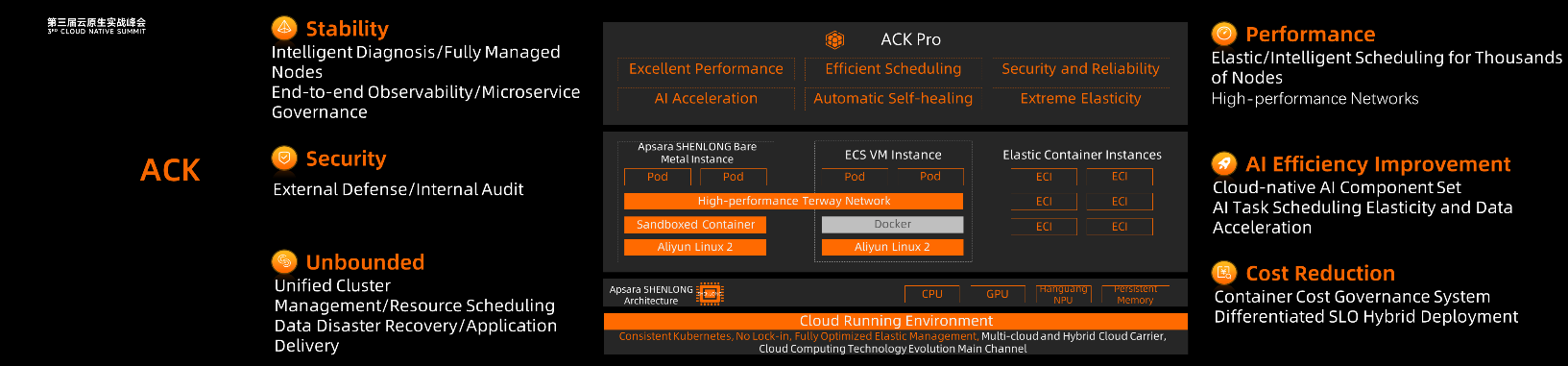

Upgrading Kubernetes versions and components is another challenge. The community releases new versions quickly, and every upgrade risks affecting the business. At the same time, we worried that staying on an old version for too long would expose us to more serious problems from unpatched vulnerabilities. Therefore, apart from keeping a self-built Kubernetes cluster in the test environment for learning and research, we migrated all production systems to ACK (Alibaba Cloud Container Service for Kubernetes). Based on our business scenarios and technical architecture, we see the value of ACK in the following areas.

First, its APIs and standards are fully compatible with open-source Kubernetes, which keeps our technical architecture within the open-source technical system. Second, it is deeply integrated with cloud products such as compute, storage, and networking, and these integrations follow Kubernetes standards. Networking matters most here: the container network and the virtual machine network are connected within the VPC. This allowed us to migrate applications from ECS to Kubernetes gradually, and the whole migration went smoothly. Because the networks were connected, we could verify applications one by one while keeping the original architecture; only the underlying runtime of each application moved from virtual machines to containers. This protected our business stability throughout the container migration.

Finally, ACK has done a lot of work on cluster stability, such as managed master nodes, intelligent inspection and diagnosis, and cross-zone high availability. These capabilities have been proven at scale during Alibaba's Double 11 shopping festival and by large Alibaba Cloud customers. For YKC Charging, the most important point is that upgrading clusters and components is easier: it takes one click in the console, and the business is unaffected. This reduces maintenance costs and safeguards business stability.

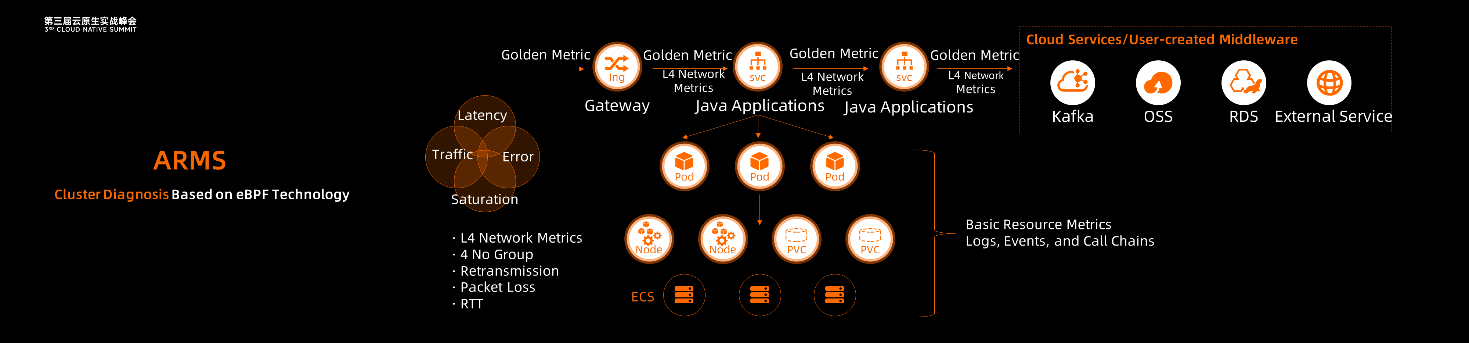

ACK also integrates a useful cluster diagnostic tool built on eBPF. It works out of the box and can be enabled with one click. The tool presents an application topology from a global perspective, following a whole-to-individual principle. Starting from the global view and the three golden signals (request count, error count, and latency), we can spot an abnormal individual, such as a specific application service. Once we locate that application, we can pull its logs and run correlation analysis. Because the hierarchical drill-down is displayed on a single page, we do not have to jump back and forth between multiple systems, which makes it quick to locate the problematic service call in the topology. This data is exported to the managed Prometheus service on the cloud. In the cloud-native era, Prometheus holds the same position in observability that Kubernetes holds as the cloud-native foundation.
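
The whole-to-individual drill-down can be sketched as two steps: aggregate the three golden signals per service, then flag the services that cross a threshold for closer inspection. This is a simplified illustration; the `(service, is_error, latency_ms)` record format, the service names, and the thresholds are hypothetical, and the actual tool collects its data through eBPF rather than from records like these.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class GoldenSignals:
    requests: int = 0
    errors: int = 0
    total_latency_ms: float = 0.0

    @property
    def error_rate(self):
        return self.errors / self.requests if self.requests else 0.0

    @property
    def avg_latency_ms(self):
        return self.total_latency_ms / self.requests if self.requests else 0.0


def aggregate(records):
    """Roll per-request records (service, is_error, latency_ms) up to per-service signals."""
    stats = defaultdict(GoldenSignals)
    for service, is_error, latency_ms in records:
        s = stats[service]
        s.requests += 1
        s.errors += int(is_error)
        s.total_latency_ms += latency_ms
    return dict(stats)


def find_abnormal(stats, max_error_rate=0.05, max_avg_latency_ms=500.0):
    """Return the services worth drilling into, sorted by name."""
    return sorted(name for name, s in stats.items()
                  if s.error_rate > max_error_rate
                  or s.avg_latency_ms > max_avg_latency_ms)
```

The flagged names are the entry points for the next level of the drill-down, where logs and call details for that one service are examined.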

Using Prometheus and Grafana on the cloud, we have combined eBPF metrics with cloud product metrics to build a business monitoring dashboard that shows the current state of the business. For key interfaces, we set alert rules based on service quality and notify the O&M team through the ARMS alerting platform. This safeguards the SLA of core services and significantly improves our business stability.
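
Service-quality alerts usually fire only after a condition has held for a while, similar to the pending and firing states of a Prometheus alerting rule with a `for` clause. The sketch below models that behavior by counting consecutive evaluation cycles instead of elapsed time; it is a simplification with hypothetical thresholds and does not reflect the ARMS alerting API.

```python
from dataclasses import dataclass, field


@dataclass
class ErrorRateAlert:
    threshold: float        # e.g. 0.01 alerts when more than 1% of requests fail
    for_evaluations: int    # consecutive breaching cycles required before firing
    _breaches: int = field(default=0, init=False, repr=False)

    def evaluate(self, requests, errors):
        """Evaluate one cycle and return 'inactive', 'pending', or 'firing'."""
        error_rate = errors / requests if requests else 0.0
        # Any healthy cycle resets the streak, so short blips never reach 'firing'.
        self._breaches = self._breaches + 1 if error_rate > self.threshold else 0
        if self._breaches == 0:
            return "inactive"
        return "firing" if self._breaches >= self.for_evaluations else "pending"
```

With `threshold=0.01` and `for_evaluations=3`, three consecutive cycles at a 5% error rate move the alert from pending to firing, and a single clean cycle clears it.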

Our team has also put significant effort into microservice stability. In our experience, more than 80% of online service failures are related to version releases, mainly because applications could not go online and offline gracefully and we lacked fine-grained canary (grayscale) release policies.

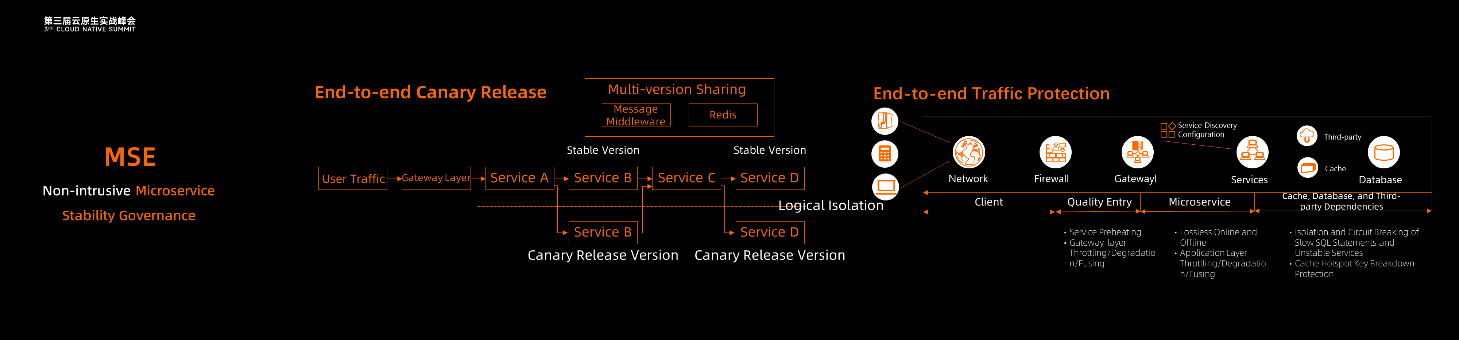

With the help of Alibaba Cloud MSE (Microservices Engine), we have made a series of improvements to the stability of our microservice system. The microservice governance capabilities of MSE are implemented through Java agent bytecode enhancement, which works well with the Spring Cloud microservice framework we use. These enhancements require no code changes, so building these capabilities is straightforward.

The first problem to solve was lossless online and offline. Anyone who has run microservices at scale knows that losing requests while applications go online or offline is a long-standing problem. The MSE microservice governance agent does two things: it dynamically detects when application instances go online or offline, and it dynamically adjusts the load-balancing strategy on the service consumer side. Together, these make lossless online and offline easy to achieve. Now, end users notice nothing, whether we are scaling an application or releasing a new version.
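
The consumer-side half of this approach can be sketched as a weighted load balancer that stops routing to instances that have announced they are going offline and ramps traffic up gradually for newly started instances. The article does not describe MSE's actual algorithm; the linear warm-up below is an assumption modeled on a common technique (Apache Dubbo uses a similar warm-up weight), and the `Instance` fields and 120-second warm-up window are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Instance:
    address: str
    started_at: float          # start time in seconds
    base_weight: int = 100
    draining: bool = False     # set when the instance announces it is going offline


def effective_weight(inst, now, warmup_s=120.0):
    """Weight used for load balancing at time `now`."""
    if inst.draining:
        return 0               # offline: receive no new requests
    uptime = now - inst.started_at
    if uptime >= warmup_s:
        return inst.base_weight
    # Ramp traffic linearly during warm-up, but never starve the instance entirely.
    return max(1, int(inst.base_weight * uptime / warmup_s))


def choose(instances, now, rng=random, warmup_s=120.0):
    """Weighted random choice that skips draining instances."""
    weights = [effective_weight(i, now, warmup_s) for i in instances]
    if sum(weights) == 0:
        raise RuntimeError("no instance can accept traffic")
    return rng.choices(instances, weights=weights, k=1)[0]
```

An instance that started 10 seconds ago gets roughly 8% of a fully warmed instance's share, so cold caches and lazy initialization do not turn into user-visible errors, and a draining instance receives nothing while it finishes in-flight work.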

For end-to-end canary release, MSE provides a complete solution. Only one production environment is required. On top of it, multiple logical canary versions can be defined based on the swimlane model, and routing rules direct specific traffic into the corresponding swimlane. As a result, when a new version is released, the number of requests it affects can be strictly controlled. After sufficient verification, we decide whether to expand the new version's coverage or roll back to the previous version, which minimizes the impact of releases on normal business.
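
Swimlane routing reduces to two steps: match the request against the routing rules to pick a lane, then route to that lane's instances, falling back to the baseline lane when a service has no canary instances. The sketch below assumes the lane is selected by a request header; the `x-user-id` header, lane names, and addresses are hypothetical, and in a real end-to-end setup the lane tag would also be propagated to every downstream call.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RouteRule:
    lane: str            # target swimlane, e.g. a canary version
    header: str          # request header to match (hypothetical)
    values: frozenset    # header values that belong to this lane


@dataclass
class Swimlanes:
    rules: list[RouteRule]
    instances: dict[str, list[str]] = field(default_factory=dict)  # lane -> addresses
    base_lane: str = "base"

    def resolve_lane(self, headers: dict[str, str]) -> str:
        # First matching rule wins; unmatched traffic stays on the baseline lane.
        for rule in self.rules:
            if headers.get(rule.header) in rule.values:
                return rule.lane
        return self.base_lane

    def route(self, headers: dict[str, str]) -> list[str]:
        lane = self.resolve_lane(headers)
        # Fall back to the baseline if this service has no instances in the chosen lane.
        return self.instances.get(lane) or self.instances[self.base_lane]
```

Because only requests that match a rule enter the canary lane, widening a release is a matter of adding values to the rule rather than redeploying, and rolling back is removing the rule.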

End-to-end canary release places higher demands on the entire R&D and O&M process. So far we have rolled it out on only one business line, but the benefits are already clear: production incidents caused by application changes have dropped by 70% or more. We will keep working to extend end-to-end canary release across the entire company.

In addition, MSE-based end-to-end traffic protection is another important means of improving business stability. From gateways to microservice applications to third-party dependencies, we have configured traffic protection rules at every layer so that no system is overwhelmed by user traffic during peak hours.
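
A traffic protection rule at any of these layers typically caps the request rate for a resource. The token bucket below is one common way to express such a rule; it is a generic sketch, not MSE's implementation, and the rate and capacity values are hypothetical. The injectable `clock` exists only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def try_acquire(self, n=1):
        now = self.clock()
        # Refill based on elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False  # caller should reject, queue, or degrade this request
```

In a layered setup, each protected resource (a gateway route, a service method, a third-party API) would get its own bucket sized to what the layer behind it can safely absorb.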

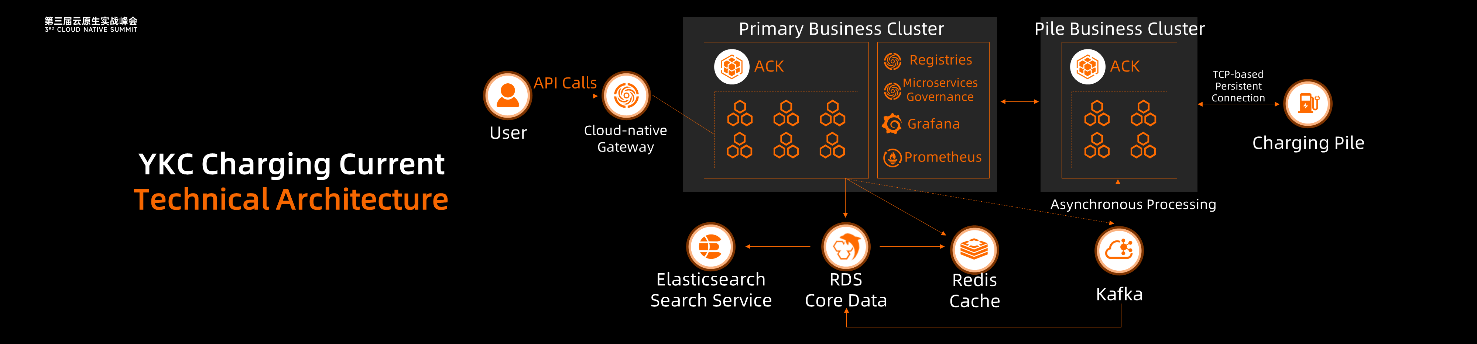

This is the current technical architecture of YKC Charging. We have built a dedicated cluster to keep charging pile connections stable, and the connection services provide their basic capabilities over persistent TCP connections with bidirectional communication. With comprehensive containerization and stability work, the number of charging piles connected to YKC Charging has grown from 200,000 to 300,000, and the average requirement iteration cycle has been cut from seven person-days to four person-days, which speeds up business iteration.
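
The article does not describe the charging pile protocol, but persistent TCP connections are commonly paired with application-level heartbeats so the server can detect and clean up dead connections. The sketch below assumes each pile sends a periodic heartbeat; the `HeartbeatRegistry` class, pile IDs, and 90-second timeout are hypothetical.

```python
import time


class HeartbeatRegistry:
    """Track the last heartbeat of each charging pile connection."""

    def __init__(self, timeout_s=90.0, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_seen = {}   # pile ID -> time of last heartbeat

    def heartbeat(self, pile_id):
        self.last_seen[pile_id] = self.clock()

    def expired(self):
        """Return and forget piles whose last heartbeat is older than the timeout."""
        now = self.clock()
        dead = sorted(p for p, ts in self.last_seen.items() if now - ts > self.timeout_s)
        for p in dead:
            del self.last_seen[p]
        return dead
```

A periodic sweep over `expired()` lets the server close stale sockets and mark those piles offline instead of waiting for TCP itself to notice a silently dropped connection.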

In addition to expanding end-to-end canary release coverage, we have two major plans for the future. First, we will improve service quality with an edge container solution, which suits YKC Charging's business characteristics: in extreme scenarios such as network interruptions, edge nodes can keep some services running normally so that users can still charge. Second, we will strengthen end-to-end security governance, including attack prevention, login authentication, two-way (mutual) TLS for the internal services behind the gateways, and permission management.
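
Two-way (mutual) TLS means the server also verifies a certificate presented by the client. With Python's standard `ssl` module, the server-side setup looks roughly like the sketch below; the certificate file paths are hypothetical, and the article does not say where TLS is terminated in YKC Charging's gateway architecture.

```python
import ssl


def mutual_tls_server_context(cert_file=None, key_file=None, client_ca_file=None):
    """Build a server-side TLS context that rejects clients without a trusted certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Requiring a client certificate is what makes the TLS "two-way".
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own certificate and private key, e.g. "internal-svc.crt".
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # The CA that signs certificates for trusted callers such as the gateway.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx
```

A listening socket can then be wrapped with `ctx.wrap_socket(sock, server_side=True)`; handshakes from clients that do not present a certificate signed by the configured CA fail before any request is processed.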

We hope Alibaba Cloud's solutions can help us realize these two plans more quickly. We also hope other technical teams in the new energy industry can work with us to explore technical paths for cloud-native stability.
