By Leijuan

As a Java programmer, you may already be familiar with microkernel architecture design. But how did the idea of the microkernel come about, and what role does a microkernel play in operating system kernel design? This article explains microkernel architecture from the perspective of plug-in architecture, compares it with microservice architecture, and shares what microservice design can learn from it.

Microkernel architecture design is popular now. The name suggests a connection to the operating system kernel, which seems to have little to do with Java programmers. However, if I told you that a microkernel is a plug-in architecture, you might be puzzled: "Why explain plug-in architecture to a Java programmer? I use plug-in architectures every day, such as Eclipse, IntelliJ IDEA, Open Service Gateway Initiative (OSGi), Spring Plugin, and the Service Provider Interface (SPI). Some of my projects are designed with plug-ins to customize process control." Still, even for technology we use every day, explaining it more clearly, finding its problems, and optimizing it can help with future architecture design, so that more people benefit in their daily design and development work.

The microkernel design is a plug-in system. Operating system kernels came first, so the plug-in approach was applied to kernel design first, which is why it is called microkernel design.

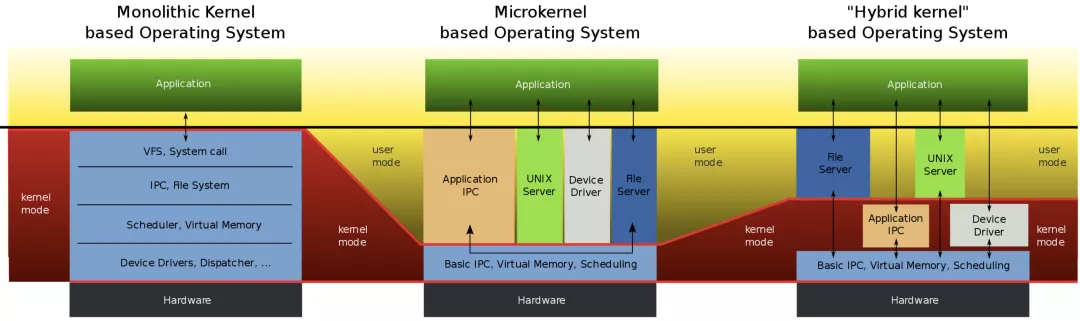

A microkernel is a kernel that implements only the functions a kernel must implement, including clock interrupts, process creation and destruction, process scheduling, and inter-process communication. Other functions, such as file systems, memory management, and device drivers, run in user space as system processes. The microkernel is defined in contrast to the macro kernel (also called a monolithic kernel). Linux is a typical macro kernel: in addition to clock interrupts, process creation and destruction, process scheduling, and inter-process communication, the kernel itself also handles file systems, memory management, input and output, and device driver management.

In other words, compared with the microkernel, the macro kernel is a low-level program with many functions. It does a lot of things, and it is not pluggable. Modifying even a small function can involve recompiling the whole program, and a small bug in one function may cause problems for the entire kernel. This is why many people call Linux a monolithic operating system. The microkernel is responsible only for the core functions: process management, scheduling, and inter-process communication. Other functions are added as plug-ins that run as independent user-space processes, and together they provide everything the operating system needs. If a basic function fails, the failure is confined to its own process. It does not affect other processes or make the kernel unavailable. At most, one function of the system is unavailable.

The microkernel is a piece of code running at the highest privilege level, so it can perform functions that user-space programs cannot. The microkernel coordinates system processes through inter-process communication, which requires system calls. System calls involve switching stacks and saving and restoring process context, which takes time. The macro kernel, by contrast, coordinates its modules through simple function calls, so in theory it is more efficient than the microkernel. The same trade-off appears in microservice architecture design. After we split a monolithic application into multiple small applications, the system design becomes more complicated. What used to be function calls inside one application now involves network communication and timeouts, and response times grow longer.

By now you should have a general understanding of the microkernel and the macro kernel, and each has its merits. However, one of the biggest problems with the macro kernel is the cost of customization and maintenance. With more and more mobile and IoT devices, adapting a huge and complicated kernel to a specific device can be very difficult. Consider the effort involved in adapting the Linux kernel into the Android kernel.

Therefore, we need a kernel architecture that is easy to customize, very small, and able to hot-replace or update functions online. The microkernel was proposed to meet this core requirement. However, the microkernel has a problem with runtime efficiency, so the hybrid kernel emerged as a middle ground between the microkernel and the macro kernel. It aims to keep the flexibility of the microkernel while matching macro kernel performance on critical paths. In theory, the microkernel design is less efficient. However, with improvements in chip design and hardware performance, the gap has narrowed significantly and is no longer the most critical issue.

The kernel design has three forms shown on the chart below:

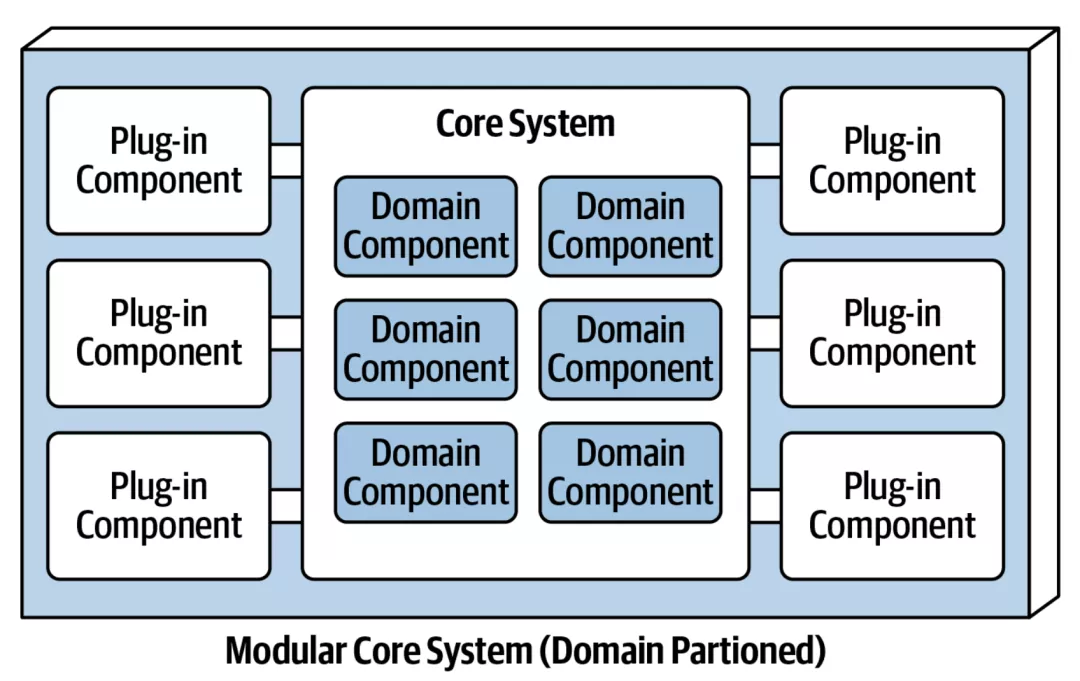

The plug-in architecture is simple and has two core components: the core system and the plug-in component. The core system is responsible for managing various plug-ins. The core system also provides some important functions, such as plug-in registration management, plug-in lifecycle management, communication between plug-ins, and dynamic plug-in replacement. The overall architecture is shown on the chart below:

The plug-in architecture is very helpful for the microservice architecture design. Considering isolation, plug-ins may run as independent processes. If these processes are extended to the network and distributed on many servers, this is the prototype of microservices architecture.
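The core system's duties described above (registration, lifecycle management, communication between plug-ins, and dynamic replacement) can be sketched in a few lines of Java. This is only a minimal illustration under simplifying assumptions, not the API of any real framework: `Plugin`, `CoreSystem`, and `PrefixPlugin` are hypothetical names, and everything runs inside one process.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical plug-in contract: lifecycle hooks plus one message handler. */
interface Plugin {
    String name();
    void start();
    void stop();
    String handle(String message);
}

/** Hypothetical core system: it only registers, replaces, and routes to plug-ins. */
class CoreSystem {
    private final Map<String, Plugin> plugins = new ConcurrentHashMap<>();

    /** Registers a plug-in, replacing any plug-in already registered under the same name. */
    void register(Plugin plugin) {
        plugin.start();                                   // lifecycle: bring the new instance up first
        Plugin previous = plugins.put(plugin.name(), plugin);
        if (previous != null) {
            previous.stop();                              // lifecycle: retire the replaced instance
        }
    }

    void unregister(String name) {
        Plugin removed = plugins.remove(name);
        if (removed != null) {
            removed.stop();
        }
    }

    /** Routes a message to the named plug-in; empty if no such plug-in is registered. */
    Optional<String> send(String target, String message) {
        return Optional.ofNullable(plugins.get(target)).map(p -> p.handle(message));
    }
}

/** Trivial plug-in used only for this demo. */
class PrefixPlugin implements Plugin {
    private final String name;
    private final String prefix;

    PrefixPlugin(String name, String prefix) {
        this.name = name;
        this.prefix = prefix;
    }

    public String name() { return name; }
    public void start() { }
    public void stop() { }
    public String handle(String message) { return prefix + message; }
}

class PluginDemo {
    public static void main(String[] args) {
        CoreSystem core = new CoreSystem();
        core.register(new PrefixPlugin("greeter", "hello, "));
        System.out.println(core.send("greeter", "world").orElse("unavailable")); // hello, world

        core.register(new PrefixPlugin("greeter", "hi, "));                      // dynamic replacement
        System.out.println(core.send("greeter", "world").orElse("unavailable")); // hi, world
    }
}
```

Callers address a plug-in by name only, so swapping the implementation behind `"greeter"` requires no change on the caller's side.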

In the microservice architecture design scenario, we rename the plug-in component to service, which matches what a service is in microkernel design. In this respect, microservice architecture is similar to the microkernel: both involve service registration, management, and inter-service communication. Let's see how the microkernel solves communication between services. The following passage is taken from Wikipedia:

"Because all services run in different address spaces, function calls cannot be directly performed in the microkernel architecture like the macro kernel. Under the microkernel architecture, an inter-process communication mechanism should be created, through which service processes can exchange messages, call each other's services, and complete synchronization. Using a master-slave architecture, it has a special advantage in a distributed system, because the remote system and the local process can use the same set of inter-process communication mechanisms."

The microkernel adopts a message-based inter-process communication mechanism. The message module is as simple as it gets, with just two interfaces: send and receive. A service process waits to receive a message, processes it, and then sends a message in response. As you may have noticed, this is asynchronous. Back to plug-in architecture design: the plug-in component design includes an interaction specification, which is its interface for communicating with the outside world. If that interface is based on message communication, with only send and receive operations, the design stays simple.
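A message module with only a send and a receive operation might look like the following sketch. It is an in-process stand-in for real inter-process communication, built on a `BlockingQueue`; `Message`, `Mailbox`, `QueueMailbox`, and `UppercaseService` are hypothetical names chosen for illustration.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

/** Hypothetical message: sender name plus a text payload. */
record Message(String from, String payload) { }

/** The whole message module: one operation to send, one to receive. */
interface Mailbox {
    void send(Message message);   // returns immediately
    Message receive();            // blocks until a message arrives
}

class QueueMailbox implements Mailbox {
    private final BlockingQueue<Message> queue = new LinkedBlockingQueue<>();

    public void send(Message message) {
        queue.add(message);       // the queue is unbounded, so add never fails on capacity
    }

    public Message receive() {
        try {
            return queue.take();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for a message", e);
        }
    }
}

/** A service process: wait for a message, process it, send a message back. */
class UppercaseService implements Runnable {
    private final Mailbox inbox;
    private final Mailbox outbox;

    UppercaseService(Mailbox inbox, Mailbox outbox) {
        this.inbox = inbox;
        this.outbox = outbox;
    }

    public void run() {
        try {
            while (true) {
                Message request = inbox.receive();
                outbox.send(new Message("uppercase", request.payload().toUpperCase()));
            }
        } catch (IllegalStateException stopped) {
            // interrupted: the service is shutting down
        }
    }
}

class MailboxDemo {
    public static void main(String[] args) {
        Mailbox inbox = new QueueMailbox();
        Mailbox outbox = new QueueMailbox();
        Thread worker = new Thread(new UppercaseService(inbox, outbox));
        worker.setDaemon(true);
        worker.start();

        inbox.send(new Message("client", "ping"));       // the sender does not wait for processing
        System.out.println(outbox.receive().payload());  // PING
        worker.interrupt();
    }
}
```

The sender returns as soon as the message is queued, which is the asynchronous behavior the paragraph above describes.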

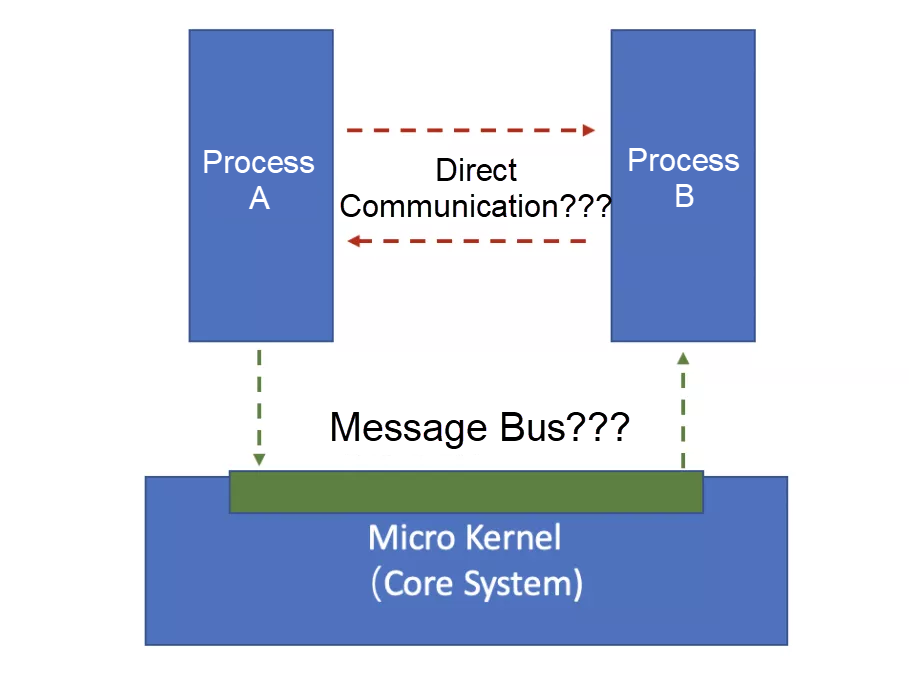

However, there is another issue with inter-process communication. You might think the problem is simply how two processes send messages to each other. The bigger question is whether a third party is involved in the communication. This is shown on the chart below:

In operating system kernel design, messages must be forwarded through the kernel, which is what we know as the bus architecture. The kernel coordinates communication between processes. If process A sent messages directly to process B, it would have to know the corresponding memory address. Services in the microkernel can be replaced at any time, so if a service became unavailable or was replaced, every process communicating with it would have to be notified. Isn't this too complicated? As mentioned earlier, there are only send and receive interfaces, and no interfaces for offline or unavailability notifications. Therefore, in the microkernel design, a process sends its messages to the kernel, and the kernel delivers them to the target process. This is a bus design. Many applications use the same mechanism, an event bus, to decouple plug-in components internally.
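As a rough illustration of how an in-application event bus decouples components, consider the sketch below. `EventBus` here is a hypothetical class written for this article, not the API of a particular library. Publishers know only a topic name, so a subscriber can be removed or replaced without notifying anyone.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

/** Hypothetical event bus: components talk to the bus, never to each other. */
class EventBus {
    private final Map<String, List<Consumer<String>>> subscribers = new ConcurrentHashMap<>();

    /** Subscribes a handler to a topic and returns a handle that cancels the subscription. */
    Runnable subscribe(String topic, Consumer<String> handler) {
        List<Consumer<String>> handlers =
                subscribers.computeIfAbsent(topic, t -> new CopyOnWriteArrayList<>());
        handlers.add(handler);
        return () -> handlers.remove(handler);
    }

    /** Delivers the event to the current subscribers and returns how many received it. */
    int publish(String topic, String event) {
        int delivered = 0;
        for (Consumer<String> handler : subscribers.getOrDefault(topic, List.of())) {
            handler.accept(event);
            delivered++;
        }
        return delivered;
    }
}

class EventBusDemo {
    public static void main(String[] args) {
        EventBus bus = new EventBus();
        List<String> log = new ArrayList<>();

        Runnable cancel = bus.subscribe("orders", e -> log.add("v1 handled " + e));
        bus.publish("orders", "created");

        // Replace the subscriber; the publisher's code does not change.
        cancel.run();
        bus.subscribe("orders", e -> log.add("v2 handled " + e));
        bus.publish("orders", "paid");

        log.forEach(System.out::println);
        // v1 handled created
        // v2 handled paid
    }
}
```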

Why am I explaining this? Because it is critical: distributed inter-process communication is the core of microservices. In the service-to-service communication we are used to, service A listens on a port, service B establishes a connection to service A, and the two communicate directly. This differs from the bus design of the microkernel, where the kernel receives and forwards messages. For example, with HTTP or the High-speed Service Framework (HSF) protocol, the "kernel" only informs the two parties of each other's addresses, and they then communicate directly, without any further involvement from the kernel or a bus. This is the traditional service discovery mechanism.
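The traditional service discovery flow can be sketched as a registry that only hands out addresses. `ServiceRegistry` is a hypothetical in-memory class, and the address is made up. Real registries add health checks, leases, and change notifications, which is exactly the extra machinery the bus design avoids.

```java
import java.net.InetSocketAddress;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

/** Hypothetical registry: it knows where services are but never carries their traffic. */
class ServiceRegistry {
    private final Map<String, List<InetSocketAddress>> instances = new ConcurrentHashMap<>();

    void register(String service, InetSocketAddress address) {
        instances.computeIfAbsent(service, s -> new CopyOnWriteArrayList<>()).add(address);
    }

    void deregister(String service, InetSocketAddress address) {
        List<InetSocketAddress> addresses = instances.get(service);
        if (addresses != null) {
            addresses.remove(address);
        }
    }

    /** Returns a snapshot of the known addresses; callers then connect to one of them directly. */
    List<InetSocketAddress> lookup(String service) {
        return List.copyOf(instances.getOrDefault(service, List.of()));
    }
}

class DiscoveryDemo {
    public static void main(String[] args) {
        ServiceRegistry registry = new ServiceRegistry();
        // Service A announces the port it listens on (made-up address).
        registry.register("service-a", InetSocketAddress.createUnresolved("10.0.0.5", 8080));

        // Service B asks the registry once, then talks to service A without an intermediary.
        for (InetSocketAddress address : registry.lookup("service-a")) {
            System.out.println("service B connects directly to "
                    + address.getHostString() + ":" + address.getPort());
        }
    }
}
```

Because callers hold addresses themselves, every address change has to be propagated to them, which is the cost the following broker-based mode removes.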

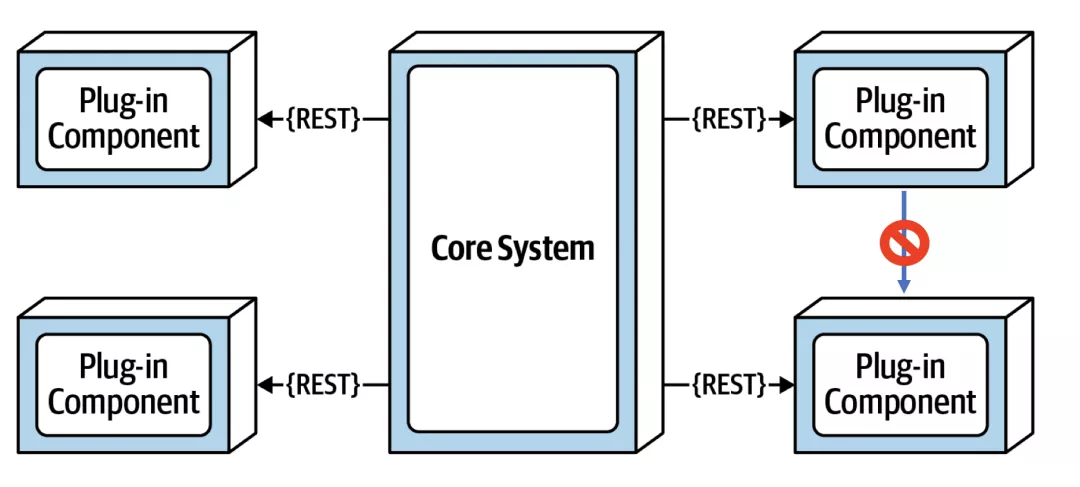

However, there is another mode, which is a completely transparent, plug-in-based communication mechanism, as shown on the chart below:

In this mode, plug-in components (the services in a microservices architecture) cannot communicate directly, and their messages are forwarded by the core system. This mode has the same advantages as the microkernel architecture. Plug-ins have no direct connections and are completely transparent to each other. For example, if service A goes offline or is replaced, there is no need to notify other services. When service A moves from data center 1 to data center 2, there is no need to notify other services either. Even if there is no direct network connectivity between service N and service A, the two services can still communicate through the core system.

There is a performance issue, of course. We all know that the shortest path between two points is a straight line, so why involve the core system? This is the same argument as the one between the microkernel and the macro kernel: function calls are very fast, while message communication between processes is much slower. However, the benefits of communicating through an intermediary are obvious. How can we improve the performance of this bus-based communication? We can use high-performance binary protocols instead of text-based protocols such as HTTP 1.1. We can use zero-copy mechanisms to forward network packets quickly. We can use better network hardware and protocols, such as Remote Direct Memory Access (RDMA) and the User Datagram Protocol (UDP)-based QUIC protocol. In summary, as with the microkernel, the performance of this kind of microservice communication can be improved. If the performance is still unacceptable, you can use a hybrid mode in critical scenarios and let some services communicate directly. However, this should be reserved for scenarios with high performance requirements.

In addition, the plug-in components in a plug-in architecture vary widely, and so do their communication patterns: the Remote Procedure Call (RPC) pattern, the publish/subscribe (pub/sub) pattern, the fire-and-forget pattern that needs no acknowledgment (ACK), such as Beacon APIs, and two-way communication patterns. You can choose different communication protocols for them, but there is a problem: the core system must understand a protocol before it can route its messages. The core system then needs a large number of adapters to parse these protocols. For example, Envoy includes various filters to support different protocols, such as HTTP, MySQL, and ZooKeeper. As a result, the core system becomes very complex and less stable.

Alternatively, you can choose one general protocol. The core system supports only this protocol, and plug-ins communicate with each other based on it. The core system is not responsible for communication between a service and external systems, such as databases or a GitHub integration. That is handled inside the service. Currently, a relatively general protocol is gRPC. For example, gRPC is used in Kubernetes, and the Distributed Application Runtime (Dapr) also uses gRPC for service integration. The communication model provided by gRPC meets the requirements of most communication scenarios. Another option is RSocket, which provides richer communication models and is also suitable for inter-service communication scenarios such as this core system. Compared with gRPC, RSocket can run on a variety of transport layers, such as TCP, User Datagram Protocol (UDP), WebSocket, and RDMA, whereas gRPC runs only on HTTP/2.

As mentioned earlier, it is better to let the core system of the plug-in architecture act as the router for message communication between services. This leads to a broker or agent mode, which will remind you of the service mesh. You can choose either the agent (sidecar) mode or the centralized broker mode. Both provide the same functions but handle messages differently.

In the agent mode, each agent uses the service registration and discovery mechanism to locate the agent of the other service, and the two agents then communicate with each other. This way, the cost of calls between services is reduced. In the centralized broker mode, however, all messages are sent to and received from the broker. This involves neither the service registration and discovery mechanism nor the push of service metadata. This is the bus architecture.

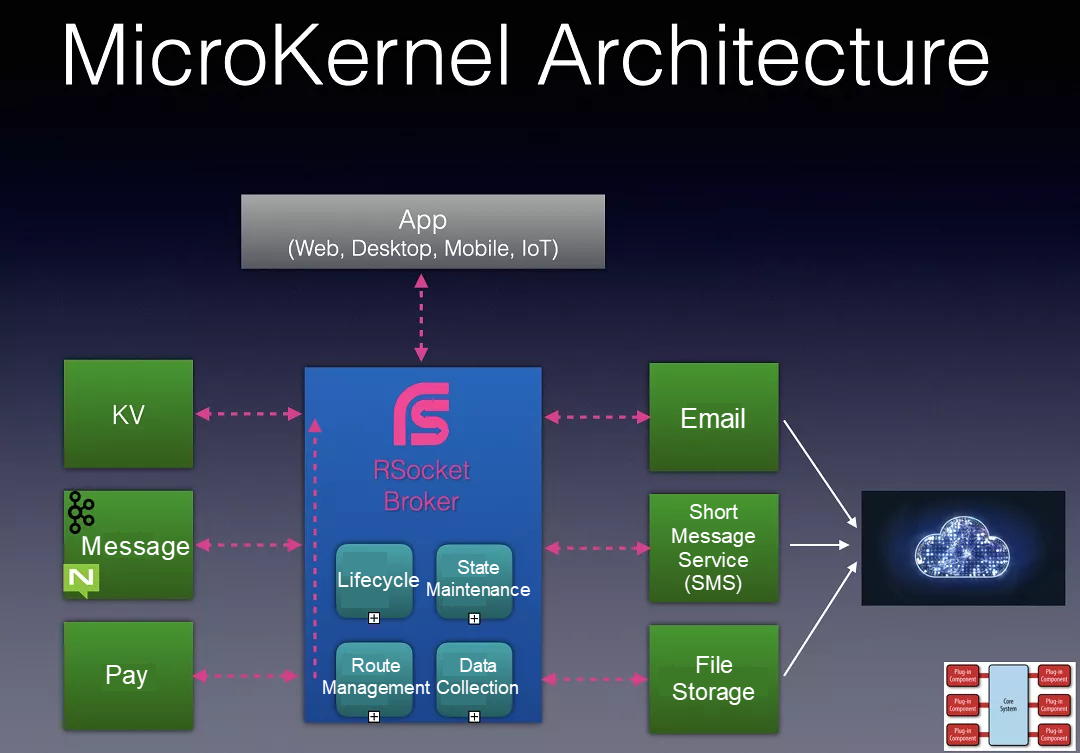

What I am working on now is a broker-based bus architecture design. It is built on the RSocket Broker and also follows the microkernel architecture design, although I may not be able to complete it. The core of the RSocket Broker is limited to managing registered services, managing routes, and collecting data, without adding too many other functions. As with the design concept of the core system, only necessary functions are added. If you want to extend the system with more functions, such as sending text messages, sending emails, or connecting to cloud storage services, you write a service and connect it to the broker. The service receives messages from the broker and sends messages back to the broker after processing. The overall architecture is shown on the chart below:

Many people may ask how to scale out dynamically when the load on a service instance is too high. The broker provides data such as the queries per second (QPS) of each service instance. To implement scaling, you only need to write a service that collects this data from the broker, analyzes it, and then calls the Kubernetes API to scale out. The broker is not loaded with such business functions. It adds only the necessary functions, just like the core system design.
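Such a scaling service could be sketched as follows. Everything here is an assumption made for illustration: `BrokerMetrics` stands for whatever data the broker exposes, `Scaler` stands for a wrapper around a Kubernetes API call (no real Kubernetes client is used), and the target of 200 QPS per instance is an arbitrary example value.

```java
/** Hypothetical view of the data the broker exposes about a service. */
interface BrokerMetrics {
    double totalQps(String service);
    int instanceCount(String service);
}

/** Hypothetical wrapper around whatever performs the scaling, for example a Kubernetes API client. */
interface Scaler {
    void scaleTo(String service, int replicas);
}

/** An ordinary service connected to the broker; the broker itself contains none of this logic. */
class AutoscalerService {
    private final BrokerMetrics metrics;
    private final Scaler scaler;
    private final double targetQpsPerInstance;
    private final int minReplicas;
    private final int maxReplicas;

    AutoscalerService(BrokerMetrics metrics, Scaler scaler,
                      double targetQpsPerInstance, int minReplicas, int maxReplicas) {
        this.metrics = metrics;
        this.scaler = scaler;
        this.targetQpsPerInstance = targetQpsPerInstance;
        this.minReplicas = minReplicas;
        this.maxReplicas = maxReplicas;
    }

    /** Replicas needed to keep each instance at or below the target QPS, clamped to [min, max]. */
    static int desiredReplicas(double totalQps, double targetQpsPerInstance, int min, int max) {
        int needed = (int) Math.ceil(totalQps / targetQpsPerInstance);
        return Math.max(min, Math.min(max, needed));
    }

    /** One pass: read metrics, decide, and request scaling only if the replica count changes. */
    int reconcile(String service) {
        int current = metrics.instanceCount(service);
        int desired = desiredReplicas(metrics.totalQps(service),
                targetQpsPerInstance, minReplicas, maxReplicas);
        if (desired != current) {
            scaler.scaleTo(service, desired);
        }
        return desired;
    }
}

class AutoscalerDemo {
    public static void main(String[] args) {
        BrokerMetrics metrics = new BrokerMetrics() {
            public double totalQps(String service) { return 950.0; }   // fixed sample value
            public int instanceCount(String service) { return 2; }
        };
        Scaler scaler = (service, replicas) ->
                System.out.println("scale " + service + " to " + replicas);

        // 950 QPS at a target of 200 QPS per instance needs ceil(4.75) = 5 replicas.
        new AutoscalerService(metrics, scaler, 200.0, 1, 10).reconcile("sms-service");
        // prints: scale sms-service to 5
    }
}
```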

As for the flexibility of the plug-in architecture: if the system has a key-value (KV) storage plug-in, you only need to follow its message format or communication interface to save KV data. You do not need to care whether the data ends up in Redis, Tair, or a KV service on the cloud. This provides a good foundation for service standardization and replaceability, and the resulting flexibility helps when migrating applications to the cloud or going cloud-native.
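In code, this replaceability comes down to programming against an interface. The sketch below uses a hypothetical `KeyValueStore` contract with an in-memory implementation. A Redis-, Tair-, or cloud-backed implementation would expose the same two methods, and `ProfileService` would not change.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical KV plug-in contract; callers depend only on this interface. */
interface KeyValueStore {
    void put(String key, String value);
    Optional<String> get(String key);
}

/** In-memory stand-in; other backends would implement the same interface. */
class InMemoryKeyValueStore implements KeyValueStore {
    private final Map<String, String> data = new ConcurrentHashMap<>();

    public void put(String key, String value) {
        data.put(key, value);
    }

    public Optional<String> get(String key) {
        return Optional.ofNullable(data.get(key));
    }
}

/** Business code that neither knows nor cares which store is plugged in. */
class ProfileService {
    private final KeyValueStore store;

    ProfileService(KeyValueStore store) {
        this.store = store;
    }

    void saveNickname(String userId, String nickname) {
        store.put("nickname:" + userId, nickname);
    }

    String nickname(String userId) {
        return store.get("nickname:" + userId).orElse("anonymous");
    }
}

class KeyValueDemo {
    public static void main(String[] args) {
        ProfileService profiles = new ProfileService(new InMemoryKeyValueStore());
        profiles.saveNickname("42", "alice");
        System.out.println(profiles.nickname("42")); // alice
        System.out.println(profiles.nickname("7"));  // anonymous
    }
}
```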

There are a lot of books about the introduction of the microkernel and the operating system. The two books below are helpful for general architecture design and the microservice scenario. I also referenced these two books to write this article.

The design of the microkernel architecture is a good reference for the design of microservices. However, microservices have a major problem of their own: the division of service boundaries. Operating systems have been developed for decades. They are very stable, and their division of functions is well established. The microservice architecture, by contrast, is designed to serve the business. Although the target business may have existed for hundreds of years, it has only recently become software-based, digitalized, and process-based. I think microservice architecture becomes more complex because of the complexity of real business and the various compromises it requires.
