By Gao Xing (nicknamed Kongya) at Alibaba.

Here's a curious question: why have chatbots become increasingly popular in recent years? The answer is fairly obvious. Chatbots can help businesses save valuable time, increase efficiency, provide around-the-clock service, and even reduce service errors.

But, digging a bit deeper, what principles and problems are involved in creating chatbots? And, on the flip side, how can we make chatbots more efficient so that they deliver even greater benefits to businesses?

Curious to know the answers? In this article, as a technical expert from Alibaba, I will try to address these questions by presenting the findings of a case study conducted at Alibaba.

The content of this article is based on a paper presented at ACL 2019 in Florence, Italy.

But, before we get too deep into things, let's cover some of the basic concepts and general knowledge.

In terms of the overall solutions they offer, chatbots can be classified into two subtypes. There are taskbots, which are used for specific tasks such as booking flight tickets or checking the weather forecast. And then there are QAbots, whose sole job is to answer questions based on their knowledge bases.

Typically, the chatbots used in customer service scenarios are QAbots. There are three major types of QAbots, classified by the format of their knowledge bases. First, there are doc-QAbots, whose knowledge is based on one or more documents. Second, there are kg-QAbots, which take their knowledge from knowledge graphs. Third, there are FAQbots, also known as faq-QAbots, whose knowledge comes from question-answer pairs of frequently asked questions (FAQ).

In this article, we focus on FAQbots, which are easier to maintain and are one of the most essential parts of the chatbot solutions used in customer service scenarios today.

An FAQbot matches a query to a question-answer pair through a process involving text classification and text matching. Text classification is suitable for FAQ that are relatively stable. Text matching, on the other hand, is better suited to long-tail FAQ or to FAQ that change over time.
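To make the matching setup concrete, here is a minimal sketch of how an FAQbot might pick an answer by text matching. It assumes a toy, hypothetical FAQ list and uses token-level Jaccard similarity purely as a stand-in for a learned matching model; the names `FAQ`, `jaccard`, and `match_faq` are illustrative, not part of AlimeBot.

```python
import re

# Hypothetical FAQ knowledge base: (stored question, answer) pairs.
FAQ = [
    ("how long does shipping take", "Orders usually ship within 48 hours."),
    ("can i return an item", "Returns are accepted within 7 days of delivery."),
    ("do you offer gift wrapping", "Yes, gift wrapping is available at checkout."),
]


def tokenize(text):
    """Lower-case the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Token-set Jaccard similarity; a stand-in for a learned matching score."""
    ta, tb = tokenize(a), tokenize(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def match_faq(query, faq=FAQ, threshold=0.2):
    """Score the query against every stored question and return the answer
    of the best match, or None if no score reaches the threshold."""
    best_score, best_answer = 0.0, None
    for question, answer in faq:
        score = jaccard(query, question)
        if score > best_score:
            best_score, best_answer = score, answer
    return best_answer if best_score >= threshold else None


print(match_faq("How long will shipping take?"))  # Orders usually ship within 48 hours.
```

Because the matcher only compares a query with stored questions, adding or editing an FAQ entry changes the list but not the model, which is one reason matching suits long-tail or frequently changing FAQ better than a fixed set of classification labels.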

Alibaba's AlimeBot is a smart customer service solution for merchants on Alibaba's e-commerce platforms. Its basic function is to answer user questions based on FAQ. We have conducted a great deal of research and experimentation on both text classification and text matching; this article covers that research and concentrates on the basic model we use at Alibaba for text matching.

Text matching is an important research field in natural language processing and has a long history. It is used in many natural language processing tasks such as natural language inference, paraphrase identification, and answer selection.

Before exploring text matching, we need to answer a few key questions. Which matching models currently perform best? How do these models differ from one another? What problems do they have? By analyzing the models on the Stanford Natural Language Inference (SNLI) leaderboard, we reached the following conclusions:

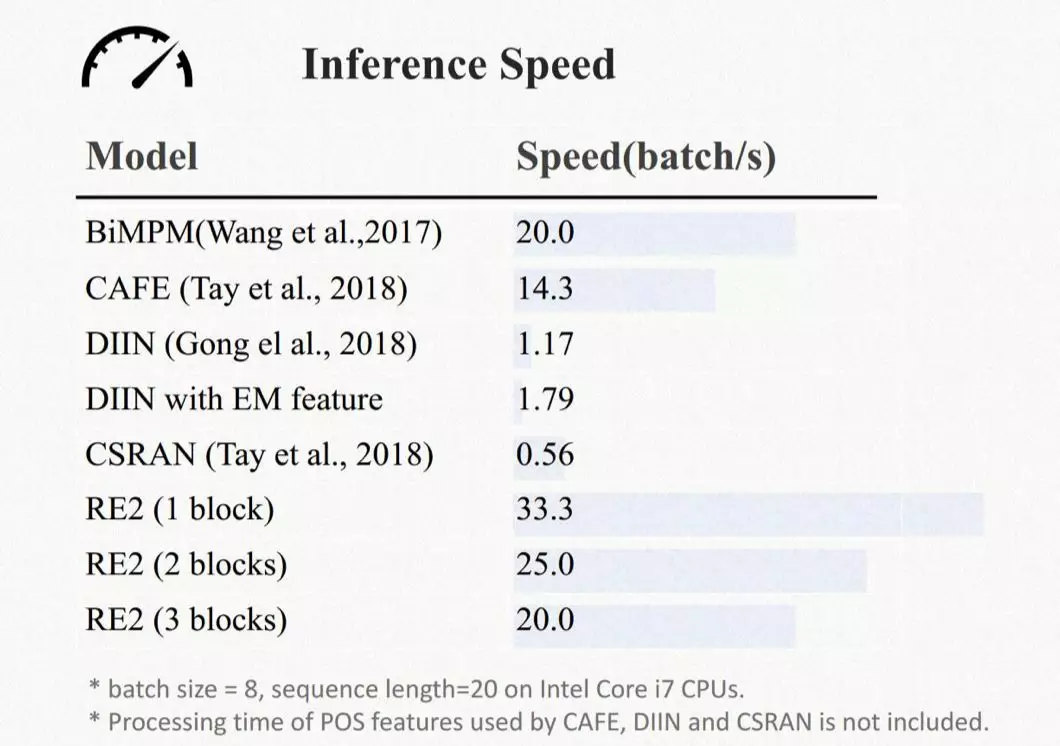

Based on these conclusions, we needed a model with fewer parameters, a simpler structure, and a lower inference cost. Such a model is easier to train and better suited to the production environment. Beyond these prerequisites, we also wanted to leverage the advantages of deep networks, so that we could easily add network layers and give our model greater expressive power.

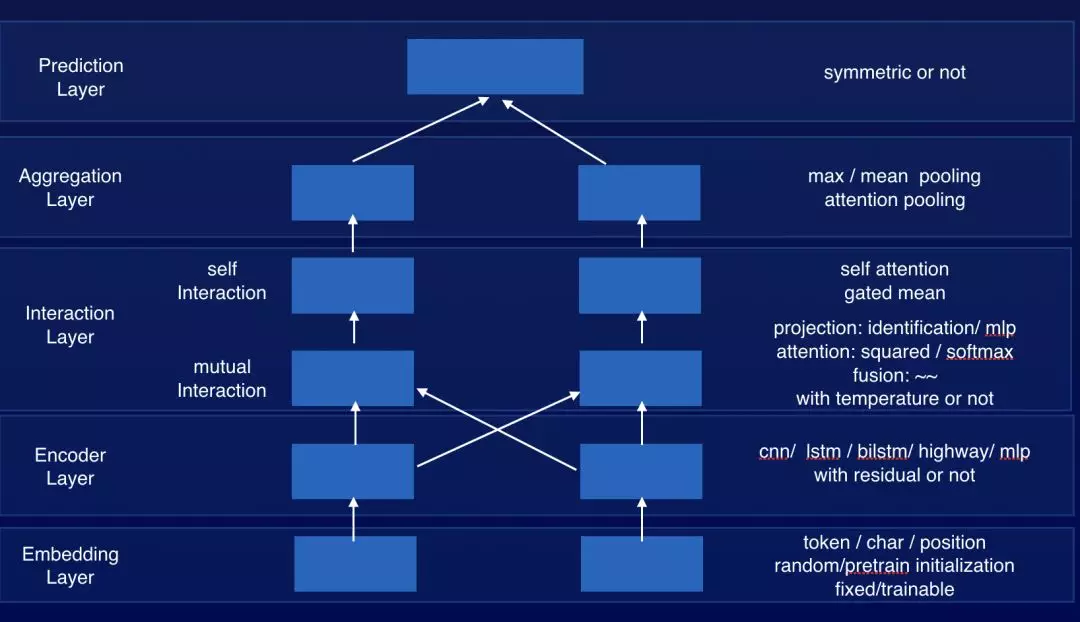

By summarizing the various models proposed by the academic community, including the Decomposable Attention Model, CAFE, and DIIN, we designed five layers for our matching model: the embedding, encoder, interaction, aggregation, and prediction layers. Each layer admits several different designs. We used a pluggable framework for the matching model and designed a typical implementation for each layer.
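The pluggable five-layer design can be pictured as a pipeline whose stages are interchangeable functions. The sketch below is a hypothetical illustration, not Alibaba's code: the `MatchingModel` class and the toy layer functions are assumptions chosen only to show how one layer's implementation can be swapped without touching the others.

```python
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]
Matrix = List[Vector]  # one vector per token position


@dataclass
class MatchingModel:
    """Hypothetical pluggable matcher: each field is one layer's implementation."""

    embed: Callable[[List[str]], Matrix]
    encode: Callable[[Matrix], Matrix]
    interact: Callable[[Matrix, Matrix], Matrix]
    aggregate: Callable[[Matrix], Vector]
    predict: Callable[[Vector, Vector], float]

    def score(self, tokens_a: List[str], tokens_b: List[str]) -> float:
        a = self.encode(self.embed(tokens_a))
        b = self.encode(self.embed(tokens_b))
        # Each sentence interacts with the other, in both directions.
        a_int, b_int = self.interact(a, b), self.interact(b, a)
        return self.predict(self.aggregate(a_int), self.aggregate(b_int))


# Toy layer implementations (all hypothetical), each replaceable on its own.
def length_embed(tokens: List[str]) -> Matrix:
    return [[float(len(t)), 1.0] for t in tokens]


def identity_encode(seq: Matrix) -> Matrix:
    return seq


def no_interaction(seq: Matrix, other: Matrix) -> Matrix:
    return seq


def max_pool(seq: Matrix) -> Vector:
    return [max(column) for column in zip(*seq)]


def dot_predict(va: Vector, vb: Vector) -> float:
    return sum(x * y for x, y in zip(va, vb))


toy_model = MatchingModel(length_embed, identity_encode, no_interaction, max_pool, dot_predict)
print(toy_model.score(["green", "bike"], ["the", "bike"]))  # 21.0
```

Replacing `max_pool` with a mean-pooling function, for example, changes only the aggregation layer; the other four layers are untouched.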

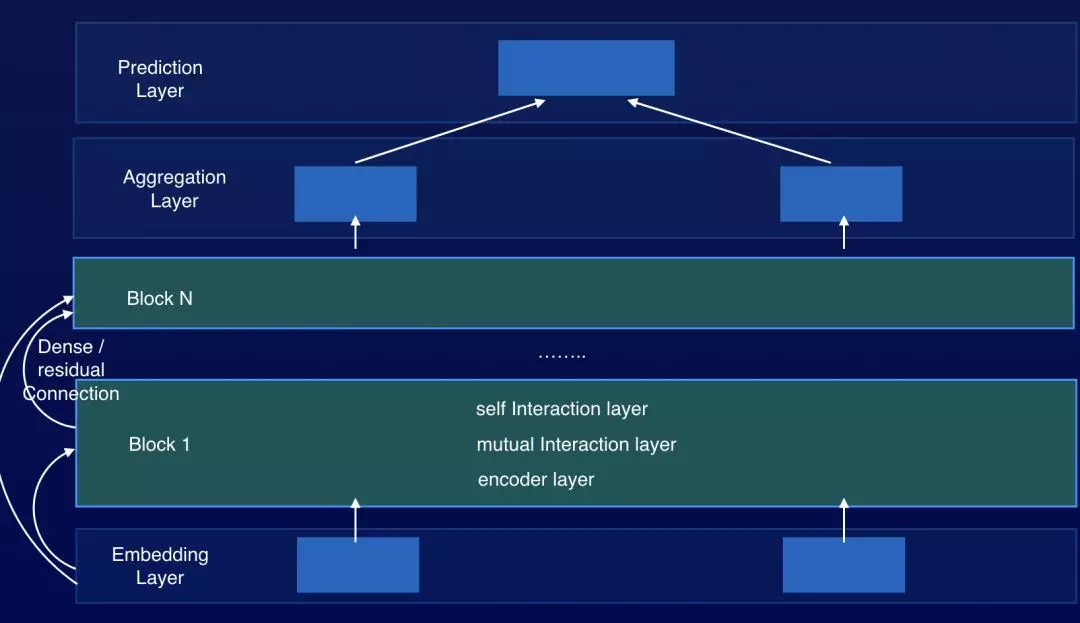

Next, to enhance the expressive power of our model, we packaged the encoder and interaction layers into one block. By stacking multiple blocks, and thus performing multiple inter-sentence alignments, our model can more fully capture the matching relationship between two sentences.

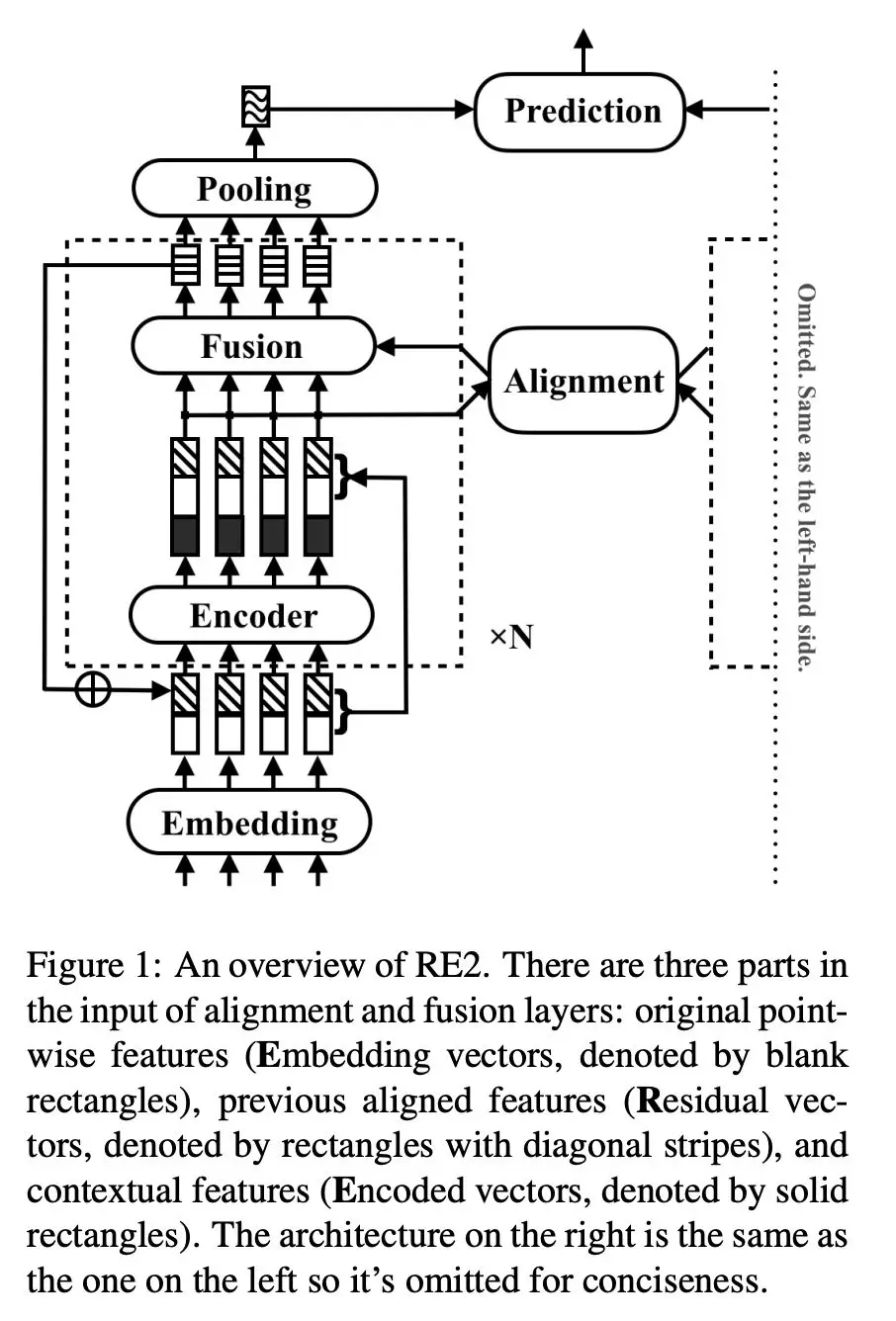

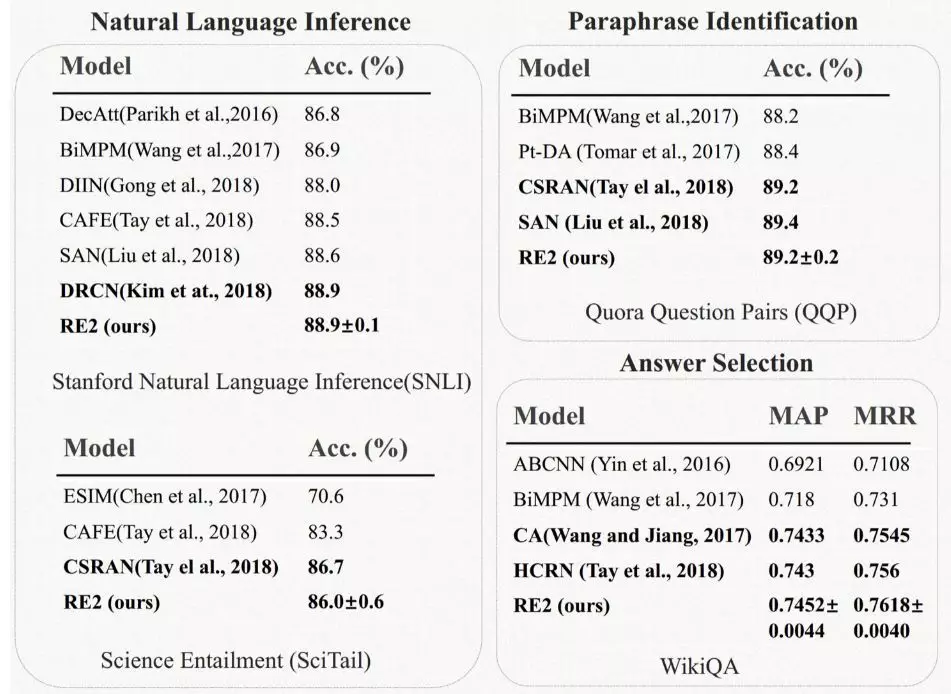

We then conducted many experiments based on this framework and arrived at the RE2 model structure. RE2 achieves strong results on various public data sets and on our business data, as shown in the following figure.

RE2 consists of N blocks whose parameters are completely independent. In each block, an encoder first generates a contextual representation. Then, the input and output of the encoder are concatenated and used for inter-sentence alignment. Finally, the output of the block is obtained through fusion. The output of block i is fused with the input of that block through an augmented residual connection, and the result is used as the input of block i+1.

Below, we describe each part in detail.

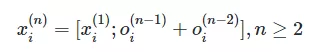

In our model, consecutive blocks are connected by augmented residual connections. We denote the output of block $n$ at position $i$ as $o_i^{(n)}$, where $o_i^{(0)}$ is a pure-zero vector.

The input of the first block is $x_i^{(1)}$, which is the output of the embedding layer. With augmented residual connections, the input of block $n$ ($n \ge 2$) is:

$$x_i^{(n)} = [x_i^{(1)};\ o_i^{(n-1)} + o_i^{(n-2)}]$$

where $[;]$ indicates a concatenation operation.
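The augmented residual connection, in which the input of block n is the embedding output concatenated with the sum of the two previous block outputs (the output of block 0 being all zeros), can be traced with plain Python lists. This is a minimal sketch of that wiring only: each `block` is a hypothetical stand-in for the real encoder, alignment, and fusion computation, and vectors are lists of floats.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[Vector]  # one vector per token position


def run_blocks(x1: Matrix, blocks: List[Callable[[Matrix], Matrix]]) -> Matrix:
    """Stack blocks with augmented residual connections; return the last output.

    x1: output of the embedding layer, which is also the input of block 1.
    blocks: hypothetical stand-ins for encoder + alignment + fusion; every block
            must return vectors of one fixed width so outputs can be summed.
    """
    if not blocks:
        raise ValueError("need at least one block")
    outputs: List[Matrix] = []  # outputs[k] holds o^(k+1)
    x = x1
    for block in blocks:
        o = block(x)
        # o^(0) is a pure-zero sequence, used while only one output exists.
        before = outputs[-1] if outputs else [[0.0] * len(v) for v in o]
        outputs.append(o)
        # Input of the next block at each position i: [x_i^(1); o_i^(n) + o_i^(n-1)].
        x = [e + [p + q for p, q in zip(cur, old)] for e, cur, old in zip(x1, o, before)]
    return outputs[-1]


# Toy block (hypothetical): sums each position's features into one value.
sum_block = lambda seq: [[sum(v)] for v in seq]
print(run_blocks([[1.0, 2.0]], [sum_block] * 3))  # [[12.0]]
```

Concatenating $x^{(1)}$ keeps the original word-vector information available to every block, while summing the two previous outputs carries forward the alignment results of earlier blocks.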

Three types of information are input to the interaction layer. The first is the original point-wise information, that is, the original word vectors, which are used in every block. The second is the contextual information produced by the encoder. The third is the aligned information processed by the previous two blocks. As the experimental analysis shows, all three types of information play an important role in the final results. In our model, the encoders use a two-layer CNN with SAME padding.
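SAME padding keeps each encoder output as long as its input, so position i of the encoder output can be concatenated with position i of the encoder input. Below is a minimal single-channel sketch in pure Python; a real encoder would use multi-channel convolutions in TensorFlow, and the kernel values in the usage line are hypothetical. GeLU is used because the article reports it as the activation function on the public data sets.

```python
import math
from typing import List


def conv1d_same(seq: List[float], kernel: List[float], bias: float = 0.0) -> List[float]:
    """Single-channel 1-D convolution, stride 1, SAME padding.

    Like deep-learning libraries, this computes a cross-correlation (the kernel
    is not flipped). Zero padding on both sides keeps the output length equal
    to the input length; this sketch supports odd kernel sizes only.
    """
    k = len(kernel)
    if k % 2 == 0:
        raise ValueError("this sketch assumes an odd kernel size")
    pad = k // 2
    padded = [0.0] * pad + list(seq) + [0.0] * pad
    return [sum(w * padded[i + j] for j, w in enumerate(kernel)) + bias for i in range(len(seq))]


def gelu(x: float) -> float:
    """Exact GeLU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def two_layer_encoder(seq: List[float], k1: List[float], k2: List[float]) -> List[float]:
    """Two stacked SAME-padded convolutions, each followed by GeLU."""
    hidden = [gelu(v) for v in conv1d_same(seq, k1)]
    return [gelu(v) for v in conv1d_same(hidden, k2)]


print(conv1d_same([1.0, 2.0, 3.0], [1.0, 1.0, 1.0]))  # [3.0, 6.0, 5.0]
```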

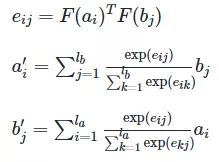

At the alignment layer, we use an alignment mechanism based on the Decomposable Attention Model (Parikh et al., 2016).
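The soft alignment of the Decomposable Attention Model scores every pair of positions with a dot product, e_ij = F(a_i) · F(b_j), and represents each position of one sentence as a softmax-weighted sum of the other sentence's vectors. The sketch below is a minimal pure-Python version under one stated assumption: the projection F defaults to the identity, whereas a trained model would use a learned feed-forward layer.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Matrix = List[Vector]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: Vector, v: Vector) -> float:
    return sum(x * y for x, y in zip(u, v))


def weighted_sum(weights: List[float], vectors: Matrix) -> Vector:
    return [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(len(vectors[0]))]


def align(a: Matrix, b: Matrix, project: Callable[[Vector], Vector] = lambda v: v) -> Tuple[Matrix, Matrix]:
    """Return (a_aligned, b_aligned): each position of a as a weighted sum of b,
    and each position of b as a weighted sum of a."""
    fa = [project(v) for v in a]
    fb = [project(v) for v in b]
    e = [[dot(x, y) for y in fb] for x in fa]  # e[i][j] = F(a_i) . F(b_j)
    a_aligned = [weighted_sum(softmax(row), b) for row in e]  # normalize over j
    columns = [[e[i][j] for i in range(len(a))] for j in range(len(b))]
    b_aligned = [weighted_sum(softmax(col), a) for col in columns]  # normalize over i
    return a_aligned, b_aligned
```

For a = [[1.0, 0.0]] and b = [[1.0, 0.0], [0.0, 1.0]], the single position of a attends more strongly to the first vector of b, because that pair has the larger dot product.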

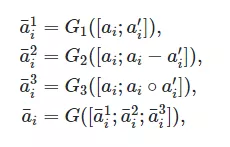

At the fusion layer, we build on CAFE, which computes three scalar feature values through factorization machines (FM) based on concat, multiply, and sub operations. For the same three operations, we instead use three independent fully-connected networks to calculate three vector features, and then concatenate the three vectors and project the result.
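A minimal sketch of this fusion step for one position: three independent fully-connected layers produce vector features from the concat, sub, and multiply views of a vector and its aligned counterpart, and a final layer projects their concatenation. Assumptions, not stated in this article: each network also receives the original vector alongside the operation result (the layout used in the RE2 paper), the weight names `g1`, `g2`, `g3`, and `g` are hypothetical, and ReLU replaces GeLU only to keep the arithmetic simple.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Layer = Tuple[List[Vector], Vector]  # (weight rows, bias)


def relu(z: float) -> float:
    return max(0.0, z)


def dense(x: Vector, layer: Layer) -> Vector:
    """Fully-connected layer: ReLU(W x + b), with W stored as a list of rows."""
    weights, bias = layer
    return [relu(sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(weights, bias)]


def fuse(a_i: Vector, a_aligned_i: Vector, params: Dict[str, Layer]) -> Vector:
    """Fuse one position's vector with its aligned counterpart."""
    diff = [x - y for x, y in zip(a_i, a_aligned_i)]
    prod = [x * y for x, y in zip(a_i, a_aligned_i)]
    f_concat = dense(a_i + a_aligned_i, params["g1"])  # concat view
    f_sub = dense(a_i + diff, params["g2"])            # sub view
    f_mul = dense(a_i + prod, params["g3"])            # multiply view
    # Concatenate the three vector features and project them with one more layer.
    return dense(f_concat + f_sub + f_mul, params["g"])
```

Compared with scalar FM features, each vector feature keeps several dimensions of information about how the two representations relate.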

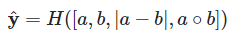

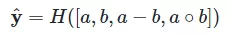

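The prediction layer is the output layer. It takes the pooled vector representations $v_1$ and $v_2$ of the two sentences as input. For text similarity tasks, we used the following symmetric format:

$$\hat{y} = H([v_1; v_2; |v_1 - v_2|; v_1 \circ v_2])$$

For text entailment and question-answer matching tasks, we used:

$$\hat{y} = H([v_1; v_2; v_1 - v_2; v_1 \circ v_2])$$

where $H$ is a multi-layer fully-connected network and $\circ$ denotes element-wise multiplication.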
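The input that the multi-layer network H receives can be built with a few list operations. This sketch assumes the feature layout of the RE2 paper, [v1; v2; v1 - v2; v1 * v2], with the difference replaced by |v1 - v2| in the symmetric variant. It also assumes max pooling as the hypothetical aggregation that produces v1 and v2. H itself is omitted.

```python
from typing import List

Vector = List[float]


def max_pool(seq: List[Vector]) -> Vector:
    """Hypothetical aggregation: element-wise max over token positions."""
    return [max(column) for column in zip(*seq)]


def prediction_features(v1: Vector, v2: Vector, symmetric: bool) -> Vector:
    """Build [v1; v2; v1 - v2; v1 * v2], using |v1 - v2| when symmetric=True.

    The absolute difference and the product do not depend on the order of the
    two sentences; the leading [v1; v2] part still does.
    """
    diff = [abs(x - y) if symmetric else x - y for x, y in zip(v1, v2)]
    prod = [x * y for x, y in zip(v1, v2)]
    return v1 + v2 + diff + prod


print(prediction_features([1.0, 2.0], [3.0, 1.0], symmetric=False))
# [1.0, 2.0, 3.0, 1.0, -2.0, 1.0, 3.0, 2.0]
```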

To verify the model's performance, we selected three types of natural language processing tasks: natural language inference, paraphrase identification, and question answering. We used the public data sets SNLI, MultiNLI, SciTail, Quora Question Pairs, and WikiQA. We used accuracy (Acc) as the evaluation metric for the first two tasks, and mean average precision (MAP) and mean reciprocal rank (MRR) for the third.
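MAP and MRR score the ranked list of candidate answers for each question. The minimal sketch below uses the standard definitions. Each question is represented as a list of 0/1 relevance labels in ranked order; that input format is an assumption for illustration.

```python
from typing import List, Sequence


def reciprocal_rank(labels: Sequence[int]) -> float:
    """1 / rank of the first correct candidate (labels in ranked order)."""
    for rank, relevant in enumerate(labels, start=1):
        if relevant:
            return 1.0 / rank
    return 0.0


def average_precision(labels: Sequence[int]) -> float:
    """Mean of precision@k over the ranks k that hold a correct candidate."""
    hits, total = 0, 0.0
    for rank, relevant in enumerate(labels, start=1):
        if relevant:
            hits += 1
            total += hits / rank
    # Questions with no correct candidate score 0 here; some evaluation
    # scripts drop such questions instead.
    return total / hits if hits else 0.0


def mean_reciprocal_rank(queries: List[Sequence[int]]) -> float:
    return sum(reciprocal_rank(q) for q in queries) / len(queries)


def mean_average_precision(queries: List[Sequence[int]]) -> float:
    return sum(average_precision(q) for q in queries) / len(queries)


print(mean_reciprocal_rank([[0, 1, 0, 1], [1, 0, 0]]))  # 0.75
```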

We implemented our model in TensorFlow and trained it on an NVIDIA P100 GPU. We used the Natural Language Toolkit (NLTK) to tokenize the English data sets, and then uniformly converted the text to lower case and removed all punctuation. We did not limit the sequence length; instead, we padded the sequences in each batch to the maximum sequence length in that batch. We used the 840B 300d GloVe vectors as word vectors, which are kept fixed during training. All out-of-vocabulary (OOV) words are represented as pure-zero vectors and are not updated during training. All other parameters are initialized with He initialization and then normalized with weight normalization. Each convolution layer or fully-connected layer is followed by a dropout layer with a keep rate of 0.8. The output layer is a two-layer feed-forward network. The number of blocks ranges from 1 to 5.
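Two of these preprocessing choices, per-batch padding and zero vectors for OOV words, can be sketched in a few lines of pure Python. The padding id `0`, the function names, and the tiny vector table in the usage line are hypothetical; the real setup looks up 300-dimensional GloVe vectors.

```python
from typing import Dict, List, Tuple


def pad_batch(batch: List[List[int]], pad_id: int = 0) -> Tuple[List[List[int]], List[int]]:
    """Pad each sequence to the longest sequence in this batch (no global cap).

    Returns the padded batch and the original lengths, which a model can use
    to build masks. pad_id=0 is an assumption.
    """
    max_len = max((len(seq) for seq in batch), default=0)
    padded = [seq + [pad_id] * (max_len - len(seq)) for seq in batch]
    return padded, [len(seq) for seq in batch]


def embed_tokens(tokens: List[str], vectors: Dict[str, List[float]], dim: int) -> List[List[float]]:
    """Look up fixed word vectors; out-of-vocabulary tokens map to zero vectors."""
    return [list(vectors.get(tok, [0.0] * dim)) for tok in tokens]


print(pad_batch([[4, 5, 6], [7]]))  # ([[4, 5, 6], [7, 0, 0]], [3, 1])
print(embed_tokens(["bike", "qwzx"], {"bike": [0.1, 0.2]}, 2))  # [[0.1, 0.2], [0.0, 0.0]]
```

Padding only to each batch's own maximum avoids wasting computation on padding in batches of short sentences.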

On these public data sets, the hidden layer size is set to 150, and the Gaussian error linear unit (GeLU) is used as the activation function. Adam is used as the optimizer. The learning rate warms up linearly and then decays exponentially. The initial learning rate ranges from 1e-4 to 3e-3, and the batch size ranges from 64 to 512.
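The schedule described here, a linear warm-up followed by exponential decay, can be written as a single function of the training step. This is a sketch under stated assumptions: the warm-up length, decay rate, and decay interval are hypothetical. The article's "initial learning rate" is read here as the rate reached at the end of warm-up, which is also an assumption.

```python
def learning_rate(step: int, peak_lr: float = 1e-3, warmup_steps: int = 1000,
                  decay_rate: float = 0.95, decay_steps: int = 1000) -> float:
    """Linear warm-up to peak_lr, then exponential decay (all defaults hypothetical)."""
    if step < warmup_steps:
        # Ramp linearly so that the last warm-up step reaches peak_lr.
        return peak_lr * (step + 1) / warmup_steps
    # Smooth (non-staircase) decay: multiply by decay_rate every decay_steps steps.
    return peak_lr * decay_rate ** ((step - warmup_steps) / decay_steps)


for step in (0, 500, 999, 2000):
    print(step, learning_rate(step))
```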

All of the results on these public data sets were obtained without using BERT.

The model performed well and was highly competitive in terms of parameter count and inference speed. It can therefore be widely used in industry scenarios such as AlimeBot, where it has significantly improved business metrics such as matching accuracy.

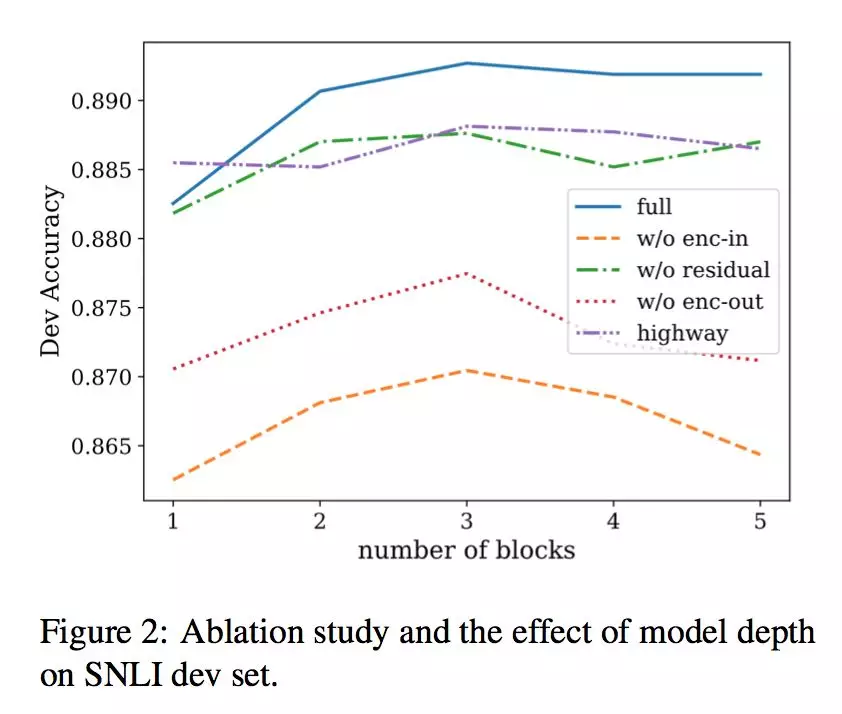

We constructed the following four baseline models:

The above figure shows the results on SNLI. Comparing models 1 and 3 with the complete model, we found that results are poorer when only the encoder input or only the encoder output is used at the alignment layer. This indicates that the original word vector information, the alignment information generated by previous blocks, and the contextual information generated by the encoders in the current block are all indispensable for good results. Comparing model 2 with the complete model, we found that the residual connections between blocks play an important role. And comparing model 4 with the complete model, we found that direct concatenation is a better solution.

Again, as the above figure shows, a network built with augmented residual connections remains effective as it gets deeper and supports more network layers. In the other baseline models, performance drops significantly once the number of blocks exceeds three, which makes them unsuitable for deeper models.

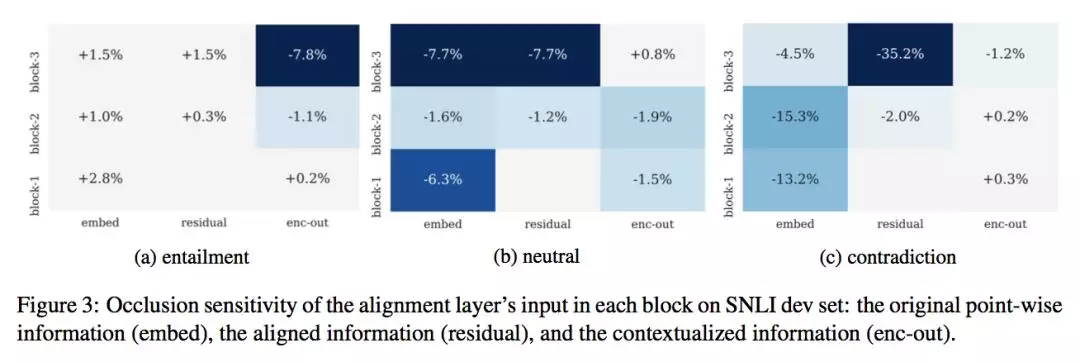

The input to the alignment layer is a concatenation of three types of information: the original word vector information, the alignment information generated by previous blocks, and the contextual information generated by the encoders in the current block. To better understand the impact of each type of information on the final results, we performed an occlusion sensitivity analysis, following related work in computer vision. We used an RE2 model with three blocks on the SNLI dev set. We masked certain input features at the alignment layer of a block to pure-zero vectors and observed the resulting accuracy changes for the entailment, neutral, and contradiction classes.
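The occlusion procedure works by zeroing one slice of the concatenated input and comparing per-class accuracy with and without the mask. In the actual experiment the masking happens inside a trained three-block RE2 model. In this simplified sketch, a hypothetical predictor over a flat feature vector stands in for the model, and the segment layout is also hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Features = List[float]
Example = Tuple[Features, str]  # (alignment-layer input, gold label)


def mask_segment(features: Features, segments: Dict[str, Tuple[int, int]], name: str) -> Features:
    """Replace one named slice [start, end) of the feature vector with zeros."""
    start, end = segments[name]
    return features[:start] + [0.0] * (end - start) + features[end:]


def per_class_accuracy(examples: List[Example], predict: Callable[[Features], str],
                       transform: Callable[[Features], Features] = lambda f: f) -> Dict[str, float]:
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for features, label in examples:
        total[label] += 1
        if predict(transform(features)) == label:
            correct[label] += 1
    return {label: correct[label] / total[label] for label in total}


def occlusion_effect(examples: List[Example], predict: Callable[[Features], str],
                     segments: Dict[str, Tuple[int, int]], name: str) -> Dict[str, float]:
    """Per-class accuracy drop when the segment `name` is masked to zeros."""
    base = per_class_accuracy(examples, predict)
    masked = per_class_accuracy(examples, predict, lambda f: mask_segment(f, segments, name))
    return {label: base[label] - masked[label] for label in base}
```

A large drop for one class when a segment is masked suggests that the class depends heavily on that type of information.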

We reached the following conclusions:

First, masking the original word vector information significantly reduced accuracy on the neutral and contradiction classes. This indicates that the original word vector information plays a crucial role in identifying the differences between two sentences.

Next, masking the alignment information generated by previous blocks also affects the neutral and contradiction classes. In particular, masking the alignment information generated by the immediately preceding block has the most significant effect on the final results. This indicates that residual connections enable the current block to better focus on the most important information.

Finally, masking the encoder output affects the entailment class. We think this is because the encoders model phrase-level semantics, and the encoder output helps in identifying entailment.

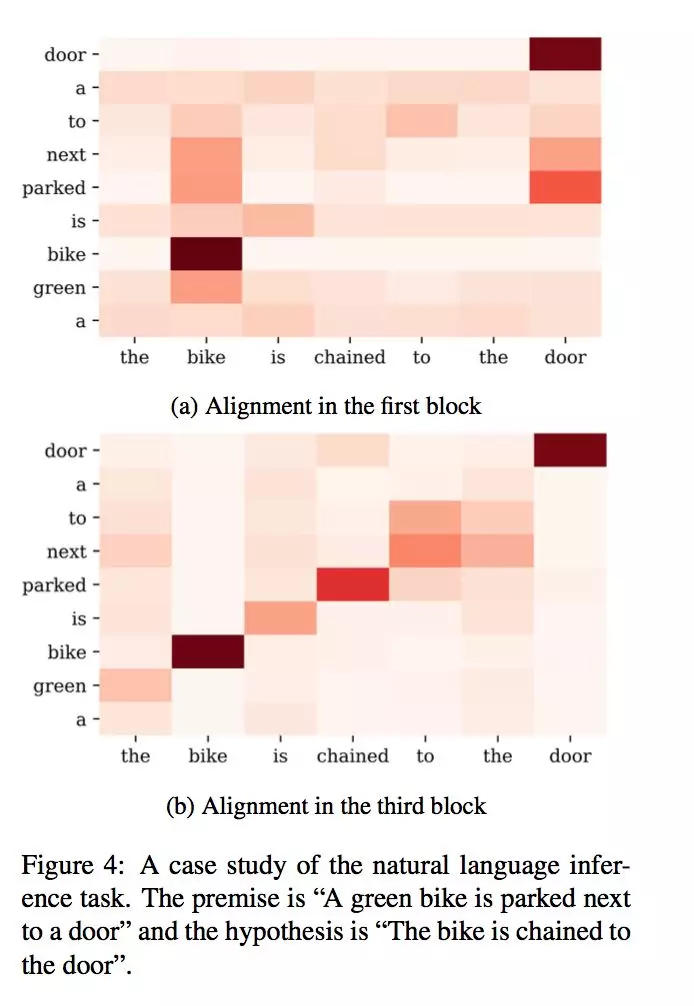

In this section, we present a specific case to analyze the role of multiple blocks.

Consider the two sentences "A green bike is parked next to a door" and "The bike is chained to the door". In the first block, the alignment is at the word or phrase level, and there is only a weak connection between "parked next to" and "chained to". In the third block, these two phrases are closely aligned, so the model can identify the overall semantic relationship between the two sentences based on the relationship between "parked next to" and "chained to". This example shows that each block focuses on different information during alignment, and that after multiple alignments the model better understands the semantic relationship between the two sentences.

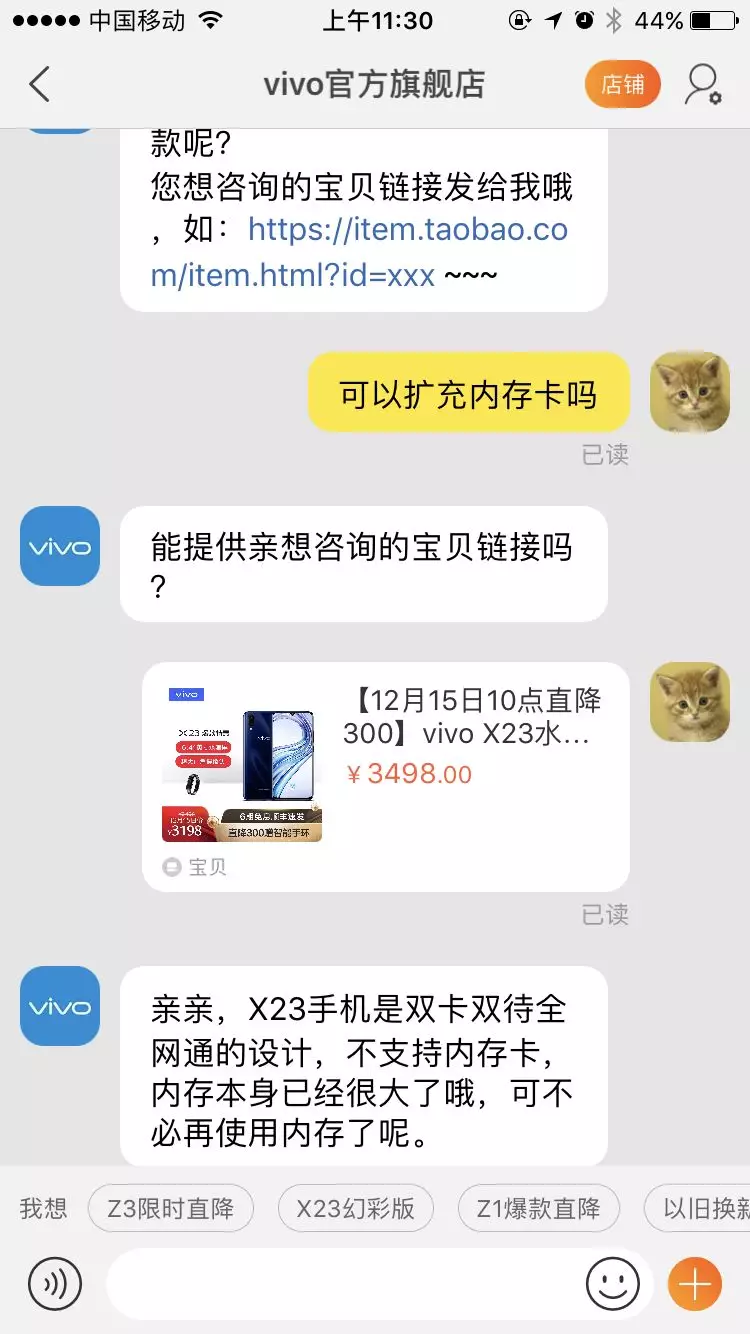

With AlimeBot, merchants maintain custom knowledge bases, and we provide solutions for knowledge positioning. If AlimeBot cannot provide an accurate reply, it recommends relevant knowledge. The text matching model introduced in this article is used in both of these AlimeBot business modules. We have built optimized models for seven major industries (clothing and apparel, beauty and cosmetics, footwear, home appliances and electrics, food and beverage, maternity and childcare, and digital services), as well as a basic dashboard model and a model for recommending relevant knowledge. While maintaining a high coverage rate, the industry models increased accuracy from less than 80% to more than 89%, the basic dashboard model increased accuracy to 84%, and the model for recommending relevant knowledge increased the effective click rate from about 14% to over 19%.

The following figure shows AlimeBot's background configuration of the custom knowledge base.

The following figure shows an AlimeBot chat example:

For industry scenarios, we implemented a concise and highly expressive model framework and achieved good results on both public data sets and business data sets.

This "general-purpose" semantic matching model has delivered significant improvements. However, we need to continue working on AlimeBot solutions that are better suited to different industries and scenarios. For example, we can integrate external knowledge, such as product and event knowledge, into text matching.

We also need to keep improving the technical systems behind FAQbots, and we hope to improve text classification and few-shot classification. With the emergence of BERT, various natural language processing tasks have reached new state-of-the-art (SOTA) levels. However, BERT models are very large and demand a great deal of computing resources. Therefore, we plan to use a teacher-student framework to transfer the capabilities of BERT models to the RE2 model.
