By Alexandru Andrei, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

The previous article in this series can be found here: Use Your Storage Space More Effectively with ZFS - Introduction.

We will be using Ubuntu in this tutorial because, at the moment, it has the best support for ZFS on Linux. Most other distributions offer ZFS as a separate module that has to be recompiled with DKMS every time the kernel is updated, which can occasionally cause problems. Ubuntu ships the precompiled module in every kernel package.

It's worth noting that the BSD operating systems integrate ZFS exceptionally well, but they have fewer users and might seem slightly alien to people used to Linux-based environments. If you'd prefer an OS like FreeBSD, most of the commands described below have very similar syntax. The main difference is that devices have different names there, so you'd have to replace names like vdb with their BSD equivalents.

Log in to the ECS Cloud Console, create a new instance and choose the newest Ubuntu Long Term Support (LTS) release that is available, such as 16.04, 18.04 or 20.04. LTS versions are published in April of even-numbered years and are supported for much longer; the releases in between are interim releases with a much shorter support period. Assign at least 2GB of memory to the instance, or 4GB or more if you want to improve performance. The more memory ZFS has, the more data it can cache, so that frequently accessed information is delivered much faster to users and applications.
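If you want to double-check these requirements once you are logged in to the instance, the sketch below may help. It is not part of Ubuntu or ZFS: is_lts_release and mem_total_mib are hypothetical helper names, and the code assumes Ubuntu's YY.MM version format (as found in VERSION_ID in /etc/os-release) and the /proc/meminfo layout, where MemTotal is given in kB.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Ubuntu or ZFS) for checking a new instance.

# Succeeds for an LTS version string such as "20.04": Ubuntu LTS releases
# come out in April (.04) of even-numbered years. "18.04.6" is accepted too.
is_lts_release() {
  local year=${1%%.*} month=${1#*.}
  month=${month%%.*}
  [ "$month" = "04" ] && [ $(( year % 2 )) -eq 0 ]
}

# Prints total memory in MiB from a /proc/meminfo-style file (MemTotal is in kB).
mem_total_mib() {
  awk '$1 == "MemTotal:" { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}
```

For example, after running . /etc/os-release, the command is_lts_release "$VERSION_ID" && echo LTS prints LTS on 20.04, and mem_total_mib prints the installed memory in MiB. Expect that value to be slightly below the nominal size, since the kernel reserves part of the memory.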

If you intend to add a lot of Cloud Disks to your instance, allocating two or more CPU cores can improve performance by distributing input/output requests across multiple computing units.

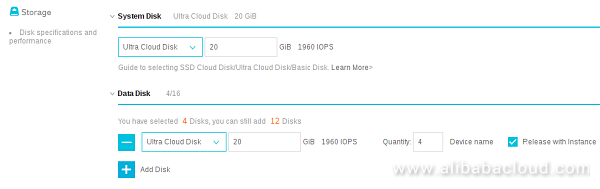

To be able to learn about and test every kind of vdev, we will be adding 4 data disks to the instance:

For testing purposes, any size will work. In production systems, however, larger Cloud Disks get more IOPS, which leads to better performance. SSD Cloud Disks are much faster than Ultra Cloud Disks. As a compromise between cost, performance and total storage capacity, you can use Ultra Cloud Disks for data and an SSD Cloud Disk for cache. This is explained later, in the section dealing with cache vdevs (L2ARC).

Note that you can also create and attach Cloud Disks after the instance has been created, so you can make changes to your ZFS storage pool whenever and however you see fit, without having to reboot your system.

Connect to your instance with an SSH client and log in as root. Upgrade all packages to bring your system up to date with all of the latest bug and security fixes:

```
apt update && apt upgrade
```

Reboot:

```
systemctl reboot
```

Wait at least 30 seconds so that the instance has time to reload the operating system, then log back in as root. Afterward, install the ZFS utilities:

```
apt install zfsutils-linux
```

The benefits of this structure are:

A disk vdev can later be converted into a mirror by attaching another device to it (with zpool attach). You will have to mirror each disk vdev in the pool, though.

But this is a non-redundant type of vdev, meaning:

This type of vdev should only be used with data that can be easily recovered. Since Alibaba Cloud Disks have their own redundancy methods, the risks involved with using this type of virtual device are significantly reduced but are still higher than those of mirror and Raid-Z vdevs.

To view a list of disks and partitions available on your system:

```
lsblk
```

vda represents the first disk (in this case the system disk, where the operating system is stored). vda1 is the first partition on the first disk, vdb is the second disk, vdc the third, and so on.
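Before creating a pool, it can help to confirm which disks are still empty and to preview the layout. This is a minimal sketch, assuming bash and util-linux's lsblk; unused_disks and preview_then_create are hypothetical helpers, not ZFS commands. preview_then_create relies on the -n flag of zpool create, which only displays the configuration that would be used, without creating anything.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not ZFS commands) for picking disks safely.

# Reads `lsblk -l -n -o NAME,TYPE,PKNAME` output on stdin and prints every
# disk that has no partitions. Caveat: a disk formatted directly, without a
# partition table, is still listed, so double-check before using it.
unused_disks() {
  awk '
    $2 == "disk" { order[++n] = $1 }
    $2 == "part" && NF >= 3 { has_part[$3] = 1 }
    END { for (i = 1; i <= n; i++) if (!(order[i] in has_part)) print order[i] }
  '
}

# Shows the layout `zpool create` would build (-n: dry run, nothing is
# written), then creates the pool with -f only after an explicit "y".
# Warning: -f overrides the safety checks, so any data on the disks is lost.
preview_then_create() {
  local pool=$1 answer
  shift
  zpool create -n "$pool" "$@" || echo "zpool reported the problems shown above"
  printf 'Create pool %s on %s? [y/N] ' "$pool" "$*"
  read -r answer
  if [ "$answer" != "y" ]; then
    echo "Aborted."
    return 1
  fi
  zpool create -f "$pool" "$@"
}
```

Usage: lsblk -l -n -o NAME,TYPE,PKNAME | unused_disks lists the candidates, and preview_then_create first vdb vdc shows the planned layout before asking for confirmation.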

Create a pool named "first", containing two disks:

```
zpool create -f first vdb vdc
```

Be very careful with this command so that you don't mix up device names and overwrite useful data. You can first run it without the -f (force) parameter, which can give you warnings like these about possible mistakes:

```
root@ubuntu:~# zpool create first vdb vdc
invalid vdev specification
use '-f' to override the following errors:
/dev/vdb1 is part of potentially active pool 'fourth'
```

In our case, we have to use -f initially, otherwise we would get a message like this:

```
root@ubuntu:~# zpool create second vdd vde
invalid vdev specification
use '-f' to override the following errors:
/dev/vdd does not contain an EFI label but it may contain partition
information in the MBR.
/dev/vde does not contain an EFI label but it may contain partition
information in the MBR.
```

You can view pool status and structure with:

```
zpool status
```

The output in this case should look like this:

```
root@ubuntu:~# zpool status
  pool: first
 state: ONLINE
  scan: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        first       ONLINE       0     0     0
          vdb       ONLINE       0     0     0
          vdc       ONLINE       0     0     0

errors: No known data errors
```

Under "NAME" we find the name of the pool, in this case "first", and under it the names of the vdevs that compose it: "vdb" and "vdc". For disk vdevs, these coincide with the names of the physical devices. Redundant vdevs add one more level: under the pool name we find the names of the mirror or Raid-Z vdevs it contains, and under each vdev, the names of the physical devices that make it up. You will find examples in the next sections.

"READ", "WRITE" and "CKSUM" show how many errors ZFS has encountered while reading, writing or verifying the checksums of data. When any of them is not 0, run zpool scrub to scan the pool for other possible errors, wait for it to finish, and then replace the faulty devices with zpool replace.

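To spot non-zero counters without reading the whole table, a small filter like the one below can help. It is a sketch: devices_with_errors is a hypothetical name, and it assumes the column layout shown above (NAME, STATE, READ, WRITE, CKSUM). For a quick overall check, zpool status -x prints only the pools that have problems.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `zpool status` output on stdin and prints every
# row of the config table whose READ, WRITE or CKSUM counter is not 0.
# Counters are compared as text, so abbreviated values such as "1.2K" count too.
devices_with_errors() {
  awk '
    $1 == "NAME" && $2 == "STATE" { in_config = 1; next }
    /^errors:/ { in_config = 0 }
    in_config && NF >= 5 && ($3 != "0" || $4 != "0" || $5 != "0") {
      print $1, "read=" $3, "write=" $4, "cksum=" $5
    }
  '
}
```

Usage: zpool status | devices_with_errors. If it prints anything, start zpool scrub first and follow its progress with zpool status first.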
To view information about used and free space in the pool, fragmentation, etc.:

```
zpool list
```

For a more detailed view, listing the same info for every physical device:

```
zpool list -v
```

Note, however, that this shows "raw" used and free space, meaning it also accounts for space used by parity and other metadata. If you want to see the space used and available for storing your files, use zfs list instead. More about that in the third tutorial, where we will explore various types of ZFS datasets. Example: in a Raid-Z1 vdev made of three 50GB disks, "zpool list" will show approximately 150GB, while "zfs list" will show only about 100GB, since one disk's worth of space goes to parity. Mirrors are the exception: for a mirror of two 50GB disks, both commands report roughly 50GB.
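To compare the two views for a given pool, a sketch like this one computes how much of the raw space is not available for files. Assumptions: bash, the -H (no headers) and -p (exact byte values) flags of zpool list and zfs list, hypothetical helper names, and used + avail of the pool's top-level dataset as an approximation of usable space.

```shell
#!/usr/bin/env bash
# Hypothetical helper: percentage of raw space that is not available for
# files, from two byte counts (raw from `zpool list`, usable from `zfs list`).
overhead_pct() {
  local raw=$1 usable=$2
  echo $(( (raw - usable) * 100 / raw ))
}

# Hypothetical helper: gathers both numbers for POOL and prints a summary.
# Usable space is approximated as used + avail of the pool's root dataset.
pool_overhead() {
  local pool=$1 raw used avail usable
  raw=$(zpool list -Hp -o size "$pool") || return 1
  used=$(zfs list -Hp -o used "$pool") || return 1
  avail=$(zfs list -Hp -o avail "$pool") || return 1
  usable=$(( used + avail ))
  echo "$pool: raw=$raw usable=$usable overhead=$(overhead_pct "$raw" "$usable")%"
}
```

Running pool_overhead on a pool prints its raw size, approximate usable size and the percentage lost to parity and metadata; the exact figures depend on the layout and on the space ZFS keeps in reserve.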

To add more disk vdevs to the "first" pool:

```
zpool add -f first vdd vde
```

This shows how easy it is to expand a storage pool. However, performance gains are maximized only when all vdevs in a pool have the same amount of free space available. Preferably, the physical devices composing the vdevs should also have the same size.

Let's see how different sizes can be detrimental. Imagine that at some point in the future, one of your vdevs has only 100GB of free space left and you add a 900GB disk to expand the pool. When you write 10GB of data, ZFS sends approximately 1GB to the device with 100GB free and 9GB to the device with 900GB free (it attempts to fill devices at the same rate). If writing 1GB to the first device takes 10 seconds, writing 9GB to the second takes 90 seconds, so the whole write takes about 90 seconds.

To keep performance optimal in such a case, it would be better to create a second pool from two disks of equal size, each 450GB larger than the vdev you want to replace (you could end up with two 1TB disks, for example), and then copy the data from the old pool to the new one. Now reads and writes are distributed evenly: for a 10GB write, 5GB goes to disk1 and 5GB to disk2, so the write completes in 50 seconds instead of 90.

An even better way to grow a pool is to replace the smaller devices in a vdev with bigger ones, one by one, using the zpool replace command, and then, when all of them have been replaced, to run zpool online -e on any of the physical device names to grow the pool. Typing zpool replace or zpool online without arguments shows the full syntax of these commands.
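The arithmetic above, and the replace-one-by-one approach, can be sketched in bash. Both functions are hypothetical helpers. estimate_write uses the simplified model from the paragraph (writes split in proportion to free space, all vdevs writing in parallel at the same speed); ZFS's real allocator is more nuanced. replace_and_grow only strings together the zpool replace, zpool status and zpool online -e commands mentioned above, and it assumes zpool status reports a running resilver with the text "resilver in progress".

```shell
#!/usr/bin/env bash
# Hypothetical helper for the simplified model above: a write of WRITE_GB is
# split across vdevs in proportion to their free space (integer GB, rounded
# down), and every vdev writes in parallel at SEC_PER_GB seconds per GB.
# Usage: estimate_write WRITE_GB SEC_PER_GB FREE_GB [FREE_GB ...]
estimate_write() {
  local write_gb=$1 sec_per_gb=$2 total=0 slowest=0 free share secs
  shift 2
  for free in "$@"; do
    total=$(( total + free ))
  done
  for free in "$@"; do
    share=$(( write_gb * free / total ))
    secs=$(( share * sec_per_gb ))
    if [ "$secs" -gt "$slowest" ]; then
      slowest=$secs
    fi
    echo "vdev with ${free}GB free: ${share}GB in ${secs}s"
  done
  # The vdevs work in parallel, so the slowest one sets the total time.
  echo "write completes in about ${slowest}s"
}

# Hypothetical helper for growing a vdev by swapping in bigger devices.
# Usage: replace_and_grow POOL OLD:NEW [OLD:NEW ...]
replace_and_grow() {
  local pool=$1 pair new new_devices=""
  shift
  for pair in "$@"; do
    new=${pair#*:}
    zpool replace "$pool" "${pair%%:*}" "$new" || return 1
    # Wait for the resilver onto the new device before replacing the next one.
    while zpool status "$pool" | grep -q 'resilver in progress'; do
      sleep 30
    done
    new_devices="$new_devices $new"
  done
  # Let ZFS use the extra space; expanding every new device (not just one)
  # is a cautious choice.
  for new in $new_devices; do
    zpool online -e "$pool" "$new" || return 1
  done
}
```

Running estimate_write 10 10 100 900 reproduces the 90-second example, and estimate_write 10 10 1000 1000 the 50-second one. replace_and_grow first vdb:vdf vdc:vdg would replace vdb with vdf and vdc with vdg (hypothetical device names) before expanding the pool.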

Destroy this pool so we can move on to the next type of vdev:

```
zpool destroy first
```

Mirrors have the following advantages:

Physical devices can also be removed from a mirror (with zpool detach).

The cost of mirrors:

To create a pool that consists of a mirror vdev (we'll name it "second"):

```
zpool create -f second mirror vdb vdc
```

The physical devices added to a mirror should have the same size, because the capacity of a mirror is limited by its smallest device.

Now zpool status will show:

```
root@ubuntu:~# zpool status
  pool: second
 state: ONLINE
  scan: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        second      ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            vdb     ONLINE       0     0     0
            vdc     ONLINE       0     0     0

errors: No known data errors
```

If vdb encounters partial failures such as bad sectors, ZFS can automatically recover lost information from vdc. Even if one of the devices fails entirely, data can be recovered from the other healthy device(s). To increase reliability, you can add even more physical devices to a mirror:

```
zpool attach -f second vdb vdd
```

Instead of "vdb" we could also have used "vdc": the argument after the pool name names an existing device of the mirror that the new device should copy, and vdb and vdc hold identical data. The mirror now has three devices, so we can lose two of them and still recover.
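To see how many failures each mirror can absorb, you could count the devices listed under each mirror-N entry of zpool status. A sketch, assuming the indentation shown in the outputs above (child devices indented deeper than their vdev); mirror_tolerance is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `zpool status` output on stdin and, for every
# mirror-N vdev, counts the devices listed beneath it. An N-way mirror keeps
# a full copy of the data as long as one device survives: N-1 failures.
# Assumes child devices are indented deeper than their vdev, as zpool status
# prints them.
mirror_tolerance() {
  awk '
    function report() {
      print current, width, "devices, survives", width - 1, "failure(s)"
      current = ""
    }
    { match($0, /^[ \t]*/); indent = RLENGTH }
    # A line at or above the mirror indentation closes the current mirror.
    current != "" && indent <= mirror_indent { report() }
    $1 ~ /^mirror-[0-9]+$/ { current = $1; mirror_indent = indent; width = 0; next }
    current != "" && NF >= 2 { width++ }
    END { if (current != "") report() }
  '
}
```

For the three-way mirror built above, zpool status second | mirror_tolerance should print a line like "mirror-0 3 devices, survives 2 failure(s)".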

Just like with disk vdevs, data can be striped across multiple mirror vdevs to improve read/write performance: instead of a pool consisting of one mirror with four devices, you can create a pool consisting of two mirrors, each with two devices. Let's destroy and then recreate the initial mirror:

```
zpool destroy second
zpool create -f second mirror vdb vdc
```

Now add another mirror to the same pool:

```
zpool add -f second mirror vdd vde
```

The new output of zpool status will be:

```
root@ubuntu:~# zpool status
  pool: second
 state: ONLINE
  scan: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        second      ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            vdb     ONLINE       0     0     0
            vdc     ONLINE       0     0     0
          mirror-1  ONLINE       0     0     0
            vdd     ONLINE       0     0     0
            vde     ONLINE       0     0     0

errors: No known data errors
```

In such a structure, if vdb and vdd fail, the pool still holds valid data, because each mirror keeps one healthy device. What we need is at least one healthy device in every mirror vdev (mirror-0, mirror-1, etc.).
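The rule "at least one healthy device in each mirror vdev" can be expressed as a small check. This is a sketch with a hypothetical helper name and argument format: the failed devices as one space-separated string, then one comma-separated device list per mirror.

```shell
#!/usr/bin/env bash
# Hypothetical helper: does a pool of striped mirrors still hold a complete
# copy of the data? $1 is a space-separated list of failed devices; every
# further argument is one mirror vdev, written as comma-separated devices.
mirrors_survive() {
  local failed=" $1 " vdev dev healthy
  shift
  for vdev in "$@"; do
    healthy=0
    for dev in $(printf '%s\n' "$vdev" | tr ',' ' '); do
      case $failed in
        *" $dev "*) ;;       # this device has failed
        *) healthy=1 ;;      # at least one member of the mirror still works
      esac
    done
    if [ "$healthy" -eq 0 ]; then
      echo "pool lost: every device in $vdev failed"
      return 1
    fi
  done
  echo "pool survives: every mirror has a healthy device"
}
```

mirrors_survive "vdb vdd" vdb,vdc vdd,vde reports that the pool survives, while mirrors_survive "vdb vdc" vdb,vdc vdd,vde reports it lost, since mirror-0 has no healthy device left.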

Destroy this pool so we can move to the next section:

```
zpool destroy second
```

In a Raid-Z array, parity information is added to all data so that it can be reconstructed in case of partial failures. Parity is distributed across all physical devices, so there is no dedicated device that stores it. With single parity (Raid-Z1), data can be recovered after losing any one of the storage devices; with double parity (Raid-Z2) you can lose two, and with triple parity (Raid-Z3) you can lose three.
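The idea behind single parity can be shown with XOR, on which Raid-Z1's parity is based. This is a simplified illustration rather than ZFS's on-disk layout: blocks are small integers, there is no striping across sectors, and Raid-Z2/Raid-Z3 compute their additional parity differently.

```shell
#!/usr/bin/env bash
# Simplified illustration of single parity, the idea behind Raid-Z1: the
# parity block is the XOR of the data blocks, so any one missing block is
# the XOR of the parity with the surviving blocks. Real Raid-Z works on disk
# sectors, and Raid-Z2/Z3 compute their extra parity differently (not shown).
xor_all() {
  local result=0 block
  for block in "$@"; do
    result=$(( result ^ block ))
  done
  echo "$result"
}

parity=$(xor_all 23 200 77)              # three data blocks on three devices
recovered=$(xor_all 23 77 "$parity")     # the device holding 200 is lost
echo "parity=$parity recovered=$recovered"   # prints: parity=146 recovered=200
```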

Raid-Z also comes with advantages and disadvantages. The advantages it offers:

The disadvantages:

To create a Raid-Z1 vdev with four devices:

```
zpool create -f third raidz1 vdb vdc vdd vde
```

To create a Raid-Z2 vdev, you just change raidz1 to raidz2.
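To compare layouts such as the four-disk Raid-Z1 above with its Raid-Z2 variant, a rough capacity estimate is enough: a Raid-Z vdev of N equally sized devices with P parity holds about (N - P) devices' worth of data, and needs more than P devices. The helper below is hypothetical and ignores metadata, allocation padding and the space ZFS keeps in reserve, so real numbers come out somewhat lower.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rough usable capacity (GB) of DISKS devices of
# SIZE_GB each. "stripe" means one disk vdev per device, as in the first
# pool; "mirror" means all devices in a single mirror vdev.
# Usage: usable_gb LAYOUT DISKS SIZE_GB  (stripe|mirror|raidz1|raidz2|raidz3)
usable_gb() {
  local layout=$1 disks=$2 size=$3 parity
  case $layout in
    stripe) echo $(( disks * size )) ;;
    mirror) echo "$size" ;;   # every device holds a full copy
    raidz1|raidz2|raidz3)
      parity=${layout#raidz}
      if [ "$disks" -le "$parity" ]; then
        echo "$layout needs more than $parity devices" >&2
        return 1
      fi
      echo $(( (disks - parity) * size )) ;;
    *) echo "unknown layout: $layout" >&2; return 1 ;;
  esac
}
```

Assuming 50GB disks, usable_gb raidz1 4 50 prints 150 and usable_gb raidz2 4 50 prints 100: Raid-Z2 gives up one more disk's worth of space in exchange for surviving a second device failure.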

Destroy the pool so we can go through another example in the next section:

```
zpool destroy third
```

As previously mentioned, log and cache vdevs are useful only if the physical devices backing them are faster than those used for storing data. For example, you could use hard disks in a Raid-Z2 array and add one SSD as a log vdev and another SSD as a cache vdev to significantly increase the performance of your storage pool. This type of structure helps reduce costs, especially when you need enormous amounts of storage. But if cost is not an issue, or you don't need to store very large amounts of data, it's simpler to build your pool exclusively from faster storage devices; such an array will perform slightly better than a hybrid hard-disk + SSD array. To simplify:

Create a pool:

```
zpool create -f fourth vdb vdc
```

To add a log vdev:

```
zpool add -f fourth log vdd
```

And to add a cache vdev:

```
zpool add -f fourth cache vde
```

The log device usually requires very little space; a few GB are enough. Sizing the cache comes down to the question "What portion of my data will be accessed very often?". For example, you may have a fast-growing 100GB database in which usually only the most recent 20GB are accessed frequently, while the rest is historical data that almost nobody reads anymore. In this case, you would use an SSD cache device of around 20GB.
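The sizing rule above is simple arithmetic, and once the cache is in use you can check how often it actually serves reads. This is a sketch with hypothetical function names; it assumes ZFS on Linux's ARC statistics file, /proc/spl/kstat/zfs/arcstats, with its name/type/data columns and the l2_hits and l2_misses counters.

```shell
#!/usr/bin/env bash
# Hypothetical helper: cache size (GB) needed to hold the frequently accessed
# part of DATA_GB, given that part as a percentage (HOT_PCT). Rounds down.
# Usage: cache_size_gb DATA_GB HOT_PCT
cache_size_gb() {
  echo $(( $1 * $2 / 100 ))
}

# Hypothetical helper: L2ARC hit ratio from an arcstats-style file, whose
# lines look like "l2_hits  4  12345" (name, type, value).
l2arc_hit_ratio() {
  awk '
    $1 == "l2_hits"   { hits = $3 }
    $1 == "l2_misses" { misses = $3 }
    END {
      if (hits + misses == 0) { print "no L2ARC activity yet"; exit }
      printf "L2ARC hit ratio: %.1f%%\n", 100 * hits / (hits + misses)
    }
  ' "${1:-/proc/spl/kstat/zfs/arcstats}"
}
```

cache_size_gb 100 20 gives the 20GB of the database example. If l2arc_hit_ratio stays low after the workload has been running for a while, the cache may not be holding the data you read most often.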

zpool status will show the structure of our pool with nicely formatted text that makes it easy to see how everything is connected:

```
root@ubuntu:~# zpool status
  pool: fourth
 state: ONLINE
  scan: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        fourth      ONLINE       0     0     0
          vdb       ONLINE       0     0     0
          vdc       ONLINE       0     0     0
        logs
          vdd       ONLINE       0     0     0
        cache
          vde       ONLINE       0     0     0

errors: No known data errors
```

In the next tutorial, Use Your Storage Space More Effectively with ZFS - Exploring Datasets, we will learn how to make our storage pools useful by utilizing datasets such as filesystems, snapshots, clones and volumes.
