By Jian Zhang

Based on the thinking of data time travel, this article leads to the thinking of three kinds of data warehouse forms and two kinds of data warehouse architecture. Combined with the application of data lake in Flink and the thinking of data lake metadata types, this article explores the practice of stream-batch integrated Flink SQL based on data lakes and designs and explores the expression consistency, result consistency, separation of stream and batch tasks, and mixed scheduling dependencies of SQL. We welcome everyone to share more specific practices and build a new data practice method together.

Large-scale data processing emerged from the development of the Hadoop ecosystem, and the key lies in the development of distributed storage and distributed computing, which has created nearly a hundred ecological technologies related to big data today. The data warehouse theory and data warehouse modeling theory have developed rapidly based on the big data technology system, in which the standardized construction of offline data warehouses has been widely used. The essence of data is a representation of behavior, and the core of the business's need for data is the explorable and observable behavior. Based on this, we need to make it clear whether the big data technology fully meets the certainty of business requirements for data in the time dimension. First, let's look at the time travel of the data.

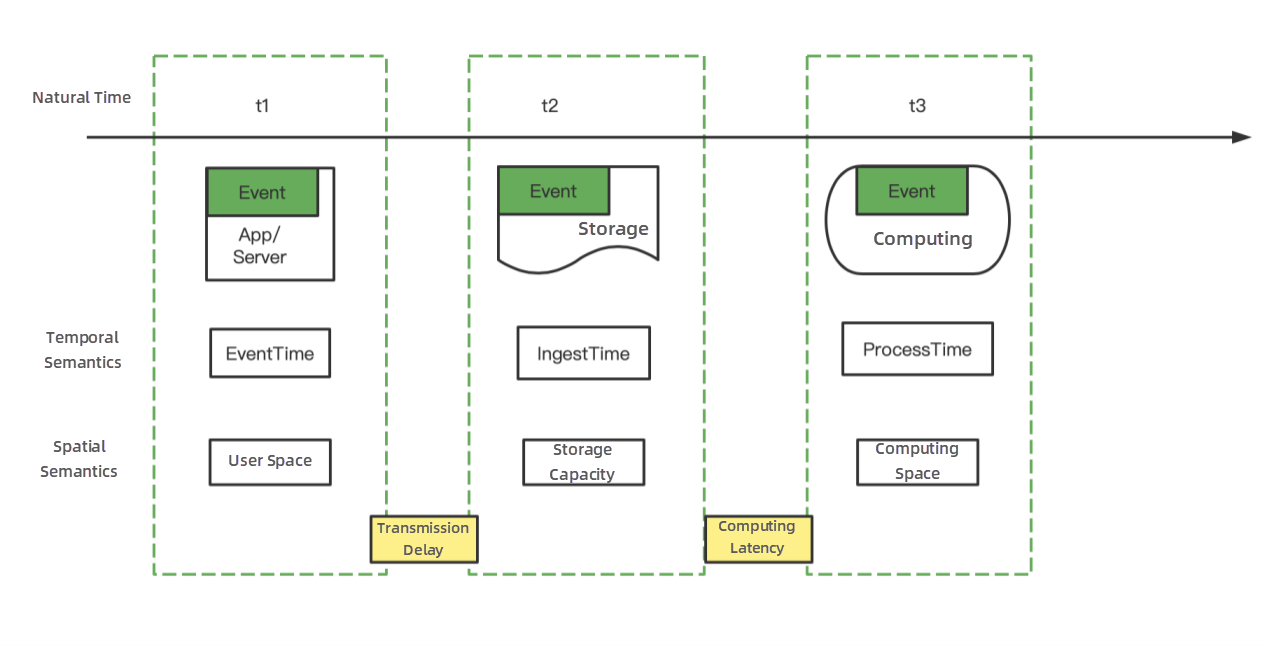

Expected Business Data: Time data in the user space, t1 time data, details or statistics of the user's natural time point or natural time period

Transmission Delay: The delay caused by the app user sending data to the gateway or log service system or the Server log landing on the file system. An event enters the storage space, which indicates the data is deterministic and observable. In most cases, the latency is low. However, in some cases, the app log is generated, but it has not been sent due to network and other problems, or the Server is down, resulting in delayed sending or final loss. Generally speaking, the transmission delay is an uncontrollable delay, and there is no good technical solution to solve it for the time being.

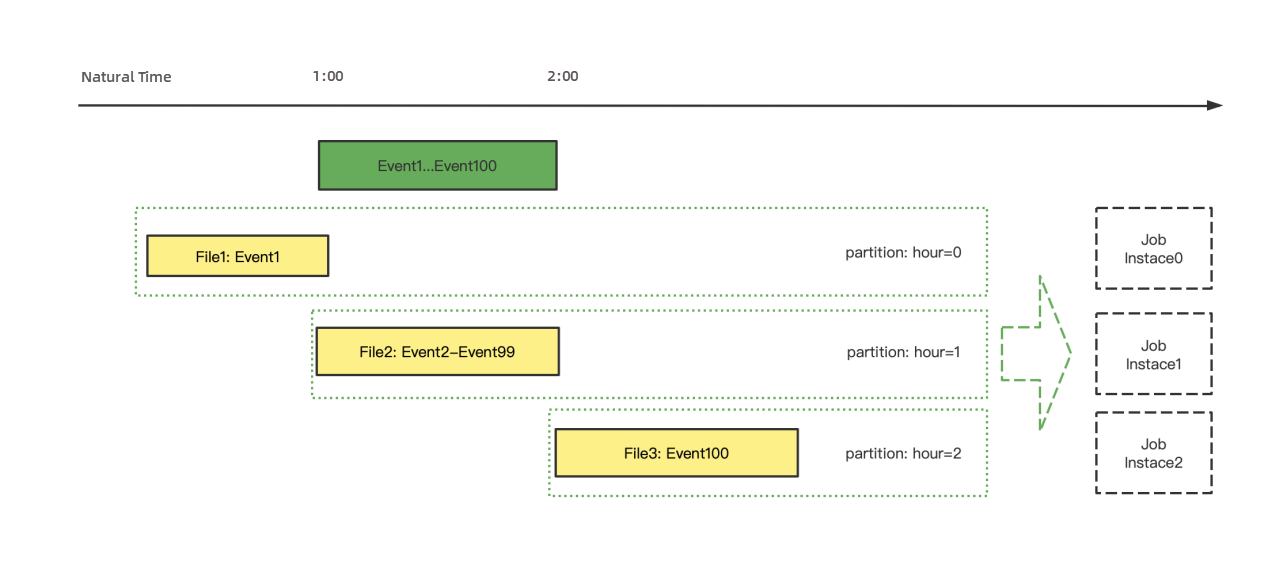

Storage Space: Data is stored in actual storage, offline data warehouses are based on specific distributed file systems, real-time data warehouses are based on Kafka, and quasi-real-time data warehouses are based on the data lake. Here, we can look at offline data warehouses abstractly. Events are hosted in a distributed file system. For example, an hourly partition is essentially a collection of files generated in natural time, and the time precision degrades to the hour level.

Computing Latency: It is the time difference between when data enters the storage space and when data enters the computing space, which is t3 minus t2. In real-time data warehouses, computing latency is ProcessTime minus IngestTime. In offline data warehouses, computing latency is the time difference between the scheduling generation instance and data entering the storage space. Essentially, the computing latency of offline data warehouses is the same as real-time data warehouses. The computing latency varies based on different data warehouse systems. We divide the mainstream data warehouse systems into three types: second-level real-time data warehouse, minute-level quasi-real-time data warehouse, and hour-level offline data warehouse. It can be seen that the timeliness difference of data warehouses is degraded into the difference in computing latency because the transmission delay is uncontrollable.

Bounded and unbounded data are often mentioned in offline data warehouses and real-time data warehouses. It is considered that the data in offline data warehouses is bounded, and the message flow in real-time data warehouses is unbounded. Accuracy depends on the certainty of the data.

The certainty of offline data warehouses lies in the certainty and unmodifiability of the natural file generation time. The natural file generation in a certain hour is approximately equal to the certainty of the event time in the natural time. The counterexample is that we can see the data drift, and the event time will more or less fall into the natural file generation time of the last hour or the next hour. The certainty of offline data warehouses is the certainty of data IngestTime, which has natural file attributes and is easy to segment. When we say that the data calculated in the offline data warehouse is accurate, we default that the impact of transmission delay is small or that the standard of the data metric of the current hour is the natural formation time of the file.

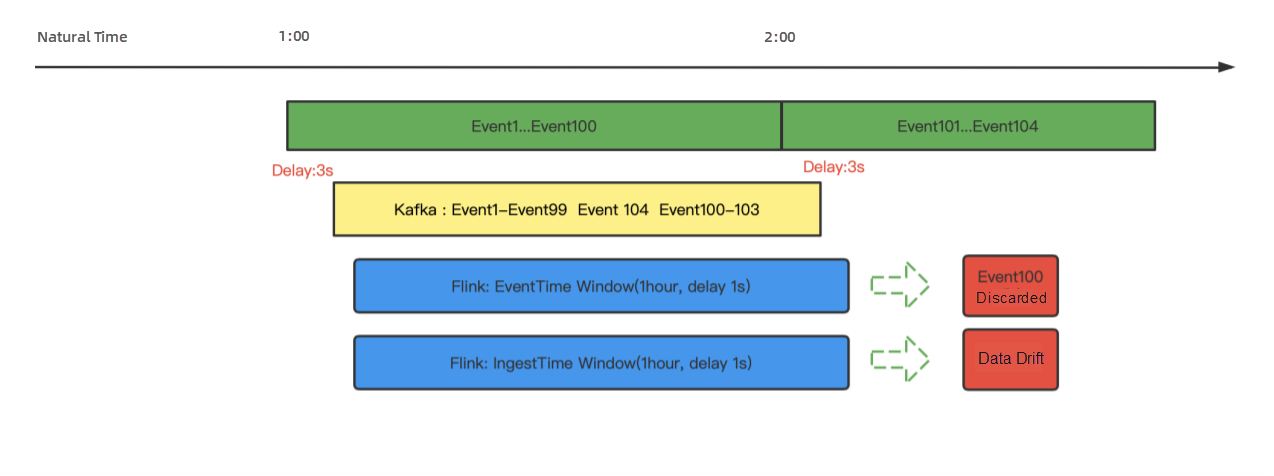

Uncertainty is often mentioned in real-time data warehouses, or the Lambda architecture is an alternative to the uncertainty of real-time data warehouses. What is the reason for this uncertainty? There are four reasons. The first one is ETL processing. A single piece of data is a window. The generation and destruction of a window are completed in an Event: y=window(data). The second is the time window based on EventTime. If you define the delay time, y=window(datas, datas.EventTime, delay), the third and fourth reasons are time window functions of IngestTime and ProcessTime, respectively. Compared with offline data warehouses, it can be seen that the time semantics of the time window based on IngestTime is the most consistent with offline data warehouses. The time window of offline data warehouses can be regarded as the hour window corresponding to the natural time when data enters the file. The certainty of the file carried by the data ensures the data certainty of the hour window: y=window(files).

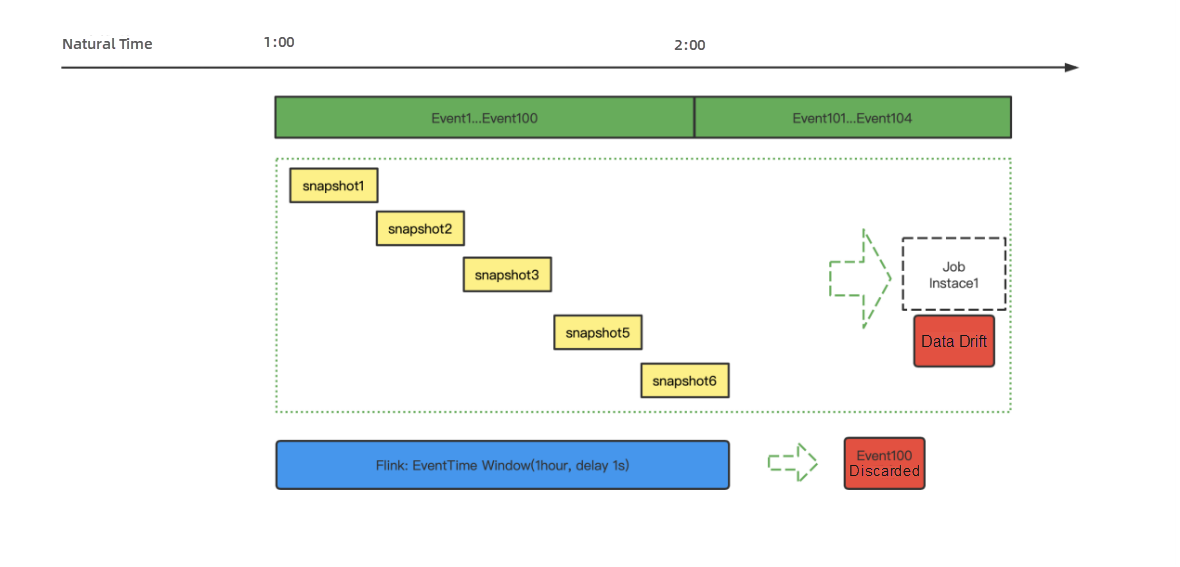

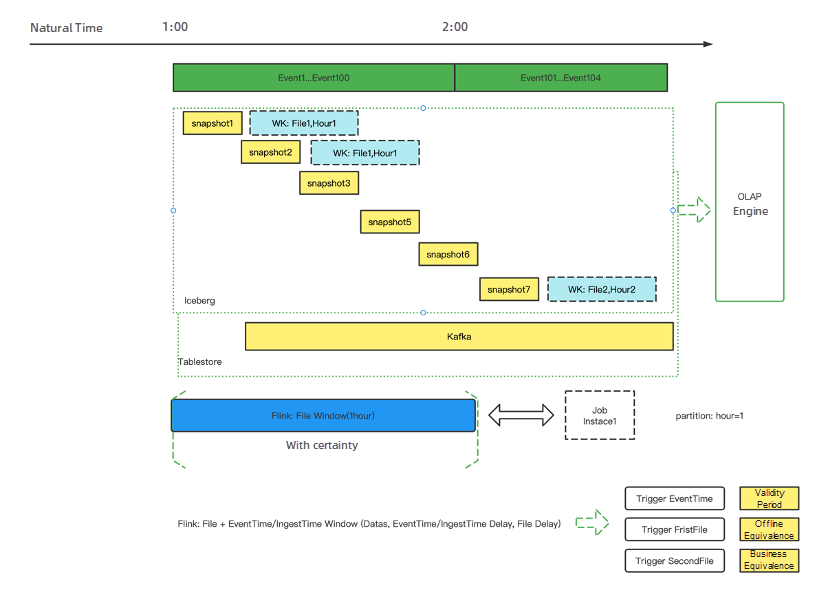

Compared with offline data warehouses, quasi-real-time data warehouses (such as the quasi-real-time data warehouses built based on the data lake of Iceberg) are used to subdivide hourly files into minute-level snapshot files, that is, y=window(snapshots). Compared with real-time data warehouses, the IngestTime of Kafka is currently inaccurate. Snapshot-based file division has a certain guarantee of accuracy, and at the same time, reduces the aging degree from seconds to minutes, which is tolerable in many scenarios.

In terms of the time dimension, one is that the essence of statistics is similar to the business requirements at a certain time. This essence is caused by transmission delay, but in practice, it does not affect the availability and statistical significance of data. The second is the division of different data warehouses, which is brought about by the development of storage and computing technology. The second is the certainty of offline data warehouses obscures the transmission delay, and the uncertainty of real-time data warehouses is a trade-off for transmission delay. The maximum delay time of EventTime is artificially limited, ensuring the timeliness of indicators, which is of practical significance.

When offline data warehouses were recently developed, there was only one data warehouse architecture, which was also based on the fact that distributed processing of big data was recently developed. With the development of real-time technology, we have more requirements on timeliness. However, when comparing with the data accuracy of offline data warehouses, different statistical windows inevitably lead to inconsistent indicator results at a certain time.

In order to address this non-strictly consistent problem, the Lambda architecture was proposed by Nathan Marz (Creator of Storm) and generated to ensure timeliness in real-time data warehouses and accuracy in offline data warehouses. In the end, the system collects the results of an event time window based on the three offline time windows and then backfeeds the real-time data warehouse as the EventTime window. The result of delayed data discarded due to timeliness is used to ensure the business requirements for the EventTime window. Alternatively, the file partitions generated by the offline IngestTime are considered to be approximately the time window of the EventTime by default. This kind of disadvantage brings about the maintenance of two sets of data routes, and everyone is always trying to solve it.

The proposal of Kappa architecture benefits from the efficiency improvement of real-time computing, but due to the shortcomings in batch processing technology, the promotion in production practice is limited. The Kappa architecture is for data processing based on real-time EventTime. The accuracy of the Kappa architecture is discounted because the IngestTime of Kafka is inaccurate, and the transmission delay of EventTime is uncontrollable for comparison with offline data warehouses. It is the most accurate time frame in business, but the feasibility is not good.

In recent years, the evolving OLAP query engine of the MPP architecture does not involve the calculation trade-off of the time window. The OLAP engine is essentially a technical means to accelerate queries based on ProcessTime and is an integral part of data warehouses. However, the uncontrollable transmission delay is not resolved, but the computing latency is pushed down to the query time, and the solution is to minimize the computing latency through fast queries, ensuring the flexibility of the query. The OLAP engine has a wide range of applications in self-service analysis and exploration.

From the perspective of the development of the data warehouse architecture, there are constant trade-offs around the certainty of results, technical feasibility, timeliness of data, and flexibility of queries. Each component is also developed based on actual needs.

Based on the thinking of three data warehouse systems and two architectures, each design takes one or more considerations into account. So, can we implement a mechanism to better meet the needs of data warehouse system construction? Judging from the current technological development, there is a certain possibility. The development of the architecture system is based on the technical foundation and continuous absorption of the advantages of components.

Excluding the division of real-time, quasi-real-time, and offline data warehouses, we can see the feasibility of data warehouse construction from a technical perspective. Then, we have to consider some important points and abandon some impossible implementations.

The first point is the certainty of the results, which is based on the development of offline data warehouses. The problem with uncertainty is the asymmetry of information, and the result of certainty can obscure the meaning of certain indicators.

The second point is the timeliness of the data. High timeliness is inevitably able to meet low timeliness and vice versa. In addition, the timeliness of the data is limited by the technological development of the basic components.

The third point is the convenience of development. The consideration behind timeliness is that convenience is based on application-level construction, and the difficulty is generally weaker than basic components. A good user experience can be achieved through continuous practice and optimization.

The fourth point is the flexibility and high response of queries. The basic design of OLAP ensures the query speed, so the technical architecture of OLAP can be reused or expanded.

Then, based on the four considerations above, the certainty problem can be solved first based on real-time data warehouses. This is a very important proposition to ensure the consistency of the calculation results with the offline data warehouse. In order to implement this, you can refer to the offline data warehouse, obscure EventTime, and IngestTime, use the start and end of the file as the basis for certainty, and calculate the intermediate data of the file in real-time to ensure timeliness. Then, based on Flink, you need to implement a Watermark mechanism based on natural file segmentation as the basis for computing window division.

After solving the certainty problem, you need to solve the cost of computing and the cost of use. The important thing here is the storage layer. Real-time data warehouses rely on Kafka. The development of Kafka does not have some important points about data warehouses in cost and query. Kafka cannot be built on various OLAP engines or computing engines. Here, the dependencies of quasi-real-time data warehouses (such as data lakes or Paimon) are time-effective at the minute level for data lakes. However, from a development point of view, it is a feasible solution. The data lake takes both stream computing and batch computing into account. In the future, it is possible that the OLAP engine can achieve query efficiency similar to the MPP architecture on the data lake. For example, data redundancy can be used in the short term to convert a copy of data in the data lake format to the corresponding OLAP engine to accelerate queries.

The third aspect is the management and dependency mechanism of stream computing, which is borrowed from the management mode of offline data warehouses. It requires a complete set of data dependency management mechanisms and task fault-tolerant and rollback mechanisms. Real-time data warehouses are generally managed based on a single task, and offline data warehouses are managed based on task flows. Therefore, the development of real-time data warehouses must implement task flow management to cover the entire development process.

In order to implement a statistical data warehouse architecture, the development work required is listed below:

First, focus on the development of storage layers (such as data lakes), which are better adapted to stream and batch engines but also highly adaptable to OLAP query engines. Second, introduce a scheduling dependency management mechanism and fault-tolerant and rollback mechanisms similar to offline data warehouses in real-time data warehouses or quasi-real-time data warehouses or make offline scheduling compatible with stream task dependency scheduling to achieve task flow-level management and stream-batch integrated data warehouse implementation. Third, focus on developing batch processing capabilities of Flink at the engine layer.

The final task running mode includes three types: real-time mode, offline mode, and business mode, which correspond to different data accuracy levels. You can also choose one or two as the operating mode.

Simple abstractions of offline scheduling and real-time tasks for data warehouse tasks:

Data Source=>Synchronization Task/Real-time Task=>

stg_table(partition=hour) =>Computing Tasks (insert overwrite partition=hour)=>

dwd_table(partition=hour)=>Computing Tasks (insert overwrite partition=hour)=>

dws_table(partition=hour)=>Synchronization Tasks=>OLAP Acceleration=>Data Service

If the storage layer is based on a data lake (take Paimon as an example):

The version information of the table generated by offline scheduling is of the commit_kind: insert overwrite type. At the same time, the driver of offline tasks is based on the driver of scheduling dependencies, and the scheduling is one by one.

If it is based on stream computing, for example, snapshots are generated at the minute level, the following changes occur:

Data Source=>Synchronization Task/Real-time Task=>

stg_table(version=snapshot_id) =>Computing Tasks (insert into version=snapshot_id) =>

dwd_table(version=snapshot_id)=>Computing Tasks (insert into version=snapshot_id)=>

dws_table(version=snapshot_id)=>Synchronization Tasks=>OLAP Acceleration=>Data Service

Then multiple tasks are started, and the tasks are continuously running. They are of the commit_kind: insert into type.

The following issues need to be resolved to implement a quasi-real-time data warehouse that integrates streaming and batching processing:

1. Flink batch computing capacity should be continuously strengthened.

According to the release of Flink 1.16/1.17, the batch processing capability has been significantly improved. At the same time, the community is continuously strengthening the batch processing capability and compatibility with Hive.

2. How can we use the same Flink SQL for both batch task scheduling and stream task running?

Calculate the word count of the two tables: dwd_partition_word_count and dws_partition_word_count.

CREATE TABLE tablestore.tablestore_test.dwd_partition_word_count (

logdate String,

user_id bigint

) PARTITIONED BY (logdate)

WITH (

'bucket' = '3'

);

CREATE TABLE tablestore.tablestore_test.dws_partition_word_count (

logdate String,

user_id bigint,

cnt BIGINT,

PRIMARY KEY (logdate,user_id) NOT ENFORCED

) PARTITIONED BY (logdate)

WITH (

'bucket' = '3'

);Flink SQL for batch tasks:

insert overwrite tablestore.tablestore_test.dws_partition_word_count PARTITION(logdate=${start_date})

select user_id,count(1) as cnt from tablestore.tablestore_test.dwd_partition_word_count where logdate=${start_date} group by user_id;

-- or

insert overwrite tablestore.tablestore_test.dws_partition_word_count

select logdate, user_id,count(1) as cnt from tablestore.tablestore_test.dwd_partition_word_count where logdate=${start_date} group by logdate,user_id;Flink SQL for stream tasks:

insert into tablestore.tablestore_test.dws_partition_word_count

select logdate,user_id,count(1) as cnt from tablestore.tablestore_test.dwd_partition_word_count group by logdate,user_id;How can we use a Flink SQL to realize the difference under the stream model and batch model?

The difference is the problem of Insert into and Insert overwrite. When submitting the running mode, if it is a batch task, Insert overwrite operation is performed, and if it is a stream task, Insert into operation is performed. This is not technically difficult.

The difference is the data range of the WHERE condition. In abstract terms, the time range of stream tasks and batch tasks can be unified in expression.

insert overwrite tablestore.tablestore_test.dws_partition_word_count

select logdate, user_id,count(1) as cnt from tablestore.tablestore_test.dwd_partition_word_count where logdate>=${start_date} and logdate<=${end_date} group by logdate,user_id;For example, if you run the data on April 22, the batch SQL statement executed is:

insert overwrite tablestore.tablestore_test.dws_partition_word_count

select logdate, user_id,count(1) as cnt from tablestore.tablestore_test.dwd_partition_word_count where logdate>='20230422' and logdate<='20230422' group by logdate,user_id;If you run the data in stream mode, the following SQL statement can be executed:

select logdate, user_id,count(1) as cnt from tablestore.tablestore_test.dwd_partition_word_count where logdate>='19700101' and logdate<='99990101' group by logdate,user_id;When executed by the platform, the insert overwrite/into and time range can be automatically converted, and parameters can be automatically input.

3. How can we separate the scheduling of batch tasks and the calculation of stream tasks?

After the task is developed, the logic is verified by scheduling tasks in batch mode. Then, you can use the stream mode to run tasks continuously. One thing is what to do with computational logic changes or historical data repair, and the other thing is whether it is possible to support stream running and batch running at the same time. These two problems are essentially the same problem. If the computational logic changes, you can modify the stream-batch integrated SQL logic and then restart the stream task to apply the new computational logic. At the same time, the stream-batch integrated SQL rolls back the historical data on the scheduling and rewrites the data.

When you roll back historical data, does the stream task read the historical data for computing?

This problem is mainly solved by the data lake version commit kind mentioned above. Only insert overwrite is applied to batch tasks, and insert into is applied to stream tasks. If the system detects that the stream task in the insert overwrite version is committed, it skips the task and does not read or process the data. Only data of stream tasks that use the insert into statement is processed. The execution of the batch task does not affect the stream task.

Currently, in data lake streaming reading, you only need to add a switch option to implement it.

4. How can we implement primary key writing by the Insert into of a stream task?

If the Insert into statement of a stream task cannot implement primary key writing, the duplication of partition data cannot be resolved. In this case, you can only perform both stream and batch processing to resolve the duplication of data. In other words, if the downstream is the primary key idempotent, the insert into and insert overwrite statements have equivalent semantics.

This can be achieved using a primary key table in the data lake (such as the primary key table of Paimon). The primary key table of Paimon is initially available for production.

5. Scheduling dependencies of stream tasks and batch tasks

If a stream task is followed by batch task scheduling, how can we implement scheduling dependencies?

A more elegant implementation is that when the stream task is written to the downstream table, if the watermark of the data is written to the attribute of the downstream table, and the latest data is five minutes later than the current hour, the downstream scheduling task of the current hour can determine whether the scheduling instance of the batch task should be pulled up by checking the attribute time of the table. Alternatively, you can check dependencies based on the running latency of the stream task.

Based on the implementation and solution above, we can realize the stream-batch integrated Flink SQL running in batch mode and stream mode. If the scheduling dependency is relatively perfect, we can realize the stream-batch mixed running. Simultaneous complement or double running does not affect the stability of stream tasks.

In actual development, you can use batch tasks to do development and verification, use the stream mode to pull up, and the data output is basically minute-level. Problems can be corrected with batch tasks.

Generic Log-based Incremental Checkpoint - Performance Evaluation & Analytics

206 posts | 54 followers

FollowApache Flink Community China - September 27, 2020

Apache Flink Community China - July 28, 2020

Alibaba Cloud MaxCompute - January 21, 2022

Apache Flink Community China - February 19, 2021

Apache Flink Community China - April 13, 2022

Alibaba EMR - March 18, 2022

206 posts | 54 followers

Follow Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn MoreMore Posts by Apache Flink Community

Dikky Ryan Pratama July 12, 2023 at 3:39 am

Awesome!