By Xue Shuai

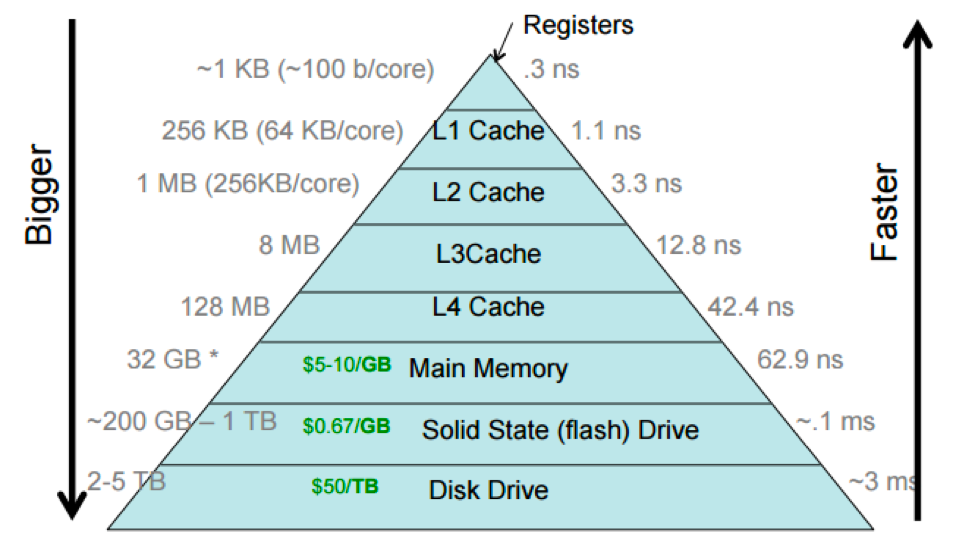

In computer architecture, the memory hierarchy separates computer storage into different levels based on response time. Faster memory costs more per byte, so capacity is inversely related to speed: the L1 cache is closest to the CPU and has the lowest access latency, but it also has the smallest capacity. This article describes how to measure the access latency at each level of the memory hierarchy and explains the mechanisms behind the results.

Source: https://cs.brown.edu/courses/csci1310/2020/assign/labs/lab4.html

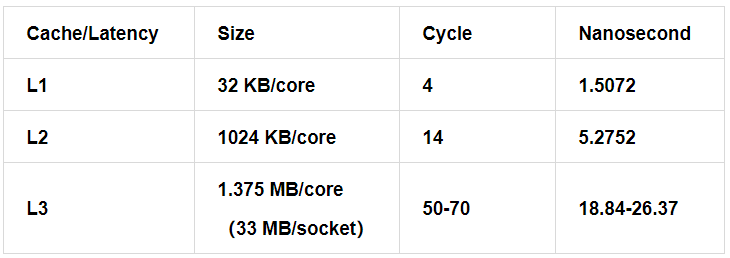

WikiChip [1] lists the cache latencies of different CPU models, generally in cycles. A cycle count can be converted to nanoseconds (ns) by multiplying it by the clock period. Taking the Intel Skylake processor as an example, the reference access latency at each level of the CPU cache hierarchy is shown below:

CPU Frequency: 2,654 MHz (0.3768 ns/clock)

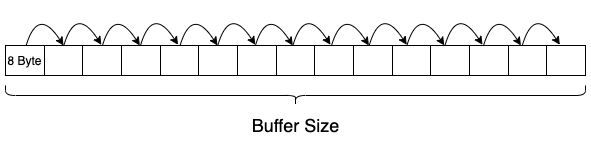

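The straightforward design is to allocate a buffer and iterate over it, using different buffer sizes to target different levels of the memory hierarchy. Once all the data has been loaded into the cache, the time spent iterating over the buffer reflects the cache access latency.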

The code is implemented in mem-lat.c, shown below:

```c
#include <sys/types.h>
#include <stdlib.h>
#include <stdio.h>
#include <sys/mman.h>
#include <sys/time.h>
#include <unistd.h>

/* Each ONE follows one link of the pointer chain: p = *p */
#define ONE p = (char **)*p;
#define FIVE ONE ONE ONE ONE ONE
#define TEN FIVE FIVE
#define FIFTY TEN TEN TEN TEN TEN
#define HUNDRED FIFTY FIFTY

static void usage()
{
    printf("Usage: ./mem-lat -b xxx -n xxx -s xxx\n");
    printf(" -b buffer size in KB\n");
    printf(" -n number of read\n\n");
    printf(" -s stride skipped before the next access\n\n");
    printf("Please don't use non-decimal based number\n");
}

int main(int argc, char* argv[])
{
    unsigned long i, j, size, tmp;
    unsigned long memsize = 0x800000; /* 1/4 LLC size of skylake, 1/5 of broadwell */
    unsigned long count = 1048576;    /* memsize / 64 * 8 */
    unsigned int stride = 64;         /* skipped amount of memory before the next access */
    unsigned long sec, usec;
    struct timeval tv1, tv2;
    struct timezone tz;
    unsigned int *indices;

    while (argc-- > 0) {
        if ((*argv)[0] == '-') {      /* look at first char of next */
            switch ((*argv)[1]) {     /* look at second */
            case 'b':
                argv++;
                argc--;
                memsize = atoi(*argv) * 1024;
                break;
            case 'n':
                argv++;
                argc--;
                count = atoi(*argv);
                break;
            case 's':
                argv++;
                argc--;
                stride = atoi(*argv);
                break;
            default:
                usage();
                exit(1);
                break;
            }
        }
        argv++;
    }

    char* mem = mmap(NULL, memsize, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANON, -1, 0);
    if (mem == MAP_FAILED) {
        perror("mmap");
        return 1;
    }

    // trick 3: build the index array that defines the pointer-chasing order
    size = memsize / stride;
    indices = malloc(size * sizeof(*indices));
    for (i = 0; i < size; i++)
        indices[i] = i;

    // trick 2: fill mem with pointer references (each element points to the next one)
    for (i = 0; i < size - 1; i++)
        *(char **)&mem[indices[i]*stride] = (char*)&mem[indices[i+1]*stride];
    *(char **)&mem[indices[size-1]*stride] = (char*)&mem[indices[0]*stride];

    char **p = (char **) mem;
    tmp = count / 100;

    gettimeofday (&tv1, &tz);
    for (i = 0; i < tmp; ++i) {
        HUNDRED; // trick 1
    }
    gettimeofday (&tv2, &tz);

    if (tv2.tv_usec < tv1.tv_usec) {
        usec = 1000000 + tv2.tv_usec - tv1.tv_usec;
        sec = tv2.tv_sec - tv1.tv_sec - 1;
    } else {
        usec = tv2.tv_usec - tv1.tv_usec;
        sec = tv2.tv_sec - tv1.tv_sec;
    }

    /* latency = total time in ns / number of dereferences (tmp * 100) */
    printf("Buffer size: %lu KB, stride %u, time %lu.%06lu s, latency %.2f ns\n",
           memsize/1024, stride, sec, usec, (sec * 1000000 + usec) * 1000.0 / (tmp * 100));

    munmap(mem, memsize);
    free(indices);
    return 0;
}
```

Here are three tricks:

1. Trick 1: the HUNDRED macro unrolls 100 pointer dereferences per loop iteration, so the overhead of the loop counter and branch is spread over 100 reads.
2. Trick 2: the buffer is filled with pointers, each pointing to the element one stride later, and the last one points back to the first. Because the address of every read depends on the result of the previous read (pointer chasing), the reads cannot overlap, and the loop measures latency rather than throughput.
3. Trick 3: the order of the chain is defined by the indices array, which makes it easy to change the access pattern later, for example by shuffling it.

Test script:

```shell
#set -x
work=./mem-lat
buffer_size=1
stride=8
for i in `seq 1 15`; do
    taskset -ac 0 $work -b $buffer_size -s $stride
    buffer_size=$(($buffer_size*2))
done
```

The performance test results are as follows:

```
//L1
Buffer size: 1 KB, stride 8, time 0.003921 s, latency 3.74 ns
Buffer size: 2 KB, stride 8, time 0.003928 s, latency 3.75 ns
Buffer size: 4 KB, stride 8, time 0.003935 s, latency 3.75 ns
Buffer size: 8 KB, stride 8, time 0.003926 s, latency 3.74 ns
Buffer size: 16 KB, stride 8, time 0.003942 s, latency 3.76 ns
Buffer size: 32 KB, stride 8, time 0.003963 s, latency 3.78 ns
//L2
Buffer size: 64 KB, stride 8, time 0.004043 s, latency 3.86 ns
Buffer size: 128 KB, stride 8, time 0.004054 s, latency 3.87 ns
Buffer size: 256 KB, stride 8, time 0.004051 s, latency 3.86 ns
Buffer size: 512 KB, stride 8, time 0.004049 s, latency 3.86 ns
Buffer size: 1024 KB, stride 8, time 0.004110 s, latency 3.92 ns
//L3
Buffer size: 2048 KB, stride 8, time 0.004126 s, latency 3.94 ns
Buffer size: 4096 KB, stride 8, time 0.004161 s, latency 3.97 ns
Buffer size: 8192 KB, stride 8, time 0.004313 s, latency 4.11 ns
Buffer size: 16384 KB, stride 8, time 0.004272 s, latency 4.07 ns
```

As mentioned, a buffer is allocated and iterated over, and different buffer sizes are used to target different levels of the memory hierarchy. A `char *` pointer is 8 bytes on a 64-bit system, so the smallest stride is 8. The time column is the total elapsed time of the measurement loop, and the latency column is the average latency per read access.

Compared with the reference values above, the results are not as expected. The L1 latency is somewhat higher than expected, while the L2 and L3 latencies barely differ from L1 and are nowhere near the order of magnitude we expected.

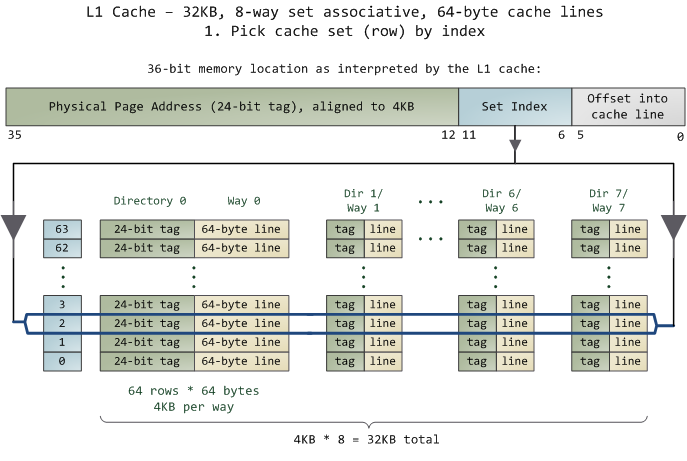

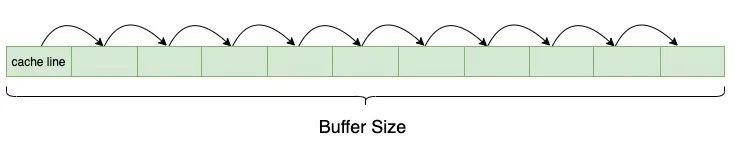

In modern processors, the cache is organized in units of cache lines: data is read from and written to the cache one whole line at a time. The most common cache line size is 64 bytes.

If we read the 128-KB buffer sequentially at a granularity of 8 bytes, the first 8-byte read in each 64-byte-aligned block brings the whole cache line into the L1 cache. The remaining seven reads in that line (56 bytes) then hit L1, so the measured latency is much lower than expected.

The cache line size of the Skylake processor is 64 bytes, so we set the stride to 64 and run the test again.

The test results are listed below:

```
//L1
Buffer size: 1 KB, stride 64, time 0.003933 s, latency 3.75 ns
Buffer size: 2 KB, stride 64, time 0.003930 s, latency 3.75 ns
Buffer size: 4 KB, stride 64, time 0.003925 s, latency 3.74 ns
Buffer size: 8 KB, stride 64, time 0.003931 s, latency 3.75 ns
Buffer size: 16 KB, stride 64, time 0.003935 s, latency 3.75 ns
Buffer size: 32 KB, stride 64, time 0.004115 s, latency 3.92 ns
//L2
Buffer size: 64 KB, stride 64, time 0.007423 s, latency 7.08 ns
Buffer size: 128 KB, stride 64, time 0.007414 s, latency 7.07 ns
Buffer size: 256 KB, stride 64, time 0.007437 s, latency 7.09 ns
Buffer size: 512 KB, stride 64, time 0.007429 s, latency 7.09 ns
Buffer size: 1024 KB, stride 64, time 0.007650 s, latency 7.30 ns
Buffer size: 2048 KB, stride 64, time 0.007670 s, latency 7.32 ns
//L3
Buffer size: 4096 KB, stride 64, time 0.007695 s, latency 7.34 ns
Buffer size: 8192 KB, stride 64, time 0.007786 s, latency 7.43 ns
Buffer size: 16384 KB, stride 64, time 0.008172 s, latency 7.79 ns
```

Although the L2 and L3 access latencies increase compared with the previous results, they are still not as expected.

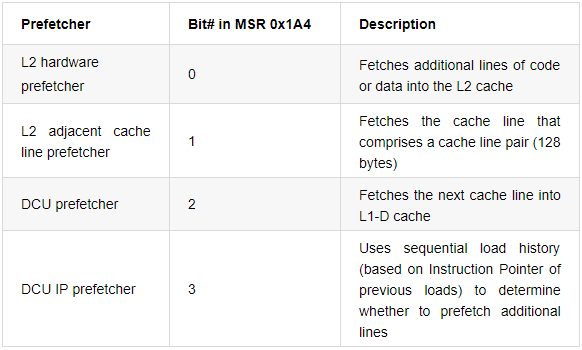

Modern processors usually support prefetching: data that is likely to be used soon is loaded into the cache in advance. This reduces the time the core stalls while waiting for data from memory, and it improves both the cache hit rate and the running efficiency of the software.

Intel processors support four types of hardware prefetchers [2], which can be enabled and disabled through a model-specific register (MSR).

Here, we set the stride to 128 and 256 to avoid hardware prefetching. With these strides, the measured L3 access latency increases significantly:

```
// stride 128
Buffer size: 512 KB, stride 128, time 0.007597 s, latency 7.25 ns
Buffer size: 1024 KB, stride 128, time 0.009169 s, latency 8.74 ns
Buffer size: 2048 KB, stride 128, time 0.010008 s, latency 9.55 ns
Buffer size: 4096 KB, stride 128, time 0.010008 s, latency 9.55 ns
Buffer size: 8192 KB, stride 128, time 0.010366 s, latency 9.89 ns
Buffer size: 16384 KB, stride 128, time 0.012031 s, latency 11.47 ns

// stride 256
Buffer size: 1 KB, stride 256, time 0.003927 s, latency 3.75 ns
Buffer size: 2 KB, stride 256, time 0.003924 s, latency 3.74 ns
Buffer size: 4 KB, stride 256, time 0.003928 s, latency 3.75 ns
Buffer size: 8 KB, stride 256, time 0.003923 s, latency 3.74 ns
Buffer size: 16 KB, stride 256, time 0.003930 s, latency 3.75 ns
Buffer size: 32 KB, stride 256, time 0.003929 s, latency 3.75 ns
Buffer size: 64 KB, stride 256, time 0.007534 s, latency 7.19 ns
Buffer size: 128 KB, stride 256, time 0.007462 s, latency 7.12 ns
Buffer size: 256 KB, stride 256, time 0.007479 s, latency 7.13 ns
Buffer size: 512 KB, stride 256, time 0.007698 s, latency 7.34 ns
Buffer size: 1024 KB, stride 256, time 0.012654 s, latency 12.07 ns
Buffer size: 2048 KB, stride 256, time 0.025210 s, latency 24.04 ns
Buffer size: 4096 KB, stride 256, time 0.025466 s, latency 24.29 ns
Buffer size: 8192 KB, stride 256, time 0.025840 s, latency 24.64 ns
Buffer size: 16384 KB, stride 256, time 0.027442 s, latency 26.17 ns
```

The access latency in L3 is now mostly in line with expectations, but the L1 and L2 latencies are still higher than expected.

When testing random access latency, a more general approach is to randomize the order in which the buffer is traversed. The code below shuffles the indices array (trick 3) before the pointer chain is built from it (trick 2):

```c
// shuffle indices
for (i = 0; i < size; i++) {
    j = i + rand() % (size - i);
    if (i != j) {
        tmp = indices[i];
        indices[i] = indices[j];
        indices[j] = tmp;
    }
}
```

After applying this approach with a stride of 64, the test results are mostly the same as when the stride is set to 256:

```
Buffer size: 1 KB, stride 64, time 0.003942 s, latency 3.76 ns
Buffer size: 2 KB, stride 64, time 0.003925 s, latency 3.74 ns
Buffer size: 4 KB, stride 64, time 0.003928 s, latency 3.75 ns
Buffer size: 8 KB, stride 64, time 0.003931 s, latency 3.75 ns
Buffer size: 16 KB, stride 64, time 0.003932 s, latency 3.75 ns
Buffer size: 32 KB, stride 64, time 0.004276 s, latency 4.08 ns
Buffer size: 64 KB, stride 64, time 0.007465 s, latency 7.12 ns
Buffer size: 128 KB, stride 64, time 0.007470 s, latency 7.12 ns
Buffer size: 256 KB, stride 64, time 0.007521 s, latency 7.17 ns
Buffer size: 512 KB, stride 64, time 0.009340 s, latency 8.91 ns
Buffer size: 1024 KB, stride 64, time 0.015230 s, latency 14.53 ns
Buffer size: 2048 KB, stride 64, time 0.027567 s, latency 26.29 ns
Buffer size: 4096 KB, stride 64, time 0.027853 s, latency 26.56 ns
Buffer size: 8192 KB, stride 64, time 0.029945 s, latency 28.56 ns
Buffer size: 16384 KB, stride 64, time 0.034878 s, latency 33.26 ns
```

After solving the low-latency problem in L3, we continue to analyze why the L1 and L2 access latencies are still higher than expected. We can generate the assembly code from the executable to check whether the executed instructions are what we want.

```shell
objdump -D -S mem-lat > mem-lat.s
```

- `-D`: disassemble the contents of all sections.
- `-S`: intermix source code with disassembly (the program must be compiled by gcc with `-g` so that debugging information is generated).

The key part of the generated assembly file mem-lat.s is listed below:

char **p = (char **)mem;

400b3a: 48 8b 45 c8 mov -0x38(%rbp),%rax

400b3e: 48 89 45 d0 mov %rax,-0x30(%rbp) // push stack

//...

HUNDRED;

400b85: 48 8b 45 d0 mov -0x30(%rbp),%rax

400b89: 48 8b 00 mov (%rax),%rax

400b8c: 48 89 45 d0 mov %rax,-0x30(%rbp)

400b90: 48 8b 45 d0 mov -0x30(%rbp),%rax

400b94: 48 8b 00 mov (%rax),%rax char **p = (char **)mem;

400b3a: 48 8b 45 c8 mov -0x38(%rbp),%rax

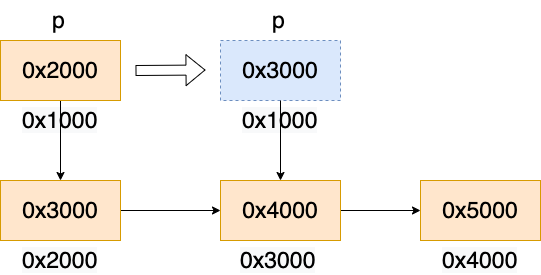

400b3e: 48 89 45 d0 mov %rax,-0x30(%rbp)The logic of cache access part:

HUNDRED; // p = (char **)*p

400b85: 48 8b 45 d0 mov -0x30(%rbp),%rax

400b89: 48 8b 00 mov (%rax),%rax

400b8c: 48 89 45 d0 mov %rax,-0x30(%rbp)rax register. The data type of variable p is char**, which means that p is a point of a char* variable. In other words, the value of p is an address). Let us take the following figure for example, the value of the variable p is 0x2000.rax register pointing to the variable to the rax register, which corresponds to the unary operation *p. In the following example, 0x2000 changes to 0x3000, and the value in the rax register is updated to 0x3000.rax register to the variable p. In the following figure, the value of the variable p is updated to 0x3000.

As the disassembly shows, what we expected to be a single load instruction is compiled into three instructions, including a reload of p from the stack and a store back to it, which inflates the measured cache access latency. A programming trick is to use the register keyword. In C, the register keyword asks the compiler to keep a variable in a register, avoiding the overhead of reading it from the stack each time.

Note: register is only a hint to the compiler that the variable will be heavily used and that it should be kept in a processor register if possible.

When we declare p, we add the register keyword:

```c
register char **p = (char **)mem;
```

The new test results are as follows:

```
// L1
Buffer size: 1 KB, stride 64, time 0.000030 s, latency 0.03 ns
Buffer size: 2 KB, stride 64, time 0.000029 s, latency 0.03 ns
Buffer size: 4 KB, stride 64, time 0.000030 s, latency 0.03 ns
Buffer size: 8 KB, stride 64, time 0.000030 s, latency 0.03 ns
Buffer size: 16 KB, stride 64, time 0.000030 s, latency 0.03 ns
Buffer size: 32 KB, stride 64, time 0.000030 s, latency 0.03 ns
// L2
Buffer size: 64 KB, stride 64, time 0.000030 s, latency 0.03 ns
Buffer size: 128 KB, stride 64, time 0.000030 s, latency 0.03 ns
Buffer size: 256 KB, stride 64, time 0.000029 s, latency 0.03 ns
Buffer size: 512 KB, stride 64, time 0.000030 s, latency 0.03 ns
Buffer size: 1024 KB, stride 64, time 0.000030 s, latency 0.03 ns
// L3
Buffer size: 2048 KB, stride 64, time 0.000030 s, latency 0.03 ns
Buffer size: 4096 KB, stride 64, time 0.000029 s, latency 0.03 ns
Buffer size: 8192 KB, stride 64, time 0.000030 s, latency 0.03 ns
Buffer size: 16384 KB, stride 64, time 0.000030 s, latency 0.03 ns
```

The access latency at every level is now 0.03 ns, far below 1 ns, which cannot be right. We are close to the answer. Let us think more.

Well, let's disassemble it again to see what went wrong. The relevant part of the disassembly is listed below:

```
    for (i = 0; i < tmp; ++i) {
  40155e: 48 c7 45 f8 00 00 00    movq $0x0,-0x8(%rbp)
  401565: 00
  401566: eb 05                   jmp 40156d <main+0x37e>
  401568: 48 83 45 f8 01          addq $0x1,-0x8(%rbp)
  40156d: 48 8b 45 f8             mov -0x8(%rbp),%rax
  401571: 48 3b 45 b0             cmp -0x50(%rbp),%rax
  401575: 72 f1                   jb 401568 <main+0x379>
        HUNDRED;
    }
    gettimeofday (&tv2, &tz);
  401577: 48 8d 95 78 ff ff ff    lea -0x88(%rbp),%rdx
  40157e: 48 8d 45 80             lea -0x80(%rbp),%rax
  401582: 48 89 d6                mov %rdx,%rsi
  401585: 48 89 c7                mov %rax,%rdi
  401588: e8 e3 fa ff ff          callq 401070 <gettimeofday@plt>
```

The HUNDRED macro does not generate any assembly code: the loop only increments and compares i. The statements involving p merely read data, and because the final value of p is never used, they have no observable effect, so the compiler removed them.

So, let us touch the pointer p after the loop to prevent the compiler from optimizing the loads away:

```c
register char **p = (char **) mem;
tmp = count / 100;

gettimeofday (&tv1, &tz);
for (i = 0; i < tmp; ++i) {
    HUNDRED;
}
gettimeofday (&tv2, &tz);

/* touch pointer p to prevent compiler optimization */
char **touch = p;
```

Disassemble and verify the code again:

```
        HUNDRED;
  401570: 48 8b 1b    mov (%rbx),%rbx
  401573: 48 8b 1b    mov (%rbx),%rbx
  401576: 48 8b 1b    mov (%rbx),%rbx
  401579: 48 8b 1b    mov (%rbx),%rbx
  40157c: 48 8b 1b    mov (%rbx),%rbx
```

Now each step of the HUNDRED macro compiles to a single `mov (%rbx),%rbx` instruction: p stays in the rbx register, and every step is exactly one dependent load. Cool, I cannot wait to test the result.

```
// L1
Buffer size: 1 KB, stride 64, time 0.001687 s, latency 1.61 ns
Buffer size: 2 KB, stride 64, time 0.001684 s, latency 1.61 ns
Buffer size: 4 KB, stride 64, time 0.001682 s, latency 1.60 ns
Buffer size: 8 KB, stride 64, time 0.001693 s, latency 1.61 ns
Buffer size: 16 KB, stride 64, time 0.001683 s, latency 1.61 ns
Buffer size: 32 KB, stride 64, time 0.001783 s, latency 1.70 ns
// L2
Buffer size: 64 KB, stride 64, time 0.005896 s, latency 5.62 ns
Buffer size: 128 KB, stride 64, time 0.005915 s, latency 5.64 ns
Buffer size: 256 KB, stride 64, time 0.005955 s, latency 5.68 ns
Buffer size: 512 KB, stride 64, time 0.007856 s, latency 7.49 ns
Buffer size: 1024 KB, stride 64, time 0.014929 s, latency 14.24 ns
// L3
Buffer size: 2048 KB, stride 64, time 0.026970 s, latency 25.72 ns
Buffer size: 4096 KB, stride 64, time 0.026968 s, latency 25.72 ns
Buffer size: 8192 KB, stride 64, time 0.028823 s, latency 27.49 ns
Buffer size: 16384 KB, stride 64, time 0.033325 s, latency 31.78 ns
```

The latency is 1.61 ns in L1 and 5.62 ns in L2. Fantastic, we made it.

The ideas and code in this article are borrowed from lmbench [3], and the mem-lat tool was developed by Shanpei Chen, a kernel expert at Alibaba. The impact of hardware TLB misses when shuffling the buffer pointers was not considered here. If readers are interested in this topic, I will discuss it in a subsequent article.

[1] https://en.wikichip.org/wiki/intel/microarchitectures/skylake_(server)

[3] L. W. McVoy and C. Staelin, "lmbench: Portable Tools for Performance Analysis," USENIX Annual Technical Conference, 1996, pp. 279-294.
