By Mintai

The Tair team of Alibaba Cloud's intelligent database is behind the development of a proprietary distributed key-value store (KVS) system, which covers almost all of Alibaba's core businesses, including Taobao, Tmall, Alimama, Cainiao, DingTalk, Youku, and Amap. For more than a decade, the Tair team has been providing Alibaba with highly reliable, high-performance, and low-cost data storage and access services.

Recently, the Tair team's paper "HotRing: A Hotspot-Aware In-Memory Key-Value Store" was accepted by the Research Track of FAST '20, the USENIX Conference on File and Storage Technologies (FAST). FAST is a top CCF Class A conference in the storage field, with an acceptance rate of about 16% in 2020.

Link to Paper: https://www.usenix.org/conference/fast20/presentation/chen-jiqiang

HotRing is the innovative in-memory KVS engine designed by the Tair team. The engine throughput can reach 600 million operations per second, which is 2.58 times that of the fastest existing KVS system. HotRing significantly improves the KVS engine's capability to carry hot spot access traffic. This is especially critical for the stability and cost control of the KVS system.

To help you easily understand this paper, this article describes how the Tair team solves the hot spot problems that confront the database architecture during peak traffic at 00:00 on the day of Alibaba's 2019 Double 11 Shopping Festival. This article also introduces the innovative engine, HotRing, developed by the Tair team.

In 2019, the Tmall Double 11 Shopping Festival again set a world record, with orders peaking at 544,000 per second at 00:00. The massive order volume had a significant impact on Alibaba's databases because they had to process these orders while providing transaction assurance.

The reality was even tougher. The business logic accessed the backend dozens of times to process a single order, and many customers simply browsed item after item without placing orders. As a result, about 1 billion access requests were sent per second during the traffic peak at 00:00.

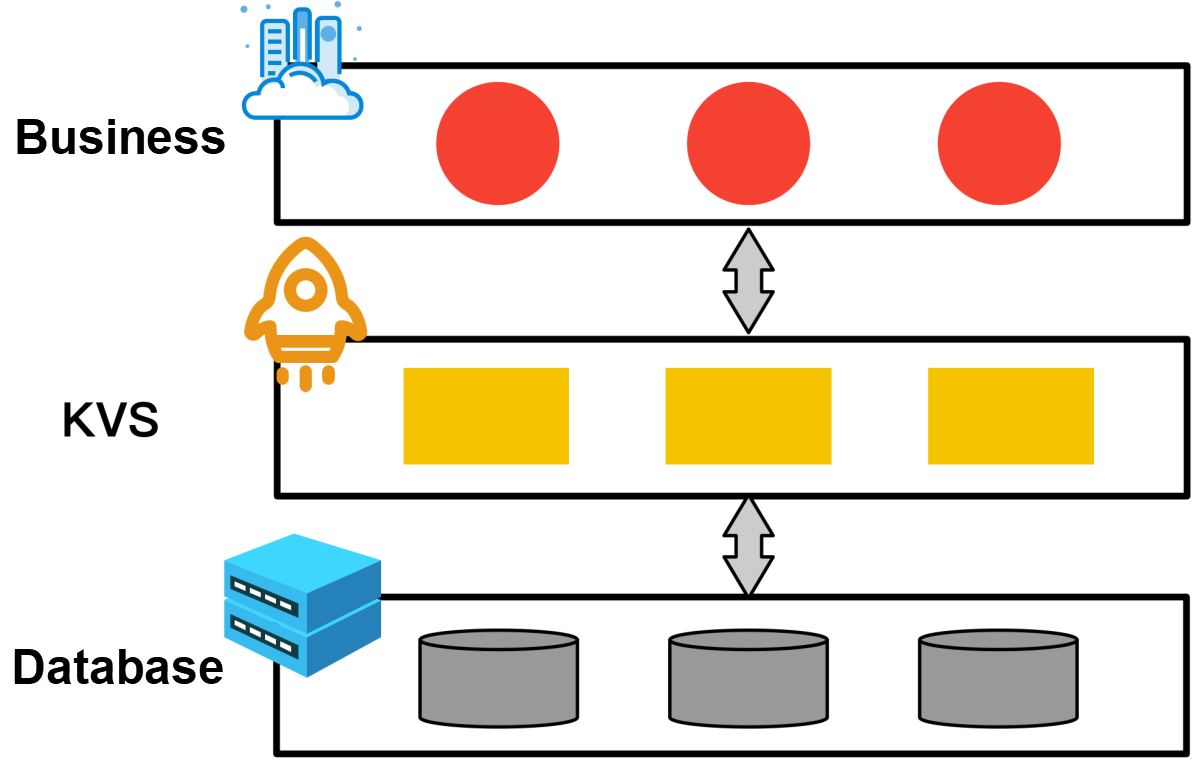

As a highly concurrent distributed KVS system, Tair assumed an important role in processing the traffic peak. As shown in the following logic diagram, Tair works as a distributed database cache system to cache a large amount of hot spot data, such as product information, inventory information, and risk control information. This relieves the database of the pressure of massive access-traffic volumes. During the Double 11 Shopping Festival in 2019, the peak access traffic to Tair was 992 million access requests per second.

From the business perspective, a typical hot spot scenario is flash sales at 00:00 during the Double 11 Shopping Festival. Flash sales produce a severely skewed power-law distribution of data access traffic.

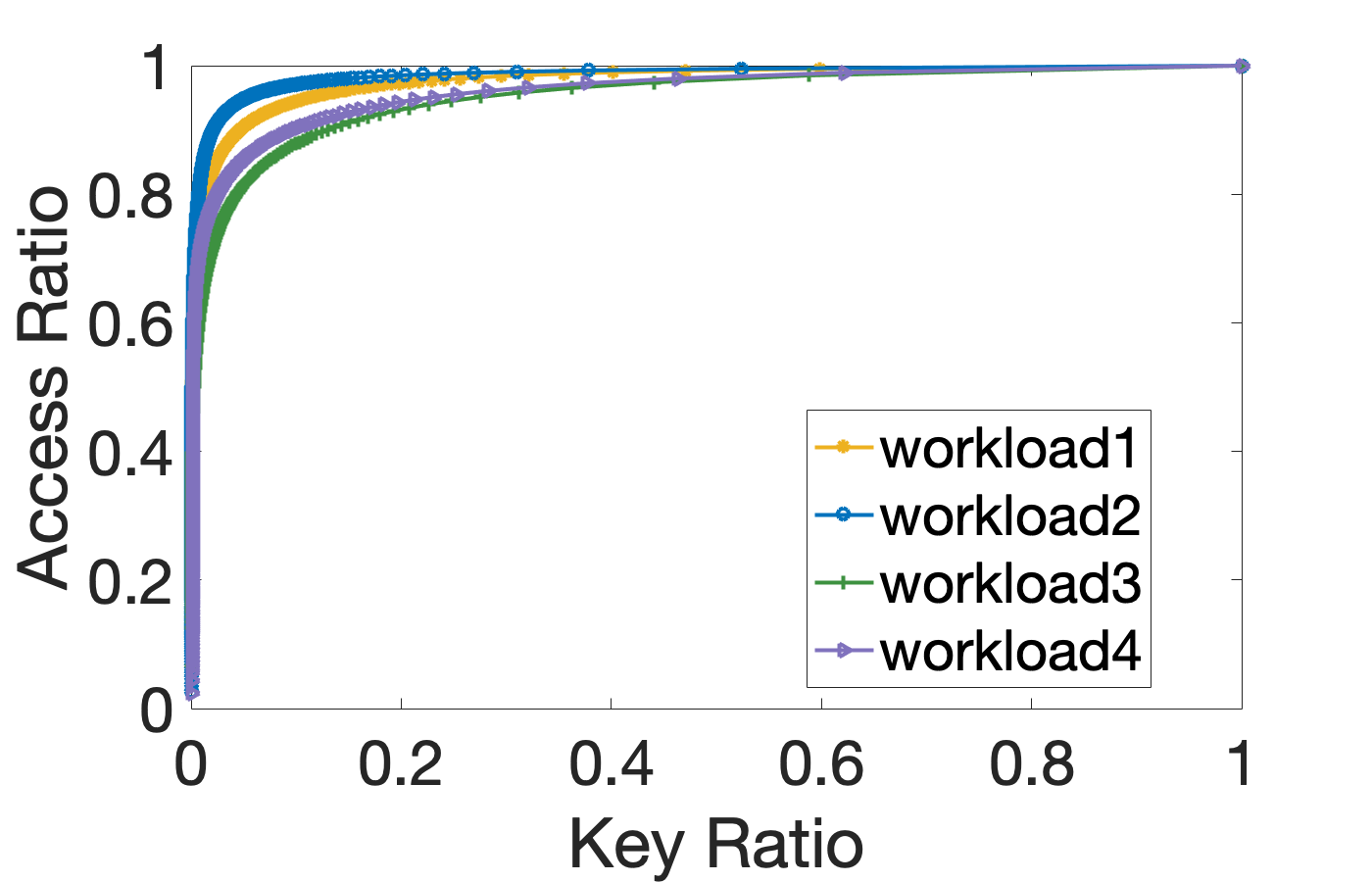

We analyzed the distribution of data access traffic in various businesses. As shown in the following figure, a large number of access requests are concentrated on a small amount of hot spot data. If the traffic is modeled with a Zipfian distribution, one of a family of discrete power-law probability distributions, the θ value is about 1.22. A paper by Facebook reports a similar θ value for its data access distribution. For more information, see Paper [3] in the "References" section.
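To give a feel for how skewed a Zipfian distribution with θ = 1.22 is, the following minimal sketch computes the share of accesses that goes to the hottest keys. The key count of 10,000 and the helper names `zipf_shares` and `top_share` are illustrative assumptions, not figures or functions from Tair.

```python
def zipf_shares(num_keys, theta):
    """Return each key's share of accesses, ranked from hottest to coldest.

    Under a Zipfian model, the key of rank r is accessed with probability
    proportional to 1 / r**theta.
    """
    weights = [1.0 / (rank ** theta) for rank in range(1, num_keys + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def top_share(shares, fraction):
    """Sum the access share of the hottest `fraction` of keys."""
    count = max(1, int(len(shares) * fraction))
    return sum(shares[:count])


# Hypothetical key space of 10,000 keys with the theta reported above.
shares = zipf_shares(num_keys=10_000, theta=1.22)
hot = top_share(shares, 0.01)
print(f"top 1% of keys receive {hot:.1%} of accesses")
```

In this model, well over half of all accesses land on the hottest 1% of keys, which is why the position of a few hot items in an index matters so much.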

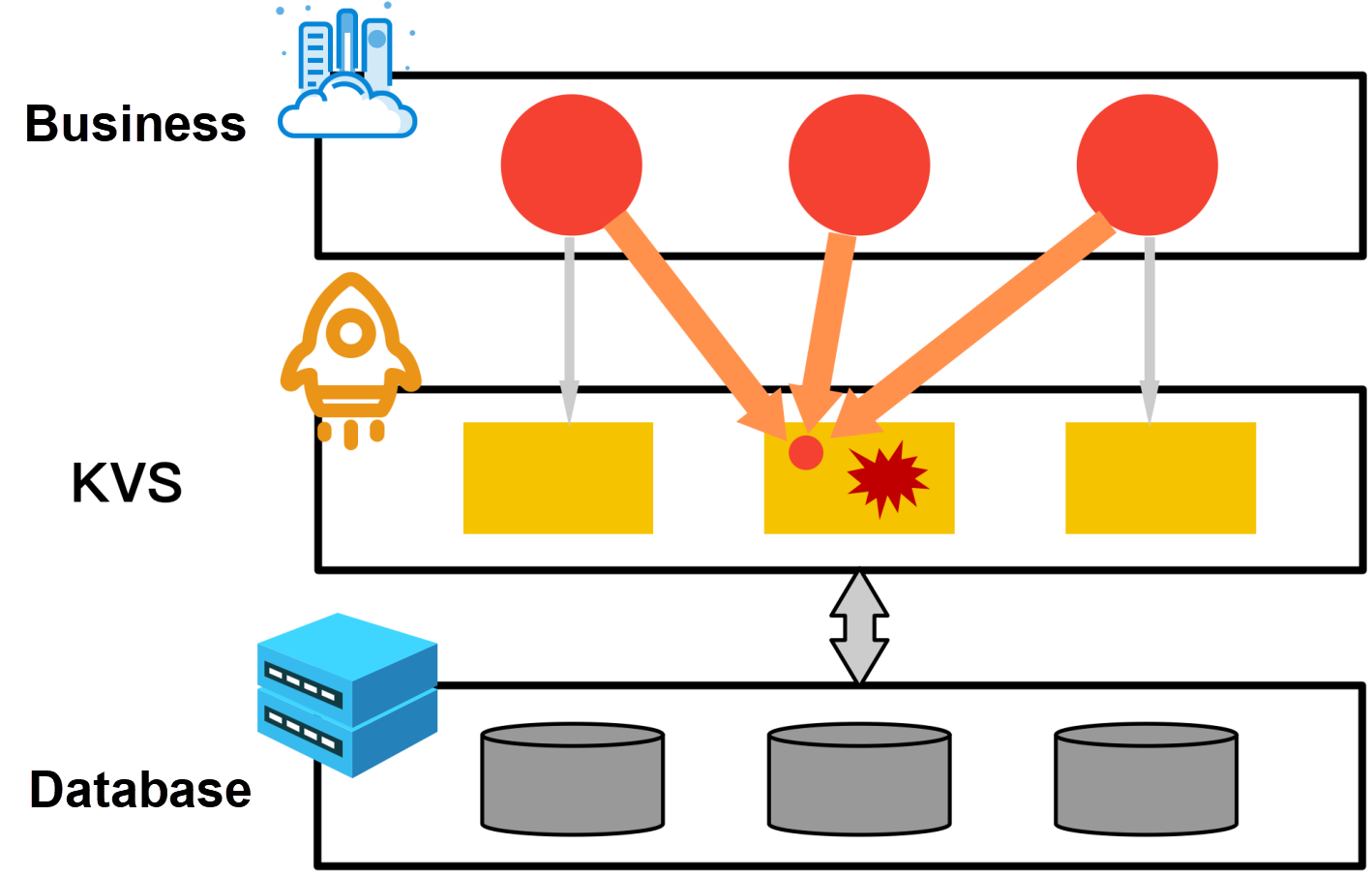

The following figure depicts the release of a new iPhone as an example. The iPhone inventory information and other information are stored on a single KVS node. When many orders are placed for the newly released iPhone, most business access traffic is concentrated on this node. If the node fails to process massive hot spot access traffic, the system crashes and the user experience is affected.

To ensure a smooth shopping experience during the Double 11 Shopping Festival, Tair made the following optimizations for hot spot access:

After 10 years of technical progress, we developed ApsaraDB for Redis Enterprise Edition, which integrates the caching function of Alibaba's Tair database on the cloud, where it's accessible by all.

This article briefly introduces HotRing. If you are interested in other Tair technologies, you may read related articles and watch the release conference for the ApsaraDB for Redis Enterprise Edition starting March 11 to learn more about the core technologies.

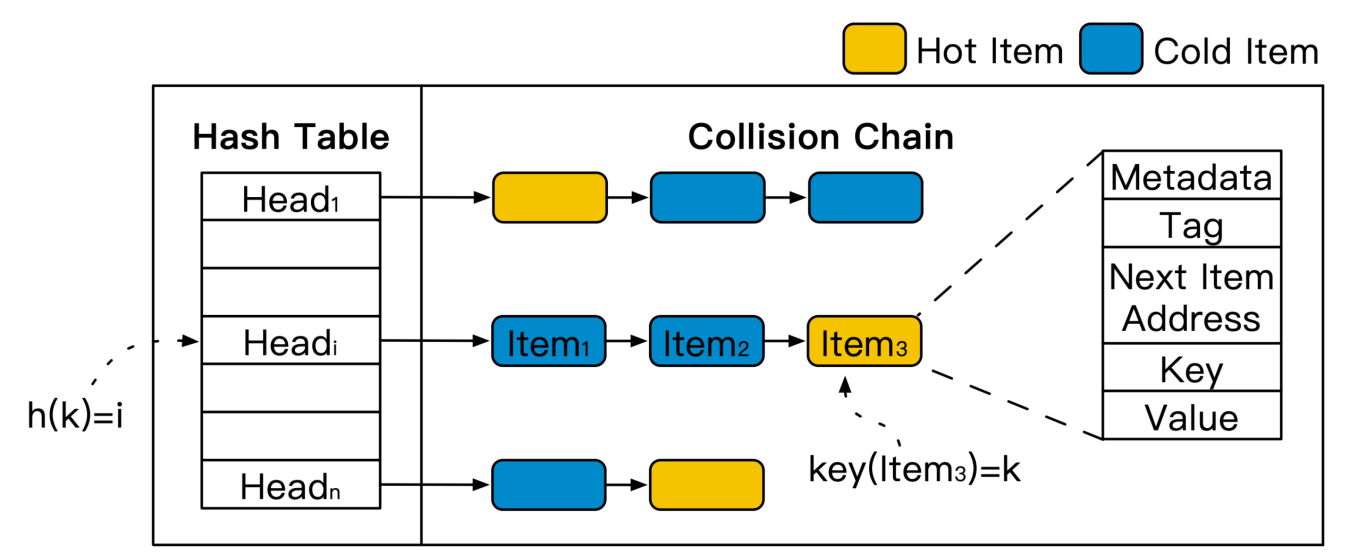

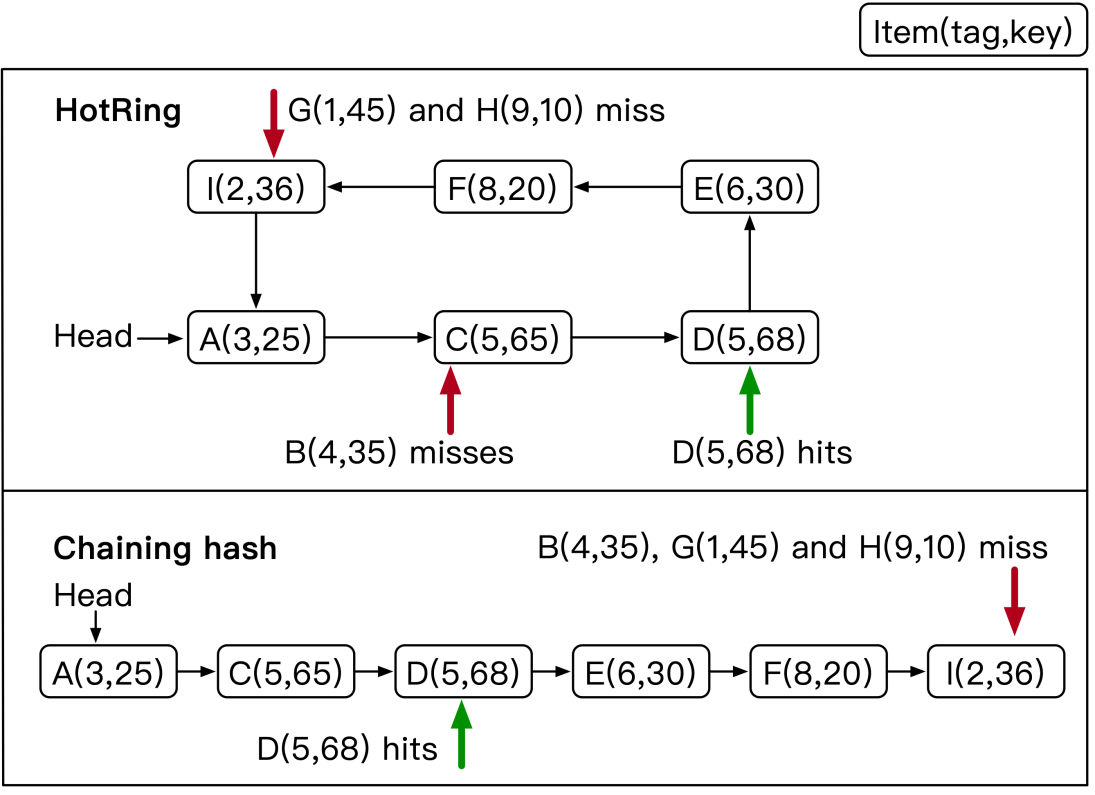

Existing in-memory KVS engines typically use a chaining hash as the index. The following figure shows the engine architecture. First, the hash value h(k) of a data item is calculated from its key (k). This value corresponds to a head pointer (Head_i) in the hash table. Starting from the head pointer, the data items of the corresponding collision chain are traversed and their keys are compared with k until the target data is found. If the target data is not in the collision chain, which is called a read miss, the target data can be inserted at the chain head.
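The lookup described above can be sketched as follows. This is a minimal, single-threaded illustration of a generic chaining hash, not Tair's engine; the class names `Item` and `ChainHash` and the hop counter are assumptions added to make the traversal cost visible.

```python
class Item:
    """One data item in a collision chain."""
    def __init__(self, key, value, next_item=None):
        self.key = key
        self.value = value
        self.next = next_item


class ChainHash:
    """Generic chaining hash: one head pointer per bucket."""
    def __init__(self, num_buckets=8):
        self.heads = [None] * num_buckets

    def _bucket(self, key):
        # h(k) selects the head pointer Head_i.
        return hash(key) % len(self.heads)

    def get(self, key):
        """Return (value, hops). value is None on a read miss."""
        hops = 0
        item = self.heads[self._bucket(key)]
        while item is not None:
            hops += 1
            if item.key == key:
                return item.value, hops
            item = item.next
        return None, hops

    def put(self, key, value):
        """Update the key in place, or insert a new item at the chain head."""
        i = self._bucket(key)
        item = self.heads[i]
        while item is not None:
            if item.key == key:
                item.value = value
                return
            item = item.next
        self.heads[i] = Item(key, value, self.heads[i])


# A single bucket forces every key into the same collision chain.
table = ChainHash(num_buckets=1)
for k, v in [("a", 1), ("b", 2), ("c", 3)]:
    table.put(k, v)
```

With head insertion, `"c"` is found in one hop while `"a"`, inserted first, needs three, and a read miss walks the whole chain.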

In a chaining hash index structure, access to data at the tail of the collision chain must traverse more index hops, resulting in more memory accesses. The system can respond to access requests for data at the head of the collision chain more quickly.

However, data items are sorted in the collision chain in the data insertion order, regardless of whether the data items are cold or hot. As shown in the preceding figure, hot spot data (hot items) is evenly distributed in the collision chain.

The ideal and intuitive design is to move all hot spot data to the head of the collision chain. However, this design is difficult for two reasons. First, the data access frequency changes dynamically, so hot spot awareness must also be dynamic to keep hot spot information up to date. Second, with an access latency of about 100 ns, the in-memory KVS engine is highly performance-sensitive. To preserve engine performance, hot spot awareness must be lock-free so that the engine maintains its high concurrency and throughput.

HotRing is designed with ordered ring hash indexes based on chaining hash. As shown in the following figure, the head and tail of the collision chain are connected to form a collision ring. This ensures that all data items in the ring can be traversed no matter which data item the head pointer points to. HotRing moves the head pointer in lock-free mode so that it dynamically points to an item with a higher access frequency, or to the optimal item position calculated by an algorithm. This accelerates the responses to access requests for hot spot data.

The design of HotRing can be understood by considering the following four issues:

After implementing hash indexes, it is necessary to ensure the correctness of queries. For an unordered ring, when a read miss operation traverses the collision ring, it needs a flag to mark the end of traversal. Otherwise, it will continue in an infinite loop. However, all data in the ring changes dynamically due to operations such as update or deletion, and the head pointer moves constantly. This makes it impossible to mark the end of the traversal.

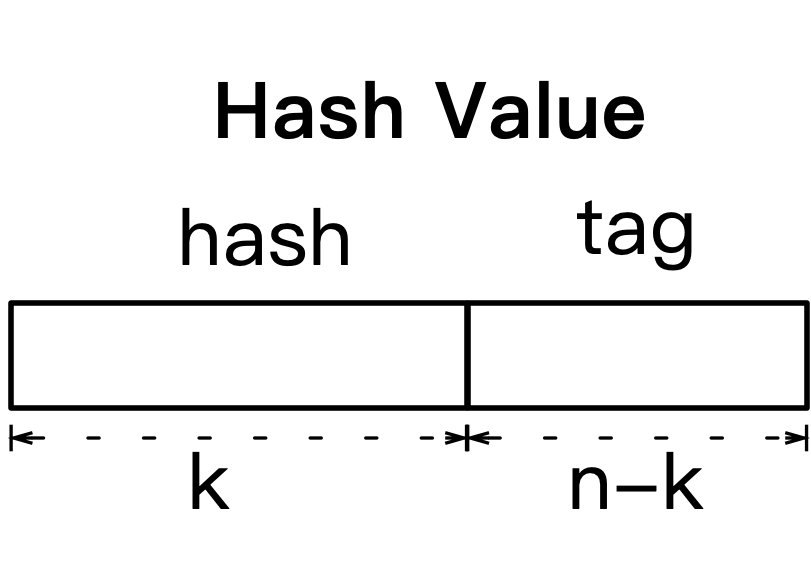

However, the problem can be solved through key sorting. If the target key falls between the keys of two consecutive items, the target is not in the ring, so the operation is a read miss. Traversal then stops and the result is returned. In an actual system, a data key is 10-100 bytes in size, so comparing keys causes significant overhead. The hash structure therefore uses tags to reduce the key comparison overhead.

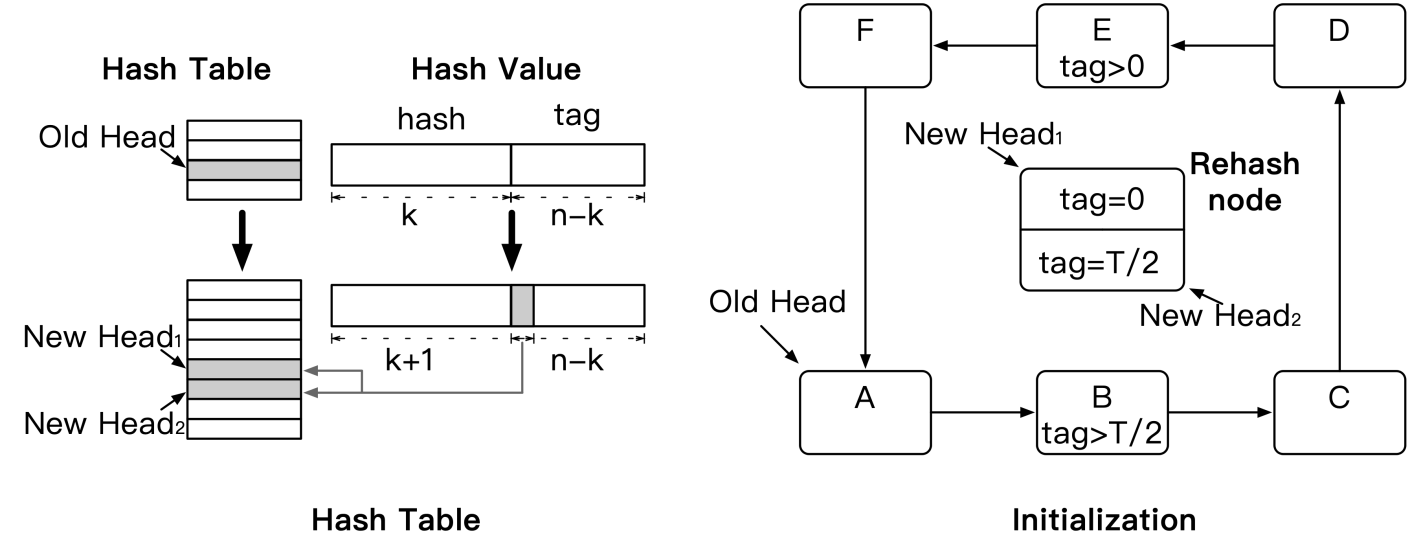

As shown in the following figure, a tag is part of the hash value. Of the n bits of the hash value calculated from each key, the first k bits are used to locate the entry in the hash table, and the remaining n-k bits form the tag that distinguishes keys within the collision ring. To reduce the sorting overhead, we define the lexicographic order: order = (tag, key). Data is sorted by tag first. If duplicate tags exist, data is sorted by key.
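As a rough illustration of this split, the sketch below derives a table index and a tag from one hash value. The hash function (BLAKE2b truncated to 32 bits) and the widths n = 32 and k = 10 are assumptions chosen for the example, not the values used by HotRing.

```python
import hashlib

HASH_BITS = 32                       # n: width of the hash value (assumed)
INDEX_BITS = 10                      # k: bits that select the hash table entry (assumed)
TAG_BITS = HASH_BITS - INDEX_BITS    # n - k: bits kept as the tag


def split_hash(key: bytes):
    """Return (table_index, tag) taken from one n-bit hash of the key."""
    digest = hashlib.blake2b(key, digest_size=HASH_BITS // 8).digest()
    h = int.from_bytes(digest, "big")
    index = h >> TAG_BITS                # first k bits
    tag = h & ((1 << TAG_BITS) - 1)      # last n - k bits
    return index, tag


def order(key: bytes):
    """Sort key used inside a collision ring: tag first, then the full key."""
    _, tag = split_hash(key)
    return (tag, key)


index, tag = split_hash(b"item:42")
ring_keys = sorted([b"item:1", b"item:2", b"item:42"], key=order)
```

Because the tag is a short integer, most comparisons during traversal are settled without touching the 10-100 byte keys; the full key is compared only when two tags are equal.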

The following figure compares HotRing with traditional chaining hash. Take a lookup of Item B, which is not stored, as an example. The chaining hash needs to traverse all data in the collision chain to return the read miss. HotRing determines that the lookup of Item B is a read miss after accessing only Item A and Item C. In general, on a read miss the chaining hash must traverse the entire collision chain, whereas HotRing traverses only about half of the collision ring on average before it can correctly terminate the traversal.
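The termination rule for an ordered ring can be sketched as follows. This is a simplified, single-threaded illustration; the names `RingItem`, `build_ring`, and `ring_lookup` are hypothetical, and tags are passed in directly rather than derived from a hash. A lookup stops with a read miss as soon as the target's (tag, key) order falls into the gap between the current item and its successor, including the gap at the point where the ring wraps from the largest order back to the smallest.

```python
class RingItem:
    def __init__(self, tag, key, value):
        self.order = (tag, key)   # lexicographic order: tag first, then key
        self.value = value
        self.next = self          # a single item forms a ring with itself


def build_ring(entries):
    """Link (tag, key, value) entries into a ring sorted by (tag, key)."""
    items = [RingItem(*e) for e in sorted(entries, key=lambda e: (e[0], e[1]))]
    for cur, nxt in zip(items, items[1:] + items[:1]):
        cur.next = nxt
    return items


def ring_lookup(head, tag, key):
    """Return (value, hops) starting from any item; value is None on a miss.

    hops counts the items visited as the current item. On a miss, the
    successor of the last visited item is inspected as well.
    """
    target = (tag, key)
    cur, hops = head, 1
    while True:
        if cur.order == target:
            return cur.value, hops
        nxt = cur.next
        if cur.order < target < nxt.order:
            return None, hops     # target lies between two consecutive items
        if nxt.order <= cur.order and (target > cur.order or target < nxt.order):
            return None, hops     # target lies in the gap at the wrap point
        cur, hops = nxt, hops + 1


items = build_ring([(1, "A", "a"), (3, "C", "c"), (4, "D", "d"), (5, "E", "e")])
head_a, head_d = items[0], items[2]
```

With the head at Item A, a lookup of the absent Item B (tag 2) ends after visiting A and inspecting its successor C, which matches the comparison described above. The same lookup still terminates correctly when the head points at D.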

HotRing periodically identifies and adjusts hot spot data through two policies. Every R accesses form a cycle, with R typically set to 5. At the Rth access, the thread adjusts the head pointer. The two policies are as follows:

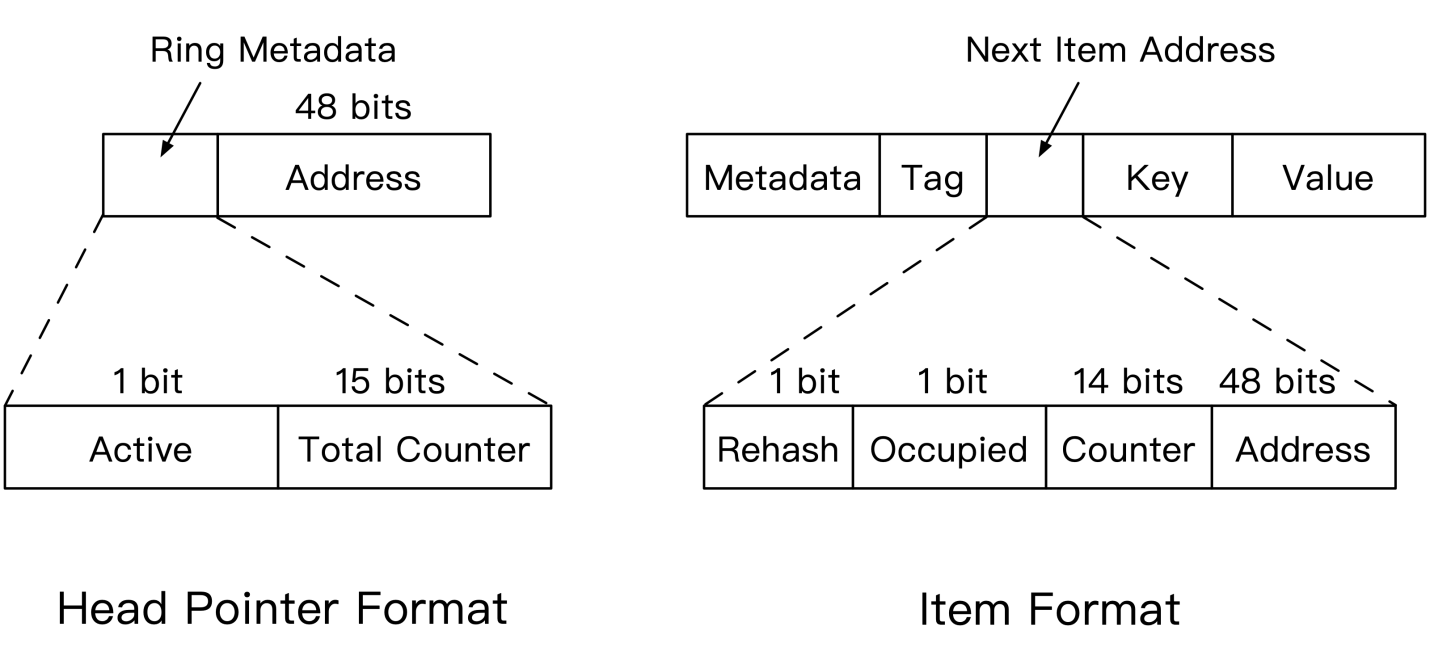

The following figure shows the structure of the metadata required for sampling. Total Counter is set for the head pointer to record the total ring accesses. Counter is set for every item to record the accesses to the item. A memory pointer is allocated 64 bits, but the actual system address index only uses 48 bits. The remaining 16 bits are used to set flags, such as Total Counter and Counter, ensuring there is no extra metadata overhead. Through sampling analysis, the system calculates the optimal position of the head pointer and performs better in the steady-state.
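The two ideas in this paragraph can be sketched as follows. The sketch makes two simplifying assumptions: it gives all 16 spare bits to a single counter, and it defines the optimal head position as the one that minimizes the total number of hops over the sampled accesses. The names `pack`, `unpack`, and `best_head` are hypothetical.

```python
ADDR_BITS = 48
ADDR_MASK = (1 << ADDR_BITS) - 1
COUNTER_MAX = (1 << 16) - 1


def pack(address, counter):
    """Store a saturating 16-bit counter in the unused high bits of a 64-bit pointer."""
    if not 0 <= address <= ADDR_MASK:
        raise ValueError("address does not fit in 48 bits")
    return (min(counter, COUNTER_MAX) << ADDR_BITS) | address


def unpack(word):
    """Return (address, counter) from a packed 64-bit word."""
    return word & ADDR_MASK, word >> ADDR_BITS


def best_head(counters):
    """Pick the ring position that minimizes total hops for the sampled accesses.

    counters[i] is the sampled access count of the item at ring position i.
    If the head points at position t, reaching position i costs (i - t) mod k hops.
    """
    k = len(counters)

    def cost(t):
        return sum(n * ((i - t) % k) for i, n in enumerate(counters))

    return min(range(k), key=cost)


word = pack(0x7F123456789A, 37)
head_position = best_head([1, 1, 50, 2])
```

In this example the head moves to position 2, the item with 50 sampled accesses, because any other position forces those 50 accesses to pay extra hops.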

Specific design details include considerations of the following issues:

These design details are not described in this article. For more information, see the HotRing paper.

The RCU lock-free engine of Tair is the foundation of the HotRing design. Papers [1] and [2] in the "References" section describe how to implement lock-free linked lists. This implementation approach is applied to all subsequent lock-free designs. The following example shows a typical concurrent access scenario.

As shown in the following figure, on the A -> B -> D chain, Thread 1 inserts Item C after B, while Thread 2 updates B to B' in RCU mode. Thread 1 redirects the pointer of B to C to complete the insertion. Thread 2 redirects the pointer of A to B' to complete the update. Thread 1 and Thread 2 modify different memory locations concurrently, so both operations succeed. However, C cannot be reached by traversing the resulting A -> B' -> D chain, which is a correctness problem.

This problem can be solved by using the Occupied flag bit in the item format shown in the preceding figure. When updating B, Thread 2 first resets the Occupied flag bit of B. When inserting C, Thread 1 must modify the next-item address field of B, which also holds the Occupied flag bit. If the Occupied flag bit has been reset, the modification by Thread 1 fails and Thread 1 must traverse the chain again to insert C. Because the concurrent operations now contend to modify the memory at the same address, only one of them can succeed at a time. This ensures operation correctness.
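The interleaving above can be replayed step by step in a single-threaded simulation. This sketch only models the logic: the `cas` function stands in for a hardware compare-and-swap, each item's flag and next pointer are modeled as one tuple so that both are compared together, and the flag change is modeled as flipping a boolean. All names are hypothetical.

```python
class Node:
    def __init__(self, name, nxt=None):
        self.name = name
        self.word = (False, nxt)   # (occupied flag, next item) treated as one word


def cas(node, expected, new):
    """Simulated compare-and-swap on node.word (not atomic, illustration only)."""
    if node.word == expected:
        node.word = new
        return True
    return False


def chain(head):
    """Collect item names by following next pointers."""
    names = []
    while head is not None:
        names.append(head.name)
        head = head.word[1]
    return names


d = Node("D")
b = Node("B", d)
a = Node("A", b)

# Thread 1 reads B's word and prepares C -> D.
seen_by_t1 = b.word
c = Node("C", d)

# Thread 2 changes B's Occupied flag before replacing B.
t2_flagged = cas(b, (False, d), (True, d))

# Thread 1's CAS on B fails because the word no longer matches what it read.
t1_first_try = cas(b, seen_by_t1, (False, c))

# Thread 2 publishes B' by redirecting A's pointer.
b_new = Node("B'", d)
t2_published = cas(a, (False, b), (False, b_new))

# Thread 1 traverses again from A, finds B' as the predecessor, and retries.
pred = a.word[1]
t1_retry = cas(pred, (False, d), (False, c))
```

After the retry, the chain reads A -> B' -> C -> D, so C is reachable. Without the flag, the first attempt by Thread 1 would have succeeded on the stale item B and C would have been lost.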

By using the same approach, we also ensure that the head pointer is moved properly and that the concurrent CRUD operations are correct. This is how HotRing implements lock-free concurrent access.

As described in the "Background" section, in extreme power-law distributions, a large number of data access requests are concentrated on a small amount of hot spot data. In this case, we can place hot spot data in the position of the head pointer to ensure engine performance even when the collision ring is long. The engine performance is affected when the collision ring contains multiple hot spots. To solve this problem, HotRing is designed with a lock-free rehash based on the amount of hot spot data.

HotRing replaces the load factor as the rehash trigger with the access overhead, which is the average number of memory accesses required to access hot spot data. In a power-law distribution scenario, if each collision ring has only one hot spot, HotRing ensures that the access overhead is less than 2. We can therefore set the access overhead threshold to 2 so that rehash is triggered when the access overhead is greater than 2.
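One way to read this trigger is sketched below. The exact definition of the access overhead is given in the paper; here it is assumed to be the access-weighted average number of items touched from the head pointer, where the item at the head costs one memory access. The function names are hypothetical.

```python
def access_overhead(counters_from_head):
    """Average memory accesses per request for one collision ring.

    counters_from_head[i] is the access count of the item i hops away from
    the head pointer; reaching it is assumed to cost i + 1 memory accesses.
    """
    total = sum(counters_from_head)
    if total == 0:
        return 0.0
    weighted = sum(n * (pos + 1) for pos, n in enumerate(counters_from_head))
    return weighted / total


def needs_rehash(counters_from_head, threshold=2.0):
    """Trigger rehash when the access overhead exceeds the threshold."""
    return access_overhead(counters_from_head) > threshold


one_hot_spot = [90, 5, 5]        # a single hot item sits at the head
two_hot_spots = [40, 5, 5, 50]   # a second hot item sits far from the head
```

With one hot spot at the head, the overhead stays close to 1 and no rehash is needed. With a second hot spot three hops away, the overhead rises above 2, which signals that the ring should be split.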

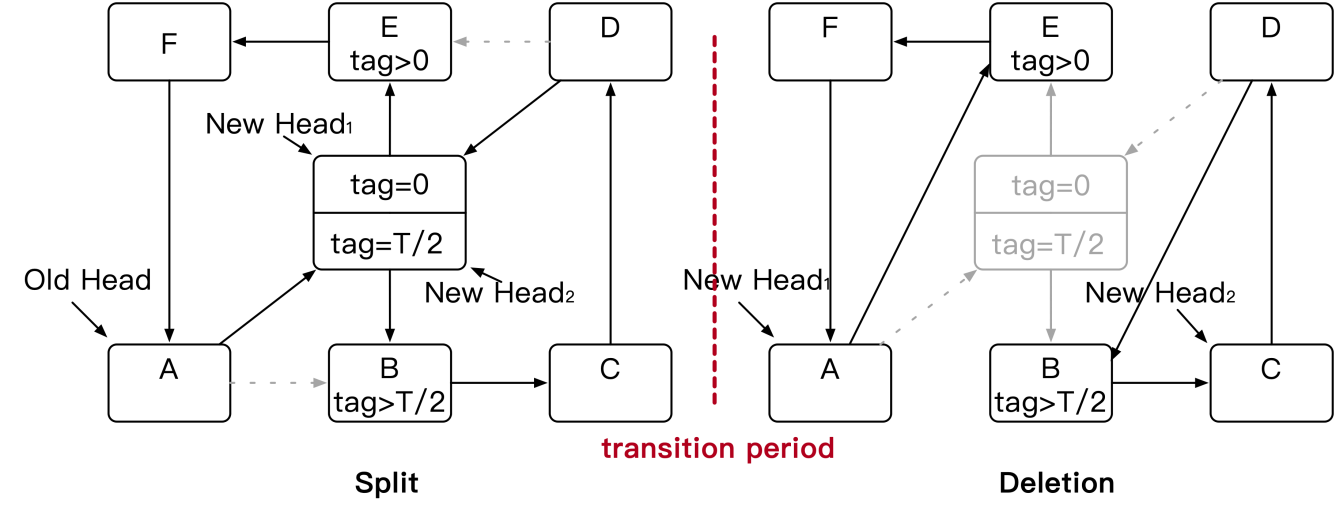

The rehash process is divided into three phases, which are illustrated with reference to the preceding four figures. The first figure shows the changes in the hash value of the hash table before and after rehash. The other three figures show the three phases of the rehash process.

With high performance and easy scalability, in-memory KVS systems are widely used by cloud storage services. An in-memory KVS system is typically used as the essential cache component of a persistent or distributed storage system to support hot spot access.

However, analysis shows that in-memory KVS systems present a severely skewed power-law distribution of hot spot access traffic. Existing in-memory KVS systems are not optimized for hot spot access. Some data nodes may be unable to process massive volumes of hot spot access traffic, which causes a system crash and affects the user experience.

This article introduced HotRing, an innovative in-memory KVS engine capable of hot spot awareness based on index structures. HotRing is extensively optimized to process hot spot access traffic characterized by a power-law distribution. HotRing can dynamically identify hot spots and adjust index structures in real-time in lock-free mode to provide highly concurrent lock-free access with high performance.

Compared with traditional in-memory KVS index systems, HotRing implements lightweight hot spot identification without extra metadata storage overhead. For hot spot data access with a power-law distribution, the HotRing engine reaches a throughput of up to 600 million operations per second, which is 2.58 times that of the fastest existing KVS engine.

[1] John D Valois. Lock-free linked lists using compare-and-swap. (PODC 1995)

[2] Timothy L Harris. A Pragmatic Implementation of Non-blocking Linked-lists. (DISC 2001)

[3] Berk Atikoglu. Workload Analysis of a Large-Scale Key-Value Store. (SIGMETRICS 2012)
