Disclaimer: This is a translation of Qinxia's 漫谈分布式系统 ("A Casual Talk on Distributed Systems"). All rights are reserved by the original author.

At the start of this series, I mentioned that distributed systems were originally created to solve two major problems:

· Not enough storage

· Slow computation

Today, we will look at the first problem and analyze how distributed file systems solve the problem of insufficient storage.

First, let's consider this question: why can't a file be stored?

Is the file too big? Usually not. The servers we use today have anywhere from tens of GBs to hundreds of TBs of storage, and we rarely see a file that big. After all, a 720p movie is only a few GBs.

The real issue is usually that the total size of many files added together is too large.

So the natural idea is to spread the files across many servers.

But this approach is not good enough; problems keep coming up, such as:

· Unbalanced data distribution.

File sizes differ enormously, so some servers end up storing a lot of data while others store relatively little.

Of course, we are smart enough to compare each file's size with the remaining space on all the servers at allocation time, and then choose the most suitable server for it.

But this will lead to another problem:

· The space allocation is not flexible enough.

Consider this situation: there are two machines, A and B, with 200GB and 100GB of free space respectively, and an 80GB file arrives. The obvious choice is A, which has the most free space. But if a 150GB file comes next, it can't be stored anywhere, because A now has only 120GB left and B has 100GB.

If we had known that a 150GB file was coming, we could have put the first file on B and the second on A. But alas, predicting the future is a power we don't possess.
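To make the failure concrete, here is a minimal Python sketch of the whole-file strategy described above. The function name `place_whole_files` and the "most free space first" rule are assumptions chosen for illustration, not taken from any real system.

```python
def place_whole_files(free_gb, file_sizes_gb):
    """Place each whole file on the server with the most free space.

    Returns the chosen server for each file (None if no server can hold it)
    and the remaining free space per server.
    """
    free = dict(free_gb)  # copy so the caller's dict is left untouched
    placements = []
    for size in file_sizes_gb:
        server = max(free, key=free.get)  # server with the most remaining space
        if free[server] >= size:
            free[server] -= size
            placements.append(server)
        else:
            placements.append(None)  # not even the emptiest server is big enough
    return placements, free


placements, free = place_whole_files({"A": 200, "B": 100}, [80, 150])
print(placements)  # ['A', None]
print(free)        # {'A': 120, 'B': 100}
```

Note that 220GB is still free in total; the 150GB file fails only because no single server has that much room left.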

Both problems essentially come from imbalance, both in the remaining space on the servers and in the sizes of the files to be stored.

So how do we solve this imbalance?

The answer is still the divide-and-conquer idea I have been talking about since the beginning of this series:

Split the file first, process each piece, and then merge the results.

On the server side, the operating system solved the problem of uneven storage space long ago. You have probably heard of blocks or pages: when reading and writing files, the operating system works in units of a fixed size.

However, the sizes of the files to be stored are still uneven, so the application layer has to handle that itself.

Since the imbalance comes from differing file sizes, we have to make the sizes uniform. We split each file into units of the same size and distribute those units across different servers. When we need to access a file, we fetch its units from the servers that hold them and put them back together.
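Here is a minimal Python sketch of the A/B scenario with fixed-size units, assuming a hypothetical 10GB unit size and a simple "most free space first" rule applied to each unit rather than to each whole file. Real HDFS blocks are much smaller (128MB by default in recent Hadoop versions, configurable via `dfs.blocksize`) and its placement policy is different; both choices here are only illustrative.

```python
BLOCK_SIZE_GB = 10  # hypothetical unit size, chosen only to keep the numbers readable


def split_into_blocks(file_size_gb, block_size_gb=BLOCK_SIZE_GB):
    """Cut a file into fixed-size units; the last unit may be smaller."""
    n_full, remainder = divmod(file_size_gb, block_size_gb)
    blocks = [block_size_gb] * n_full
    if remainder:
        blocks.append(remainder)
    return blocks


def place_blocks(free_gb, file_sizes_gb, block_size_gb=BLOCK_SIZE_GB):
    """Place every unit (not every whole file) on the server with the most free space.

    Returns one list of server names per file, plus the remaining free space.
    """
    free = dict(free_gb)  # copy so the caller's dict is left untouched
    layout = []
    for size in file_sizes_gb:
        units = []
        for block in split_into_blocks(size, block_size_gb):
            server = max(free, key=free.get)  # ties go to the first server listed
            if free[server] < block:
                # No server has room for this unit; this sketch does not roll back earlier units.
                raise RuntimeError("not enough free space in the cluster")
            free[server] -= block
            units.append(server)
        layout.append(units)
    return layout, free


layout, free = place_blocks({"A": 200, "B": 100}, [80, 150])
print([len(units) for units in layout])  # [8, 15]
print(free)                              # {'A': 30, 'B': 40}
```

Both files now fit: the units of the 150GB file land on both A and B, even though neither server could hold that file whole.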

This way, what gets stored are fixed-size units rather than whole files. In theory, as long as there is enough hardware, the problem of insufficient storage is solved (of course, only the most basic problem is solved; many others remain, and I will cover them one by one in future posts).

HDFS is built on exactly this idea.

· A fixed-size unit is called a block.

· The service that stores these blocks and handles reading and writing them is called the DataNode.

· The information about which blocks make up a file, and which servers store those blocks, is called metadata.

· The service that stores this metadata and handles reading and writing it is called the NameNode.
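The metadata in the list above can be pictured as two lookup tables. Below is a minimal Python sketch; the class `Metadata`, its methods, and the paths, block IDs, and server names are all hypothetical. For simplicity each block maps to a single DataNode, whereas real HDFS keeps several replicas of every block.

```python
from dataclasses import dataclass, field


@dataclass
class Metadata:
    """Hypothetical model of the NameNode's metadata as two lookup tables."""

    # file path -> ordered list of block IDs that make up the file
    file_blocks: dict = field(default_factory=dict)
    # block ID -> name of the DataNode (server) that stores the block
    block_location: dict = field(default_factory=dict)

    def add_block(self, path, block_id, datanode):
        """Record that `path` gained another block, stored on `datanode`."""
        self.file_blocks.setdefault(path, []).append(block_id)
        self.block_location[block_id] = datanode

    def locate(self, path):
        """Answer 'where are the pieces of this file?' in block order."""
        return [(b, self.block_location[b]) for b in self.file_blocks[path]]


meta = Metadata()
meta.add_block("/movies/film.mp4", "blk_1", "dn-A")
meta.add_block("/movies/film.mp4", "blk_2", "dn-B")
print(meta.locate("/movies/film.mp4"))  # [('blk_1', 'dn-A'), ('blk_2', 'dn-B')]
```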

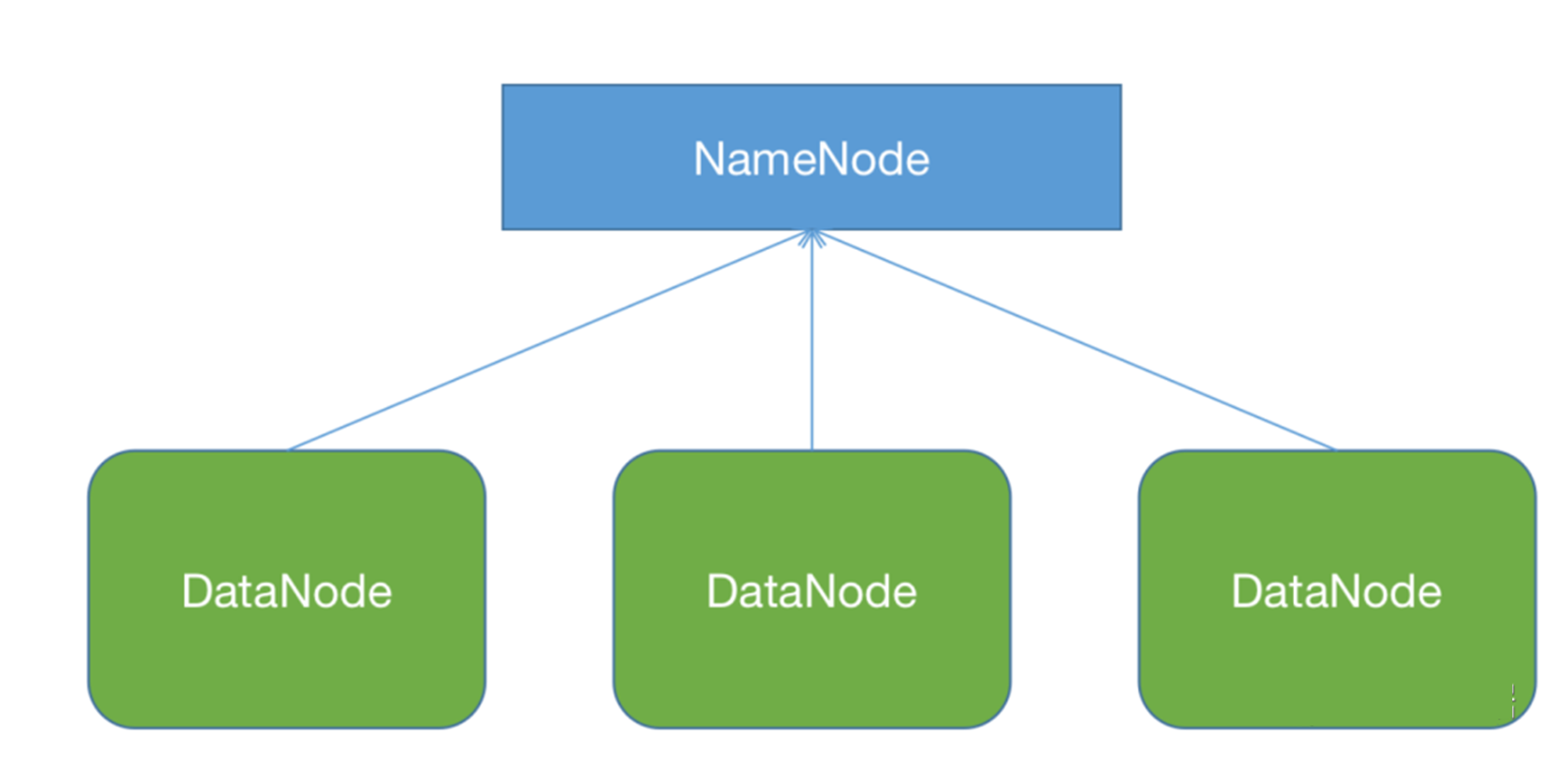

The simplified structure is shown in the figure below:

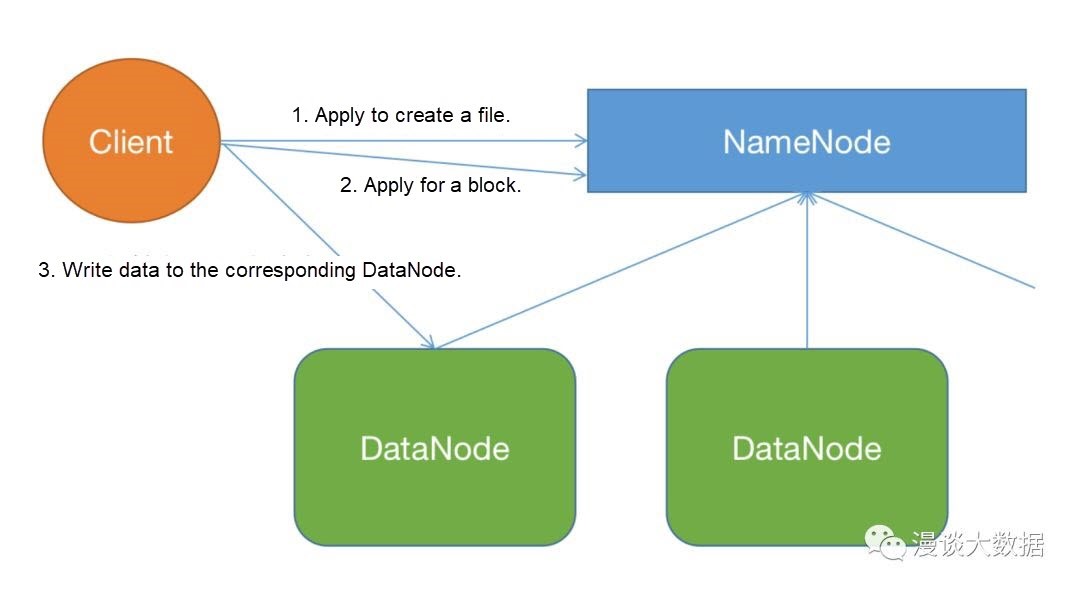

Let's take a look at the process of writing data.

Of course, this is only a simplified version of the process:

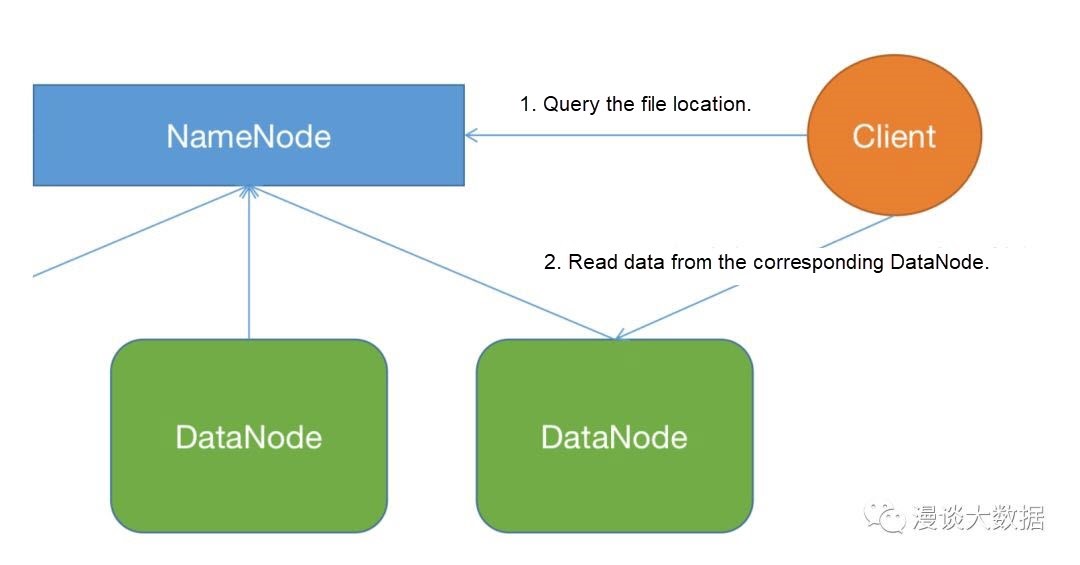
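Since the write path is shown only as a figure, here is a minimal Python sketch of it under stated assumptions: a tiny hypothetical 4-byte block size, round-robin placement, no replication, and no failure handling. The class and method names are illustrative only and are not the HDFS client API. The point it shows is that the NameNode only hands out block IDs and locations, while the bytes themselves go straight to the DataNodes.

```python
import itertools

BLOCK_SIZE = 4  # bytes; hypothetical tiny block size so the example stays readable


class DataNode:
    """Stores raw block bytes keyed by block ID (hypothetical simplification)."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}

    def write_block(self, block_id, data):
        self.blocks[block_id] = data


class NameNode:
    """Keeps only metadata: which blocks make up a file and where each block lives."""

    def __init__(self, datanodes):
        self.file_to_blocks = {}
        self.block_locations = {}
        self._ids = itertools.count(1)
        self._next_dn = itertools.cycle(datanodes)  # assumption: simple round-robin placement

    def allocate_block(self, path):
        """Hand out a new block ID and the DataNode that should store it."""
        block_id = f"blk_{next(self._ids)}"
        datanode = next(self._next_dn)
        self.file_to_blocks.setdefault(path, []).append(block_id)
        self.block_locations[block_id] = datanode.name
        return block_id, datanode


def write_file(namenode, path, data):
    """Client side: ask the NameNode where each block goes, then send the bytes there."""
    for offset in range(0, len(data), BLOCK_SIZE):
        block_id, datanode = namenode.allocate_block(path)
        datanode.write_block(block_id, data[offset:offset + BLOCK_SIZE])


dns = [DataNode("dn-A"), DataNode("dn-B")]
nn = NameNode(dns)
write_file(nn, "/demo.txt", b"hello, hdfs!")
print(nn.file_to_blocks)   # {'/demo.txt': ['blk_1', 'blk_2', 'blk_3']}
print(nn.block_locations)  # {'blk_1': 'dn-A', 'blk_2': 'dn-B', 'blk_3': 'dn-A'}
```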

Let's take a look at the process of reading data.

As before, this is a simplified process:
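A minimal Python sketch of the simplified read path, with hand-written example state: the dictionaries below stand in for the NameNode's metadata and the DataNodes' disks, and all paths, block IDs, and server names are hypothetical. The client first asks the NameNode where the blocks are, then fetches each block from its DataNode and joins them in order.

```python
# Metadata the NameNode would hold (hypothetical example state).
FILE_TO_BLOCKS = {"/demo.txt": ["blk_1", "blk_2", "blk_3"]}
BLOCK_LOCATIONS = {"blk_1": "dn-A", "blk_2": "dn-B", "blk_3": "dn-A"}

# Block contents held by each DataNode (hypothetical example state).
DATANODE_STORAGE = {
    "dn-A": {"blk_1": b"hell", "blk_3": b"dfs!"},
    "dn-B": {"blk_2": b"o, h"},
}


def namenode_locate(path):
    """Step 1: ask the NameNode which blocks make up the file and where they are."""
    return [(block_id, BLOCK_LOCATIONS[block_id]) for block_id in FILE_TO_BLOCKS[path]]


def datanode_read(datanode, block_id):
    """Step 2: fetch one block directly from the DataNode that stores it."""
    return DATANODE_STORAGE[datanode][block_id]


def read_file(path):
    """Step 3: concatenate the blocks in order to rebuild the original file."""
    return b"".join(datanode_read(dn, blk) for blk, dn in namenode_locate(path))


print(read_file("/demo.txt"))  # b'hello, hdfs!'
```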

Imagine you work in a library.

If a new batch of books arrives for the collection, you first register it with the administrator (a person or a system), who assigns the bookcases the books should go on.

If a reader wants to borrow a book, they ask the administrator, who tells them which bookcase it is on, and the reader finds it easily.

If there is no room for newly bought books, you just buy a few more bookcases and register them with the administrator. As long as you can afford bookcases, you never have to worry about where to put books.

It's easy to see that the NameNode plays the librarian, and the DataNodes are the bookcases.

Clearly, for both reading and writing, the design is simple and effective.

Of course, this only addresses the most basic problem: in an ideal world where everything goes smoothly, we never have to worry about running out of room for new books.

But reality rarely works out that way.

For example, what happens if the administrator falls ill (the management system goes down)?

What if one of the bookcases collapses from disrepair?

I will discuss these issues one by one in later posts.

TL;DR

· Data can't be stored because the TOTAL size of all the files is too big, not because individual files are.

· Distributing whole files across servers solves the storage problem to a certain extent. However, because file sizes are uneven, it soon hits bottlenecks.

· The classic divide-and-conquer approach solves the imbalance problem well: files of different sizes are split into units of the same size, which are then distributed individually.

· HDFS splits files into blocks. DataNodes handle reading and writing blocks, and the NameNode stores the mapping from files to blocks, the mapping from blocks to machines, and other metadata.

· Every read or write on a file first goes to the NameNode for metadata, and then exchanges data with the corresponding DataNodes.

Being able to store data is not enough; we also have to store it smartly. After all, massive amounts of data bring massive costs. In the next post, we will look at storage governance for massive data.

Thanks for reading! Leave your thoughts in the comment section below.

This is a carefully planned series of 20-30 articles. Through storytelling, I hope to give everyone a basic grasp of the core ideas of distributed systems. Stay tuned for the next one!

