By Qing Tao, Gong Zijie (nicknames Qing Tao, Hong Ying), representing the OceanBase team of Ant Financial.

Ant Financial's record-breaking database, OceanBase, is unique in that its high-availability model guarantees automatic failover with zero data loss, even when the database is deployed across multiple data centers in several different regions. As such, OceanBase serves as a highly available and reliable disaster recovery solution that keeps your services running without interruption.

The high-availability model of OceanBase is an integral, deeply ingrained part of the database's core capabilities rather than an add-on provided by external tools, so OceanBase becomes unavailable only when a majority of the replicas of the affected data are lost.

This article is a quick introduction to the technical details behind OceanBase's high-availability model and disaster recovery solutions, covering both their theory and their practice. Finally, it offers some recommendations for testing OceanBase's high availability yourself.

In this section, we overview the theory behind OceanBase's high-availability model with zero data loss. Ideally, a highly available database fails over automatically when a problem occurs, and no data is lost once the failover is complete. Automatic failover matters because it is the only way to restore service in the shortest possible time. The following subsections discuss how such a high-availability solution can be ensured.

Database transactions must satisfy the ACID properties (Atomicity, Consistency, Isolation, and Durability). A key part of ACID compliance is the design of the transaction log. Under Write-Ahead Logging (WAL), the transaction log must be generated and written to stable storage before the corresponding data modifications, which are usually not written to the data files immediately. Besides helping a local database recover from failures, the transaction log can also be used to build a redundant replica (a secondary database) and keep the primary and secondary databases in sync.
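To make the WAL ordering concrete, here is a toy, single-node sketch in Python. `TinyWALStore` is a hypothetical name and a JSON-lines file stands in for the transaction log; this is not how OceanBase, MySQL, or Oracle lay out their logs. The only point it illustrates is the ordering rule: each change is appended to the log and forced to disk with `fsync` before the in-memory data is modified, so the state can be rebuilt by replaying the log after a crash.

```python
import json
import os
import tempfile


class TinyWALStore:
    """Toy key-value store that follows write-ahead logging (WAL)."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        self._replay()  # rebuild in-memory state from the durable log

    def _replay(self):
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, "r", encoding="utf-8") as f:
            for line in f:
                record = json.loads(line)
                self.data[record["key"]] = record["value"]

    def put(self, key, value):
        record = json.dumps({"key": key, "value": value})
        with open(self.log_path, "a", encoding="utf-8") as f:
            f.write(record + "\n")
            f.flush()
            os.fsync(f.fileno())  # 1) the log record reaches stable storage...
        self.data[key] = value    # 2) ...and only then is the data modified


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "wal.log")
    store = TinyWALStore(path)
    store.put("balance:alice", 100)
    store.put("balance:alice", 80)
    # Simulate a crash: discard the in-memory state and recover from the log.
    recovered = TinyWALStore(path)
    print(recovered.data)  # {'balance:alice': 80}
```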

However, databases differ in the details of how they implement these mechanisms, which can lead to different results, and this must be taken into account when writing transaction logs. For example, MySQL has two transaction logs: InnoDB's Redo log and the server's Binlog. The Redo log records changes to data blocks, whereas the Binlog records the logical changes that modify data (for example, SQL statements in statement-based format). The Redo log also contains records of uncommitted transactions, because InnoDB can undo them, while the Binlog records only committed transactions. As a result, a MySQL instance cannot always guarantee that the two logs are fully consistent after crash recovery, let alone when it relies on the Binlog for primary/secondary synchronization. If the Binlog is not written to storage immediately, Binlog-based synchronization cannot fully guarantee zero data loss.

Oracle keeps the primary and secondary databases in sync by shipping its Redo log, which records changes to physical blocks, and by writing the Redo log to storage promptly. As long as the primary/secondary failover itself goes smoothly, Oracle loses no data during failure recovery. However, if Redo log files are lost, Oracle cannot guarantee zero data loss.

Therefore, a mechanism is needed to guarantee that the transaction logs themselves are reliable. Writing them to a local disk is not enough, because a hardware failure can make them inaccessible. The most reliable approach is to also write the transaction logs to the secondary database's host when a transaction commits, which is called strong synchronization. Oracle applies strong synchronization to secondary databases in maximum protection mode. However, strong synchronization requires the secondary database to be available; otherwise, the primary database refuses service requests. For this reason, Oracle recommends configuring two secondary databases in maximum protection mode: as soon as either of them receives the primary's transaction logs and writes them to storage, the primary can return the commit to the client. MySQL offers a similar semi-synchronous approach, but when the secondary databases are unavailable it degrades to asynchronous replication, whereas Oracle's primary refuses service in the same situation. In maximum protection mode, Oracle prioritizes data consistency even at the cost of availability, while MySQL prioritizes availability over data consistency. Therefore, core business data is better stored in Oracle than in MySQL, even though Oracle also offers maximum availability and maximum performance synchronization modes.
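The difference between the two policies comes down to what the primary does when no standby acknowledges a commit. The following Python sketch is a deliberately simplified model, not Oracle's or MySQL's actual logic: the function names are hypothetical, timeouts are collapsed into an acknowledgment count, and MySQL's return to semi-sync after a replica catches up is not modeled.

```python
from dataclasses import dataclass


@dataclass
class CommitResult:
    committed: bool  # was the commit acknowledged to the client?
    mode: str        # synchronization mode in effect afterwards


def commit_max_protection(standby_acks: int) -> CommitResult:
    """Simplified Oracle maximum protection: acknowledge the commit only if
    at least one standby has persisted the redo; otherwise refuse service
    rather than risk losing data."""
    if standby_acks >= 1:
        return CommitResult(True, "sync")
    return CommitResult(False, "sync")


def commit_semi_sync(replica_acks: int, mode: str) -> CommitResult:
    """Simplified MySQL semi-sync: wait for one replica ack; if none arrives
    before the timeout, degrade to asynchronous replication and commit anyway.
    (Switching back once a replica catches up is not modeled.)"""
    if mode == "semi-sync" and replica_acks >= 1:
        return CommitResult(True, "semi-sync")
    return CommitResult(True, "async")


if __name__ == "__main__":
    # No standby/replica reachable: the two policies diverge.
    print(commit_max_protection(0))          # refused: availability sacrificed
    print(commit_semi_sync(0, "semi-sync"))  # committed, but now asynchronous
```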

OceanBase's transaction log, the CLog for short, also follows the WAL principle. OceanBase has no UNDO log, so the CLog written to storage contains only the log records of transactions that are about to commit. The CLog is always written to storage first, while the modified data itself can stay in memory for a long time as long as memory capacity is sufficient. For data redundancy, OceanBase keeps at least three replicas: one primary replica and two secondary replicas. When a transaction commits, the primary replica writes the CLog to its own storage and also sends it to the two secondary replicas. Unlike Oracle's maximum protection mode, OceanBase considers a transaction committed as soon as two of the three replicas have written the CLog to storage. This makes an extreme case possible: the primary replica fails to write a committed transaction's log to its own storage, but the two secondary replicas succeed. In the subsequent primary/secondary failover, this transaction is recovered and committed. A similar situation can occur in MySQL primary/secondary replication, where it is likely to leave the primary and secondary inconsistent, and such inconsistency is very difficult to fix. In OceanBase, this scenario first triggers a primary/secondary failover, in which the previous primary replica becomes a secondary replica. After its hardware issues are fixed, it automatically fetches the logs it missed from the new primary replica or the other secondary replica. Ultimately, the third member also writes the transaction log to storage.
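The following simplified Python model illustrates majority commit and the extreme case described above. `Replica`, `replicate`, and `catch_up` are hypothetical names; the model ignores Paxos ballots, networking, and failure detection, and simply counts how many replicas managed to persist each CLog record.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    name: str
    disk_ok: bool = True                     # can this replica persist the CLog?
    log: list = field(default_factory=list)  # CLog records persisted so far


def replicate(replicas, record):
    """Ship one CLog record to every replica and report whether it may commit:
    a majority (2 of 3) must have persisted it, and the primary's own write
    counts as just one vote."""
    acks = 0
    for r in replicas:
        if r.disk_ok:
            r.log.append(record)
            acks += 1
    return acks >= len(replicas) // 2 + 1


def catch_up(lagging, source):
    """After repair, a lagging replica fetches the records it is missing.
    Assumes logs are append-only prefixes of one another (a simplification)."""
    lagging.log.extend(source.log[len(lagging.log):])


if __name__ == "__main__":
    a, b, c = Replica("A"), Replica("B"), Replica("C")  # A starts as primary
    group = [a, b, c]
    assert replicate(group, "tx1")  # all three persist tx1
    a.disk_ok = False               # the primary's local write fails...
    assert replicate(group, "tx2")  # ...but B and C form a majority: commit
    # Failover: B (which has tx2) becomes primary; A is repaired and catches up.
    a.disk_ok = True
    catch_up(a, b)
    print([r.log for r in group])  # [['tx1', 'tx2'], ['tx1', 'tx2'], ['tx1', 'tx2']]
```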

A high-availability solution usually requires automatic failover; otherwise, it is of limited use for operations and maintenance. In the traditional architecture with one primary and one secondary, the system can misjudge whether to fail over when the network connection between the primary and the secondary is broken. If the secondary database is then promoted to primary, the result is two primary databases (split brain) and inconsistent data. For this reason, in the traditional two-data-center disaster recovery solution, the high-availability product responsible for failover sets up a monitoring point in a third data center, which checks the availability of the two data centers to determine which one is actually down.
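The role of the third-site monitor can be reduced to a small decision rule. This Python sketch is an illustration of the idea under simplifying assumptions, not the logic of any specific high-availability product; the function and parameter names are hypothetical.

```python
def should_promote_secondary(secondary_sees_primary: bool,
                             witness_sees_primary: bool,
                             witness_sees_secondary: bool) -> bool:
    """Decide whether the secondary may take over, using a monitor in a
    third data center as the tie-breaker.

    Losing contact with the primary is not enough on its own: if only the
    primary-secondary link is down, the witness still reaches the primary,
    and promoting would create two primaries (split brain).
    """
    if secondary_sees_primary:
        return False  # primary is reachable: nothing to do
    # Promote only if the third site agrees the primary is gone
    # and can confirm that the secondary is alive.
    return (not witness_sees_primary) and witness_sees_secondary


if __name__ == "__main__":
    print(should_promote_secondary(False, True, True))   # False: only the link broke
    print(should_promote_secondary(False, False, True))  # True: primary site is down
```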

Even when the synchronization solution uses one primary database and multiple secondary ones, external tools are still needed to detect that the old primary has failed, choose a new primary database, and complete the failover. The availability of that high-availability tool itself therefore becomes critical.

In OceanBase's three-replica solution, when the primary replica fails, one of the two remaining secondary replicas is automatically elected as the new primary replica, and it is guaranteed to hold all of the latest transaction logs. During normal operation, OceanBase uses the Paxos protocol to synchronize the primary replica's transaction logs to the secondary replicas, ensuring that every transaction's log is stored on a majority of the three replicas at all times. As a result, at least one surviving secondary replica is always qualified to become the new primary replica without losing any transaction logs. In practice, several strategies are used to shorten the time it takes to elect the new primary replica. However, if a majority of the three replicas are unavailable, the election fails and the service cannot be recovered.
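A minimal Python sketch of the election rule described above, assuming each replica reports only whether it is alive and the index of its last persisted log record. `elect_primary` is a hypothetical name, and real Paxos-based elections (including OceanBase's) also involve ballots, leases, and priorities that are omitted here.

```python
def elect_primary(replicas):
    """replicas: name -> (alive, last_log_index).

    Returns the new primary, or None if no majority of replicas is alive.
    Every committed record is on a majority, and any two majorities overlap,
    so a live majority always contains a replica with all committed records;
    picking the live replica with the highest log index selects one (assuming
    logs never diverge, a simplification of real Paxos).
    """
    majority = len(replicas) // 2 + 1
    alive = {name: idx for name, (ok, idx) in replicas.items() if ok}
    if len(alive) < majority:
        return None  # election fails; the service cannot be restored
    # Sort names first so that ties are broken deterministically.
    return max(sorted(alive), key=lambda name: alive[name])


if __name__ == "__main__":
    print(elect_primary({"A": (False, 42), "B": (True, 42), "C": (True, 41)}))   # B
    print(elect_primary({"A": (False, 42), "B": (False, 42), "C": (True, 41)}))  # None
```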

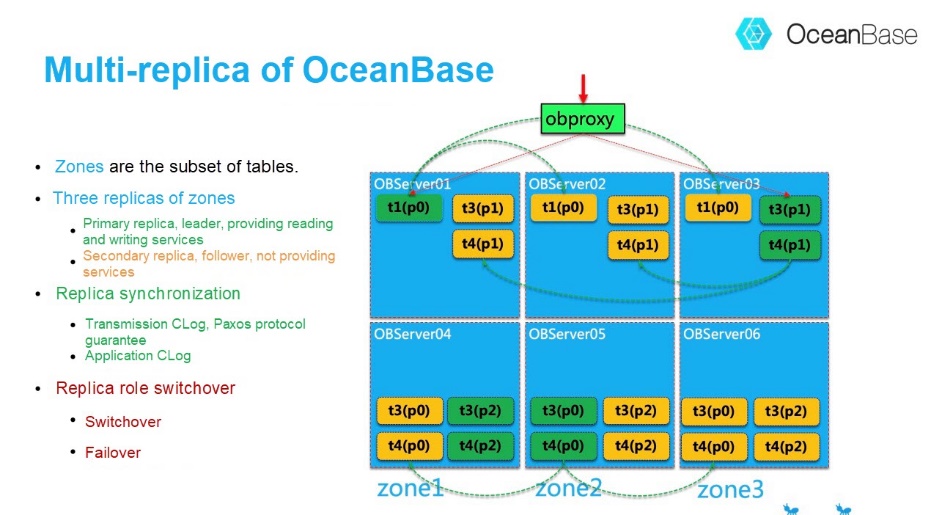

Unlike traditional databases, OceanBase performs failover (primary replica election) at the granularity of partitions. A single node can host thousands of partitions, and when the node fails, only the partitions whose primary replicas were on it need a re-election. These elections run in parallel, but the degree of parallelism is low relative to the number of partitions involved, so overall the process also behaves partly serially. In this sense, OceanBase has no primary or secondary database as a whole: each node can hold the primary replicas of some data and the secondary replicas of other data. By default, only the primary replica serves reads and writes.
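The following Python sketch shows why a node failure only triggers elections for some partitions. The placement is hypothetical and only loosely follows the partitions named in the failure example of this article; the function names are illustrative.

```python
# Hypothetical placement: partition -> {node: role}
PLACEMENT = {
    "t1(p0)": {"OBServer01": "primary", "OBServer02": "secondary", "OBServer03": "secondary"},
    "t3(p1)": {"OBServer01": "secondary", "OBServer02": "primary", "OBServer03": "secondary"},
    "t4(p1)": {"OBServer01": "secondary", "OBServer02": "secondary", "OBServer03": "primary"},
}


def needs_reelection(placement, failed_node):
    """Partitions whose primary replica was on the failed node. Partitions
    that only lose a secondary replica keep serving from their primary."""
    return sorted(p for p, roles in placement.items()
                  if roles.get(failed_node) == "primary")


def roles_by_node(placement):
    """Invert the placement: each node holds primaries of some partitions and
    secondaries of others, so there is no primary or secondary *node*."""
    view = {}
    for partition, roles in placement.items():
        for node, role in roles.items():
            view.setdefault(node, {})[partition] = role
    return view


if __name__ == "__main__":
    print(needs_reelection(PLACEMENT, "OBServer01"))  # ['t1(p0)']
    print(roles_by_node(PLACEMENT)["OBServer02"])
```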

Note that OceanBase's election mechanism runs continuously. Each primary replica holds its role under a lease that lasts 10 seconds by default, and a new election starts when the lease is about to expire. If no failure has occurred, the current primary replica has top priority and is elected again. This is a routine switchover rather than a failover, and applications typically do not notice it.
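A simplified Python model of lease-based leadership as described above. The 10-second lease comes from the text; `RENEW_MARGIN_S` (how early re-election starts), the function names, and the priority handling are assumptions. The model also applies a common lease rule, assumed here: a new primary is not installed until the failed primary's lease has expired, so two primaries never hold valid leases at the same time.

```python
from dataclasses import dataclass

LEASE_S = 10.0        # default lease length given in the text
RENEW_MARGIN_S = 2.0  # hypothetical: start the next election this early


@dataclass
class Leadership:
    leader: str
    lease_expires_at: float


def tick(state, now, healthy, candidates):
    """Advance a simplified lease-based leadership to time `now`.

    healthy: node -> bool; candidates: other replicas in priority order.
    """
    if now < state.lease_expires_at - RENEW_MARGIN_S:
        return state  # lease still comfortably valid: no election yet
    if healthy.get(state.leader, False):
        # The current primary has top priority, so it is simply re-elected.
        return Leadership(state.leader, now + LEASE_S)
    if now < state.lease_expires_at:
        return state  # old lease not yet expired: wait to avoid two primaries
    for node in candidates:
        if healthy.get(node, False):
            return Leadership(node, now + LEASE_S)  # failover
    return state  # no eligible candidate: leadership cannot move


if __name__ == "__main__":
    s = Leadership("OBServer01", 10.0)
    s = tick(s, 8.5, {"OBServer01": True}, ["OBServer02"])  # renewed until 18.5
    down = {"OBServer01": False, "OBServer02": True}
    print(tick(s, 17.0, down, ["OBServer02"]))  # still OBServer01: lease not expired
    print(tick(s, 18.5, down, ["OBServer02"]))  # failover to OBServer02
```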

Discussions of high-availability solutions usually focus on primary/secondary failover rather than on what needs to be done on the client side. From the business perspective, the ideal is that nothing needs to be done at all. That is a reasonable expectation, but it is achievable only if the necessary work is done elsewhere. For example, after a primary/secondary failover, the database's IP address may have changed. How, then, does the application connect to the new primary database?

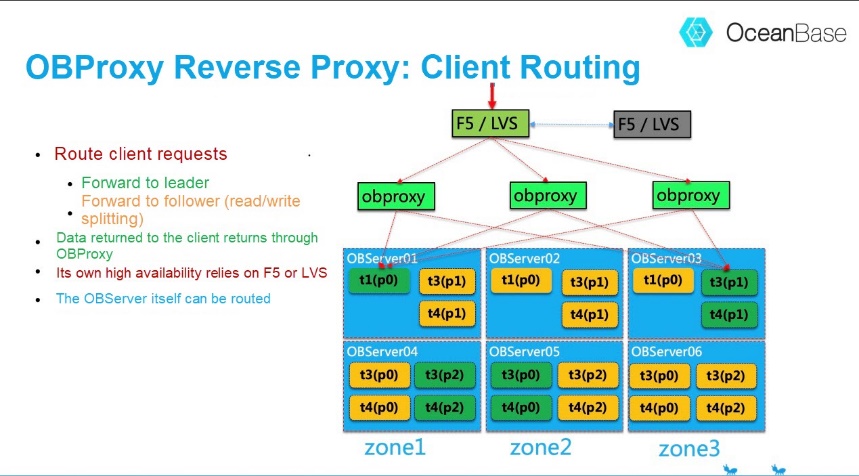

Traditional solutions include virtual IP addresses, DNS, and forwarding through load balancers (such as F5 or LVS). A common approach today is to route the application's database connections through a middleware layer that works as a proxy. If this is implemented properly, the application only needs to establish and maintain connections with the proxy. When a primary/secondary failover happens in the back-end databases, the proxy automatically connects to the new primary database, so from the business perspective the sessions are not interrupted. However, the transaction state of a session may not survive: if the failover occurs before a transaction has committed, that transaction usually fails and is rolled back. The application therefore needs retry logic.
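Retry logic usually wraps the whole transaction, because a transaction rolled back during failover must be redone from the beginning. The Python sketch below assumes a hypothetical `TransientTxnError` raised by your database access layer for failover-related errors; which error codes qualify depends on the driver and on OceanBase and is not specified here. One caveat: if a connection drops during COMMIT itself, the outcome is unknown, so retried transactions should be idempotent or verify their effect first.

```python
import random
import time


class TransientTxnError(Exception):
    """Hypothetical error for 'transaction rolled back because of failover'."""


def run_with_retry(txn, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Run `txn`, a callable that executes a whole transaction from BEGIN to
    COMMIT, and re-run it from the start if it is rolled back by a failover."""
    for attempt in range(1, max_attempts + 1):
        try:
            return txn()
        except TransientTxnError:
            if attempt == max_attempts:
                raise  # give up and surface the error to the caller
            # Exponential backoff with jitter gives the new primary time to take over.
            sleep(base_delay * 2 ** (attempt - 1) * random.uniform(0.5, 1.0))
```

The `sleep` parameter is injectable so that the backoff can be skipped in tests.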

OceanBase's OBProxy is a reverse proxy that routes requests by partition. Applications only need to connect to OBProxy, which forwards each SQL request to the node that holds the partitions the statement accesses. OBProxy caches the location of each partition it has accessed. When a failover occurs for a back-end partition, the first request that OBProxy forwards to the old location misses, and the OBServer reports this back. OBProxy then fetches the partition's location again and updates its cache.
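The cache-and-refresh behavior can be sketched as follows. This is a simplified Python model, not OBProxy's implementation: `PartitionRouter` and `StaleRoute` are hypothetical, `lookup_leader` stands for however the proxy fetches partition locations, and the miss is modeled as an exception followed by a single retry.

```python
class StaleRoute(Exception):
    """Raised by a server that no longer holds the partition's primary."""


class PartitionRouter:
    """Cache partition -> primary location; refresh an entry only on a miss."""

    def __init__(self, lookup_leader, send):
        self.lookup_leader = lookup_leader  # fetch the current primary location
        self.send = send                    # send(node, partition, sql)
        self.cache = {}

    def execute(self, partition, sql):
        node = self.cache.get(partition)
        if node is None:
            node = self.cache[partition] = self.lookup_leader(partition)
        try:
            return self.send(node, partition, sql)
        except StaleRoute:
            # First forward after a failover misses: refresh the cache, retry once.
            node = self.cache[partition] = self.lookup_leader(partition)
            return self.send(node, partition, sql)
```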

OBServer nodes can also forward SQL requests themselves, and this happens frequently, because when OBProxy forwards SQL statements, it tries to send all related statements of the same transaction to the same node.

Inevitably, some SQL statements then reach nodes that do not host the Leader (primary) replica of the data they access, and must be forwarded again. This is exactly why OceanBase has remote and distributed execution plans for SQL statements.
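The interaction between transaction affinity and leader placement can be shown with a small Python sketch. The function names are hypothetical, each statement is described only by the partitions it touches, and pinning the transaction to the first statement's leader is an assumed policy used for illustration.

```python
def classify(statement_partitions, home, leader_of):
    """Where one statement runs, given the node the transaction is pinned to."""
    nodes = {leader_of[p] for p in statement_partitions}
    if nodes == {home}:
        return "local"        # every partition leader is on the home node
    if len(nodes) == 1:
        return "remote"       # every partition leader is on one other node
    return "distributed"      # partition leaders are spread over several nodes


def plan_transaction(statements, leader_of):
    """statements: partitions touched by each SQL statement, in order.
    The whole transaction is pinned to the leader of the first statement's
    first partition (transaction affinity)."""
    home = leader_of[statements[0][0]]
    return home, [classify(s, home, leader_of) for s in statements]


if __name__ == "__main__":
    leader_of = {"t1(p0)": "OBServer02", "t3(p1)": "OBServer02", "t4(p1)": "OBServer03"}
    txn = [["t1(p0)"], ["t3(p1)"], ["t4(p1)"], ["t1(p0)", "t4(p1)"]]
    print(plan_transaction(txn, leader_of))
    # ('OBServer02', ['local', 'local', 'remote', 'distributed'])
```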

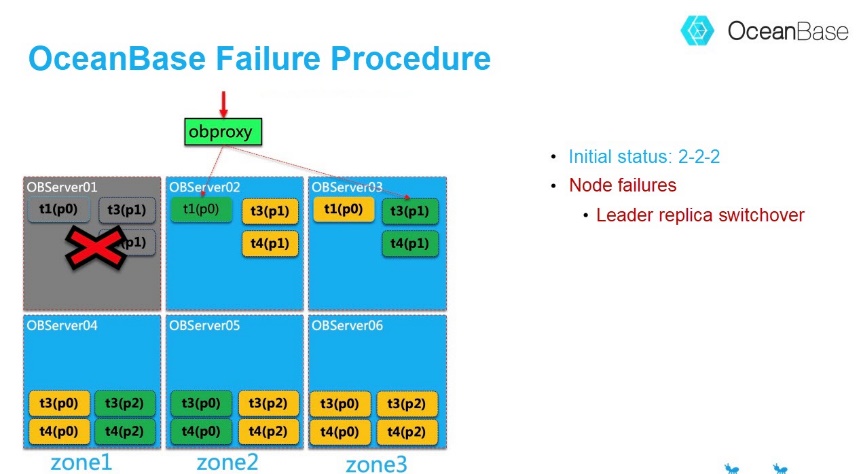

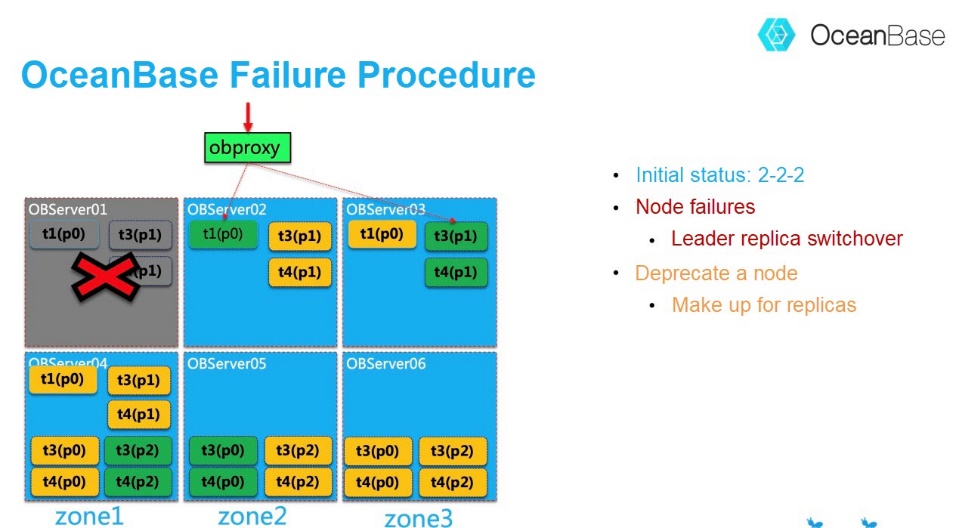

The following uses an OceanBase node failure event to showcase the high availability model of OceanBase.

Consider the figure below and assume that node OBServer01 fails. Only access requests for t1(p0) are affected. Requests for t3(p1) and t4(p1) are not affected, because their replicas on OBServer01 are secondary replicas, which do not serve requests by default.

OceanBase starts a new election before the 10-second lease of t1(p0)'s primary replica expires. Because the previous primary replica is unavailable, it cannot be re-elected, so this election is a failover. When the secondary replica of t1(p0) on OBServer02 becomes the primary replica, OBProxy detects the change and automatically connects to the new node. From the business perspective, application connections are not interrupted. However, as discussed earlier, transactions that have not yet committed report errors and are rolled back.

These partitions (t1(p0), t3(p1), and t4(p1)) now each have only two running replicas, a risky state that OceanBase does not tolerate for long. OceanBase first waits for OBServer01 to recover. If the node does not recover within a given time (2 hours by default), OceanBase removes it and automatically selects another node in the same zone (OBServer04) to restore the replica count of these three partitions on the new node.
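A Python sketch of the replacement rule: wait out the timeout (2 hours by default, per the text), then place a new replica on another node in the failed node's zone that does not already hold that partition. The function name, the zone names in the usage example, and the "first eligible node" choice are assumptions; OceanBase's real placement also considers load balancing.

```python
RECOVERY_TIMEOUT_S = 2 * 60 * 60  # 2 hours: the default wait cited in the text


def replacement_plan(failed_node, down_for_s, zone_of, hosts, partitions):
    """Decide where to rebuild the replicas lost with `failed_node`.

    zone_of:    node -> zone (disaster recovery unit)
    hosts:      partition -> set of nodes currently holding a replica
    partitions: partitions that had a replica on the failed node
    Returns {partition: new_node}, or {} while still waiting for recovery.
    """
    if down_for_s < RECOVERY_TIMEOUT_S:
        return {}  # keep waiting; the node may come back
    zone = zone_of[failed_node]
    # Candidates: other nodes in the same zone, so the replica stays in that zone.
    candidates = sorted(n for n, z in zone_of.items() if z == zone and n != failed_node)
    plan = {}
    for p in partitions:
        for node in candidates:
            if node not in hosts[p]:
                plan[p] = node  # first eligible node (real systems balance load)
                break
    return plan


if __name__ == "__main__":
    zone_of = {"OBServer01": "zone1", "OBServer04": "zone1",
               "OBServer02": "zone2", "OBServer03": "zone3"}
    parts = ["t1(p0)", "t3(p1)", "t4(p1)"]
    hosts = {p: {"OBServer01", "OBServer02", "OBServer03"} for p in parts}
    print(replacement_plan("OBServer01", 600, zone_of, hosts, parts))       # {}
    print(replacement_plan("OBServer01", 3 * 3600, zone_of, hosts, parts))  # all -> OBServer04
```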

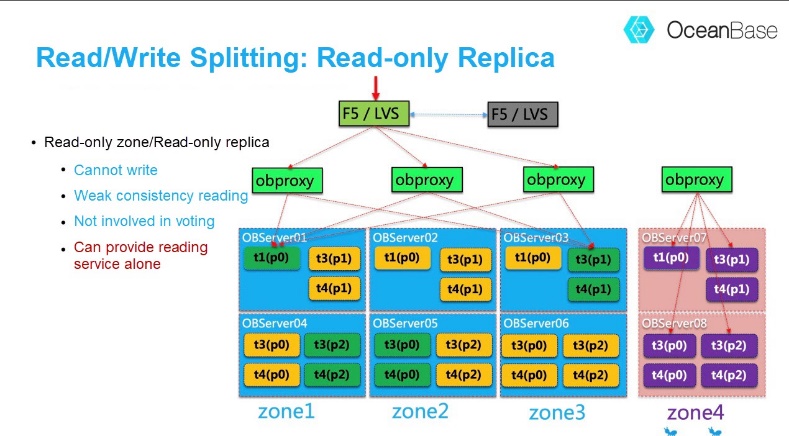

Traditional databases offer read/write splitting with a read-only replica: when the primary database fails, the read-only replica can continue to serve reads and minimize the impact of the failure on the business. In OceanBase, a SQL hint can also be used to read from a secondary replica, but the preferred solution is to add a read-only replica to the existing three replicas, placed in a separate read-only zone.

Read-only replicas do not accept writes and serve only weakly consistent reads. Weak-consistency reads can be enabled at the application session level, and the data read may lag behind the primary. Read-only replicas do not take part in the CLog majority vote, so they can be deployed in a remote IDC without affecting read/write performance. A special feature of OceanBase read-only replicas is that when both the primary and the secondary replicas become unavailable, the read-only replicas can still serve reads on their own, minimizing the impact of the failure.
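The effect of excluding read-only replicas from the vote can be shown with a small calculation: a commit completes when a majority of voting replicas have persisted the CLog, so commit latency equals the latency of the majority-th fastest voter. The latency figures below are made-up illustrative numbers, and the function is a hypothetical sketch.

```python
def commit_latency_ms(ack_latency_ms, voters):
    """ack_latency_ms: replica -> time (ms) for that replica to persist the CLog.
    voters: replicas that take part in the majority vote.

    A commit completes once a majority of voters have persisted the log, so
    its latency is that of the majority-th fastest voter. Non-voting
    read-only replicas never enter the calculation.
    """
    times = sorted(ack_latency_ms[r] for r in voters)
    return times[len(times) // 2]  # index of the majority-th fastest voter


if __name__ == "__main__":
    latency = {"primary": 0.5, "secondary_a": 1.0, "secondary_b": 2.0,
               "readonly_remote": 30.0}
    print(commit_latency_ms(latency, ["primary", "secondary_a", "secondary_b"]))  # 1.0
    # If the remote replica voted too (4 voters), the majority would become 3:
    print(commit_latency_ms(latency, list(latency)))  # 2.0
```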

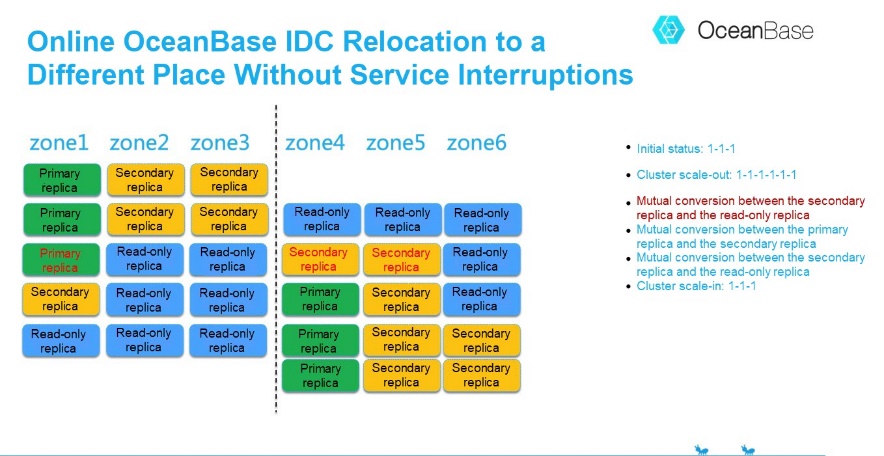

OceanBase's high availability also enables online data center relocation. Online means that access to the database is never interrupted, so no maintenance downtime is needed. OceanBase itself guarantees strong data consistency throughout the relocation without compromising its high availability, which removes the concern that relocating a database's data center might cause unavailability or data loss.

The key to online relocation is OceanBase's ability to add read-only replicas online and to switch roles between read-only and secondary replicas online. The relocation first expands the replicas from three to six, and then swaps the roles of secondary and read-only replicas one by one. Once all the secondary replicas have moved to the remote site, write performance degrades, so this stage should be carried out during off-peak hours, although the business does not need to stop. This stage is short, and the relocation quickly moves on to the next one. Finally, the OceanBase cluster scales back from six replicas to three.
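The three stages can be sketched as a sequence of replica layouts. This Python model is illustrative only: the node names are hypothetical, each role swap is treated as one atomic step, and moving the primary role last is an assumed order that the text does not specify. The point it checks is that the number of voting replicas stays at three throughout.

```python
def voters(layout):
    """Replicas that take part in the CLog majority vote."""
    return sorted(n for n, role in layout.items() if role != "readonly")


def relocation_plan(old, new):
    """old, new: three node names each, in the old and new data centers.
    Returns the replica layout (node -> role) after every step."""
    layout = {old[0]: "primary", old[1]: "secondary", old[2]: "secondary"}
    steps = [dict(layout)]
    for node in new:                 # stage 1: 3 -> 6 replicas (add read-only)
        layout[node] = "readonly"
    steps.append(dict(layout))
    for i in (1, 2, 0):              # stage 2: swap roles pair by pair,
        o, n = old[i], new[i]        # moving the primary role last
        layout[o], layout[n] = "readonly", layout[o]
        steps.append(dict(layout))
    for node in old:                 # stage 3: 6 -> 3 replicas
        del layout[node]
    steps.append(dict(layout))
    return steps


if __name__ == "__main__":
    for layout in relocation_plan(["old1", "old2", "old3"], ["new1", "new2", "new3"]):
        print(len(layout), voters(layout))
```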

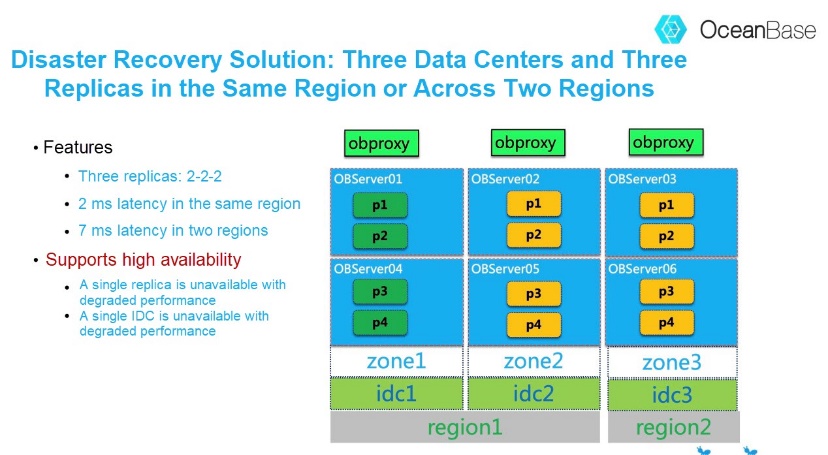

The characteristics of OceanBase make it a good choice for remote data center disaster recovery and active geo-redundancy solutions. Different customers might vary in data center characteristics and business requirements, and OceanBase can provide corresponding solutions in response to different requirements. There have been quite a number of articles about OceanBase data center disaster recovery solutions, so we will not go into details here.

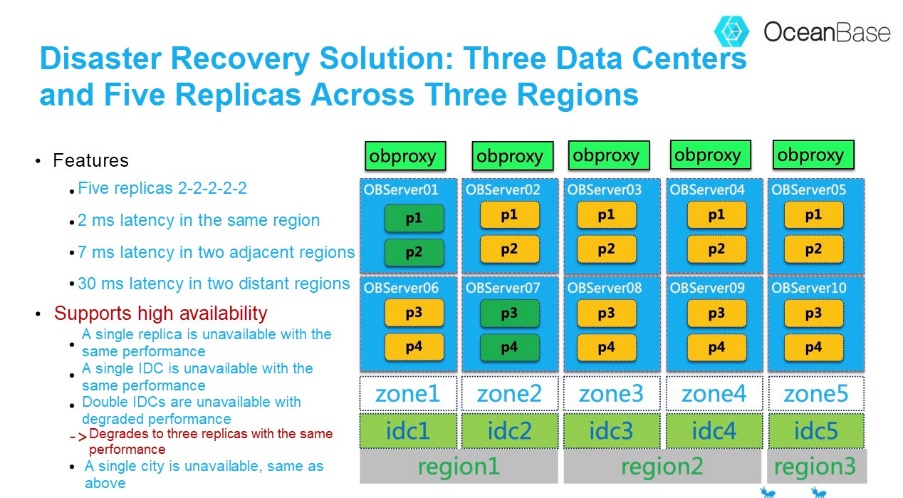

OceanBase resource management involves the concept of zones. A zone is a logical unit of disaster recovery. It can be a data center, a compartment of a data center, or a cabinet. This depends on the customer's specific data center conditions and deployment design.

In OceanBase, a region corresponds to a city. When two data centers are in the same region and the primary replica in one of them becomes unavailable, the secondary replica in the other zone of the same region is preferred as the new primary replica. This is a localization strategy.
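A minimal Python sketch of the localization preference, assuming hypothetical data center names and a simple up/down flag. It ignores the log-completeness and majority requirements that a real election must also satisfy; it only shows the ordering "same region first, then any healthy candidate".

```python
def pick_new_primary(failed, candidates, region_of, is_up):
    """Prefer a healthy candidate in the failed primary's region (city);
    otherwise fall back to any healthy candidate; None if nobody is up."""
    healthy = [c for c in candidates if c != failed and is_up.get(c, False)]
    local = [c for c in healthy if region_of[c] == region_of[failed]]
    pool = local or healthy
    return pool[0] if pool else None


if __name__ == "__main__":
    region_of = {"hz_idc1": "Hangzhou", "hz_idc2": "Hangzhou", "sh_idc1": "Shanghai"}
    up = {"hz_idc1": False, "hz_idc2": True, "sh_idc1": True}
    print(pick_new_primary("hz_idc1", ["sh_idc1", "hz_idc2"], region_of, up))  # hz_idc2
```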

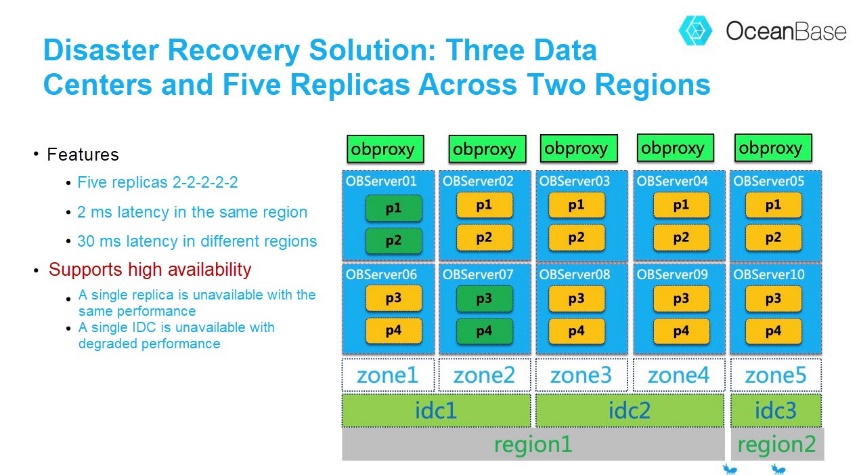

If you cannot tolerate performance degradation when a single replica fails, consider upgrading to the five-replica solution as follows.

If you cannot tolerate performance degradation when a single data center fails, consider upgrading to the five-replica solution as follows. Alternatively, you can degrade to the three-replica solution.
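The fault-tolerance arithmetic behind these options: with n voting replicas, a majority is ⌊n/2⌋ + 1, so ⌊(n−1)/2⌋ replicas can be lost. The Python sketch below computes this and checks whether losing any one data center still leaves a majority; the layouts are examples, not necessarily those shown in the figures.

```python
def majority(n: int) -> int:
    """Smallest number of replicas that forms a majority of n."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """How many replicas can be lost while a majority survives."""
    return (n - 1) // 2


def survives_dc_loss(layout) -> bool:
    """layout: replicas per data center, e.g. [2, 2, 1].
    True if losing any single data center still leaves a majority."""
    total = sum(layout)
    return all(total - lost >= majority(total) for lost in layout)


if __name__ == "__main__":
    for n in (3, 5):
        print(n, majority(n), tolerated_failures(n))
    # 3 2 1
    # 5 3 2
    print(survives_dc_loss([1, 1, 1]), survives_dc_loss([2, 2, 1]), survives_dc_loss([3, 2]))
    # True True False
```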

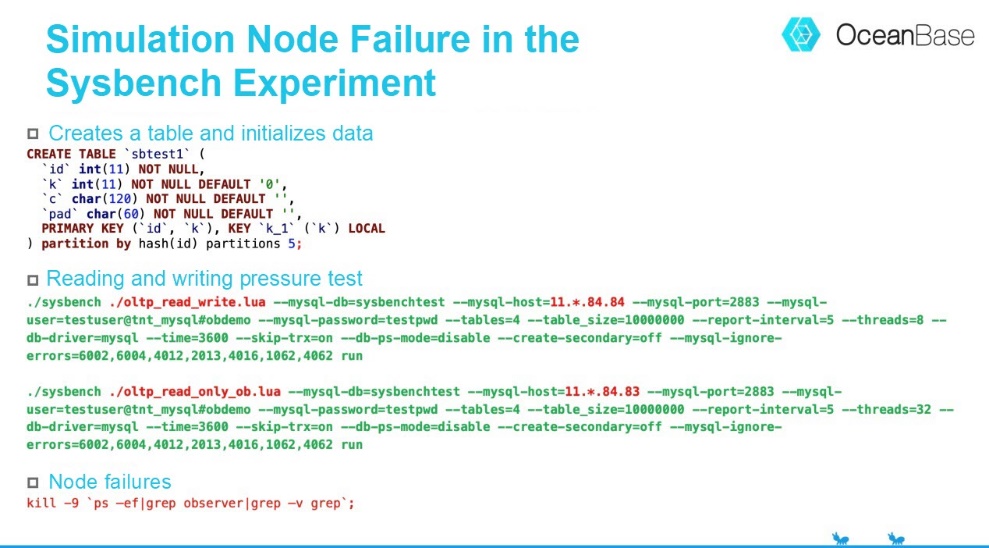

For testing, the OceanBase cluster needs at least three replicas in a 1-1-1 layout (one node in each of three zones); a 2-2-2 layout is recommended for more thorough testing. Read-only replicas are optional. OBProxy can be installed on an OBServer node or on the application server.

If you test with an existing application, it must catch database errors and retry automatically.

If there is no existing application, use sysbench to simulate the workload. One problem with sysbench is that it exits when the database reports an error, such as a primary key conflict, so some database error codes must be ignored.
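A Python sketch of the same idea for a custom load generator: count errors whose codes are on an ignore list and keep going, while any other error still stops the run. `DBError` is a hypothetical stand-in for your driver's exception type; 1062 is MySQL's duplicate-key error code (ER_DUP_ENTRY), and we assume OceanBase's MySQL mode reports primary key conflicts the same way. If you use sysbench itself, versions 1.0 and later provide a `--mysql-ignore-errors` option for this purpose.

```python
class DBError(Exception):
    """Hypothetical stand-in for a driver exception carrying an error code."""

    def __init__(self, code: int, message: str = ""):
        super().__init__(f"({code}) {message}")
        self.code = code


IGNORED_CODES = frozenset({1062})  # 1062: duplicate key (ER_DUP_ENTRY) in MySQL


def run_load(operations, ignored=IGNORED_CODES):
    """Run each callable; count ignored errors instead of aborting the run.
    Returns (succeeded, ignored_errors)."""
    succeeded = ignored_errors = 0
    for op in operations:
        try:
            op()
            succeeded += 1
        except DBError as err:
            if err.code not in ignored:
                raise  # unexpected errors still stop the test
            ignored_errors += 1
    return succeeded, ignored_errors


if __name__ == "__main__":
    def insert_duplicate():
        raise DBError(1062, "Duplicate entry '1' for key 'PRIMARY'")

    print(run_load([lambda: None, insert_duplicate, lambda: None]))  # (2, 1)
```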

Consider the following example.

To simulate a database failure, you can kill the observer process directly with kill -9. Alternatively, unplug the network cable or cut the power supply of the machine.
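For reference, `kill -9` sends SIGKILL, which the process cannot catch, so the observer gets no chance to shut down cleanly. The Python sketch below does the same with `os.kill`; `kill_hard` is a hypothetical helper, it is POSIX-only, and the demo kills a harmless stand-in process. On a real host you would first look up the observer's PID (for example with `pgrep observer`).

```python
import os
import signal
import subprocess
import sys


def kill_hard(pid: int) -> None:
    """Same effect as `kill -9 <pid>`: SIGKILL cannot be caught or ignored,
    so the process gets no chance to clean up, just like a crash."""
    os.kill(pid, signal.SIGKILL)


if __name__ == "__main__":
    # Demo on a harmless stand-in process rather than a real observer.
    victim = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
    kill_hard(victim.pid)
    print(victim.wait())  # a negative signal number (-9) means killed by SIGKILL
```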

OceanBase has its own operations and maintenance platform, OCP, which needs three additional machines for deployment because OCP keeps its metadata in its own OceanBase (OB) metadatabase.

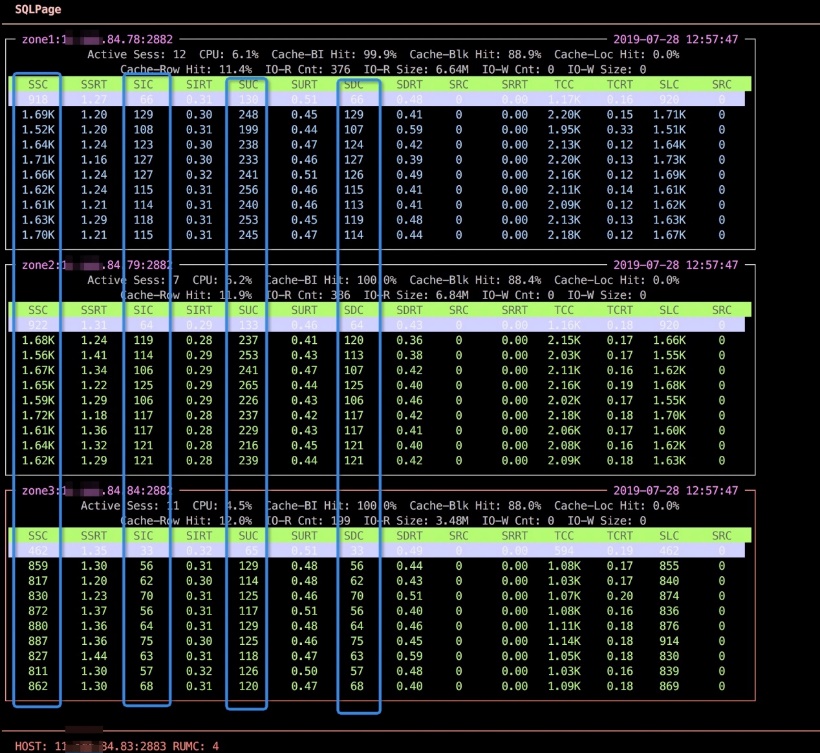

If OCP is not available, you can use Dooba, a script that ships with OceanBase and is typically located in /home/admin/oceanbase/bin/. It shows the requests handled by each node; a node that is receiving requests is serving accesses to the primary replicas it hosts.

The following figure shows that all three OBServer nodes handle read and write requests while the sysbench read/write test is running.

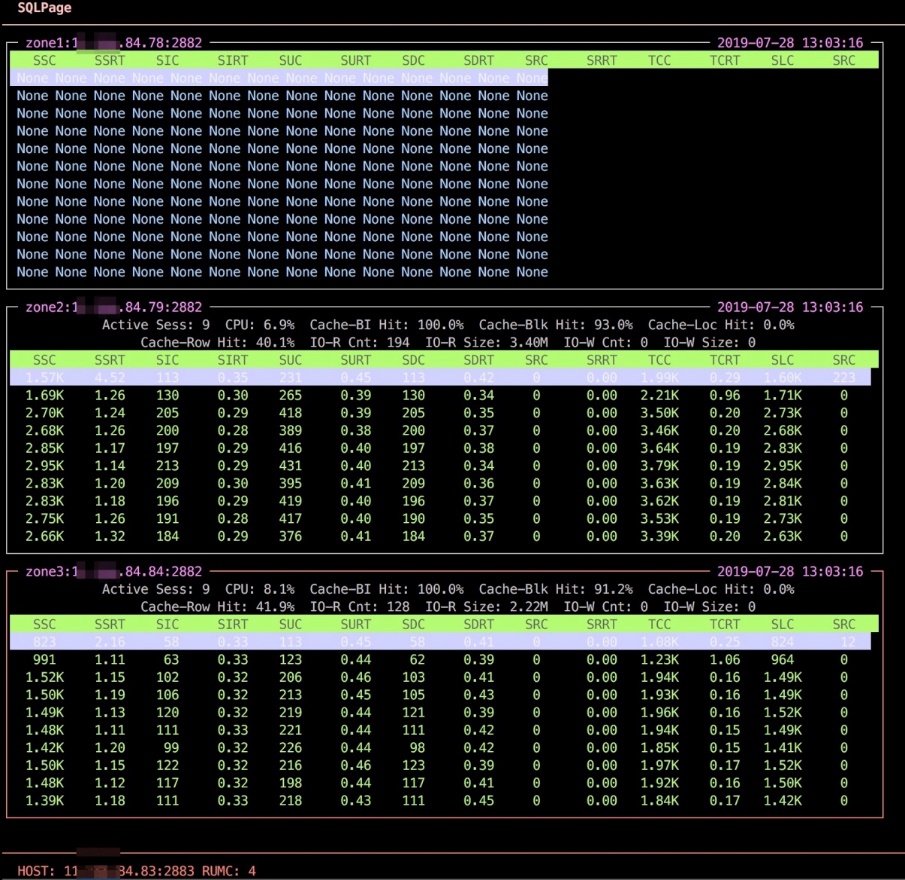

After the observer process on node 78 is killed, all of the read/write load shifts to the other two nodes.

OceanBase's multi-replica design (with an odd number of replicas) and its use of the Paxos protocol to synchronize transaction logs are the keys to its high-availability solution, enabling automatic failover (with an RTO of about 20 seconds) and zero data loss. The multi-replica design is also the source of many other characteristics, such as load balancing and active geo-redundancy.
