Batch operations (including Batch Insert, Batch Update, and Batch Delete) are common techniques in databases with several main functions:

However, for various reasons (mainly issues with the official MySQL driver), when using PolarDB-X and MySQL, there is often a situation where "I thought it was a Batch operation, but it still executed one by one."

This article will introduce how to achieve Batch operations in PolarDB-X (also applicable to MySQL).

In PolarDB-X (MySQL), there are two ways to commit a bunch of data to the database simultaneously. The one written in multiple SQL statements is called "multi-statement," and the one written in a single SQL statement is called "Batch".

For example, this is a multi-statement (essentially multiple SQL statements):

INSERT INTO t1 (c1,c2) VALUES (1,1);INSERT INTO t1 (c1,c2) VALUES (2,2);This is a Batch (essentially still a single SQL statement):

INSERT INTO t1 (c1,c2) VALUES (1,1),(2,2);The differences are as follows:

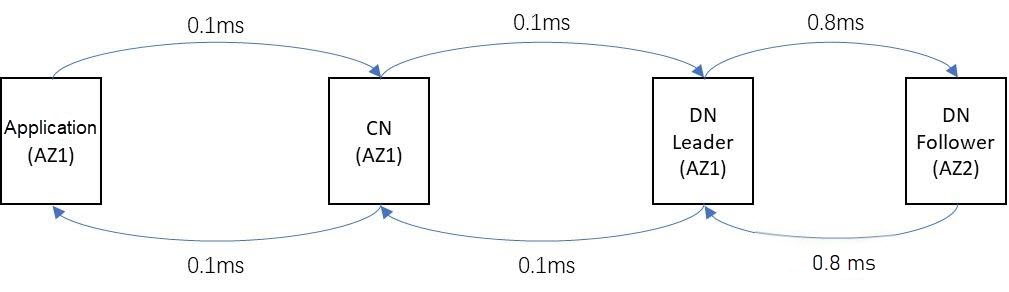

Typical Network Latency Between Components of PolarDB-X in Three Zones

Take a typical PolarDB-X deployed in three zones as an example. When inserting 1,000 records:

Both modes seem to commit data to the database at once, but in fact, the effects are very different. We should use Batch operations as much as possible instead of multi-statements.

However, MySQL drivers (such as mysql-connector-java), ORM frameworks (such as MyBatis), and similar tools can easily, if not carefully managed, convert what you believe to be Batch operations into multi-statement executions (or even single-statement executions). Below, we will introduce the correct methods for performing Batch operations.

The Batch Insert operation is straightforward and has only one format:

INSERT INTO t1 (c1,c2) VALUES (1,1),(2,2);There are several ways to implement it in Java:

First, use the addBatch() and executeBatch() interfaces of PreparedStatement (note, not addBatch(String sql), which is a multi-statement):

Connection conn = xxx;

PreparedStatement pstmt = conn.prepareStatement("INSERT INTO t1 (c1,c2) VALUES (?,?)");

pstmt.setInt(1, 1);

pstmt.setInt(2, 1);

pstmt.addBatch();

pstmt.setInt(1, 2);

pstmt.setInt(2, 2);

pstmt.addBatch();

pstmt.executeBatch();Does it seem simple? However, this method relies on mysql-connector-java to concatenate the SQL and parameters into a Batch format. This conversion only occurs if rewriteBatchedStatements=true (false by default) is set in the JDBC URL. Otherwise, mysql-connector-java will execute the two INSERT SQL statements as multi-statements.

Similarly, if frameworks such as MyBatis are used, and the SQL template defined in the mapper is in a similar format. In that case, this parameter also controls whether it executes as a Batch.

To avoid pitfalls, the second method is more recommended.

Second, applications (including MyBatis) directly construct the statement in Batch format. This way, the statement received by mysql-connector-java is already in Batch format, and it does not rely on the value of rewriteBatchedStatements. Take MyBatis as an example:

<insert id="batchInsert">

INSERT INTO t1 (c1, c2) VALUES

<foreach collection="list" item="item" separator=",">

(#{item.c1}, #{item.c2})

</foreach>

</insert>The most common error is to use the addBatch() and executeBatch() interfaces of PreparedStatement, for example:

Connection conn = xxx;

PreparedStatement pstmt = conn.prepareStatement("UPDATE t1 SET c1 = ? WHERE id = ?");

pstmt.setInt(1, 1);

pstmt.setInt(2, 1);

pstmt.addBatch();

pstmt.setInt(1, 2);

pstmt.setInt(2, 2);

pstmt.addBatch();

pstmt.executeBatch();mysql-connector-java does not support rewriting for UPDATE statements. Therefore, this form will definitely be executed as multi-statements (or even single statements: in the JDBC URL, if allowMultiQueries=true, it will be a multi-statement; if allowMultiQueries=false (false by default), it will be a single statement), and it is not a Batch operation.

Batch Update has two correct forms:

First, use CASE WHEN statements, for example:

UPDATE t1

SET

c1 = CASE id

WHEN 1 THEN 1

WHEN 2 THEN 2

END,

c2 = CASE id

WHEN 1 THEN 1

WHEN 2 THEN 2

END

WHERE id IN (1,2)Use MyBatis, for example:

<update id="batchUpdate">

UPDATE t1

SET

c1 = CASE id

<foreach collection="list" item="item" separator=" ">

WHEN #{item.id} THEN #{item.c1}

</foreach>

END,

c2 = CASE id

<foreach collection="list" item="item" separator=" ">

WHEN #{item.id} THEN #{item.c2}

</foreach>

END

WHERE id IN

<foreach collection="list" item="item" separator="," open="(" close=")">

#{item.id}

</foreach>

</update>The problems with this way are:

o The statement becomes lengthy and unattractive.

o The IN operation that uses the primary key in the WHERE clause can easily lead to full table scans.

Second, use INSERT ... ON DUPLICATE UPDATE (also known as upsert), for example:

INSERT INTO t1 (id,c1) VALUES (1,1), (2,2) ON DUPLICATE KEY UPDATE c1 = VALUES(c1)Use MyBatis, for example:

<update id="batchUpdate">

INSERT INTO t1 (c1, c2) VALUES

<foreach collection="list" item="item" separator=",">

(#{item.c1}, #{item.c2})

</foreach>

ON DUPLICATE KEY UPDATE c1 = VALUES(c1)

</update>This form is similar to Batch Insert.

Note:

o Columns that are not being updated do not need to be included in the VALUES or UPDATE clauses (even if they are not allowed to be NULL).

o For partitioned tables, include the partition key in the VALUES clause (though it is not required in the UPDATE clause).

o This method has certain limitations. It requires that the updated data must exist (if it does not exist, it will insert a new record, which may not be consistent with the business semantics).

o This form can also be implemented using rewriteBatchedStatements=true in Batch Insert, but it is not recommended.

This form of SQL is more refined and efficient.

Batch Delete is relatively straightforward, with only one method:

DELETE a FROM t1 a FORCE INDEX (PRIMARY) WHERE id IN (1,2)For partitioned tables, it is recommended to include the partition key:

DELETE a FROM t1 a FORCE INDEX (PRIMARY) WHERE (id,c2) IN ((1,1),(2,2))Note the following points:

• "single-table delete" does not support FORCE INDEX; "mult-table delete" supports FORCE INDEX.

• As long as you add an alias to the table, the DELETE operation belongs to the "multi-table delete".

No matter which form is used, it is recommended to determine whether Batch execution is used through the following methods:

[Infographic] Highlights | Database New Features in March 2025

ApsaraDB - December 12, 2025

ApsaraDB - September 19, 2022

ApsaraDB - March 26, 2025

ApsaraDB - August 23, 2024

ApsaraDB - April 16, 2025

ApsaraDB - April 10, 2024

Best Practices

Best Practices

Follow our step-by-step best practices guides to build your own business case.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn MoreMore Posts by ApsaraDB