
By Yao Jie (Lou Ge), Senior Technical Expert in Alibaba Container Platform Cluster Management.

For the 2019 Double 11 shopping promotion, all of Alibaba's core systems ran on the cloud and were completely cloud native. During the event, Alibaba's systems supported over half a million peak concurrent transactions and a total of 268.4 billion transactions without a hitch, demonstrating that cloud-native technologies based on Kubernetes, particularly when paired with Alibaba's in-house X-Dragon bare metal servers, can support super-large-scale business applications.

For the 2019 Double 11 shopping event, Alibaba's systems relied on five resource units distributed across three geographic locations. Of the five units, three ran entirely on the public cloud, specifically Alibaba Cloud, and the other two ran in a hybrid cloud environment. Among the most important components were the X-Dragon bare metal servers, whose current generation had already withstood the major traffic spikes of the midyear June 18 and autumn September 9 promotions on Alibaba's various e-commerce platforms, which convinced Alibaba engineers that the servers could handle Double 11 as well. As a result, all three units running on the cloud for the 2019 Double 11 Shopping Festival ran on infrastructure provided by X-Dragon bare metal servers. This included tens of thousands of Kubernetes clusters.

X-Dragon is the third generation of the virtualization technology behind Alibaba Cloud Elastic Compute Service (ECS), following Xen and KVM. It was developed by a dedicated team of engineers for Alibaba Cloud's sixth generation of ECS instances. The X-Dragon architecture provides the following four advantages:

In short, X-Dragon offloads the storage and network virtualization overhead to a field-programmable gate array (FPGA) hardware accelerator card called MOC, thus reducing the computing virtualization overhead of the original Alibaba Cloud ECS instances by approximately 8%. At the same time, the overall cost of X-Dragon bare metal servers can be maintained at a similar level given the advantages of manufacturing MOC cards at scale. The design of X-Dragon bare metal servers supports nested virtualization, leaving room for further technological innovation. It also makes it possible to use a variety of virtualization technologies, such as Kata and Firecracker.

Before Alibaba's large-scale migration to the X-Dragon architecture for this year's Double 11 Shopping Festival, internal tests showed that e-commerce containers performed 10% to 15% better on X-Dragon than on physical machines. This was a surprising finding, but it was borne out during the June 18 and September 9 promotions. Upon analysis, we found that the main reason for the improvement was that the virtualization overhead was offloaded to the MOC card. On top of that, X-Dragon had no CPU or memory virtualization overhead, and each container running on X-Dragon had a dedicated ENI. Together, these gave X-Dragon its performance advantage. At the same time, each container used an ESSD cloud disk with 1 million IOPS, which is 50 times that of an average SSD cloud disk and far surpasses the performance of typical non-cloud SATA and local SSD disks. These were key factors in our decision to move to X-Dragon for this year's Double 11 Shopping Festival.

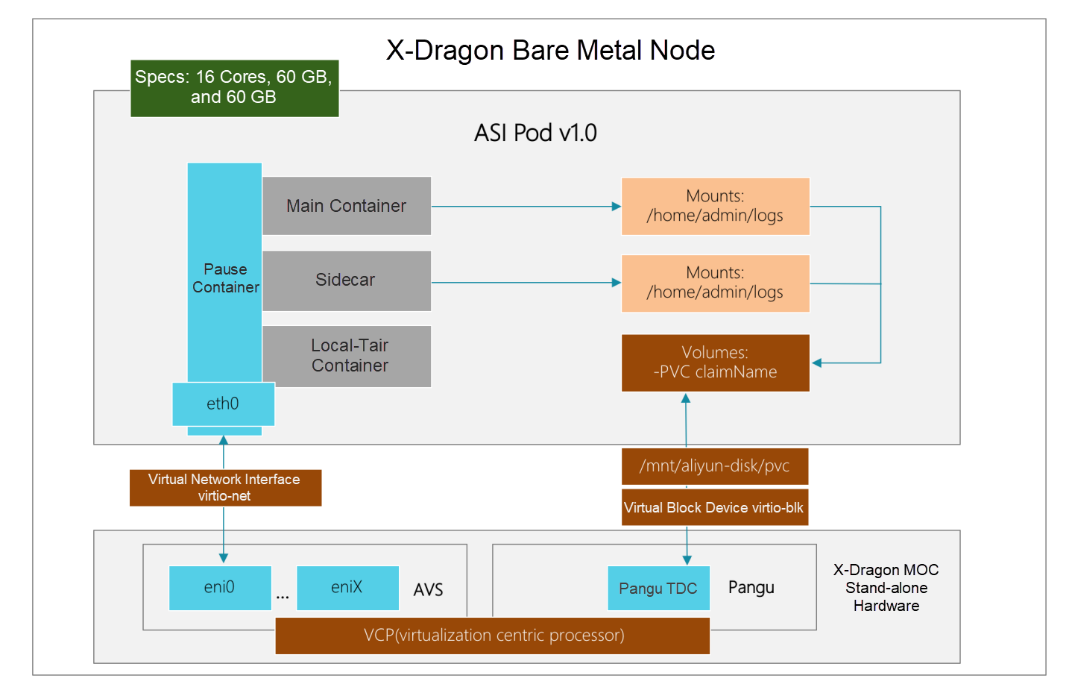

In the all-in-cloud era, enterprise IT architectures are being reshaped and transformed, with cloud native becoming the quickest way to realize the value of cloud computing. For last year's Double 11 Shopping Festival, Alibaba moved its core system to a cloud-native platform, which was built on X-Dragon bare metal servers, lightweight cloud-native containers, and a Kubernetes-compatible orchestration platform known as the Alibaba Serverless Infrastructure (ASI). Kubernetes pods integrate well with X-Dragon bare metal servers: the pods deliver business performance while the X-Dragon bare metal instances provide the underlying power.

The following diagram shows Kubernetes pods running on X-Dragon:

Tens of thousands of X-Dragon clusters were used to handle core transactions during the 2019 Double 11 Shopping Festival, which represented a significant challenge to our O&M personnel. Their tasks included instance specification selection for all cloud services, large-scale cluster elastic scaling, node resource assignment and control, and core metric collection and analysis. Beyond these, the work also involved infrastructure monitoring; downtime analysis; node label management; node reboot, locking, and release; node auto-repair; faulty node rotation; kernel patching and upgrades; and large-scale inspections.

This is a rather long list, so let's take a closer look below.

First up, we planned different instance specifications for different services, such as the ingress layer, core service system, middleware, database, and cache. Different services have different characteristics and require different hardware setups. Some require high-performance computing power, others require high packet throughput networking, yet others require high-performance disk read/write capabilities. These needed to be designed and planned beforehand so that insufficient resources would not impact service performance and stability. The specification of an instance includes its vCPU, memory, ENI quantity, cloud disk quantity, system disk size, data disk size, and packet throughput (PPS).

A typical instance hosting core e-commerce services has 96 cores and 527 GB of memory, with each Kubernetes pod using one elastic network interface (ENI) and one elastic block storage (EBS) instance. Therefore, the number of ENIs and EBSs allowed per instance is crucial. To handle the workload, X-Dragon raised these limits to 64 and 40, respectively, which avoids wasting CPU and memory resources. The specification varies with different services. For example, an X-Dragon instance in the ingress layer requires high network throughput, which means high PPS for the MOCs. A typical X-Dragon instance has 4 cores assigned to MOC networking and 8 cores assigned to MOC storage. For these ingress instances, to avoid high CPU usage by the AVS network software switches, we instead assigned 6 cores to networking and 6 cores to storage. In addition, instances in the hybrid units needed NVMe local disks for offline tasks. Using different instance specifications for different services reduces cost and improves performance and stability.
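To illustrate why the ENI and EBS limits matter, here is a minimal sketch of how the number of pods per instance is bounded by the tightest resource. The 96 cores, 527 GB, and the limits of 64 and 40 come from the text (reading them as the ENI and EBS limits in the order they are listed, which is an interpretation). The pod size of 4 vCPUs and 16 GB, the low limit of 8, and all class and function names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSpec:
    """Subset of the instance specification fields listed in the text."""
    vcpus: int
    memory_gb: int
    max_enis: int          # ENI limit per instance
    max_ebs_volumes: int   # EBS (cloud disk) limit per instance


@dataclass(frozen=True)
class PodRequest:
    """Hypothetical per-pod resource request (not from the article)."""
    vcpus: int
    memory_gb: int


def max_pods(spec: InstanceSpec, pod: PodRequest) -> int:
    """Each pod needs one ENI and one EBS volume, so the pod count is
    bounded by the tightest of CPU, memory, ENI, and EBS capacity."""
    return min(
        spec.vcpus // pod.vcpus,
        spec.memory_gb // pod.memory_gb,
        spec.max_enis,
        spec.max_ebs_volumes,
    )


pod = PodRequest(vcpus=4, memory_gb=16)  # assumed pod size
core = InstanceSpec(vcpus=96, memory_gb=527, max_enis=64, max_ebs_volumes=40)
# Assumed low limits, to show how they strand CPU and memory.
constrained = InstanceSpec(vcpus=96, memory_gb=527, max_enis=8, max_ebs_volumes=8)

print(max_pods(core, pod))         # CPU is the binding limit
print(max_pods(constrained, pod))  # ENI/EBS limit is the binding limit
```

With the higher limits, CPU becomes the binding constraint, so no cores or memory sit idle merely because the instance ran out of network interfaces or disks.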

Alibaba's annual Double 11 shopping promotion brings massive traffic peaks. To cope with the traffic, every year we need massive volumes of computing resources. However, it would be wasteful to retain these resources for daily use. Therefore, we created one cluster group for daily operations and another for promotional events. Several weeks prior to Double 11, we started expanding the cluster group for promotional events through our elastic scaling capability: we applied for a large number of X-Dragon instances and scaled out the Kubernetes pods to handle the upcoming transactions. Immediately after the Double 11 Shopping Festival, the pod containers in the promotional events cluster group were removed in batches, and the X-Dragon instances were released. Only the cluster group for daily operations was retained. This method allowed us to significantly reduce the cost of promotional events. After the migration to the cloud, the time from applying for an X-Dragon instance to its creation was reduced from hours or days to mere minutes. Now, we can apply for thousands of X-Dragon instances, including their computing, network, and storage resources, in five minutes and see them created and imported into Kubernetes clusters in 10 minutes. This leap in cluster creation efficiency will serve as the foundation for future elastic resource scaling.

There are three core metrics used to measure the overall health of a large scale X-Dragon cluster: uptime, downtime, and rate of successful orchestration.

Downtime of an X-Dragon bare metal instance is usually caused by a hardware fault or kernel issue. By collecting statistics on the daily downtime trends and analyzing the root cause of downtime, we can quantify the stability of the cluster to avoid the risk of large-scale downtime. The rate of successful orchestration is the most important of the three metrics. Containers in a cluster may fail to be orchestrated to an instance for many reasons, including a load greater than 1,000, excessive disk pressure, a missing Docker process, or a missing Kubelet process. Instances with these issues are marked as NotReady in a Kubernetes cluster. We used X-Dragon's downtime reboot and cold migration features to improve failover efficiency and were able to keep the rate of successful orchestration above 98% during this year's Double 11 Shopping Festival. Since Double 11, we have been able to keep X-Dragon instance downtime below 0.2%.
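The checks described above can be sketched roughly as follows. The load threshold of 1000 and the four failure reasons come from the text. The `NodeHealth` fields, the function names, and the definition of the success rate as succeeded attempts divided by total attempts are assumptions for illustration, not the actual implementation.

```python
from dataclasses import dataclass
from typing import List

LOAD_LIMIT = 1000  # load threshold quoted in the text


@dataclass
class NodeHealth:
    """Hypothetical snapshot of the signals named in the text."""
    load: float
    disk_pressure: bool
    docker_running: bool
    kubelet_running: bool


def not_ready_reasons(node: NodeHealth) -> List[str]:
    """Return every reason the node cannot accept containers.
    An empty list means the node is schedulable."""
    reasons = []
    if node.load > LOAD_LIMIT:
        reasons.append("load too high")
    if node.disk_pressure:
        reasons.append("disk pressure")
    if not node.docker_running:
        reasons.append("docker process missing")
    if not node.kubelet_running:
        reasons.append("kubelet process missing")
    return reasons


def orchestration_success_rate(succeeded: int, attempted: int) -> float:
    """Assumed definition: successful placements / attempted placements."""
    if attempted <= 0:
        raise ValueError("attempted must be positive")
    return succeeded / attempted


overloaded = NodeHealth(load=1200.0, disk_pressure=False,
                        docker_running=True, kubelet_running=False)
print(not_ready_reasons(overloaded))
print(orchestration_success_rate(985, 1000) >= 0.98)
```

Returning all reasons rather than the first one makes it easier to aggregate which failure mode dominates across a large cluster.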

As the cluster size increases, management becomes more difficult. For example, how can we filter out all the instances in the cn-shanghai region with the type ecs.ebmc6-inc.26xlarge? To facilitate such operations, we use predefined labels to manage resources. In the Kubernetes architecture, you can define labels to manage instances. Each label is a key-value pair. You can use the standard Kubernetes API to assign labels to X-Dragon instances. For example, we used sigma.ali/machine-model: ecs.ebmc6-inc.26xlarge to represent the instance type and sigma.ali/ecs-region-id: cn-shanghai to represent the region. With this label management system, we can quickly locate the instances we want from tens of thousands of X-Dragon instances and perform routine O&M operations such as batch canary releases, batch restarts, and batch releases.
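As a small sketch of label-based filtering, the snippet below builds an equality-based Kubernetes label selector string from the two labels mentioned above and applies the same matching logic to an in-memory inventory. The label keys and values come from the text. The node names, the extra instance type, the second region, and the helper functions are hypothetical.

```python
from typing import Dict, List

Labels = Dict[str, str]


def build_label_selector(wanted: Labels) -> str:
    """Build an equality-based selector such as 'k1=v1,k2=v2'."""
    return ",".join(f"{key}={value}" for key, value in sorted(wanted.items()))


def select_nodes(nodes: Dict[str, Labels], wanted: Labels) -> List[str]:
    """Return the names of nodes whose labels include every wanted pair."""
    return sorted(
        name
        for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in wanted.items())
    )


wanted = {
    "sigma.ali/machine-model": "ecs.ebmc6-inc.26xlarge",
    "sigma.ali/ecs-region-id": "cn-shanghai",
}

# Hypothetical inventory; node names and non-matching values are made up.
inventory = {
    "node-a": {"sigma.ali/machine-model": "ecs.ebmc6-inc.26xlarge",
               "sigma.ali/ecs-region-id": "cn-shanghai"},
    "node-b": {"sigma.ali/machine-model": "ecs.ebmc6-inc.26xlarge",
               "sigma.ali/ecs-region-id": "cn-hangzhou"},
    "node-c": {"sigma.ali/machine-model": "example.other-type",
               "sigma.ali/ecs-region-id": "cn-shanghai"},
}

print(build_label_selector(wanted))
print(select_nodes(inventory, wanted))
```

The selector string is in the form accepted by `kubectl get nodes -l <selector>` and by the label selector parameter of the Kubernetes list APIs, so the same labels can drive both ad hoc queries and batch O&M tooling.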

Downtime is inevitable for large-scale clusters. That's just a fact. It's how you collect downtime statistics and analyze them that makes a difference. Many things can cause downtime, including hardware faults and kernel bugs. Once downtime occurs, services are interrupted and some stateful applications are also affected. We use SSH and port ping inspection to monitor the downtime of the resource pool and collect historical downtime trends. Alarms are triggered if there is a sudden uptick in downtime. At the same time, we conduct association analysis on the faulty instances. For example, we look at their data center, unit, and group to find out if the downtime was caused by a specific data center. Or, maybe it is related to certain hardware specifications, such as the model or CPU configuration. Downtime can even be related to software problems, such as the operating system or kernel version.
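The monitoring flow above might look something like the following sketch: a TCP port check, a simple alarm on a sudden uptick, and an association analysis that counts which attribute value the faulty instances share most often. The alarm rule (today's count exceeding three times the historical mean), the attribute names, and all sample values are assumptions for illustration; the text does not specify the actual thresholds or data model.

```python
import socket
from collections import Counter
from statistics import mean
from typing import Dict, List, Optional, Tuple


def port_is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Port ping: try to open a TCP connection (for example, to SSH on 22)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def is_downtime_spike(history: List[int], today: int, factor: float = 3.0) -> bool:
    """Assumed alarm rule: today's downtime count exceeds factor x the mean."""
    if not history:
        return False
    return today > factor * mean(history)


def most_common_attribute(
    faulty: List[Dict[str, str]], attribute: str
) -> Optional[Tuple[str, int]]:
    """Association analysis: which value of an attribute (data center,
    hardware model, kernel version, ...) do faulty instances share most?"""
    counts = Counter(node[attribute] for node in faulty)
    top = counts.most_common(1)
    return top[0] if top else None


# Hypothetical faulty instances; all values are made up.
faulty_nodes = [
    {"data_center": "dc-a", "kernel": "kernel-x"},
    {"data_center": "dc-a", "kernel": "kernel-y"},
    {"data_center": "dc-b", "kernel": "kernel-x"},
    {"data_center": "dc-a", "kernel": "kernel-x"},
]

print(is_downtime_spike(history=[2, 3, 1, 2], today=9))
print(most_common_attribute(faulty_nodes, "data_center"))
```

Running the same count over several attributes in turn gives a quick first indication of whether a spike is tied to a data center, a hardware model, or a kernel version.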

The root causes of downtime are analyzed to see if the problem is due to hardware faults or staff mistakes. kdump generates a vmcore when the kernel crashes. We can extract information from the vmcore and analyze it to see if the downtime is associated with a specific type of vmcore. Kernel logs such as MCE logs and soft lockup error messages are good places to check whether the system was functioning abnormally before or after downtime.

Such issues are then submitted to the kernel team. Specialists on the team analyze the vmcore files and come up with hotfixes if the downtime is caused by a bug in the kernel.

Running large-scale X-Dragon clusters means you are going to encounter hardware and software faults. The more complex the technology stack, the more complicated the faults will be. It is unrealistic to simply rely on manual work to solve these issues. Automation is needed. Our 1-5-10 node recovery technology is able to discover there is a problem in 1 minute, locate the sources of the problem in 5 minutes, and recover the faulty nodes in 10 minutes. Some of the typical causes of downtime for X-Dragon instances include host machine downtime, high host load, full disk, too many open files, and unavailable core services such as Kubelet, Pouch, or Star-Agent. These problems can usually be solved by recovery operations such as machine reboot, container eviction, software reboot, and disk auto-clean. Over 80% of issues can be solved by using machine reboot and evicting the container to other nodes. In addition, we can automatically repair the NC-side system or hardware faults by monitoring two system events, X-Dragon Reboot and Redeploy.
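A rule-based auto-repair loop of this kind could be sketched as below. The fault types, the recovery operations, and the 1-5-10 targets come from the text. The specific mapping from each fault to its actions is an assumption made for illustration, as are all names and the choice to measure each stage in seconds from the moment the fault occurs.

```python
from enum import Enum, auto
from typing import Dict, List


class Fault(Enum):
    HOST_DOWN = auto()
    HIGH_LOAD = auto()
    DISK_FULL = auto()
    TOO_MANY_OPEN_FILES = auto()
    CORE_SERVICE_DOWN = auto()   # e.g. Kubelet, Pouch, or Star-Agent


class Action(Enum):
    MACHINE_REBOOT = auto()
    CONTAINER_EVICTION = auto()
    SOFTWARE_REBOOT = auto()
    DISK_AUTO_CLEAN = auto()


# Hypothetical fault-to-action mapping; the article does not specify it.
RECOVERY_PLAN: Dict[Fault, List[Action]] = {
    Fault.HOST_DOWN: [Action.CONTAINER_EVICTION, Action.MACHINE_REBOOT],
    Fault.HIGH_LOAD: [Action.CONTAINER_EVICTION],
    Fault.DISK_FULL: [Action.DISK_AUTO_CLEAN],
    Fault.TOO_MANY_OPEN_FILES: [Action.SOFTWARE_REBOOT],
    Fault.CORE_SERVICE_DOWN: [Action.SOFTWARE_REBOOT, Action.MACHINE_REBOOT],
}


def plan_recovery(fault: Fault) -> List[Action]:
    """Return the ordered recovery actions to try for a detected fault."""
    return list(RECOVERY_PLAN[fault])


def meets_1_5_10(detect_s: float, locate_s: float, recover_s: float) -> bool:
    """Check the 1-5-10 targets: detect within 1 minute, locate within
    5 minutes, recover within 10 minutes (each measured from fault onset)."""
    return detect_s <= 60 and locate_s <= 300 and recover_s <= 600


print([action.name for action in plan_recovery(Fault.DISK_FULL)])
print(meets_1_5_10(detect_s=45, locate_s=240, recover_s=580))
```

Keeping the plan as data rather than branching code makes it straightforward to review and extend as new fault types are observed.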

For this year's Double 11 Shopping Festival, all of Alibaba's infrastructure will run on a cloud-native architecture based on Kubernetes, and the next-generation hybrid deployment architecture based on runV security containers will be implemented on a large scale. As such, we will take lightweight container architecture to the next level.

In this context, Kubernetes node management will evolve toward pooled management across the Alibaba economy by providing cloud inventory management, improving node elasticity, and using resources during off-peak hours. This will further reduce machine retention time and significantly reduce the associated costs.

We will use a core engine based on Kubernetes Machine-Operator to provide highly flexible node management and orchestration capabilities. In addition, complete system data collection and analysis capabilities will give us the ability to monitor, analyze, and perform kernel diagnostics from end to end. This will improve the stability of container infrastructure and provide the technical foundation for the evolution of lightweight containers and immutable infrastructure architectures.
