By Huiling Zhou (Zhule)

This article is a sharing on Open Data Processing Service (ODPS) offline development. It lists some important experiences and conclusions accumulated over the years, especially the skills and configuration methods in the daily data processing and scheduling of the algorithm. As for the specific reasons, we recommend visiting the Alibaba Cloud official website to check the working principles of the underlying map reduce and data flow.

Reference: https://www.alibabacloud.com/help/en/maxcompute/latest/overview-of-maxcompute-sql

Input data: two test tables zhule_a and zhule_b. The specific schema and data are listed below (to illustrate the problems caused by duplicate data, a duplicate key=2, ds='20220930' data will be inserted into tables a and b, respectively):

read zhule_a;

| key | ds |

| 1 | 20220930 |

| 2 | 20220930 |

| 1 | 20221001 |

| 2 | 20221001 |

| 3 | 20221001 |

read zhule_b;

| key | ds |

| 2 | 20220930 |

| 3 | 20220930 |

| 1 | 20221001 |

| 2 | 20221001 |

| 3 | 20221001 |

| 4 | 20221001 |

| 5 | 20221001 |

Usage: Returns the rows that have matching column values in the left table and the right table based on the Join condition. In a word, find the common parts of the two tables and pay attention to the performance optimization under the Cartesian product.

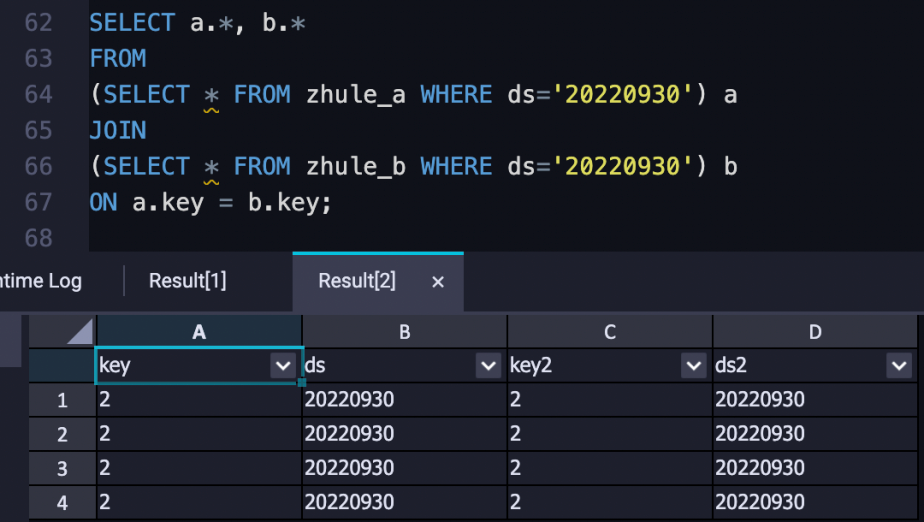

1. Select the subset of each table and then join:

-- better way to perform join, select small range of data first.

SELECT A.*, B.*

FROM

(SELECT * FROM A WHERE ds='20180101') A

JOIN

(SELECT * FROM B WHERE ds='20180101') B

ON a.key = b.key;Note: Before performing Join operations, be sure to check whether there is duplicate data in the left and right tables. Otherwise, the final duplicate results will increase by the number of Cartesian products. For example, if there are two duplicate data in each left and right tables, there will be as many as four duplicate data after Join.

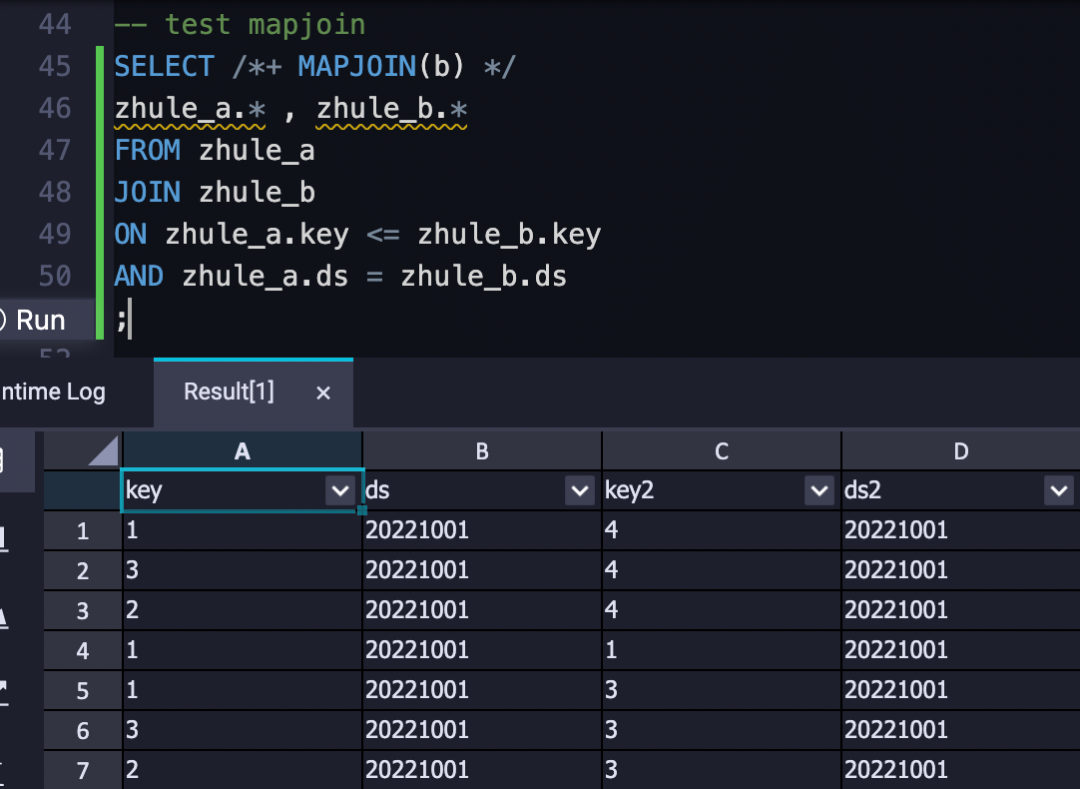

2. In the best case, large tables join small tables, and then mapjoin is used to implement.

Official explanation: In the map stage, MAPJOIN loads all data in the specified tables into the memory of the program that performs the JOIN operation. The tables specified for MAPJOIN must be small tables, and the total memory occupied by the table data cannot exceed 512 MB.

Limits on JOIN operations in MAPJOIN:

SELECT /*+ MAPJOIN(b) */

a.*

FROM test_a a

JOIN test_b b

ON a.user_key = b.user_key

;

// Add a mark before the sql statement to say that this is mapjoin, and write the alias of the small table in parentheses.An Interesting Point:

In addition to normal equality, mapjoin supports inequality, as shown the following example:

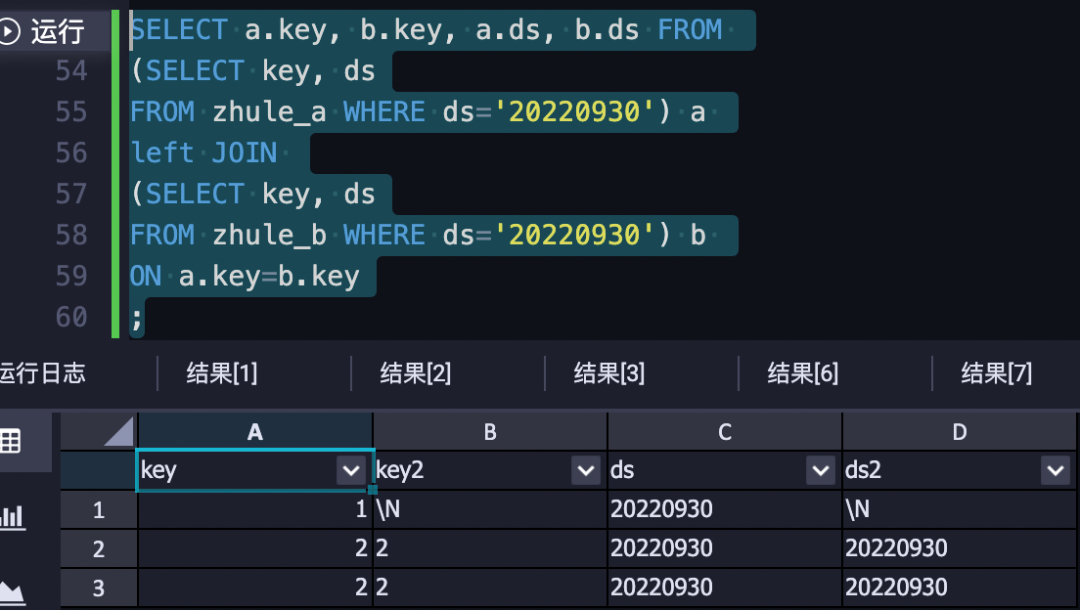

Usage: A LEFT JOIN operation takes the Cartesian product of the rows in Table A and Table B and returns all the rows of Table A and rows in Table B that meet the join condition. If the Join condition finds no matching rows in Table B for a row in Table A, the row in Table A is returned in the result set with NULL values in each column from Table B. In a word, return all records in the left table A and data in the right table B that meet the association conditions. When the rows in the right table do not meet the association conditions, NULL values are returned.

Usage: A LEFT SEMI JOIN operation only returns the rows in Table A that have a matching row in Table B. For a piece of data in the left table A, if there are rows in the right table B that meet the association conditions, the left table A will be returned. Otherwise, it will not be returned.

employee (2 columns - e_id and e_name)

10, Tom

20, Jerry

30, Emily

employee_department_mapping (2 columns - e_id and d_name)

10, IT

10, Sales

20, HR

-- inner join results

SELECT e.e_id, e.e_name, d.d_name FROM

employee e INNER JOIN employee_department_mapping d

on e.e_id = d.e_id

-- results

10, Tom, IT

10, Tom, Sales

20, Jerry, HR

-- left semi join results

SELECT e.e_id, e.e_name, d.d_name FROM

employee e LEFT SEMI JOIN employee_department_mapping d

on e.e_id = d.e_id

-- results

10, Tom, IT

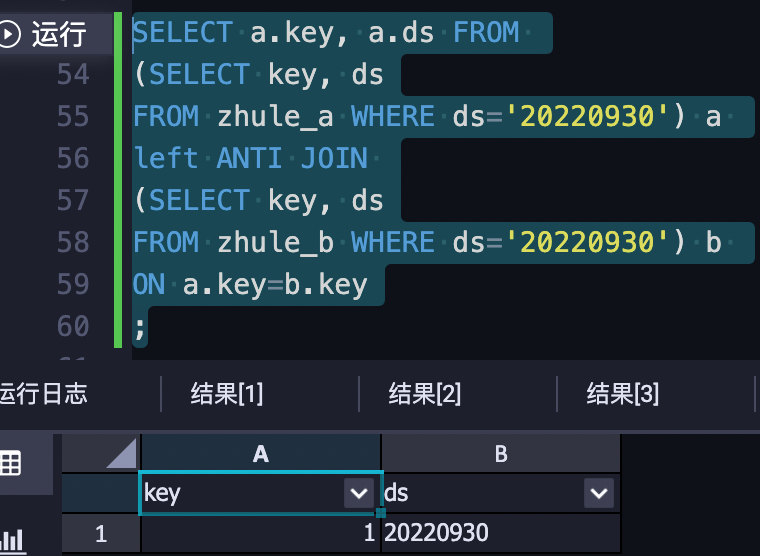

20, Jerry, HRUsage: A LEFT ANTI JOIN operation only returns the rows in Table A that have no matching rows in Table B. In a word, for a piece of data in the left table A, if there is no data in the right table B that meets the association conditions, the left table A is returned.

Usage: A FULL JOIN operation takes the Cartesian product of the rows in Table A and Table B and returns all the rows in Table A and Table B, whether the Join condition is met or not. In the result set, NULL values are returned in the columns from the table that lacks a matching row in the other table. In a word, return all records of the left table A and the right table B. For data that does not meet the association conditions, return NULL on the other side that is not associated.

It is useful to update the latest downstream data with addition, deletion, and modification operations, but few people know about it.

For example, today_feat is the new feature table computed today, and yest_feat is the feature of the previous partition.

SELECT COALESCE(a.main_image_url,b.main_image_url) AS main_image_url

,COALESCE(a.embedding,b.embedding) AS embedding

FROM today_feat a

FULL JOIN yest_feat b

ON a.main_image_url = b.main_image_urlThe effect of full Join is shown below, which meets the update of new, old, and updated features and is smoothly coupled with COALESCE.

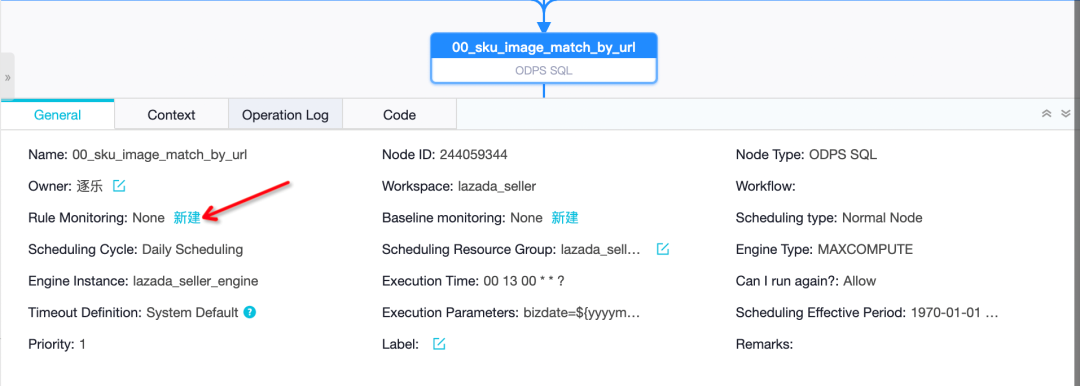

ODPS's update can only take effect in developing dev space. Setting priorities can improve the execution priority of queuing tasks to a certain extent. However, it will not take effect in the current online formal environment. We recommend optimizing your health scores and setting baselines for important online scheduling tasks to ensure the timeliness of output.

Official guidance changes the input data amount of each Map worker, which is the split size of the input file. You can use this property to indirectly control the number of workers at each Map stage (default value: 256, unit: MB). In a word, if there are many small files, you can increase the value of split.size, which ensures that it is easier to apply to Mapper under limited resources and improves execution efficiency. If you have abundant resources and want more Mapper resources, reduce the value of split.size to apply for more Mappers and improve execution efficiency. Deal with it in light of the circumstances.

For example:

-- original sql

CREATE TABLE if not EXISTS tmp_zhl_test LIFECYCLE 1 AS

SELECT sig, venture, seller_id, COUNT(product_id) as cnt

FROM sku_main_image_sig

WHERE LENGTH(sig) > 10 --some bad cases may have weird sigs like '#NEXT#'

GROUP BY sig, venture, seller_id

HAVING cnt>2

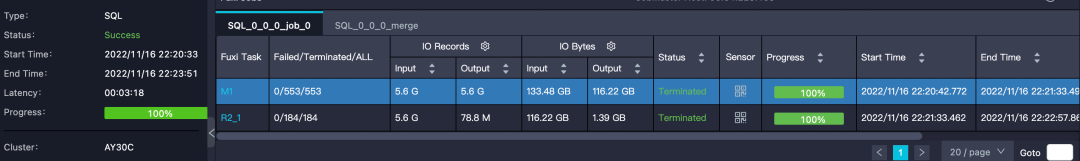

;If it is the default setting and 553 mappers and 184 reducers are allocated, it takes about 3m18s.

Under the condition of abundant resources, we can set odps.sql.mapper.split.size=64 to apply for more Mappers to process the parts of the file. At the same time, more reducers can be allocated. The execution time of the same SQL code is reduced to 2m34s.

Similarly, if your data volume is large with small pieces of data and limited space resources (after all, resource control is relatively strict now), instead of waiting for 9999 Mappers to be allocated, you can try to set odps.sql.mapper.split.size=2048 (or even larger) to reduce the number of Mappers to be allocated so the task can be executed quickly.

Display the number of reducers set (the default value is 0 to 4000). If it is not set, it will be dynamically allocated according to the task. After setting, the number of reducers set will be allocated. In the same example, when we set odps.sql.reducer.instances=1000, the duration becomes 2m.

Set the memory of each Map/Reducer worker (the default value is 1024, which can be set to a value between 256 and 12288). Generally, we do not need to set this value specially, but when the task reports an error and says, data exceeds the memory, we can set this option accordingly.

In this part, I would like to discuss how to install the required third-party libraries (such as numpy, opencv, etc.) in Python UDF of ODPS and the effective solutions if there is version incompatibility between different dependent libraries.

def include_package_path(res_name, lib_name):

archive_files = get_cache_archive(res_name)

dir_names = sorted([os.path.dirname(os.path.normpath(f.name)) for f in archive_files

if '.dist_info' not in f.name], key=lambda v: len(v))

for dir_name in dir_names:

if dir_name.endswith(lib_name):

sys.path.insert(0, os.path.dirname(dir_name))

break

elif os.path.exists(os.path.join(dir_name, lib_name + '.py')):

sys.path.insert(0, os.path.abspath(dir_name))

break

class PostProcess(BaseUDTF):

def __init__(self):

include_package_path('opencv_python-3.4.0.zip','cv2')

include_package_path('numpy-1.16.6.zip','numpy')

set odps.sql.python.version = cp37; --use python 3.7, default is 2.x version

set odps.isolation.session.enable = true;Sometimes, when using multiple libraries, conflicts between different versions may occur (for example, when using the opencv library, if the corresponding numpy version is incompatible, an error will be reported). Therefore, before uploading resource packages of multiple libraries, you need to confirm the compatibility between versions. We do not recommend trying different versions. Confirm the version locally before uploading. The feasible steps are listed below:

--exclude input or output tables (especially those tmp tables)

--@exclude_input=lsiqg_iqc_sku_product_detection_result

--@exclude_output=lsmp_sku_image_url_bizdate

-- include input or output tables (especially those separate venture tables)

--@extra_input=lsiqg_iqc_sku_product_detection_result

--@extra_output=lsmp_sku_image_url_bizdateINSERT OVERWRITE TABLE sku_main_image_sig PARTITION (ds = '${bizdate}',venture)

SELECT id

,image_url

,venture

FROM (

SELECT id

,image_url

,'ID' AS venture

FROM auction_image_id

WHERE ds = MAX_PT('auction_image_id')

UNION

SELECT id

,image_url

,'PH' AS venture

FROM auction_image_ph

WHERE ds = MAX_PT('auction_image_ph')

UNION

SELECT id

,image_url

,'VN' AS venture

FROM auction_image_vn

WHERE ds = MAX_PT('auction_image_vn')

UNION

SELECT id

,image_url

,'SG' AS venture

FROM auction_image_sg

WHERE ds = MAX_PT('auction_image_sg')

UNION

SELECT id

,image_url

,'MY' AS venture

FROM auction_image_my

WHERE ds = MAX_PT('auction_image_my')

UNION

SELECT id

,image_url

,'TH' AS venture

FROM auction_image_th

WHERE ds = MAX_PT('auction_image_th')

) union_table

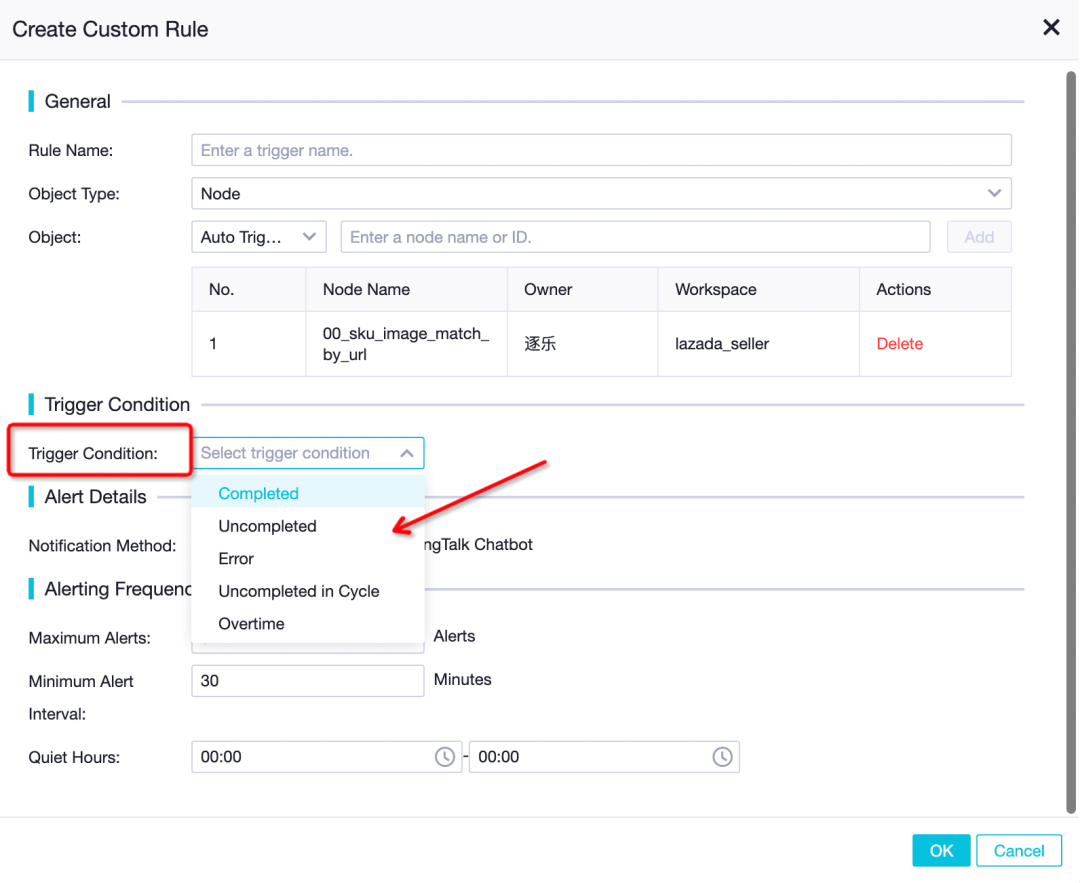

;Simple tasks can be set in the task center details.

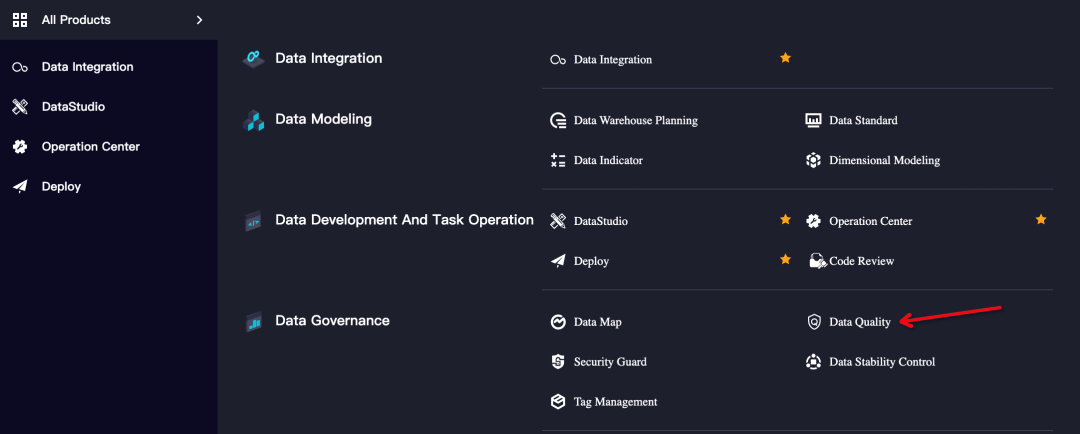

You can configure the DQC platform for more detailed data-level monitoring (such as no data output, data fluctuation, and data maximum/minimum monitoring).

Data development has a process from unfamiliarity to familiarity. If you write enough, you will find all kinds of useful tools/functions and all kinds of pitfalls. My experience from data acquisition to data development to data monitoring is listed below:

Enjoy Data Engineering!!

How Alibaba Deploys Green Technologies Throughout Its Offices

Alibaba Cloud Debuts Its Sustainability Solution at i Light Singapore 2023

1,346 posts | 475 followers

FollowAlibaba Cloud Community - June 2, 2023

Alibaba Cloud MaxCompute - January 29, 2024

Alibaba Cloud MaxCompute - February 18, 2024

Alibaba Cloud MaxCompute - March 4, 2019

Alibaba Cloud Community - January 8, 2024

Alibaba Cloud MaxCompute - January 15, 2019

1,346 posts | 475 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn More Financial Services Solutions

Financial Services Solutions

Alibaba Cloud equips financial services providers with professional solutions with high scalability and high availability features.

Learn MoreMore Posts by Alibaba Cloud Community