By Anish, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

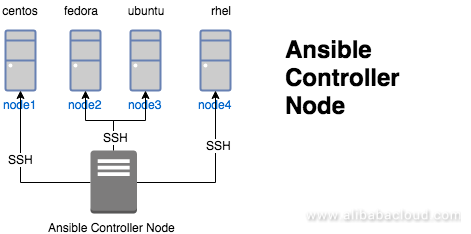

In this article, we will learn how to manage users with Ansible in an Alibaba Cloud environment. We will start by defining the architecture, as shown in the diagram above.

The diagram shows an Ansible controller node managing various nodes over SSH. Building on this setup, we will create playbooks that manage different users, along with their sudo privileges, on the target nodes.

To follow this tutorial, you need Alibaba Cloud Elastic Compute Service (ECS) instances: one for the Ansible controller and one for each target node. If you are not sure how to launch an ECS instance in Alibaba Cloud, refer to this documentation.

Here is a quick guide to creating and setting up the ansible user on the controller and target nodes.

Create an ansible remote user through which the Ansible controller node manages the installation. This user needs appropriate sudo privileges; an example sudoers entry is given below.

Add the ansible user:

```
[root@controller-node]# adduser ansible
```

Switch to the ansible user:

```
[root@controller-node]# su - ansible
```

Generate an SSH key pair for the ansible user with ssh-keygen.
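An interactive prompt gets in the way if this setup is repeated, for example from a provisioning script. The function below is a minimal sketch of a re-runnable variant. ensure_ssh_key is a hypothetical helper name. It assumes you want a key without a passphrase (-N '') so that Ansible can connect unattended. If you prefer a passphrase, keep the interactive command and load the key into ssh-agent instead.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create an RSA key pair for the ansible user only if one
# does not exist yet, so re-running the setup never replaces a key that the
# target nodes already trust.
ensure_ssh_key() {
  local key=${1:-$HOME/.ssh/id_rsa}
  local dir
  dir=$(dirname "$key")

  if [[ -f $key && -f $key.pub ]]; then
    echo "keeping existing key pair $key" >&2
    return 0
  fi

  # Keep the key directory private to the ansible user.
  mkdir -p "$dir" && chmod 700 "$dir" || return 1

  if [[ -f $key ]]; then
    # Private key exists but the .pub file is missing: derive it.
    ssh-keygen -y -f "$key" > "$key.pub"
    return
  fi

  # Assumption: no passphrase (-N '') so Ansible can run unattended.
  ssh-keygen -t rsa -b 4096 -C ansible -N '' -f "$key"
}
```

For example, run ensure_ssh_key /home/ansible/.ssh/id_rsa as the ansible user. An existing key pair is left untouched, because overwriting it would lock the controller out of every node that trusts the old key.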

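```
[ansible@controller-node]$ ssh-keygen -t rsa -b 4096 -C "ansible"
```

The public key, id_rsa.pub, must end up in the ansible user's /home/ansible/.ssh directory on each target node. On the controller, it is stored in the ansible user's .ssh directory: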

```
[ansible@controller-node]$ cd /home/ansible/.ssh/
[ansible@controller-node .ssh]$ ls
id_rsa  id_rsa.pub
```

Print the public key and note it down so that you can copy it to the other machines:

```
[ansible@controller-node .ssh]$ cat id_rsa.pub
ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAgEApeDUYGwaMfHd7/Zo0nzHA69uF/f99BYktwp82qA8+osph1LdJ/NpDIxcx3yMzWJHK0eg2yapHyeMpKuRlzxHHnmc99lO4tHrgpoSoyFF0ZGzDqgtj8IHS8/6bz4t5qcs0aphyBJK5qUYPhUqAL2Sojn+jLnLlLvLFwnv5CwSYeHYzLPHU7VWJgkoahyAlkdQm2XsFpa+ZpWEWTiSL5nrJh5aA3bgGHGJU2iVDxj2vfgPHQWQTiNrxbaSfZxdfYQx/VxIERZvc5vkfycBHVwanFD4vMn728ht8cE4VjVrGyTVznzrM7XC2iMsQkvmeYTIO2q2u/8x4dS/hBkBdVG/fjgqb78EpEUP11eKYM4JFCK7B0/zNaS56KFUPksZaSofokonFeGilr8wxLmpT2X1Ub9VwbZV/ppb2LoCkgG6RnDZCf7pUA+CjOYYV4RWXO6SaV12lSxrg7kvVYXHOMHQuAp8ZHjejh2/4Q4jNnweciuG3EkLOTiECBB0HgMSeKO4RMzFioMwavlyn5q7z4S82d/yRzsOS39qwkaEPRHTCg9F6pbZAAVCvGXP4nlyrqk26KG7S3Eoz3LZjcyt9xqGLzt2frvd+jLMpgvnlXTFgGA1ITExOHRb+FirmQZgnoiFbvpeIFj65d0vRIuY6VneIJ6EFcLGPpzeus0yLoDN1v8= ansible
[ansible@controller-node .ssh]$ exit
```

Next, give the ansible user sudo rights so that it can also manage the controller node itself. As root, run visudo, make sure the #includedir /etc/sudoers.d line is present (in this directive the leading # is part of the syntax, not a comment marker), and add an entry for the ansible user.
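The same rule can instead live in its own file under /etc/sudoers.d, which the #includedir line pulls in. The sketch below is one way to do that, under these assumptions:

- write_sudoers_dropin is a hypothetical helper, and it runs as root.
- The VISUDO variable exists only so that the syntax check can be replaced by a stub in tests.

The helper checks the new file with visudo -cf before moving it into place. A sudoers file with a syntax error can leave you without working sudo.

```bash
#!/usr/bin/env bash
# Hypothetical helper: grant passwordless sudo to one user through a drop-in
# file in /etc/sudoers.d (run as root). VISUDO lets tests stub the validator.
write_sudoers_dropin() {
  local user=$1 dir=${2:-/etc/sudoers.d}
  local tmp

  # sudo skips sudoers.d files whose names contain '.' or end in '~', so the
  # temporary file (leading '.') is never loaded, even if it is left behind.
  tmp=$(mktemp "$dir/.${user}.XXXXXX") || return 1
  printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$user" > "$tmp"
  chmod 0440 "$tmp"

  # Refuse to install the rule if visudo reports a syntax error.
  if ! "${VISUDO:-visudo}" -cf "$tmp" > /dev/null; then
    rm -f "$tmp"
    return 1
  fi
  mv -f "$tmp" "$dir/$user"
}
```

For example, write_sudoers_dropin ansible creates /etc/sudoers.d/ansible with mode 0440. Because sudo ignores drop-in files whose names contain a dot, this naming scheme only suits usernames without one.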

```
[root@controller-node]# visudo
#includedir /etc/sudoers.d
ansible ALL=(ALL) NOPASSWD: ALL
```

On each target node, create the ansible user, create a file named authorized_keys in the user's .ssh directory, and change the file's permissions to 600 (only the owner can read or write it).
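These steps can also be scripted. The sketch below assumes a hypothetical helper, install_pubkey, that takes the user's home directory and one public key line. It does three things:

- Creates ~/.ssh with mode 700 and authorized_keys with mode 600. sshd's StrictModes check, enabled by default, refuses keys in files or directories that other users can write.
- Appends the key only when that exact line is not already present, so running it twice is harmless.
- Adds a missing final newline before appending, so an existing last key is not corrupted.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add one public key line to <home>/.ssh/authorized_keys
# with the permissions sshd expects. Running it again changes nothing.
install_pubkey() {
  local home=$1 key_line=$2
  local ssh_dir=$home/.ssh
  local auth=$ssh_dir/authorized_keys

  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  touch "$auth" && chmod 600 "$auth" || return 1

  # -x matches whole lines; -F treats the key literally ('+' and '/' are common).
  if ! grep -qxF -- "$key_line" "$auth"; then
    # Make sure an existing last line ends with a newline before appending.
    if [[ -s $auth && -n $(tail -c 1 "$auth") ]]; then
      echo >> "$auth"
    fi
    printf '%s\n' "$key_line" >> "$auth"
  fi
}
```

Run it as the ansible user, for example install_pubkey /home/ansible "$(cat id_rsa.pub)". If you run it as root instead, finish with chown -R ansible:ansible /home/ansible/.ssh so that the files belong to the ansible user.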

```
[root@node1]# adduser ansible
[root@node1]# su - ansible
[ansible@node1 ~]$ cd /home/ansible
[ansible@node1 ~]$ mkdir .ssh
[ansible@node1 ~]$ chmod 700 .ssh
[ansible@node1 ~]$ cd .ssh
[ansible@node1 .ssh]$ touch authorized_keys
[ansible@node1 .ssh]$ chmod 600 authorized_keys
```

Copy the SSH public key from the Ansible controller node and add it to authorized_keys on every target VM that Ansible will manage:

```
[ansible@node1 .ssh]$ cat authorized_keys
ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAgEApeDUYGwaMfHd7/Zo0nzHA69uF/f99BYktwp82qA8+osph1LdJ/NpDIxcx3yMzWJHK0eg2yapHyeMpKuRlzxHHnmc99lO4tHrgpoSoyFF0ZGzDqgtj8IHS8/6bz4t5qcs0aphyBJK5qUYPhUqAL2Sojn+jLnLlLvLFwnv5CwSYeHYzLPHU7VWJgkoahyAlkdQm2XsFpa+ZpWEWTiSL5nrJh5aA3bgGHGJU2iVDxj2vfgPHQWQTiNrxbaSfZxdfYQx/VxIERZvc5vkfycBHVwanFD4vMn728ht8cE4VjVrGyTVznzrM7XC2iMsQkvmeYTIO2q2u/8x4dS/hBkBdVG/fjgqb78EpEUP11eKYM4JFCK7B0/zNaS56KFUPksZaSofokonFeGilr8wxLmpT2X1Ub9VwbZV/ppb2LoCkgG6RnDZCf7pUA+CjOYYV4RWXO6SaV12lSxrg7kvVYXHOMHQuAp8ZHjejh2/4Q4jNnweciuG3EkLOTiECBB0HgMSeKO4RMzFioMwavlyn5q7z4S82d/yRzsOS39qwkaEPRHTCg9F6pbZAAVCvGXP4nlyrqk26KG7S3Eoz3LZjcyt9xqGLzt2frvd+jLMpgvnlXTFgGA1ITExOHRb+FirmQZgnoiFbvpeIFj65d0vRIuY6VneIJ6EFcLGPpzeus0yLoDN1v8= ansible
```

Alternatively, run ssh-copy-id from the Ansible controller once for each target node.
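With more than a few nodes, a loop saves typing. This is a minimal sketch, assuming the key pair generated earlier at ~/.ssh/id_rsa.pub and node names that resolve from the controller. copy_key_to_nodes is a hypothetical helper.

Two behaviors of ssh-copy-id matter here:

- It logs in with a password the first time it reaches a node. The ansible user therefore needs a password on each target, and sshd must accept password authentication for that first copy.
- Keys that are already installed are skipped, so re-running the loop is safe.

```bash
#!/usr/bin/env bash
# Hypothetical helper: push the controller's public key to several nodes.
# Assumptions: run as the ansible user on the controller, key pair at
# ~/.ssh/id_rsa(.pub), and node names that resolve from the controller.
copy_key_to_nodes() {
  local remote_user=$1; shift
  local node failed=0
  for node in "$@"; do
    # ssh-copy-id skips keys that are already installed, so re-runs are safe.
    if ! ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "$remote_user@$node"; then
      echo "ssh-copy-id failed for $node" >&2
      failed=1
    fi
  done
  return "$failed"
}
```

For example, run copy_key_to_nodes ansible node1 node2 node3. The function keeps going when one node fails, and returns a non-zero status at the end if any copy failed.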

```
[ansible@localhost ~]$ ssh-copy-id ansible@node3
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.
[ansible@localhost ~]$ ssh-copy-id ansible@node2
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.
[ansible@localhost ~]$ ssh-copy-id ansible@node1
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
ansible@node1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'ansible@node1'"
and check to make sure that only the key(s) you wanted were added.
```

Finally, grant the ansible user sudo rights on each target node. Run visudo, make sure the #includedir /etc/sudoers.d line is present, and add the entry:

```
[root@node1]# visudo
#includedir /etc/sudoers.d
ansible ALL=(ALL) NOPASSWD: ALL
```

Managing users in a cloud environment such as Alibaba Cloud is often both a security and an infrastructure requirement. In general, we deal with three sets of users: users with sudo access, users without sudo access, and users who are not allowed to log in (nologin).

This lab uses the following users to illustrate the concept:

| sudo access | non-sudo access | nologin |
|---|---|---|
| user1 | user4 | user7 |
| user2 | user5 | |
| user3 | user6 | |
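The playbook in this article reads these groups from a users.yml file. Each entry in that file has a username and a use_sudo flag.

Below is a minimal sketch of turning table rows into such entries, assuming the two-space layout used in this article. users_yml_entry is a hypothetical helper. The users.yml format shown here has no field for nologin accounts, so the sketch rejects that category instead of guessing how to encode it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the users.yml entry for one user from the table.
# Categories: sudo, nosudo. The users.yml format used in this article has no
# field for nologin accounts, so that category is rejected here.
users_yml_entry() {
  local user=$1 category=$2
  case $category in
    sudo)   printf '  - username: %s\n    use_sudo: yes\n' "$user" ;;
    nosudo) printf '  - username: %s\n    use_sudo: no\n' "$user" ;;
    *)      echo "unsupported category for $user: $category" >&2; return 1 ;;
  esac
}
```

For example, { echo '---'; echo 'users:'; users_yml_entry user1 sudo; users_yml_entry user4 nosudo; } > ssh/vars/users.yml writes a two-user file.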

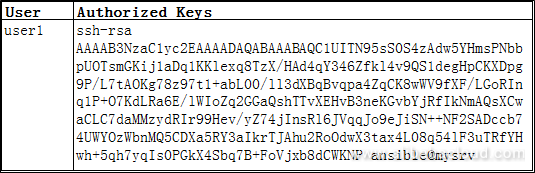

For security hardening, servers running on Alibaba Cloud should accept only password-less (key-based) SSH logins. Through a change management process, such as Git, all users are deployed to their respective nodes by the Ansible controller. Users must submit their public keys to the controller; for example, user1 has submitted a key to be rolled out to the listed servers.
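Every submitted key is copied into the role's files directory, so it is worth rejecting malformed submissions before they are merged. This is a minimal sketch, assuming one key per file named after its owner (username.pub, the naming the playbook relies on). check_pubkey is a hypothetical helper, and its list of accepted key types is an assumption to adjust. Where OpenSSH is installed, running ssh-keygen -l -f on the file is a more thorough check.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a submitted public key before it is added
# to the role's files directory. Assumes one key per file named <username>.pub.
check_pubkey() {
  local user=$1 file=$2
  local type blob embedded

  if [[ $(basename "$file") != "$user.pub" ]]; then
    echo "$file: expected the name $user.pub" >&2; return 1
  fi
  if [[ $(grep -c . "$file") -ne 1 ]]; then
    echo "$file: expected exactly one key line" >&2; return 1
  fi
  read -r type blob _ < <(grep . "$file")
  case $type in
    ssh-rsa|ssh-ed25519|ecdsa-sha2-nistp256|ecdsa-sha2-nistp384|ecdsa-sha2-nistp521) ;;
    *) echo "$file: unsupported key type '$type'" >&2; return 1 ;;
  esac
  # The decoded key data starts with a 4-byte length followed by the key type
  # name, so a well-formed key repeats its type inside the base64 blob.
  embedded=$(printf '%s' "$blob" | base64 -d 2>/dev/null | head -c $((4 + ${#type})) | tail -c "${#type}")
  if [[ $embedded != "$type" ]]; then
    echo "$file: key data does not match type $type" >&2; return 1
  fi
}
```

For example, check_pubkey user1 user1.pub exits with status 0 and prints nothing for a well-formed key. Otherwise it prints the reason to standard error.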

In this section, we will add and delete users with Ansible. The work is packaged as an Ansible role named ssh.
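The role's directories can be created by hand. This is a minimal sketch, assuming the role lives under the current directory. scaffold_ssh_role is a hypothetical helper. The standard command ansible-galaxy init ssh also works, although it generates a fuller skeleton (for example, defaults, handlers, and meta directories) than this article uses.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create the ssh role layout used in this article.
scaffold_ssh_role() {
  local base=${1:-.}
  local role=$base/ssh
  mkdir -p "$role/files" "$role/tasks" "$role/vars" || return 1
  # Create main.yml only once so that existing tasks are never overwritten.
  if [[ ! -e $role/tasks/main.yml ]]; then
    printf -- '---\n' > "$role/tasks/main.yml"
  fi
}
```

Running scaffold_ssh_role from the directory that will hold the playbook produces the layout that tree reports. It never overwrites an existing main.yml, so it is safe to run again.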

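```
[ansible@controller]$ tree
.
└── ssh
    ├── files
    ├── tasks
    │   └── main.yml
    └── vars
```

In this layout, files holds the public keys that users submit, tasks/main.yml contains the tasks the role runs, and vars holds the variable files that those tasks load. Next, list the users to manage in ssh/vars/users.yml: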

```
[ansible@controller]$ tree
.
└── ssh
    ├── files
    ├── tasks
    │   └── main.yml
    └── vars
        └── users.yml
```

The file lists each user and whether that user gets sudo access:

```
[ansible@controller]$ cat ssh/vars/users.yml
---
users:
  - username: user2
    use_sudo: yes
  - username: user4
    use_sudo: no
  - username: user6
    use_sudo: no
```

Each user's public key goes into the files directory, named after the user:

```
[ansible@controller]$ tree
.
└── ssh
    ├── files
    │   ├── user2.pub
    │   ├── user4.pub
    │   └── user6.pub
    ├── tasks
    │   └── main.yml
    └── vars
        └── users.yml

4 directories, 5 files
```

The tasks in ssh/tasks/main.yml create each user with a home directory. Then, for users whose use_sudo is yes, they upload the public key and add a sudoers entry that is checked with visudo before it is saved:

```
[ansible@controller ~]$ cat ssh/tasks/main.yml
---
- include_vars: users.yml

- name: Create users with home directory
  user:
    name: "{{ item.username }}"
    shell: /bin/bash
    createhome: yes
    comment: Created by Ansible
  with_items: "{{ users }}"

- name: Setup | authorized key upload
  authorized_key:
    user: "{{ item.username }}"
    key: "{{ lookup('file', 'files/' ~ item.username ~ '.pub') }}"
  when: item.use_sudo | bool
  with_items: "{{ users }}"

- name: Sudoers | update sudoers file and validate
  lineinfile:
    path: /etc/sudoers
    insertafter: EOF
    line: "{{ item.username }} ALL=(ALL) NOPASSWD: ALL"
    regexp: "^{{ item.username }} .*"
    state: present
    validate: /usr/sbin/visudo -cf %s
  when: item.use_sudo | bool
  with_items: "{{ users }}"
```

To run this playbook, make sure the Ansible inventory file is set up correctly. An inventory file lists the servers that Ansible manages, optionally organized into groups. For this example, I created an inventory file named hosts and added all the nodes that I need to manage.
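The inventory uses short names such as node1, so the controller must be able to resolve them, for example through DNS or /etc/hosts entries that point at the instances' private IP addresses. This is a minimal check, assuming a simple INI inventory like the one shown next. unresolvable_hosts is a hypothetical helper built on getent.

As an alternative to name resolution, an inventory line can set the address directly with the ansible_host variable, for example node1 ansible_host=<private IP>.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print inventory host names that the controller cannot
# resolve. Handles only simple INI inventories like the hosts file shown here.
unresolvable_hosts() {
  local inventory=$1 line host section=""
  while IFS= read -r line || [[ -n $line ]]; do
    line=${line%%#*}                      # drop comments
    read -r host _ <<< "$line"            # first field is the host name
    [[ -z $host ]] && continue
    if [[ $host == \[* ]]; then           # [group], [group:vars], [group:children]
      section=$host
      continue
    fi
    # Lines under :vars and :children sections are not hosts.
    [[ $section == *:vars\] || $section == *:children\] ]] && continue
    # Hosts with ansible_host= are reached by that address, not by name.
    [[ $line == *ansible_host=* ]] && continue
    getent hosts "$host" > /dev/null || echo "$host"
  done < "$inventory"
}
```

For example, unresolvable_hosts hosts prints nothing when every node resolves. The parsing is deliberately simple; ansible-inventory -i hosts --list shows how Ansible itself reads the file.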

```
[ansible@controller ~]$ tree
.
├── hosts
└── ssh
    ├── files
    │   ├── user2.pub
    │   ├── user4.pub
    │   └── user6.pub
    ├── tasks
    │   └── main.yml
    └── vars
        └── users.yml

4 directories, 6 files
```

The inventory file looks like this:

```
[ansible@controller ~]$ cat hosts
[all]
node1
node2
node3
```

Next, create ssh.yml, which tells ansible-playbook to apply the ssh role:

```
[ansible@controller ~]$ cat ssh.yml
# To run this playbook, issue the command:
#   ansible-playbook ssh.yml -i hosts
# Author: Anish Nath
---
- hosts: all
  become: yes
  gather_facts: yes
  roles:
    - { role: ssh }
```

Finally, run the playbook against the hosts inventory.
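Before the real run, it can help to check the playbook and preview its changes. This is a minimal sketch, assuming the files shown above. preflight is a hypothetical helper that only chains standard ansible-playbook options:

- --syntax-check parses the playbook.
- --list-hosts shows which inventory hosts the play targets.
- --check --diff performs a dry run that reports what would change without changing it.

On nodes where the users do not exist yet, later tasks may report errors in check mode, because the user-creation task did not actually run. Treat the dry run as a preview rather than a guarantee.

```bash
#!/usr/bin/env bash
# Hypothetical helper: validate and preview a playbook before applying it.
# Stops at the first step that fails.
preflight() {
  local playbook=${1:-ssh.yml} inventory=${2:-hosts}
  ansible-playbook "$playbook" -i "$inventory" --syntax-check || return 1
  ansible-playbook "$playbook" -i "$inventory" --list-hosts || return 1
  # Dry run: report what would change, with diffs, without changing anything.
  ansible-playbook "$playbook" -i "$inventory" --check --diff
}
```

For example, preflight ssh.yml hosts && ansible-playbook ssh.yml -i hosts applies the playbook only if the checks pass.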

```
[ansible@controller ~]$ ansible-playbook ssh.yml -i hosts
```

The ansible-playbook output shows that the users, with their configured sudoers access, were pushed to all the managed nodes:

```
PLAY [all] *************************************************************************************************************************************

TASK [Gathering Facts] *************************************************************************************************************************
ok: [node1]
ok: [node3]
ok: [node2]

TASK [ssh : include_vars] **********************************************************************************************************************
ok: [node1]
ok: [node2]
ok: [node3]

TASK [ssh : Create users with home directory] **************************************************************************************************
changed: [node2] => (item={u'username': u'user2', u'use_sudo': True})
changed: [node1] => (item={u'username': u'user2', u'use_sudo': True})
changed: [node3] => (item={u'username': u'user2', u'use_sudo': True})
changed: [node2] => (item={u'username': u'user4', u'use_sudo': False})
changed: [node1] => (item={u'username': u'user4', u'use_sudo': False})
changed: [node3] => (item={u'username': u'user4', u'use_sudo': False})
changed: [node2] => (item={u'username': u'user6', u'use_sudo': False})
changed: [node1] => (item={u'username': u'user6', u'use_sudo': False})
changed: [node3] => (item={u'username': u'user6', u'use_sudo': False})

TASK [ssh : Setup | authorized key upload] *****************************************************************************************************
[WARNING]: when statements should not include jinja2 templating delimiters such as {{ }} or {% %}. Found: {{ item.use_sudo }} == True
[WARNING]: when statements should not include jinja2 templating delimiters such as {{ }} or {% %}. Found: {{ item.use_sudo }} == True
[WARNING]: when statements should not include jinja2 templating delimiters such as {{ }} or {% %}. Found: {{ item.use_sudo }} == True
ok: [node1] => (item={u'username': u'user2', u'use_sudo': True})
skipping: [node1] => (item={u'username': u'user4', u'use_sudo': False})
skipping: [node1] => (item={u'username': u'user6', u'use_sudo': False})
ok: [node3] => (item={u'username': u'user2', u'use_sudo': True})
skipping: [node3] => (item={u'username': u'user4', u'use_sudo': False})
ok: [node2] => (item={u'username': u'user2', u'use_sudo': True})
skipping: [node2] => (item={u'username': u'user4', u'use_sudo': False})
skipping: [node3] => (item={u'username': u'user6', u'use_sudo': False})
skipping: [node2] => (item={u'username': u'user6', u'use_sudo': False})

TASK [ssh : Sudoers | update sudoers file and validate] ****************************************************************************************
[WARNING]: when statements should not include jinja2 templating delimiters such as {{ }} or {% %}. Found: {{ item.use_sudo }} == True
[WARNING]: when statements should not include jinja2 templating delimiters such as {{ }} or {% %}. Found: {{ item.use_sudo }} == True
[WARNING]: when statements should not include jinja2 templating delimiters such as {{ }} or {% %}. Found: {{ item.use_sudo }} == True
ok: [node3] => (item={u'username': u'user2', u'use_sudo': True})
skipping: [node3] => (item={u'username': u'user4', u'use_sudo': False})
skipping: [node3] => (item={u'username': u'user6', u'use_sudo': False})
ok: [node1] => (item={u'username': u'user2', u'use_sudo': True})
skipping: [node1] => (item={u'username': u'user4', u'use_sudo': False})
skipping: [node1] => (item={u'username': u'user6', u'use_sudo': False})
ok: [node2] => (item={u'username': u'user2', u'use_sudo': True})
skipping: [node2] => (item={u'username': u'user4', u'use_sudo': False})
skipping: [node2] => (item={u'username': u'user6', u'use_sudo': False})

PLAY RECAP *************************************************************************************************************************************
node1 : ok=5 changed=1 unreachable=0 failed=0
node2 : ok=5 changed=1 unreachable=0 failed=0
node3 : ok=5 changed=1 unreachable=0 failed=0
```

In the Alibaba Cloud environment, each user has a lifecycle. When a user no longer needs to be present on the system, the account must be deleted, and this should happen proactively. For example, if user2 needs to be deleted, the change management process edits users.yml and removes the entry for user2.
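Deleting users proactively is easier when you can see which accounts exist on a node without being declared in users.yml. This is a minimal sketch under these assumptions:

- undeclared_accounts is a hypothetical helper.
- Accounts with a UID from 1000 up to 65533 are treated as regular users. 1000 is the common UID_MIN default in /etc/login.defs on CentOS and similar distributions, so check yours.
- It reads passwd-format lines on standard input, such as the output of getent passwd.

Service accounts such as ansible itself can appear in the list. Review it rather than deleting everything it prints.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list regular accounts (passwd lines on stdin) that are
# not among the declared usernames passed as arguments.
# Assumption: regular users have 1000 <= UID < 65534 (65534 is usually nobody).
undeclared_accounts() {
  local declared=" $* "
  local name uid
  while IFS=: read -r name _ uid _; do
    [[ $uid =~ ^[0-9]+$ ]] || continue
    (( uid >= 1000 && uid < 65534 )) || continue
    [[ $declared == *" $name "* ]] || echo "$name"
  done
}
```

For example, after user2 is removed from users.yml, ssh ansible@node1 getent passwd | undeclared_accounts ansible user4 user6 would list user2 on node1.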

Before deleting user2:

```
[ansible@controller]$ cat ssh/vars/users.yml
---
users:
  - username: user2
    use_sudo: yes
  - username: user4
    use_sudo: no
  - username: user6
    use_sudo: no
```

After deleting user2:

```
[ansible@controller]$ cat ssh/vars/users.yml
---
users:
  - username: user4
    use_sudo: no
  - username: user6
    use_sudo: no
```

Removing the entry from users.yml only stops Ansible from managing user2. It does not delete the account from the nodes that the Ansible controller manages across the Alibaba Cloud environment. To delete it, create a file named deleteusers.yml in the vars directory and maintain in it the set of users that must be removed from the target nodes.
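Because users.yml is kept under change management, the names for deleteusers.yml can be derived from the change itself. This is a minimal sketch, assuming the simple one-entry-per-line layout shown above with unquoted names. It is a line-based extraction, not a YAML parser.

removed_users is a hypothetical helper that compares two revisions of users.yml. You might obtain the older revision with git show, for example git show HEAD~1:ssh/vars/users.yml.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: print usernames present in the old users.yml but not
# in the new one. Assumes lines of the form "- username: <name>".
usernames_in() {
  sed -n 's/^[[:space:]]*-[[:space:]]*username:[[:space:]]*\([^[:space:]]*\).*/\1/p' "$1" | sort -u
}

removed_users() {
  local old=$1 new=$2
  # comm -23 keeps lines that appear only in the first (old) sorted list.
  comm -23 <(usernames_in "$old") <(usernames_in "$new")
}
```

For example, removed_users <(git show HEAD~1:ssh/vars/users.yml) ssh/vars/users.yml | sed 's/^/  - username: /' prints entries in the format used by deleteusers.yml.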

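```
[ansible@controller ~]$ cat ssh/vars/deleteusers.yml
---
delete_users:
  - username: user2
  - username: user3
  - username: user5
```

Next, append the delete tasks to ssh/tasks/main.yml. The list uses its own variable name, delete_users, so that loading deleteusers.yml does not replace the users list that the earlier tasks read from users.yml. Setting remove: yes also deletes the user's home directory, as userdel --remove does.

```
- include_vars: deleteusers.yml

- name: Deleting The users
  user:
    name: "{{ item.username }}"
    state: absent
    remove: yes
  with_items: "{{ delete_users }}"
```

Finally, run the playbook against the hosts inventory again: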

```
[ansible@controller ~]$ ansible-playbook ssh.yml -i hosts
```

The playbook runs the delete task, and user2 is removed from all Ansible-managed nodes:

```
TASK [ssh : Deleting The users] ****************************************************************************************************************
changed: [node2] => (item={u'username': u'user2'})
changed: [node1] => (item={u'username': u'user2'})
changed: [node3] => (item={u'username': u'user2'})
```

That's the end of this post. In this article, we discussed how to manage users with Ansible, including adding and deleting them. Ansible is a great way to automate user management and security hardening in an Alibaba Cloud environment. For more details on the Ansible user module, refer to the Ansible website.
