By Siddharth Pandey

A load balancer is a critical component of any cloud-based infrastructure that ensures incoming requests are distributed evenly across a group of servers or other resources. This helps to improve the performance, scalability, and reliability of the system, as it reduces the risk of any single server becoming overwhelmed and allows the workload to be distributed more efficiently.

The use of load balancers started in the 1990s in the form of hardware appliances that could be used to distribute traffic across a network. Today, load balancers form a crucial piece of infrastructure on the cloud, and users have a variety of load balancers to choose from. These load balancers can be categorized into physical (where the load balancer is developed on top of the architecture of physical machines) and virtual (where it is developed on top of network virtualization and no physical instance is needed).

Before we look into the details of each type of load balancer, let's discuss the function of load balancers in an architecture:

Health checks are the cornerstone of the entire load balancing process. The purpose of a load balancer is to distribute traffic to a target group of servers. However, that can only happen successfully if the underlying servers are functioning well and can receive and respond to the traffic request. Health checks ensure the underlying servers are up and running and can accept incoming traffic in a healthy manner.

Health checks are initiated as soon as a server is added to the target group and usually take about a minute to complete. The checks are performed periodically (which can be configured) and can be done in two ways:

Active Health Checks: The load balancer periodically sends requests to the servers in the target group to check the health of each server (as per the health check settings for the target group). After the check is performed, the load balancer closes the connection that was established for the health check.

Passive Health Checks: The load balancer does not send any health check requests but observes how target servers respond to connections they receive. This check enables the load balancer to detect a deteriorating server whose health is falling, so it can take action before the server is reported as unhealthy.
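The bookkeeping behind both kinds of check can be sketched in a few lines of Python. This is a minimal illustration, not how any particular load balancer implements it: the `TargetHealth` class, its threshold values, and the server names are all assumptions made for the example. Each result, whether from an active probe or a passively observed response, is fed into `record`.

```python
from dataclasses import dataclass


@dataclass
class TargetHealth:
    """Tracks the health state of one server in a target group (hypothetical)."""
    healthy_threshold: int = 3    # assumed: consecutive successes needed to mark healthy
    unhealthy_threshold: int = 3  # assumed: consecutive failures needed to mark unhealthy
    healthy: bool = False         # a newly added server is unhealthy until checks pass
    successes: int = 0
    failures: int = 0

    def record(self, ok: bool) -> bool:
        """Record one check result and return the updated health state."""
        if ok:
            self.successes += 1
            self.failures = 0
            if self.successes >= self.healthy_threshold:
                self.healthy = True
        else:
            self.failures += 1
            self.successes = 0
            if self.failures >= self.unhealthy_threshold:
                self.healthy = False
        return self.healthy


def healthy_targets(targets: dict[str, TargetHealth]) -> list[str]:
    """Return the servers that may currently receive traffic."""
    return [name for name, state in targets.items() if state.healthy]


targets = {"server-a": TargetHealth(), "server-b": TargetHealth()}
for _ in range(3):
    targets["server-a"].record(True)   # three successful checks in a row
    targets["server-b"].record(False)  # three failed checks in a row
print(healthy_targets(targets))  # ['server-a']
```

Requiring several consecutive results before changing state keeps a single slow response from removing a server from rotation.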

Another important function of a load balancer is to decrypt the SSL-encrypted traffic that it receives and forward the decrypted data to the target servers. Secure Sockets Layer (SSL) is the standard technology for establishing encrypted links between a web browser and a web server. Encryption via SSL is the key difference between HTTP and HTTPS traffic.

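When the load balancer receives SSL traffic via HTTPS, it has two options: decrypt the traffic and forward the decrypted data to the target servers (SSL termination), or forward the encrypted traffic unchanged and leave decryption to the target servers (SSL passthrough).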

An end user's session information is often stored locally in the web browser. This helps users receive a consistent experience in the application. For example, in a shopping app, once I add items to the cart, the load balancer must send my requests to the same server, or else the cart information will be lost. Load balancers identify such traffic and ensure session persistence to the same server until the transaction is completed.
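Session persistence can be pictured as a lookup table from session to server. The sketch below is a simplified illustration under stated assumptions: the `StickyBalancer` class, the session IDs, and the server names are hypothetical, and real load balancers typically carry the session identity in a cookie or derive it from the client address.

```python
import itertools


class StickyBalancer:
    """Pins each session ID to the server that handled its first request."""

    def __init__(self, servers: list[str]) -> None:
        self._next_server = itertools.cycle(servers)  # used only for new sessions
        self._sessions: dict[str, str] = {}           # session ID -> pinned server

    def route(self, session_id: str) -> str:
        """Return the pinned server, assigning one if the session is new."""
        if session_id not in self._sessions:
            self._sessions[session_id] = next(self._next_server)
        return self._sessions[session_id]


balancer = StickyBalancer(["server-a", "server-b"])
first = balancer.route("cart-123")   # new session, assigned server-a
other = balancer.route("cart-456")   # new session, assigned server-b
again = balancer.route("cart-123")   # existing session, stays on server-a
print(first, other, again)  # server-a server-b server-a
```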

The load balancer routes incoming traffic to the target servers according to a routing algorithm, which can be configured on the load balancer console.
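Two widely used routing algorithms, round robin and least connections, can be sketched as follows. The function names, server names, and connection counts are illustrative assumptions and do not correspond to any specific console setting.

```python
import itertools


def round_robin(servers):
    """Return an iterator that hands out servers in a fixed rotating order."""
    return itertools.cycle(servers)


def least_connections(active):
    """Pick the server that currently has the fewest active connections."""
    return min(active, key=active.get)


rr = round_robin(["server-a", "server-b", "server-c"])
picks = [next(rr) for _ in range(4)]
print(picks)  # ['server-a', 'server-b', 'server-c', 'server-a']

active = {"server-a": 12, "server-b": 4, "server-c": 9}
choice = least_connections(active)
print(choice)  # server-b
```

Round robin suits servers with similar capacity and short requests, while least connections adapts better when some requests hold connections open for much longer than others.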

Finally, a load balancer must be able to scale up and down as required. This applies both to the load balancer's own specification and to handling real-time increases and decreases in the number of target servers. Virtual load balancers can easily scale up and down (as per the incoming traffic management requirements).

For managing the target servers' scaling, load balancers are tightly integrated with the auto scaling component of the platform to add and remove servers from the target group as traffic increases or decreases.
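As a rough illustration of the sizing decision an auto scaling component makes for the target group, the sketch below derives a server count from the current request rate. The function, its parameters, and the numbers are assumptions for the example; real auto scaling policies usually act on metrics such as CPU utilization and apply cooldown periods.

```python
import math


def desired_servers(requests_per_second, capacity_per_server, minimum=1, maximum=10):
    """Return how many servers the target group should hold for the current load."""
    needed = math.ceil(requests_per_second / capacity_per_server)
    # Clamp to the configured group size limits.
    return max(minimum, min(maximum, needed))


print(desired_servers(4500, 1000))   # 5
print(desired_servers(100, 1000))    # 1
print(desired_servers(50000, 1000))  # 10
```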

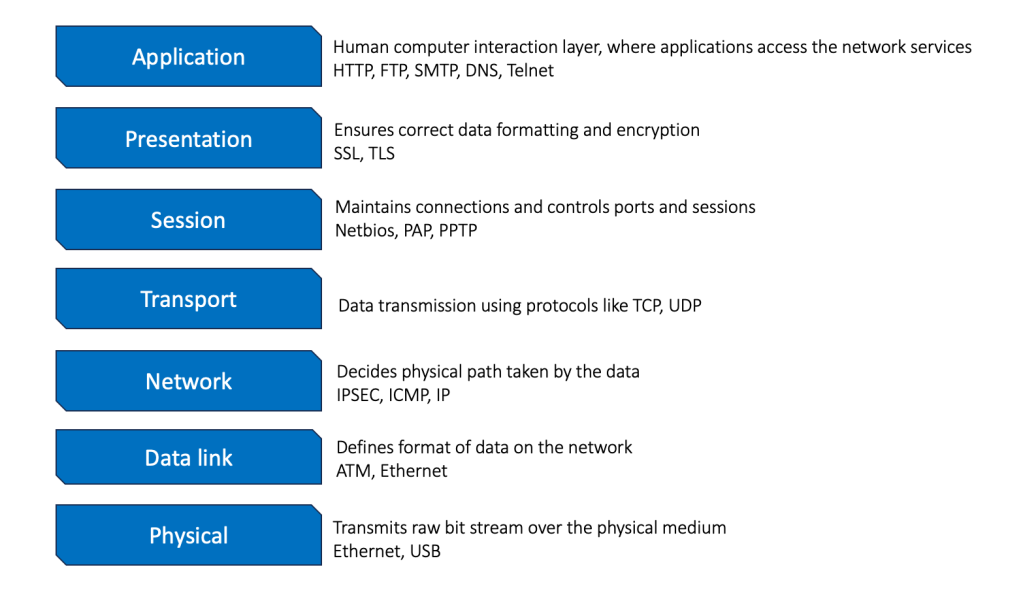

The OSI model is the universal language for computer networking. It is based on splitting the communication system into seven abstract layers, each one stacked upon the last. Each layer has a specific function to perform in networking. It is useful to revise what we know about the seven layers before we discuss different types of load balancers.

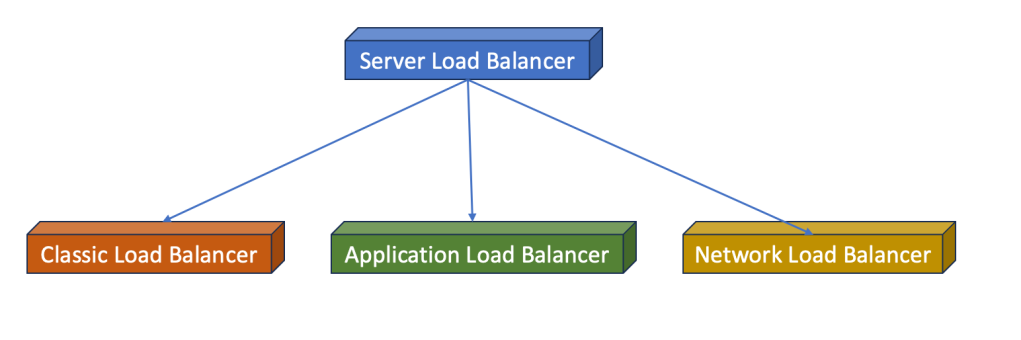

There are different types of load balancers available, each of which has specific features and capabilities. The main types of load balancers are the classic load balancer, application load balancer, and network load balancer. We will refer to Alibaba Cloud server load balancers for features and limitations.

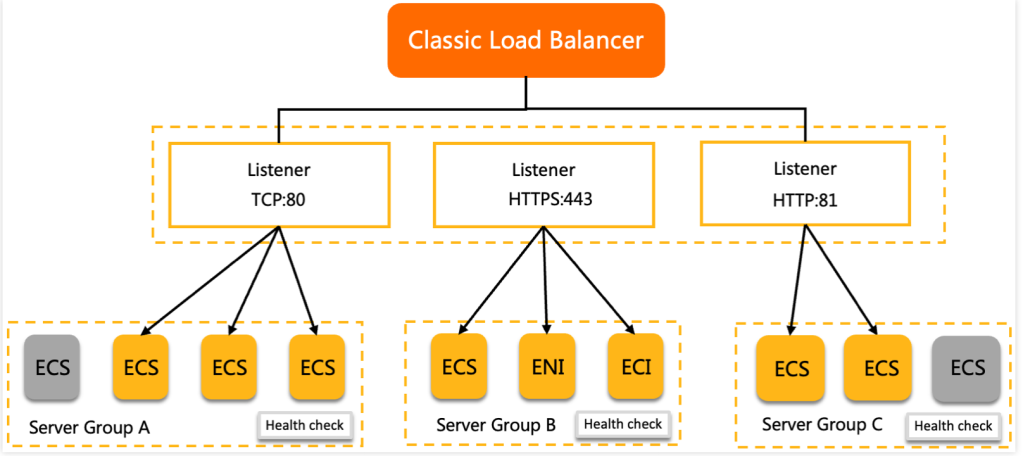

CLB supports TCP, UDP, HTTP, and HTTPS. CLB provides advanced Layer 4 processing capabilities and basic Layer 7 processing capabilities. CLB uses virtual IP addresses to provide load balancing services for the backend pool, which consists of servers deployed in the same region. Network traffic is distributed across multiple backend servers based on forwarding rules. This ensures the performance and availability of applications.

Classic load balancers are deployed on top of the architecture of physical machines, which has some advantages and disadvantages:

The underlying instances with specific processors used for CLB are specialized for receiving high traffic and managing it. This results in higher throughput of the system.

A single type of load balancer handles both layer 4 load balancing and, to an extent, layer 7 load balancing.

Inability to Scale: Auto scaling for the load balancer instance can be difficult, as it is tied to a specific instance.

Fixed Cost: This could be negative if the traffic is unpredictable, resulting in lower utilization of the fixed-priced instance.

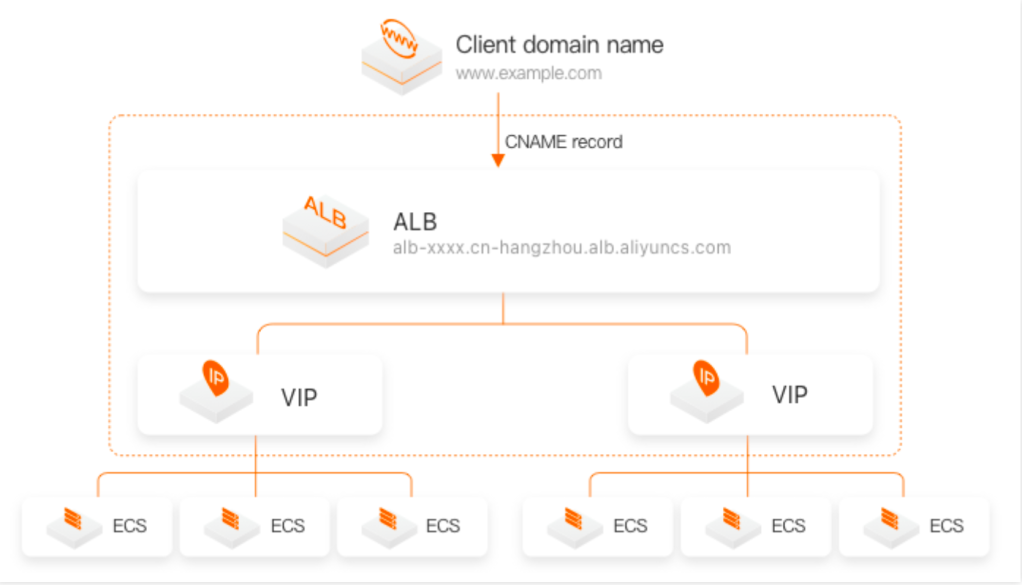

Application load balancers (also known as layer 7 load balancers) are a more recent development in the world of load balancing. Like classic load balancers, they operate at the application layer of the OSI model, but they offer a number of additional features and capabilities. ALB can examine the contents of an incoming request and route it to the appropriate server. This makes it ideal for environments where the workload is heavily focused on application-level processing (such as web applications or microservices). Application load balancers can also scale automatically to meet the demands of the application, and they provide advanced routing capabilities based on the content of the request. For example, an application might have a different microservice for iOS and Android users, or a different microservice for users coming in from different regions. Based on this content, ALB can direct these users to the appropriate destination.
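Content-based routing of this kind boils down to matching rules against the request. The following is a minimal sketch, assuming hypothetical backend names and a simple check of the `User-Agent` header and URL path; it is not the rule syntax of ALB.

```python
def pick_backend(path: str, headers: dict[str, str]) -> str:
    """Choose a backend service from the request content (illustrative rules)."""
    user_agent = headers.get("User-Agent", "").lower()
    if "iphone" in user_agent or "ipad" in user_agent:
        return "ios-service"
    if "android" in user_agent:
        return "android-service"
    if path.startswith("/api/"):
        return "api-service"
    return "web-service"  # default when no rule matches


print(pick_backend("/", {"User-Agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X)"}))
print(pick_backend("/", {"User-Agent": "Mozilla/5.0 (Linux; Android 14)"}))
print(pick_backend("/api/orders", {}))
```

Rules are evaluated in order, so the first match wins; a real configuration would make that priority explicit.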

Alibaba Cloud ALB is optimized to balance traffic over HTTP, HTTPS, and Quick UDP Internet Connections (QUIC). ALB is integrated with other cloud-native services and can serve as an ingress gateway to manage traffic.

ALB supports static and dynamic IP, and the performance varies based on the IP mode.

| IP mode | Maximum queries per second (QPS) | Maximum connections per second (CPS) |
| --- | --- | --- |
| Static | 100,000 | 100,000 |
| Dynamic | 1,000,000 | 1,000,000 |

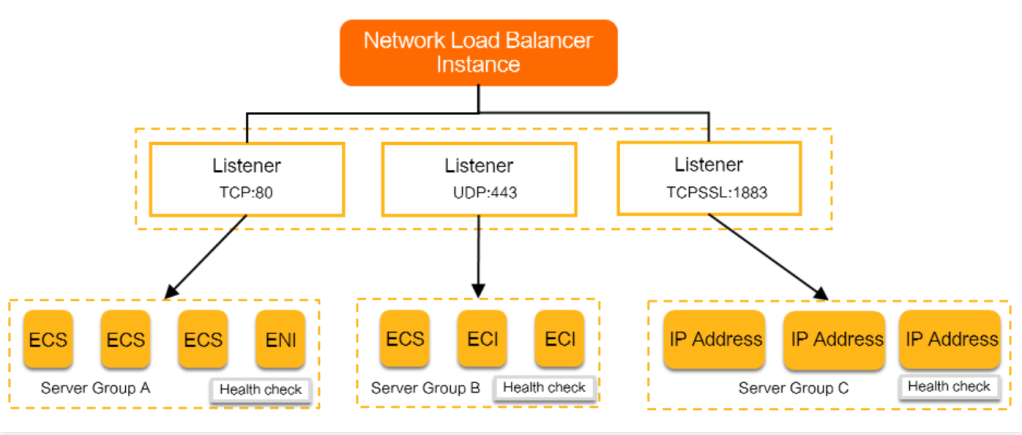

Network load balancers (also known as layer 4 load balancers) operate at the transport layer of the OSI model. This means they route traffic based on the IP addresses and ports of incoming packets, but they do not inspect the contents of the packets themselves. Network load balancers are often used to distribute traffic to applications that use the TCP or UDP protocols, but they do not provide the advanced routing capabilities of classic or application load balancers.
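A layer 4 routing decision uses only connection metadata. One common approach, shown here as a sketch rather than as NLB's actual algorithm, is to hash the flow's addresses, ports, and protocol so that every packet of the same connection reaches the same server. The function name, addresses, and server names are assumptions for the example.

```python
import hashlib


def pick_by_flow(src_ip, src_port, dst_ip, dst_port, protocol, servers):
    """Map a connection's 5-tuple to a server without reading the payload."""
    flow = f"{protocol}:{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(flow).digest()
    # Use the first 8 bytes of the digest as a stable integer hash.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["server-a", "server-b", "server-c"]
first_packet = pick_by_flow("203.0.113.7", 51234, "198.51.100.10", 443, "tcp", servers)
later_packet = pick_by_flow("203.0.113.7", 51234, "198.51.100.10", 443, "tcp", servers)
print(first_packet == later_packet)  # True
```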

NLB is often used in environments where the workload is heavily focused on network-level processing (such as load balancing for a network of web servers or in gaming scenarios with very high traffic). An NLB instance supports up to 100 million concurrent connections and 100 Gbit/s throughput. You can use NLB to handle massive volumes of requests from IoT devices.

You do not need to select a specification for an NLB instance or manually upgrade or downgrade an NLB instance when workloads change. An NLB instance can automatically scale on demand. NLB also supports dual stack networking (IPv4 and IPv6), listening by port range, limiting the number of new connections per second, and connection draining.

Pricing for server load balancers on Alibaba Cloud is calculated based on a unit of consumption called Load Balancer Capacity Unit (LCU). The definition of LCU for ALB, NLB, and CLB and the prices are listed below:

| Instance type | Price per LCU (Unit: USD/hour/transit router) | LCU definition |
| --- | --- | --- |
| Application Load Balancer | 0.007 | 1 ALB LCU = 25 new connections per second; 3,000 concurrent connections (sampled every minute); 1 GB of data transfer processed per hour; 1,000 rules processed per hour |
| Network Load Balancer | 0.005 | 1 LCU = For TCP: 800 new connections per second, 100,000 concurrent connections (sampled every minute), 1 GB of data transfer processed per hour. For UDP: 400 new connections per second, 50,000 concurrent connections (sampled every minute), 1 GB of data transfer processed per hour. For SSL over TCP: 50 new connections per second, 3,000 concurrent connections (sampled every minute), 1 GB of data transfer processed per hour |
| Classic Load Balancer | 0.007 | 1 LCU = For TCP: 800 new connections per second, 100,000 concurrent connections (sampled every minute), 1 GB of data transfer processed per hour. For UDP: 400 new connections per second, 50,000 concurrent connections (sampled every minute), 1 GB of data transfer processed per hour. For HTTP/HTTPS: 25 new connections per second, 3,000 concurrent connections (sampled every minute), 1 GB of data transfer processed per hour, 1,000 rules processed per hour |
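To see how the LCU definition turns into an hourly charge, here is a worked sketch using the ALB figures from the table. It assumes that the billed LCU count for an hour is the highest consumption across the four dimensions; that rule is an assumption of this example, as are the usage numbers, so check the current billing documentation before relying on it.

```python
# ALB figures taken from the pricing table above.
ALB_LCU = {
    "new_connections_per_second": 25,
    "concurrent_connections": 3000,
    "gb_processed_per_hour": 1,
    "rules_processed_per_hour": 1000,
}
ALB_PRICE_PER_LCU = 0.007  # USD per LCU per hour


def lcus_consumed(usage, lcu_definition):
    """Return the LCU count, assuming the largest dimension is the one billed."""
    return max(usage[dim] / lcu_definition[dim] for dim in lcu_definition)


# Hypothetical usage for one hour.
usage = {
    "new_connections_per_second": 50,   # 50 / 25    = 2.0 LCUs
    "concurrent_connections": 9000,     # 9000 / 3000 = 3.0 LCUs
    "gb_processed_per_hour": 2,         # 2 / 1      = 2.0 LCUs
    "rules_processed_per_hour": 500,    # 500 / 1000 = 0.5 LCUs
}
lcus = lcus_consumed(usage, ALB_LCU)
hourly_cost = lcus * ALB_PRICE_PER_LCU
print(f"{lcus:.1f} LCUs, {hourly_cost:.3f} USD/hour")  # 3.0 LCUs, 0.021 USD/hour
```

In this example concurrent connections dominate, so the hour is charged as 3 LCUs even though the other dimensions consumed less.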

With these different types of load balancers offering high availability and usage-based pricing, users can choose the correct type of instance and maintain high performance and high availability.
