By Jiying Dong (Senying)

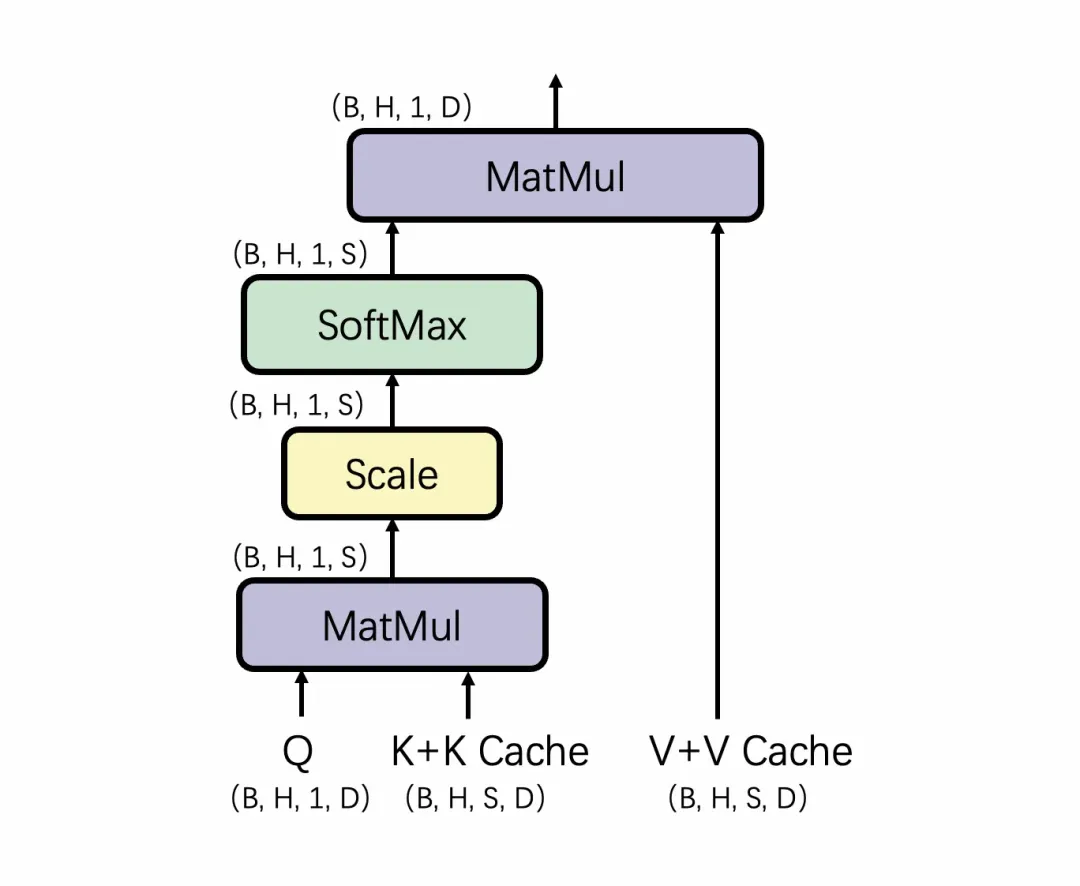

With the wide application of large language models, how to build high-performance inference services at low cost has increasingly become the focus of the industry. RTP-LLM is a Large Language Model inference acceleration engine developed by Alibaba's Intelligence Engine team. It has been widely used within Alibaba Group and has accumulated some practical experience. In the previous article LLM Inference Acceleration: GPU Optimization for Attention in the Decode Phase, we analyzed the current MMHA calculations on the GPU:

The whole computing process is similar to the concatenation of two MatMul. The implementation of MHA is equivalent to that of Batch GEMV with batch size = B * H, which is a memory bound issue. The implementation of GQA is equivalent to that of Batch GEMM with batch size = B, which is likely to fall into the compute bound.

As described earlier, SoftMax, while less computationally intensive, limits the task division of MMHA calculations. In the scenario of long seq, we will adopt the idea of flash decoding to split on S to increase the occupancy. However, in the scenario of medium seq (1k-2k), the revenue of splitting S may not be enough to cover the reading and writing overhead of the newly added buffer. At this point, the memory load efficiency is relatively low, and the seq of a large number of LLM tasks undertaken by our platform are distributed in this interval.

Therefore, in this article, we will take the implementation of MMHA on A10 as an example to briefly discuss how to further improve the calculation performance of MMHA in this interval.

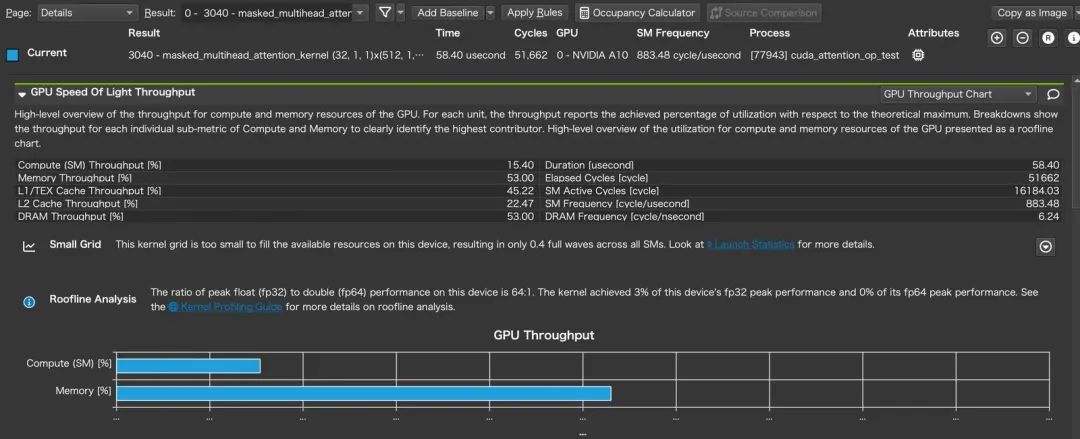

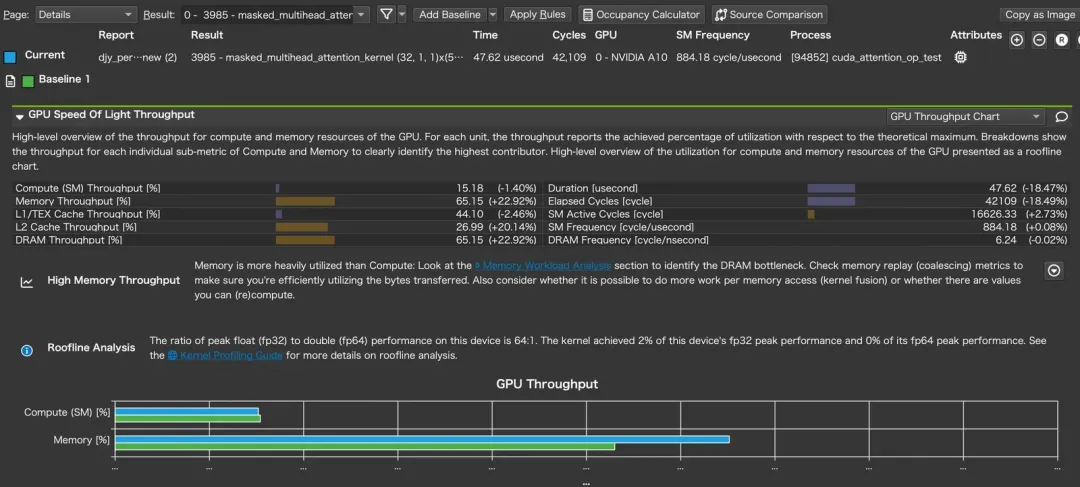

Let's take a look at the initial performance first: A10, B=1, H=32, D=128, S=1024, execution time=58.40us.

At present, the bottleneck of the kernel lies in memory bound so the most important modification should be memory load. Before making any changes, let's adjust the code first. In addition to streamlining some code and removing some features that have been iterated and are no longer used, we also put the relatively complex calculation of Rotary Embedding into the template parameters.

Our inference framework, RTP-LLM, currently supports a variety of different Rotary Embedding, from basic Llama, to slightly changed ChatGLM, Linear, Dynamic NTK, to complex Yarn and Llama 3: although only one implementation will be used in the actual calculation, various branches show higher register usage and thus reduced occupancy in the compiled code. Therefore, we judge that there is a potential benefit to expanding ROPE.

However, since MMHA itself also needs to generate a large number of instances based on various data types and head_size passed in, the number of instances is multiplied several times by expanding ROPE. For this reason, we also split the compiled so to avoid the issue of symbol table overflow.

Take a look at the performance benefits from a simple expansion:

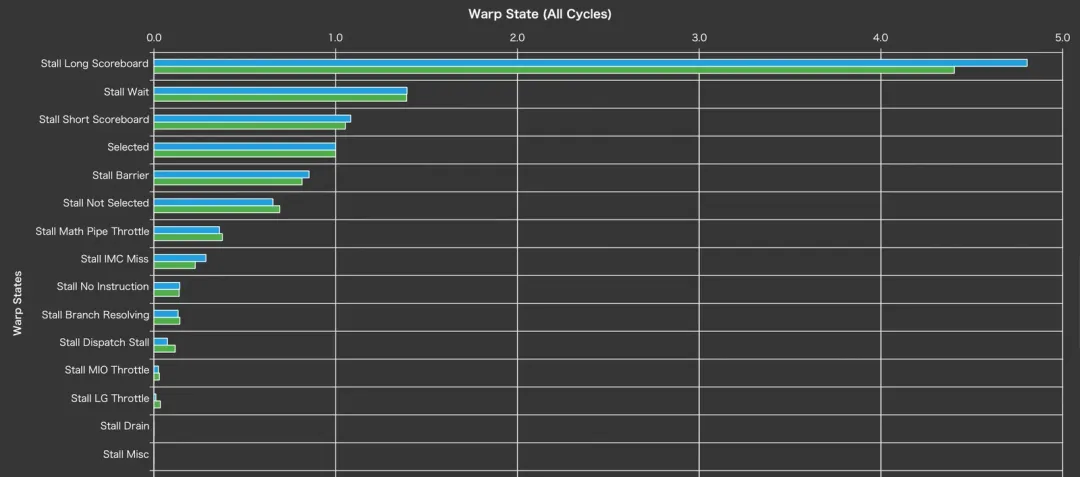

Looking at the stall situation at this time, a large proportion of stall long scoreboard still reminds us that memory load must be the bottleneck of this kernel:

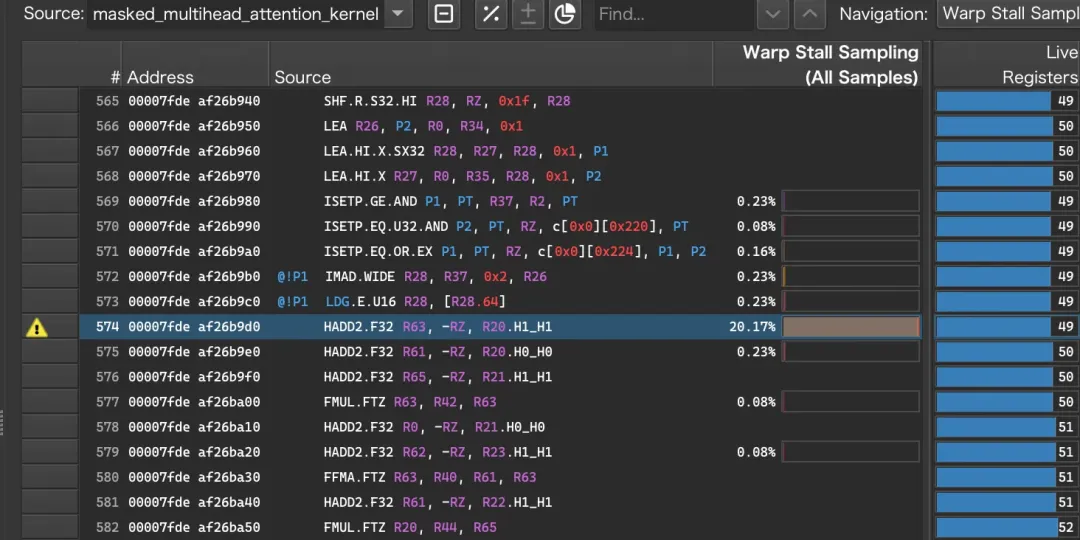

Specifically, almost every stall is in HADD2. HADD2 here is to perform calculations on the KV Cache loaded from the global. It is reasonable to infer that the stall here is because the data has not been retrieved. Therefore, the key to optimization is to improve the load efficiency.

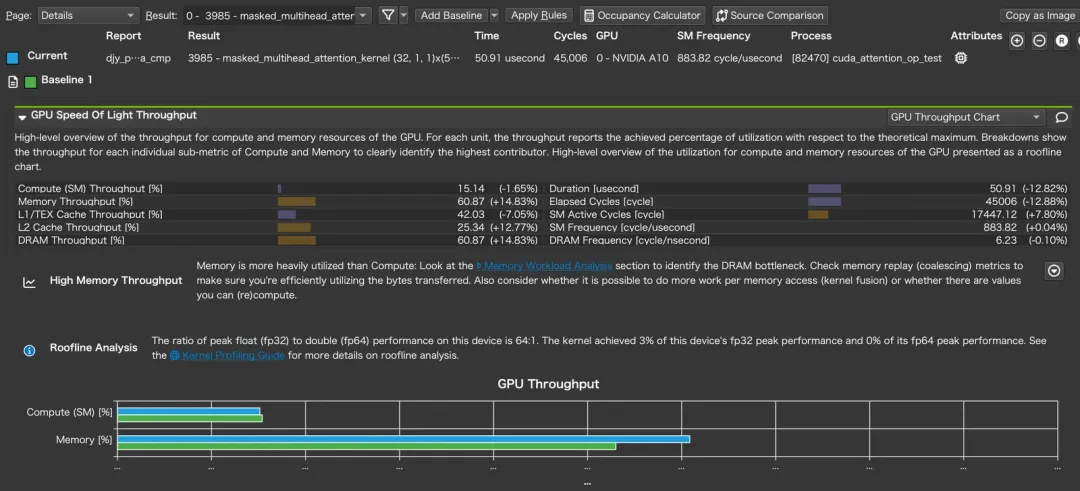

For the most critical memory bound issue, we should start with improving the load efficiency. According to the previous analysis, the load efficiency of the basic version of MMHA is not low. Under the task allocation determined by the computing characteristics, each thread group performs a continuous load of 256 Bytes according to each thread 16 Bytes, and each thread caches some data through the register, leading to a certain degree of overlap in data loading and computing. If you want to further improve the load efficiency, the best solution is to make the data cache more advanced.

However, due to the relatively complex calculation of MMHA, the register usage must also be strictly controlled. Using the register to cache data in advance will affect the occupancy due to increased register usage. Therefore, we use shared memory to cache data in advance. At the same time, in Ampere and later, the enhanced data load efficiency (cp.async) from global to shared memory also further improves the load efficiency.

Of course, this optimization method similar to multi stage in GEMM will be limited by the capacity of shared memory, thus affecting the stage depth. In addition, shared memory is also used to store some results of QK dot and output: K Cache and V Cache can reuse SMEM buffer; while the results of V Cache and QK dot cannot be reused. Therefore, the optimization of loading into smem in advance has significant benefits on medium-length S. With the expansion of S, it should comply with the split of S.

The improvement brought by the change of load strategy is very obvious:

In contrast, the issue of stall long scoreboard is somewhat more eased:

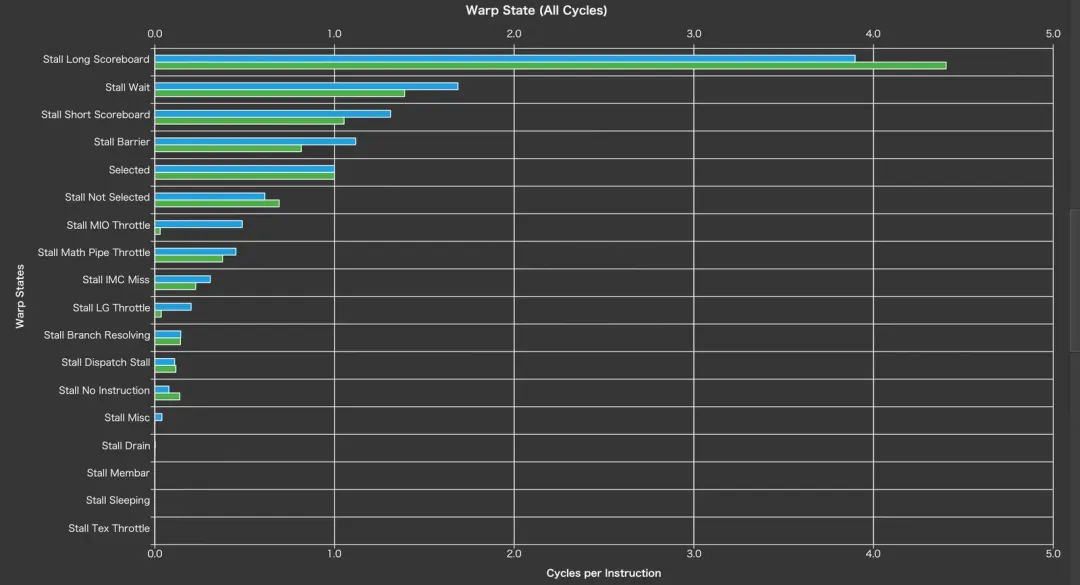

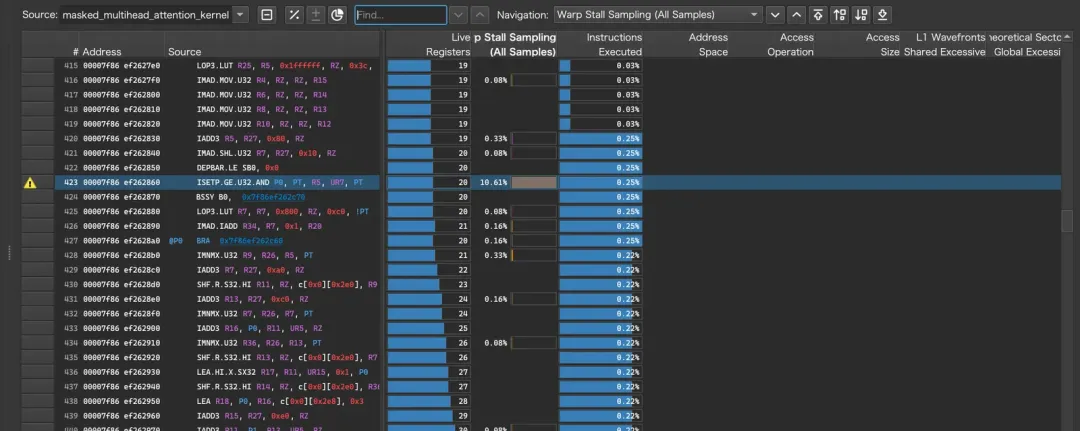

Now the warp stall is in ISETP. The analysis shows that the stall shown here should still be the wait of stall after memcpy async.

Generally speaking, if the stall is in async memcpy, on the basis of merging memory accesses and fixed stage depth, it is actually very difficult for us to do more optimization besides allocating tasks in a better way to transmit enough load instructions: after all, load still mainly depends on bandwidth. However, the implementation of MMHA is slightly different. The main difference here is the non-continuous KV Cache.

In the previous analysis, we only introduced continuous KV Cache storage; However, in the actual implementation, we use a storage method of PageAttention similar to vLLM, because completely continuous KV Cache cannot meet the actual service requirements. In a continuous KV Cache, the layout stored in both the K Cache and the V cache is (B, H_kv, S, D).

This storage method either allocates a buffer according to the longest seq at the beginning, which is likely to cause a waste of video memory; or it allocates a new buffer as the seq increases and copies the original KV Cache to the new buffer during the generation process, which will lead to a significant increase in latency and potential video memory fragmentation. PageAttention divides the KV Cache into fixed-size Block Caches. Each Block Cache contains a KV Cache of fixed length. If the length is num_tokens, the layout of each Block can still be expressed as (B, H_kv, s, D), where the value range of s is [0 : num_tokens].

In this storage method, when we load KV Cache, we need to calculate the block in which the corresponding KV Cache is located, and then calculate the address of seq corresponding to the block. The load efficiency of this secondary addressing method is not as good as that of the continuous KV Cache. We adjusted the order of the loop during calculation, reduced the repeated load of repeated KV Cache Block pointers, and loaded the KV Cache Block pointers in advance, which also helped improve kernel performance:

Finally, we also interspersed some tuning tips in the optimization process:

PTX ISA provides a series of instructions to control cache behavior relatively finely, such as the commonly used .ca (cache at each level) and .cg (bypass L1, cache only at L2). The strategy is determined based on whether the loaded data will be used multiple times. Furthermore, in MMHA calculation, each number in the KV Cache is actually accessed only once. In addition to bypassing L1, we can use evict_first as a cache hint to further improve load efficiency.

The forceinline forces the compiler to inline functions, which will help the compiler to complete instruction rearrangement and further optimize instructions, such as optimizing FMUL + FADD to FFMA.

The individual improvements of these small tricks are relatively limited, so this article will not elaborate on them as an optimization point.

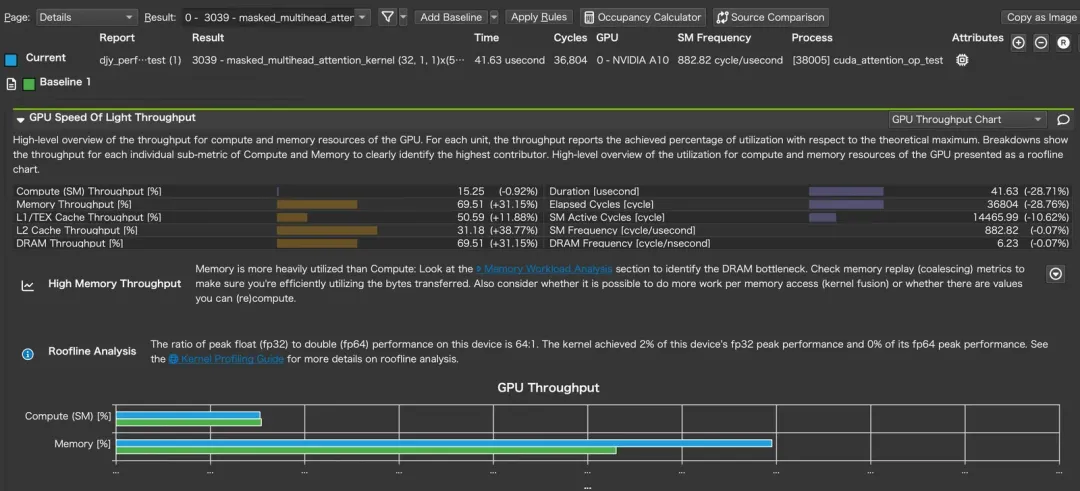

Finally, we can see that after a series of optimization explorations, the execution time of the same kernel has been reduced from 58.4us to 41.64us; the memory efficiency has also been improved to 69.5% (which is a statistical value).

In this article, we only take A10 as an example to introduce how to optimize MMHA in specific seq scenarios. However, the performance bottlenecks of the kernel are different on different cards, in different seq scenarios, or under the difference between MHA and GQA. Correspondingly, the optimization strategies should also be different. For example, GQA should change the task division, A100 should start from the perspective of increasing occupancy, and H100 may need to think about how to make good use of Hopper's new feature.

Our optimization attempts will persist. In the future, we will continue to explore more about LLM optimization strategies and practical experience, and share them with you in the RTP-LLM project. Welcome to exchange your ideas.

[01] flash decoding

https://crfm.stanford.edu/2023/10/12/flashdecoding.html

[02] RTP-LLM

https://github.com/alibaba/rtp-llm

[03] vLLM

https://github.com/vllm-project/vllm

[03] PTX ISA

https://docs.nvidia.com/cuda/parallel-thread-execution/index.html#

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.

A Brief Introduction to Getting Started and Practicing with Elasticsearch

1,348 posts | 478 followers

FollowAlibaba Cloud Community - October 10, 2024

ApsaraDB - December 29, 2025

ApsaraDB - February 4, 2026

Alibaba Container Service - January 15, 2026

Alibaba Cloud Native Community - September 25, 2023

Alibaba Container Service - February 26, 2026

1,348 posts | 478 followers

Follow Bastionhost

Bastionhost

A unified, efficient, and secure platform that provides cloud-based O&M, access control, and operation audit.

Learn More Managed Service for Grafana

Managed Service for Grafana

Managed Service for Grafana displays a large amount of data in real time to provide an overview of business and O&M monitoring.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More AI Acceleration Solution

AI Acceleration Solution

Accelerate AI-driven business and AI model training and inference with Alibaba Cloud GPU technology

Learn MoreMore Posts by Alibaba Cloud Community