In order to win this inevitable battle and fight against COVID-19, we must work together and share our experiences around the world. Join us in the fight against the outbreak through the Global MediXchange for Combating COVID-19 (GMCC) program. Apply now at https://covid-19.alibabacloud.com/

Written by Wang Jinzhou, who's nicknamed Qianjie at Alibaba. Wang is a Senior Engineer at AliENT.

Due to the coronavirus epidemic, the start of the spring semester for all elementary, middle, and high schools in China was delayed after Chinese New Year. In response, Youku announced the launch of its "Homeschool Plan" to provide free live-streaming tools for teachers, since reopening schools wasn't an option. Live classrooms launched on Youku as early as February 10.

To deliver a superior live-streaming experience for China's educators, Youku had to keep live streams smooth and interactive despite larger-than-normal demand on its systems.

With this in mind, the Youku live streaming media team looked into various methods to reduce streaming latency on the site to a level that viewers would barely notice. Their goal was to reduce the average latency of live streams from around 3 seconds to less than 0.6 seconds.

In the end, they achieved this striking goal, despite a dramatic increase in demand, by optimizing live-streaming classrooms. In the remainder of this article, Wang Jinzhou, a senior engineer from the Alibaba Entertainment team, shares how the team did it.

Interactive live streaming scenarios involve a primary live-streamer, possibly a secondary live-streamer, and their viewers, or online audience. Conceptually, the relationships between these three fundamental roles make up the various interactive scenarios.

Therefore, we need to improve the interaction between the three roles during live streams so that live-streamers and their viewers get a consistent user experience. The Youku live streaming media team looked into methods to reduce streaming latency so that the latency between primary and secondary live-streamers is less than 0.3 seconds and the latency between live-streamers and viewers is less than 0.6 seconds. In the end, they were able to solve various interaction problems in live-stream rooms.

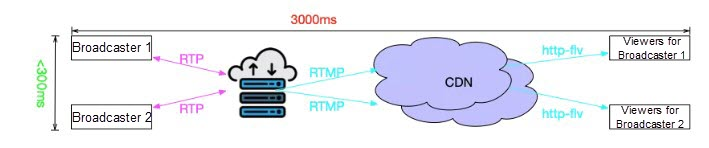

In traditional live streaming solutions, the real-time transmission latency between live-streamers is less than 0.3 seconds. However, the overall latency between live-streamers and viewers is normally around 3 seconds in a link transmission or content delivery network (CDN) distribution mode, as shown in the following diagram:

Live stream latency is caused by the Real-Time Messaging Protocol (RTMP) transmission link, the CDN connection, and the player buffer. To improve the user experience and reduce latency, we needed to modify these three stages, in particular the CDN that controls the transmission link. However, in most cases it is not possible to modify CDN connections.

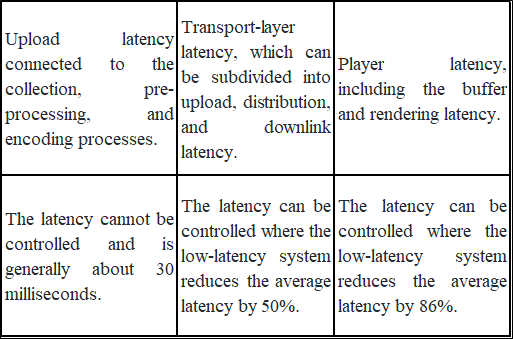

Based on this analysis, we can draw the following conclusions:

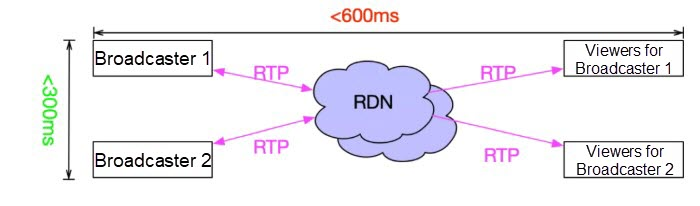

To address the above issues, we developed a straightforward, if blunt, solution: build a streaming media transmission system that allows control of the entire process, illustrated below:

Simply doing what seems obvious often produces disappointing results. This design applies CDN concepts to restructure the entire real-time communication system, combining the high concurrency of CDN distribution with the low latency of real-time communication. Real-time communication systems and CDNs are both complex, and a system that integrates the two has many hidden pitfalls.

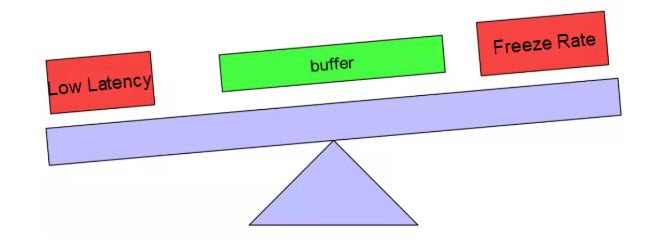

There are further challenges. In the actual engineering of the network infrastructure, we must reduce latency and ensure smoothness, which are often contradictory goals. To reduce latency, we need to shrink the buffers on the playback side and the server side, which can increase instances of freezing. Conversely, to make playback smoother, we must increase the buffer, which also increases latency. So how can we solve this kind of problem?
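The trade-off can be made concrete with a toy model. The sketch below is not Youku's player: it assumes a made-up list of per-frame network delays and a hypothetical `count_late_frames` helper, and simply counts how many frames would miss their playback slot for a given playout delay.

```python
from typing import List


def count_late_frames(delays_ms: List[float], playout_delay_ms: float) -> int:
    """Count frames whose network delay exceeds the playout delay.

    In this toy model a frame sent at time t is played at t + playout_delay_ms,
    so any frame that takes longer than that to arrive causes a freeze.
    """
    return sum(1 for delay in delays_ms if delay > playout_delay_ms)


# Illustrative, made-up network delays for ten consecutive frames (ms).
delays = [40, 55, 48, 120, 60, 45, 300, 52, 47, 90]

for playout in (50, 100, 200, 400):
    late = count_late_frames(delays, playout)
    print(f"playout delay {playout:>3} ms -> {late} late frame(s)")
```

With these sample delays, a 50 ms playout delay leaves 6 frames late, while 400 ms leaves none: a larger buffer buys smoothness at the direct cost of latency.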

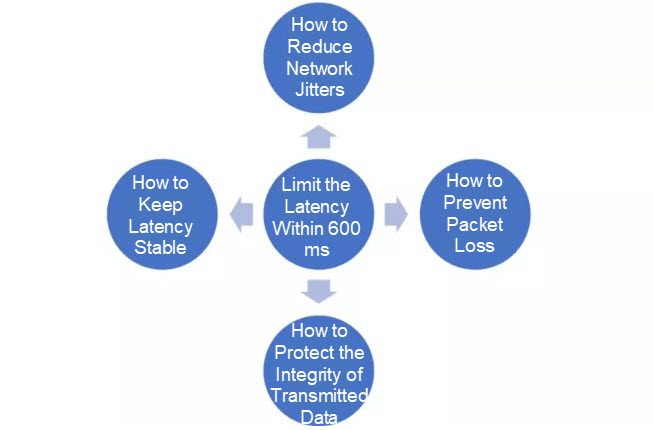

The low-latency live streaming system combines technologies such as CDN connections, a private real-time communication protocol, WebRTC, and cloud-native infrastructure to meet the demanding requirements of specific business needs. The technical problems are illustrated in the following figure:

From the above, we can see that only the transport-layer latency and player buffer latency are controllable. In low-latency projects, the RDN system controls the entire transmission process and optimizes every detail. It reduces latency to less than 0.2 seconds, or more precisely 118 milliseconds. The low-latency player adaptively adjusts the buffer size and strictly controls the audio and video speed. It ensures that latency does not exceed 415 milliseconds while maintaining smooth playback. We can control the target latency of the player to prioritize either smooth playback or low latency. This meets the needs of both live-streamer on-mic interaction and viewer interaction scenarios.
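One common way to hold a player near a target latency is to nudge the playback speed according to how much media is buffered. The sketch below is an assumption about how such a controller could look, not the actual player logic: `playback_rate`, `simulate`, the gain, and the 10% speed limit are all hypothetical.

```python
def playback_rate(buffered_ms: float, target_ms: float,
                  gain: float = 0.0005, max_adjust: float = 0.1) -> float:
    """Return a playback speed multiplier around 1.0.

    More buffered media than the target means we are lagging behind live,
    so we play slightly faster; less than the target means we risk a freeze,
    so we play slightly slower. The adjustment is clamped to +/- max_adjust
    so the speed change stays small.
    """
    error = buffered_ms - target_ms
    adjust = max(-max_adjust, min(max_adjust, gain * error))
    return 1.0 + adjust


def simulate(buffered_ms: float, target_ms: float, ticks: int,
             tick_ms: float = 100.0) -> float:
    """Step the controller and return the final buffer level in ms."""
    for _ in range(ticks):
        rate = playback_rate(buffered_ms, target_ms)
        # Media arrives at 1x real time and is consumed at `rate`,
        # so the buffer changes by tick_ms * (1 - rate) per tick.
        buffered_ms += tick_ms * (1.0 - rate)
    return buffered_ms


if __name__ == "__main__":
    print(playback_rate(1000, 300))   # far too much buffered: speed up
    print(playback_rate(0, 300))      # buffer empty: slow down
    print(simulate(800, 300, ticks=200))
```

Lowering `target_ms` prioritizes low latency, and raising it prioritizes smooth playback, which mirrors the tunable target latency described above.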

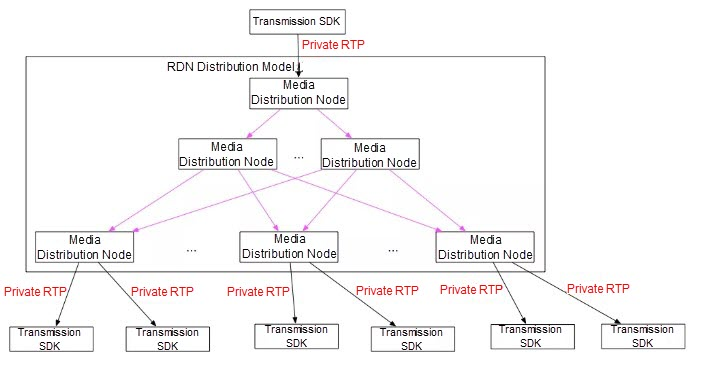

For traditional CDN and video conferencing systems, you can look up their details online. The resource delivery network (RDN) system is a system that integrates the CDN architecture and uses video conference media servers as nodes. RDN uses lazy loading to transmit media. When the live-streamer transmits the stream to the receiving edge node and a viewer plays the stream, the system returns the nearest edge node through global server load balancing (GSLB) and performs resource indexing to quickly distribute the stream to the transmission SDK on the player. The following figure illustrates the distribution of media streams:
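The lazy-loading idea can be sketched as follows. This is a minimal illustration under stated assumptions, not the RDN implementation: `EdgeNode`, `pick_nearest`, the node names, and the round-trip times are all hypothetical, and the "nearest" node is simply the one with the lowest measured round-trip time.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class EdgeNode:
    name: str
    active_streams: Set[str] = field(default_factory=set)
    upstream_pulls: int = 0  # how many times this node fetched from upstream

    def serve(self, stream_id: str) -> str:
        """Serve a stream, pulling it from upstream only on first request."""
        if stream_id not in self.active_streams:
            # Lazy loading: nothing is transferred until a viewer asks.
            self.upstream_pulls += 1
            self.active_streams.add(stream_id)
        return f"{self.name}/{stream_id}"


def pick_nearest(nodes: List[EdgeNode], rtt_ms: Dict[str, float]) -> EdgeNode:
    """Stand-in for GSLB: choose the node with the lowest round-trip time."""
    return min(nodes, key=lambda node: rtt_ms[node.name])


nodes = [EdgeNode("edge-a"), EdgeNode("edge-b")]
viewer_rtt = {"edge-a": 12.0, "edge-b": 35.0}  # made-up measurements

nearest = pick_nearest(nodes, viewer_rtt)
nearest.serve("classroom-42")  # first viewer triggers the upstream pull
nearest.serve("classroom-42")  # second viewer reuses the same stream
print(nearest.name, nearest.upstream_pulls)
```

Nodes that no viewer is routed to never pull the stream, so upstream traffic scales with where the audience actually is rather than with the number of edge nodes.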

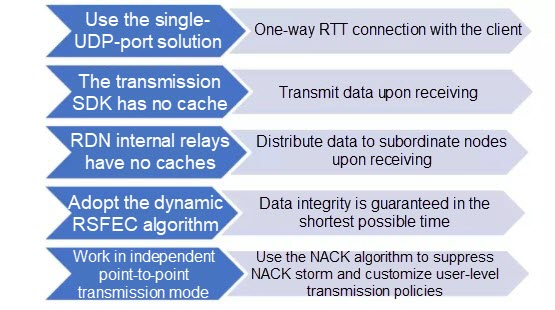

Transmission latency and playback latency are the only two factors that we can control. Under this premise, we use the following methods to minimize transmission latency:

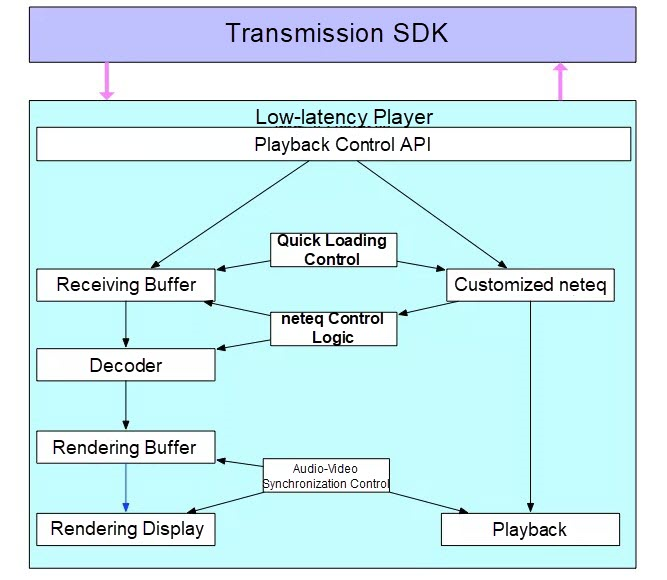

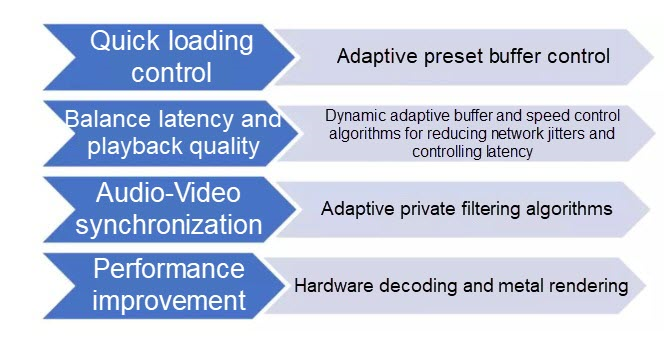

Low-latency players constitute an important part of latency control in the system. They are the only components that can control and adjust the latency. Compared with traditional players, low-latency players ensure low latency and meet user requirements for smoothness, quick loading, and audio-video synchronization. The low-latency player architecture is shown below:

The low-latency player uses a customized, optimized NetEQ (the jitter buffer from WebRTC) to mitigate network jitter, packet loss, and similar problems, significantly improving the user experience on weak networks. The filter-based audio-video synchronization solution ensures that audio and video converge efficiently.
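A jitter buffer needs some estimate of how irregular packet arrivals are before it can size itself. The sketch below does not reproduce NetEQ's internals; it substitutes the simpler interarrival jitter estimator defined in RFC 3550 (the RTP specification), applied to made-up send and receive timestamps in milliseconds. The function name `interarrival_jitter` is hypothetical.

```python
from typing import Sequence


def interarrival_jitter(send_ms: Sequence[float],
                        recv_ms: Sequence[float]) -> float:
    """Running jitter estimate in the style of RFC 3550, section 6.4.1.

    For each consecutive packet pair, D is the change in transit time:
    (receive spacing) minus (send spacing). The estimate moves 1/16 of
    the way toward |D| on every packet, which smooths out single spikes.
    """
    if len(send_ms) != len(recv_ms):
        raise ValueError("send and receive lists must have the same length")
    jitter = 0.0
    for i in range(1, len(send_ms)):
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16.0
    return jitter


# Packets sent every 20 ms; the third one arrives 16 ms later than expected.
print(interarrival_jitter([0, 20, 40], [50, 70, 106]))
```

A player could then set its buffer target to some multiple of this estimate, growing the buffer on a jittery network and shrinking it when arrivals are steady. That policy is an assumption here, not something the article specifies.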

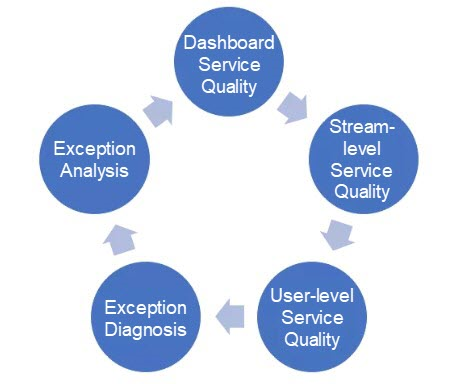

The Bian Que system collects logs from clients and servers to monitor end-to-end service quality and troubleshoot various online problems.

In scenarios where the smoothness and the quick loading rate are no less than those of traditional live streaming solutions, the interaction latency indicator shows a reduction of 86%. Since it achieved stable operation in 2017, the system has provided live stream users with high-quality interactive live-stream rooms that feature low-latency interaction, click-to-see, and clear and smooth playback. It also supports head-to-head talent contests, providing low-latency live streaming for various scenarios and ensuring the smooth operation of various competitions over the past two years.
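The article does not say how the 86% figure was computed. One reading that is consistent with the numbers quoted earlier is to compare the roughly 3-second latency of traditional solutions with the 415-millisecond upper bound of the low-latency player. The short check below works through that arithmetic; the choice of these two figures is an assumption.

```python
def reduction_percent(before_ms: float, after_ms: float) -> float:
    """Percentage reduction going from before_ms to after_ms."""
    return 100.0 * (before_ms - after_ms) / before_ms


# Assumed inputs: ~3 s traditional latency, 415 ms low-latency player bound.
print(round(reduction_percent(3000, 415), 1))  # 86.2
```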

The low-latency live streaming system was created for interactive live streaming by integrating the traditional live-streaming technologies, along with real-time communication technology and WebRTC technology. By synchronizing text and audio-video playback, it can support various types of real-time audience interaction with zero waiting time. Looking back on the development of the system, I realize some of my technical ideas have changed over time. I think back to my first exposure to these three technologies:

Later, as I learned more about the technical details, the needs of interactive live streaming services, and the pain points in the development process, I began to think that we could use these technologies to move forward. This brought me to the conclusion that an in-house low-latency live streaming system could solve these problems once and for all. After concluding that we were heading in the right direction, we decided that we would push forward to see where this road would lead. Ultimately, we arrived at our goal.

While continuing to wage war against the worldwide outbreak, Alibaba Cloud will play its part and will do all it can to help others in their battles with the coronavirus. Learn how we can support your business continuity at https://www.alibabacloud.com/campaign/supports-your-business-anytime
