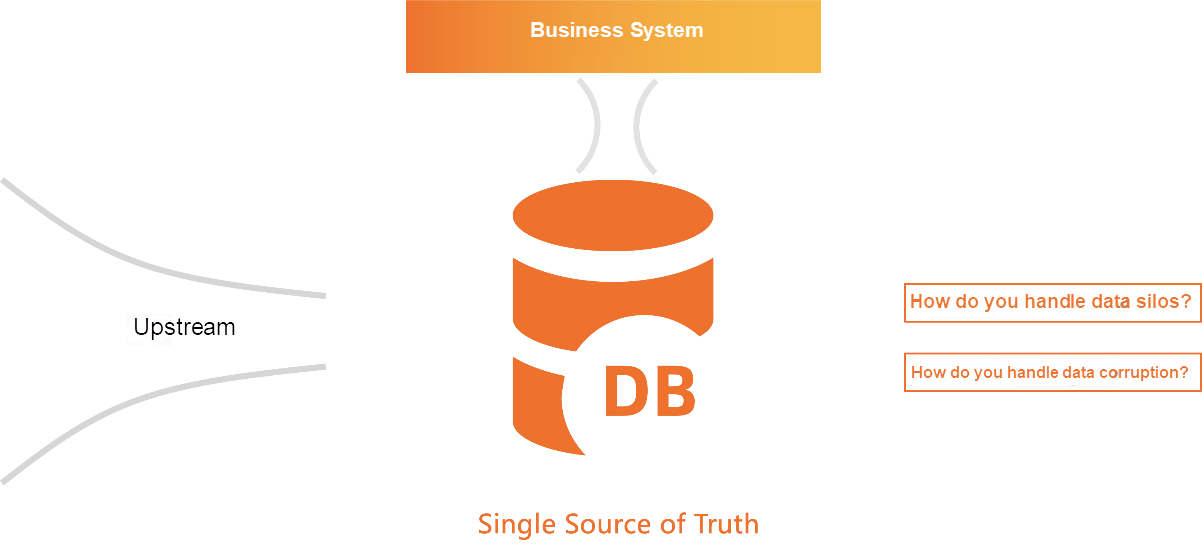

As developers, we're all familiar with the background system, which can be broken down into two components. One is the business system, responsible for processing the system's business logic. In modern architecture, this part is typically designed as a horizontally scalable, stateless node. The other is the database system, responsible for storing the system's state, including the core business data. From the database's perspective, data inflow consists of two parts. One is the real-time writing of the business system, which is the main source of core data. The other is data imported from the upstream data system, either once or periodically. Since this core data is generated here for the first time, this database is also referred to as the Single Source of Truth (SSOT).

SSOT is the most important data asset in the background system, leading to two primary challenges that need to be addressed carefully. The first issue is that, as a key asset, this data often requires real-time synchronization with other systems for further processes like BI analysis. Without a real-time synchronization mechanism, this data will become a data silo. Second, this data may be compromised due to various reasons, such as hardware failure, software bugs, improper operation, or incorrect SQL, which can lead to data corruption or disorder. Therefore, it is essential to provide multiple mechanisms to ensure the safety of this data.

PolarDB-X is a distributed database service that is highly compatible with the MySQL ecosystem, so let's first look at how MySQL solves the problem of data silos.

MySQL uses the binlog mechanism to synchronize incremental data with downstream systems in real time. A binlog can be regarded as a message queue that stores detailed incremental data changes in MySQL in sequence. By consuming this queue, downstream systems or tools synchronize data from MySQL in real time. This mechanism is also referred to as Change Data Capture (CDC).

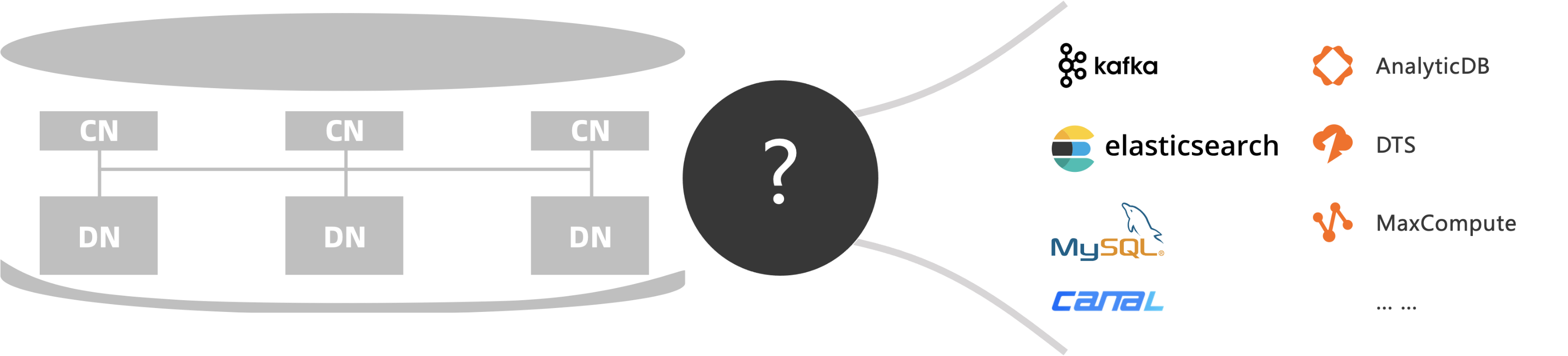

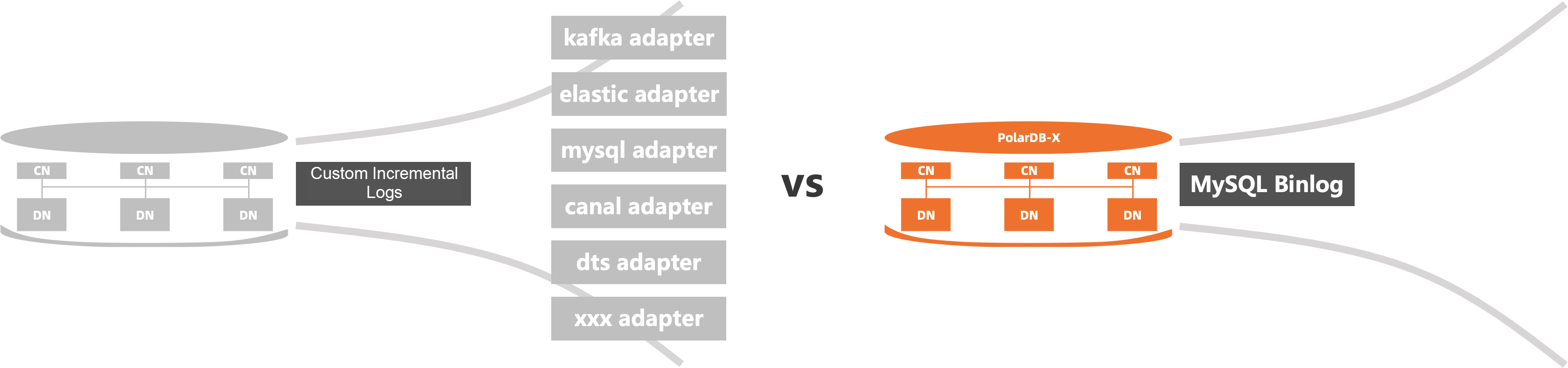

Compared with a standalone database, it is more complex to provide the CDC capability for a distributed database. A distributed database usually contains multiple nodes and these nodes generate multiple incremental log queues. If the downstream needs to consume multiple queues, several problems are involved.

Faced with these problems, the CDC capability of the distributed database needs to perform a trade-off in terms of implementation difficulty, supported features, and ease of use. In general, it is easy to implement a solution that provides the downstream with multiple queues, not guaranteed transaction integrity but only final consistency, and incremental logs in a custom format. However, this solution imposes higher requirements on downstream consumption, such as developing corresponding consumption codes or tools and considering the collaboration of multiple queues. A more user-friendly way is to provide the same CDC capability as MySQL Binlog so that the downstream can consume incremental changes of the distributed database as transparently as it consumes MySQL binlogs. This greatly reduces the cost of building a data synchronization process. This is also the solution adopted by PolarDB-X 2.0, which is referred to as a global binlog.

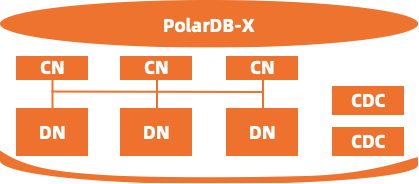

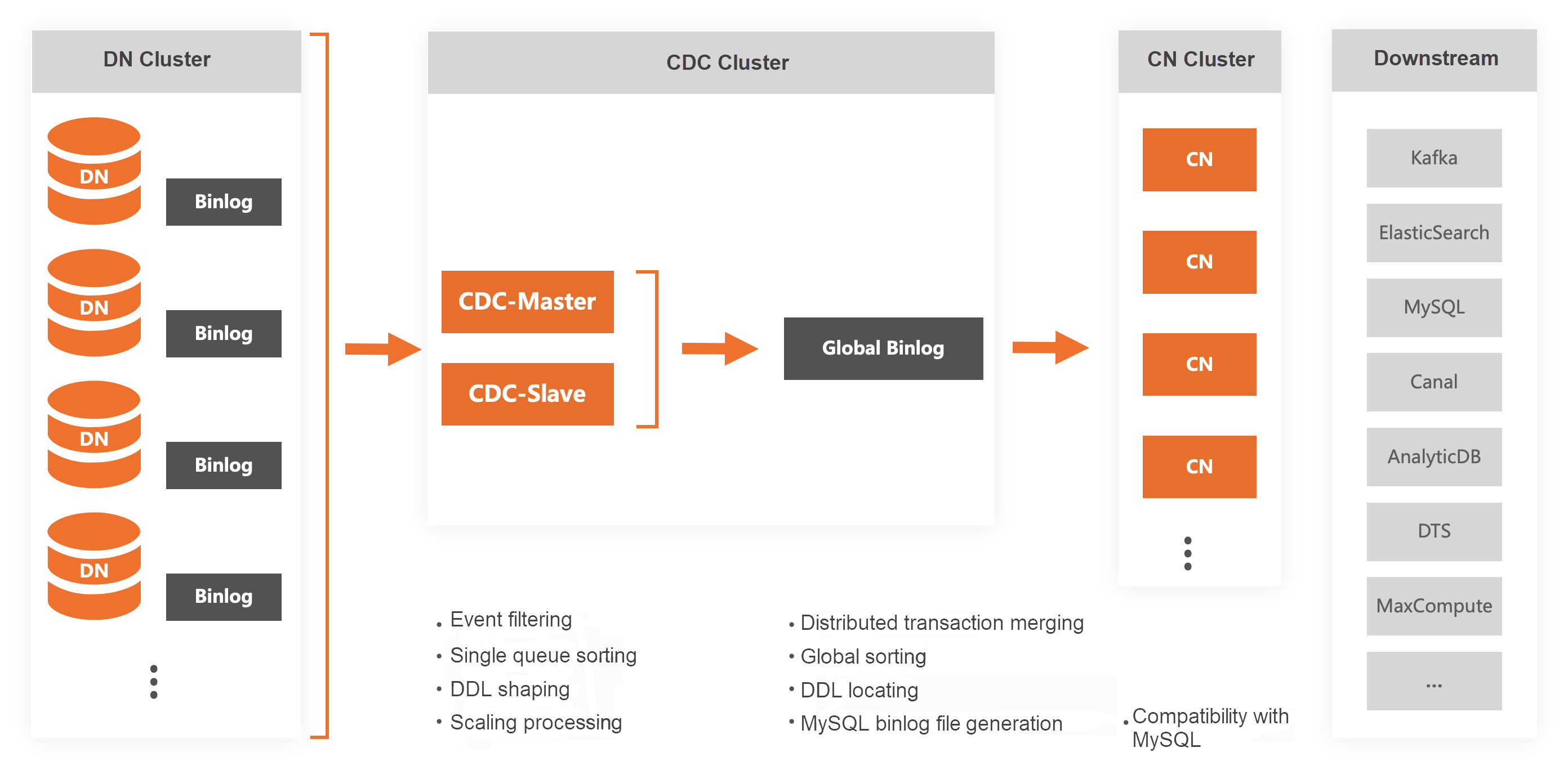

PolarDB-X 2.0 adopts a horizontally scalable Share-Nothing architecture. The basic constituent unit of the system is the node, and each node can be divided into two parts: the compute node (CN) and the data node (DN). As the preceding figure shows, the CDC component is added to PolarDB-X 2.0 to provide the global binary capability. CDC is a cluster with elastic capabilities.

The global binlog generation process can be divided into three stages:

After the preceding three stages, PolarDB-X 2.0 implements the global binlog capability that is fully compatible with MySQL binlogs.

For data corruption, PolarDB-X 2.0 provides data restoration at different levels, including instance-level consistent backup and restoration, table-level table recycle bin, SQL flashback, and row-level Flashback query. The following describes the features and scenarios of these four capabilities.

First, let's take a look at consistent backup and restoration. This feature allows you to restore historical data of an instance to a specified point in time that is accurate to the second. In a standalone database, full data and incremental logs can be stored on one machine. Therefore, if you want to implement consistent backup and restoration, you only need to back up full data and incremental logs. However, if you want to implement consistent backup and restoration in a distributed database, it will be additionally complex because full data and incremental logs are stored on multiple machines.

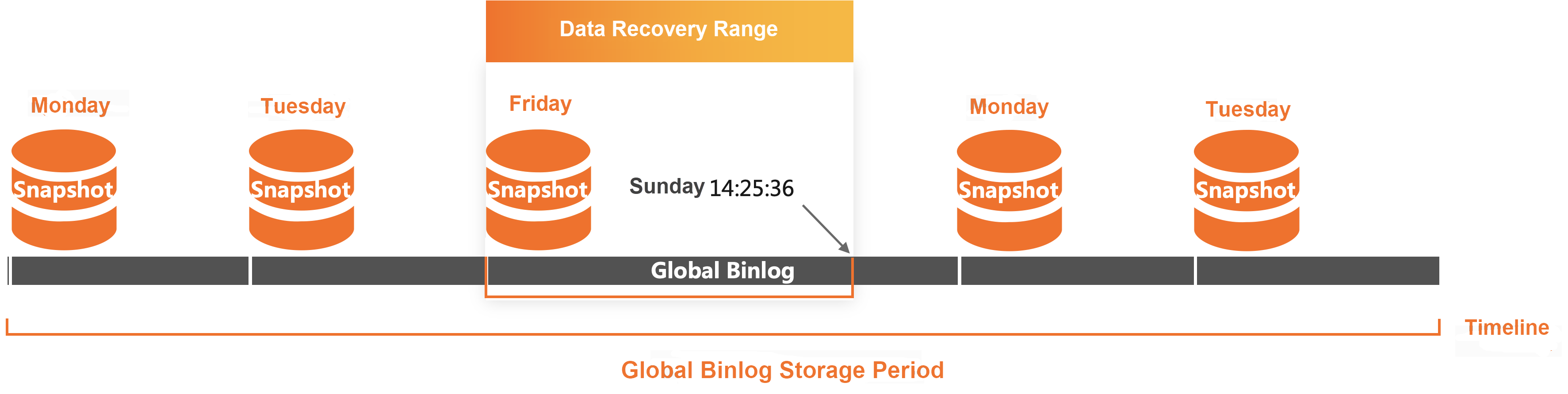

PolarDB-X 2.0 implements consistent backup and restoration by performing full backup and global binlogs for all data nodes.

Taking the above figure as an example, we have a PolarDB-X 2.0 instance that is backed up at 00:00 every Monday, Tuesday, and Friday. If you want to restore the instance data of 14:25:26 on the previous Sunday, the system will select a full backup set that is generated at a point in time that is closest to the recovery time point. The selected point in time is 00:00:00 on the previous Friday. Then, the system replays global binlogs from 00:00:00 of the previous Friday to 14:25:26 of the previous Sunday to obtain the required snapshot.

The consistent backup and restoration capabilities of PolarDB-X 2.0 do not lock the database during backup. The capability depends on global binlogs, that is, the range that can be restored is the storage range of global binlogs. The capability currently has several limitations, such as no scaling during backup and only support for homogeneous restoration.

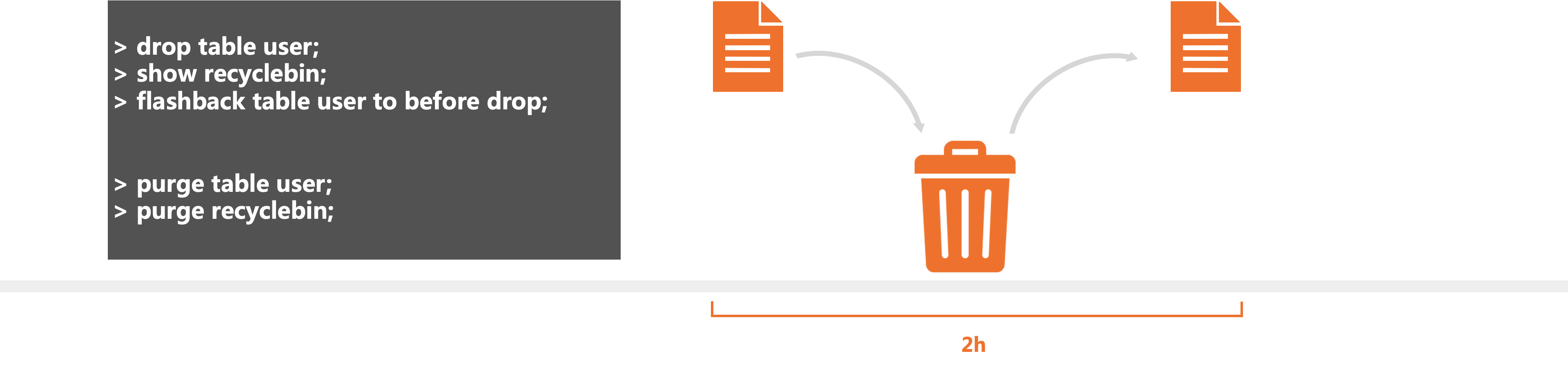

The second data restoration capability provided by PolarDB-X 2.0 is the table recycle bin. As the name implies, we temporarily put the DROP table into a recycle bin. If the table needs to be restored within two hours, then it can be retrieved from the recycle bin. The table recycle bin provides complete management functions, such as viewing all tables in the recycle bin, completely deleting a table, and restoring a table. The recycle bin caches only tables that are deleted within two hours. You cannot call the TRUNCATE TABLE operation to retrieve tables that are deleted.

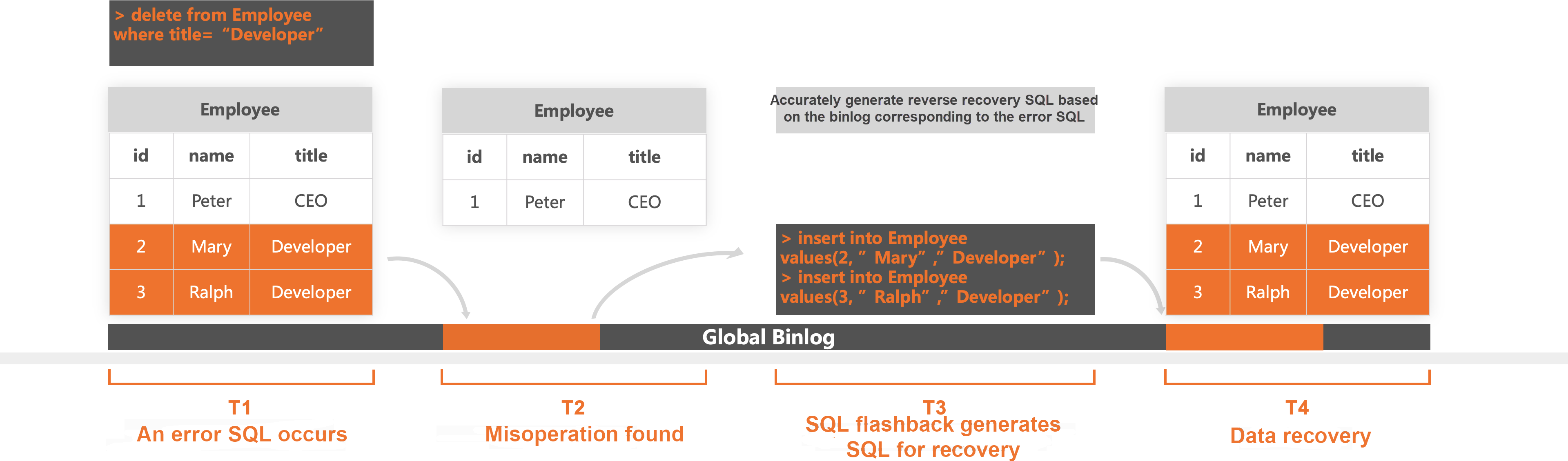

The third data restoration capability provided by PolarDB-X 2.0 is the SQL Flashback. This function can accurately restore data that is affected by an incorrect SQL statement. This capability is also provided in PolarDB-X 1.0. Since its launch, this capability has helped many users who accidentally deleted data to retrieve data. It is a widely recognized data restoration capability.

Let's take an example to introduce the specific use process of this capability.

As shown in the above figure, at T1, we wanted to delete the record with the title "Developer" and the name "Ralph", but we forgot to add "name='Ralph'" in the WHERE condition, resulting in the record with the name "Mary" being deleted together. The two deletion events and the ID of the corresponding SQL are recorded in global binlogs.

At T2, we specified the accidental operation and found the corresponding SQL and ID through the PolarDB-X auditing function.

At T3, we generated the recovery SQL by using the SQL ID and the SQL flashback capability. The principle of SQL flashback is that after you obtain the SQL ID, you can search global binlogs to find all the change events (in this example, two deletion events) corresponding to the SQL, and then generate reverse recovery SQL one by one.

At T4, we restored the two pieces of data that were accidentally deleted after SQL execution.

SQL flashback provides accurate data restoration for SQL accidental operation scenarios. It can be seen that the period that can be restored depends on the storage period of global binlogs.

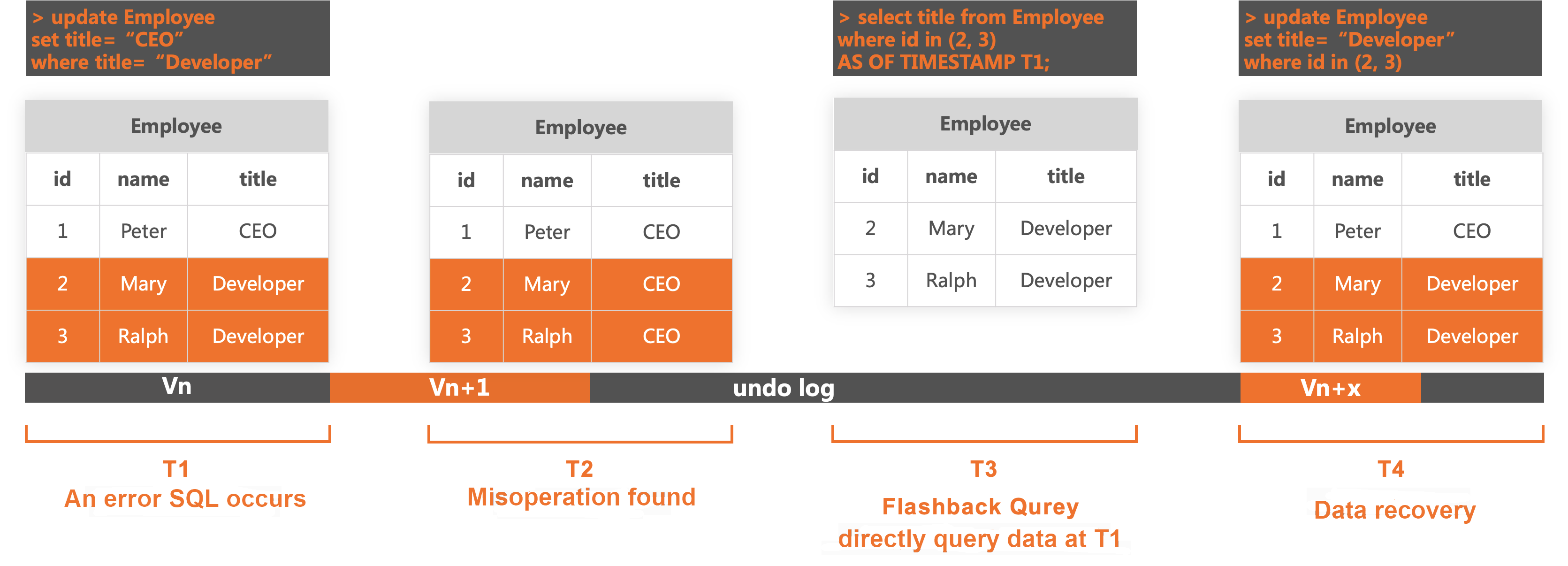

The fourth data restoration capability provided by PolarDB-X 2.0 is Flashback Query. This capability provides accurate data restoration at the row level within a certain time range. We will also take the SQL accidental operation scenario as an example.

As shown in the above figure, at T1, we wanted to update the title "Developer" of the record with the name "Ralph" to "CTO", but we forgot to add "name='Ralph'" in the WHERE condition, resulting in all the records with the title "Developer" being updated to "CTO". These changes will be recorded in the undo log with version Vn+1 (undo log is a basic data structure in the database, in which changes of each row of data are recorded in detail. You can simply compare it to a GIT commit log).

At T2, we immediately specified the accidental operation and determined the operation time and the data range affected.

At T3, we directly queried the correct values of the affected two rows at T1 through Flashback Query.

At T4, we revised the data based on the correct values returned by the Flashback Query.

It can be seen that the Flashback Query capability depends on the storage period of the undo log. Compared with SQL query flashback, this capability provides faster and more accurate row-level restoration. However, undo logs are usually not stored as long as global binlogs, so the period that can be restored is shorter than that of SQL flashback.

PolarDB-X 2.0 provides the global binlog capability to address data silos. This capability provides the downstream ecosystem with the same incremental log consumption experience as MySQL binlogs. Furthermore, it offers data restoration capabilities at various levels, including instance, table, SQL, and row levels, to address data corruption issues. These capabilities encompass consistent backup and restoration, table recycle bin, SQL flashback, and flashback query.

Safeguarding Your Data with PolarDB-X: Backup and Restoration

ApsaraDB - June 13, 2024

ApsaraDB - June 13, 2024

ApsaraDB - October 17, 2024

ApsaraDB - October 24, 2022

ApsaraDB - September 11, 2024

ApsaraDB - November 1, 2022

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More ApsaraDB RDS for SQL Server

ApsaraDB RDS for SQL Server

An on-demand database hosting service for SQL Server with automated monitoring, backup and disaster recovery capabilities

Learn MoreMore Posts by ApsaraDB