By Digoal.

In some application scenarios, multi-field matching may be required. For example, consider a scenario in which a table includes several fields like artist, track, album, composer, and Lyrics.

In this kind of scenario, users may want to use the word-breaking function to find matches for singers and performers like the Hong Kong singer Andy Lau or Canadian personality Justin Bieber, for example, and have it return TRUE if any field matches. The traditional practice is to establish a word-breaking index on each field and perform the matching one by one. However, this leads to lengthy SQL statements and requires lots of OR operations. Can a better method be used in this scenario?

Well, actually, the answer's yes. We can convert this whole record into one string and create a word-breaking index on this string. At this point, the question is what is the format of the output, and if this will impact the result of the word-breaking.

Now, create a table and inset the value.

create table t1(id int, c1 text, c2 text, c3 text);

insert into t1 values (1 , 'speed-fast e5a1cbb8' , 'speed-fast e5a1cbb8' , 'abc');

postgres=# select t1::text from t1;

t1

-----------------------------------

(1,speed-fast e5a1cbb8,speed-fast e5a1cbb8,abc)

(1 row)

postgres=# \df+ record_out

List of functions

Schema | Name | Result data type | Argument data types | Type | Security | Volatility | Owner | Language | Source code | Description

------------+------------+------------------+---------------------+--------+----------+------------+----------+----------+-------------+-------------

pg_catalog | record_out | cstring | record | normal | invoker | stable | postgres | internal | record_out | I/O

(1 row) Now consider the source code of the record output src/backend/utils/adt/rowtypes.c.

/*

* record_out - output routine for any composite type.

*/

Datum

record_out(PG_FUNCTION_ARGS)

{

...

/* And build the result string */

initStringInfo(&buf);

appendStringInfoChar(&buf, '('); // Use parentheses (()) to enclose the field.

for (i = 0; i < ncolumns; i++)

{

...

if (needComma)

appendStringInfoChar(&buf, ','); // Use a comma (,) between fields.

needComma = true;

...

/* Detect whether we need double quotes for this value */

nq = (value[0] == '\0'); /* force quotes for empty string */

for (tmp = value; *tmp; tmp++)

{

char ch = *tmp;

if (ch == '"' || ch == '\\' ||

ch == '(' || ch == ')' || ch == ',' ||

isspace((unsigned char) ch))

{

nq = true;

break;

}

}

/* And emit the string */

if (nq)

appendStringInfoCharMacro(&buf, '"'); // For some types, use the double quotation markers ("")

for (tmp = value; *tmp; tmp++)

{

char ch = *tmp;

if (ch == '"' || ch == '\\')

appendStringInfoCharMacro(&buf, ch);

appendStringInfoCharMacro(&buf, ch);

}

if (nq)

appendStringInfoCharMacro(&buf, '"');

}

appendStringInfoChar(&buf, ')');

... So it may seem that no problems should occur. Only a comma and double quotes are added. These are all characters and scws should be able to process them.

In reality, however, some problems do exist in this scenario. Consider the following example. The two words only differ in the ending parts. If an additional comma is added, the result is like this.

postgres=# select * from ts_debug('scwscfg', 'speed-fast e5a1cbb8,');

alias | description | token | dictionaries | dictionary | lexemes

-------+-------------+-------+--------------+------------+---------

k | head | speed | {} | |

a | adjective | fast | {simple} | simple | {fast}

e | exclamation | e5a | {simple} | simple | {e5a}

e | exclamation | 1cbb | {simple} | simple | {1cbb}

e | exclamation | 8 | {simple} | simple | {8}

u | auxiliary | , | {} | |

(6 rows)

postgres=# select * from ts_debug('scwscfg', 'speed-fast e5a1cbb8');

alias | description | token | dictionaries | dictionary | lexemes

-------+-------------+----------+--------------+------------+------------

k | head | speed | {} | |

a | adjective | fast | {simple} | simple | {fast}

e | exclamation | e5a1cbb8 | {simple} | simple | {e5a1cbb8}

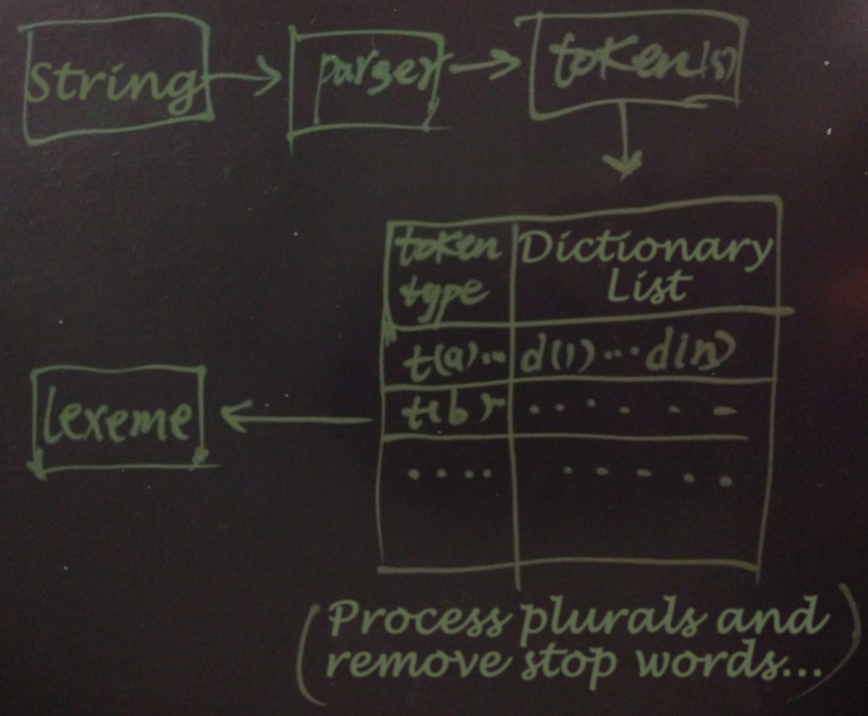

(3 rows) The following diagram shows the word-breaking steps in PostgreSQL.

First, use parse to split a string into multiple tokens and specify the type of each token.

Therefore, when creating the text search configuration, you need to specify a parser, which is also the core part of the word-breaking process.

Command: CREATE TEXT SEARCH CONFIGURATION

Description: define a new text search configuration

Syntax:

CREATE TEXT SEARCH CONFIGURATION name (

PARSER = parser_name |

COPY = source_config

) At the same time, token types supported by the parser must also be specified when you create the parser.

Command: CREATE TEXT SEARCH PARSER

Description: define a new text search parser

Syntax:

CREATE TEXT SEARCH PARSER name (

START = start_function ,

GETTOKEN = gettoken_function ,

END = end_function ,

LEXTYPES = lextypes_function

[, HEADLINE = headline_function ]

) View parsers that have been created.

postgres=# select * from pg_ts_parser ;

prsname | prsnamespace | prsstart | prstoken | prsend | prsheadline | prslextype

---------+--------------+--------------+------------------+------------+---------------+----------------

default | 11 | prsd_start | prsd_nexttoken | prsd_end | prsd_headline | prsd_lextype

scws | 2200 | pgscws_start | pgscws_getlexeme | pgscws_end | prsd_headline | pgscws_lextype

jieba | 2200 | jieba_start | jieba_gettoken | jieba_end | prsd_headline | jieba_lextype

(3 rows) View the token types that a parser supports.

postgres=# select * from ts_token_type('scws');

tokid | alias | description

-------+-------+---------------

97 | a | adjective

98 | b | difference

99 | c | conjunction

100 | d | adverb

101 | e | exclamation

102 | f | position

103 | g | word root

104 | h | head

105 | i | idiom

106 | j | abbreviation

107 | k | head

108 | l | temp

109 | m | numeral

110 | n | noun

111 | o | onomatopoeia

112 | p | prepositional

113 | q | quantity

114 | r | pronoun

115 | s | space

116 | t | time

117 | u | auxiliary

118 | v | verb

119 | w | punctuation

120 | x | unknown

121 | y | modal

122 | z | status

(26 rows) Each token type corresponds to one or more dictionaries for matchmaking.

ALTER TEXT SEARCH CONFIGURATION name

ADD MAPPING FOR token_type [, ... ] WITH dictionary_name [, ... ] View the map information of configured token types and dictionaries.

postgres=# select * from pg_ts_config_map ; Next, for the first matching dictionary, convert the token output into a lexeme.

(Will remove stop words), delete plural forms, so on.The following functions can be used to debug word-breaking problems.

ts_token_type(parser_name text, OUT tokid integer, OUT alias text, OUT description text): Returns token types supported by the parser.ts_parse(parser_name text, txt text, OUT tokid integer, OUT token text): Specifies a parser and returns the string as a token.ts_debug(config regconfig, document text, OUT alias text, OUT description text, OUT token text, OUT dictionaries regdictionary[], OUT dictionary regdictionary, OUT lexemes text[]): Specifies the word-breaking configuration and returns the string as a token and additional information.In the preceding case, when the scws parser is used, the token output changes.

postgres=# select * from pg_ts_parser ;

prsname | prsnamespace | prsstart | prstoken | prsend | prsheadline | prslextype

---------+--------------+--------------+------------------+------------+---------------+----------------

default | 11 | prsd_start | prsd_nexttoken | prsd_end | prsd_headline | prsd_lextype

scws | 2200 | pgscws_start | pgscws_getlexeme | pgscws_end | prsd_headline | pgscws_lextype

jieba | 2200 | jieba_start | jieba_gettoken | jieba_end | prsd_headline | jieba_lextype

(3 rows)

postgres=# select * from ts_parse('scws', '子远e5a1cbb8,');

tokid | token

-------+-------

107 | speed

97 | fast

101 | e5a

101 | 1cbb

101 | 8

117 | ,

(6 rows) Without modifying the scws code, we can first replace the comma with a space, because scws will ignore spaces.

postgres=# select replace(t1::text, ',', ' ') from t1;

replace

-----------------------------------

(1 speed-fast e5a1cbb8 speed-fast e5a1cbb8 abc)

(1 row)

postgres=# select to_tsvector('scwscfg', replace(t1::text, ',', ' ')) from t1;

to_tsvector

---------------------------------------

'1':1 'abc':6 'e5a1cbb8':3,5 'fast':2,4

(1 row) postgres=# create or replace function rec_to_text(anyelement) returns text as

$$

select $1::text;

$$

language sql strict immutable;

CREATE FUNCTION

postgres=# create index idx on t1 using gin (to_tsvector('scwscfg', replace(rec_to_text(t1), ',', ' ')));

CREATE INDEX

SQL Writing

postgres=# explain verbose select * from t1 where to_tsvector('scwscfg', replace(rec_to_text(t1), ',', ' ')) @@ to_tsquery('scwscfg', 'speed-fast e5a1cbb8');

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------------------------

Bitmap Heap Scan on public.t1 (cost=4.50..6.52 rows=1 width=100)

Output: c1, c2, c3, c4

Recheck Cond: (to_tsvector('scwscfg'::regconfig, replace(rec_to_text(t1.*), ','::text, ' '::text)) @@ '''fast'' & ''e5a1cbb8'''::tsquery)

-> Bitmap Index Scan on idx (cost=0.00..4.50 rows=1 width=0)

Index Cond: (to_tsvector('scwscfg'::regconfig, replace(rec_to_text(t1.*), ','::text, ' '::text)) @@ '''fast'' & ''e5a1cbb8'''::tsquery)

(5 rows) postgres=# create extension pg_scws;

CREATE EXTENSION

Time: 6.544 ms

postgres=# alter function to_tsvector(regconfig,text) volatile;

ALTER FUNCTION

postgres=# select to_tsvector('scwscfg','How can I speed up the loading speeds of PostgreSQL?');

to_tsvector

-----------------------------------------------------------------------------------------

'postgresql':4 'How':2 'How can I':1 'word-breaking':6 'speed up':3 'loading':7 'PostgreSQL':5 'speeds':8

(1 row)

Time: 0.855 ms

postgres=# set zhparser.dict_in_memory = t;

SET

Time: 0.339 ms

postgres=# explain (buffers,timing,costs,verbose,analyze) select to_tsvector('scwscfg','How can I speed up the loading speeds of PostgreSQL?') from generate_series(1,100000);

QUERY PLAN

------------------------------------------------------------------------------------------------------------

Function Scan on pg_catalog.generate_series (cost=0.00..260.00 rows=1000 width=0) (actual time=11.431..17971.197 rows=100000 loops=1)

Output: to_tsvector('scwscfg'::regconfig, 'How can I speed up the loading speeds of PostgreSQL?'::text)

Function Call: generate_series(1, 100000)

Buffers: temp read=172 written=171

Planning time: 0.042 ms

Execution time: 18000.344 ms

(6 rows)

Time: 18000.917 ms

postgres=# select 8*100000/18.000344;

?column?

--------------------

44443.595077960732

(1 row) For the CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 32

On-line CPU(s) list: 0-31

Thread(s) per core: 1

Core(s) per socket: 32

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 63

Model name: Intel(R) Xeon(R) CPU E5-2680 v3 @ 2.50GHz

Stepping: 2

CPU MHz: 2494.224

BogoMIPS: 4988.44

Hypervisor vendor: KVM

Virtualization type: full

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 30720K

NUMA node0 CPU(s): 0-31 Location Matching Filtering Syntax for PostgreSQL Full-Text Searches

digoal - December 11, 2019

digoal - December 11, 2019

digoal - October 22, 2018

digoal - February 3, 2020

Alibaba Clouder - January 10, 2018

ApsaraDB - November 18, 2025

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by digoal