By Zehuan Shi

As businesses evolve and their value grows, stability has become the most important cornerstone for enterprises building digital systems, and high availability is the first and foremost concern when designing a business architecture. Cloud products are built on infrastructure that spans multiple regions and zones, so helping customers build reliable, highly available architectures that solve stability issues is an important capability for cloud products.

A complete high-availability architecture must address two aspects. First, the deployed infrastructure must avoid single points of failure: a high-availability architecture should be deployed in multiple geographical locations, which requires available resources in those locations, and the resource scheduling system must be able to schedule resources across those locations correctly. Second, the architecture needs comprehensive service protection capabilities that keep critical services from being overwhelmed in scenarios with significant impact on services, such as burst traffic and malicious traffic. In the cloud-native context, the standard approach is to combine Kubernetes with a service mesh such as Alibaba Cloud Service Mesh (ASM). Kubernetes handles resource scheduling and management, while ASM manages the more complex concerns of security, traffic, and observability. This article explores how to use ASM to build a highly available business system.

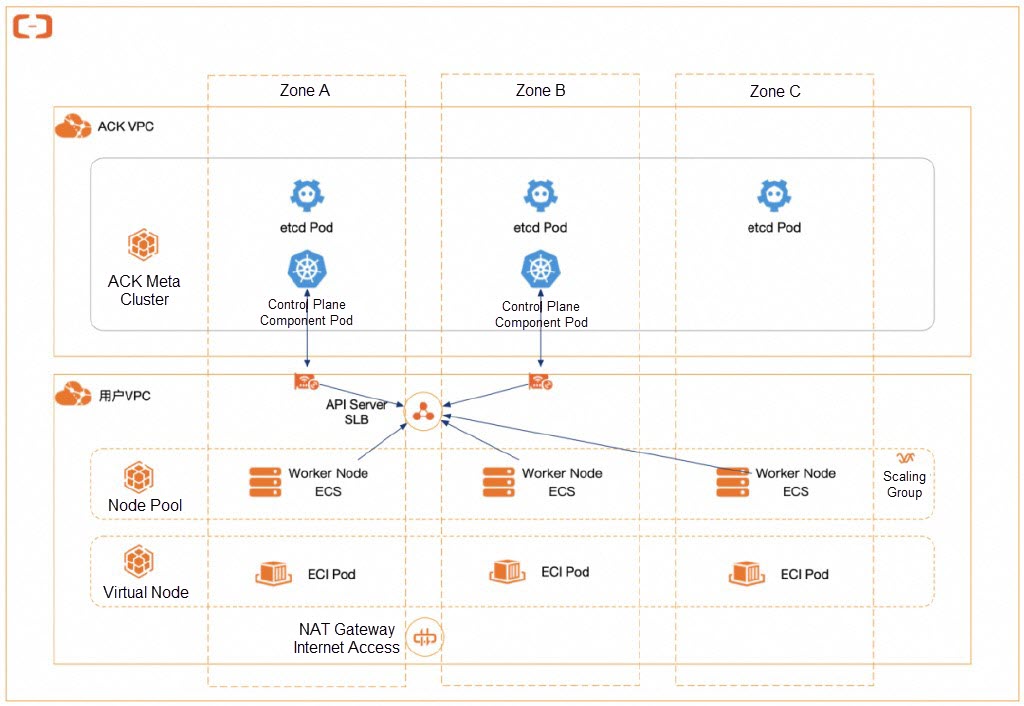

In a single Kubernetes cluster, you can use the multi-zone feature of the cloud for high-availability deployment. Each managed component in Container Service for Kubernetes (ACK) clusters runs as multiple replicated pods that are evenly spread across multiple zones, so the cluster keeps functioning when a zone or node goes down. Worker nodes and elastic container instances in the cluster are also distributed across zones. If a zone-level failure occurs, such as a power outage or network disconnection in a data center due to uncontrollable factors, the healthy zones can still provide services.

In addition to the distributed deployment of applications and infrastructure, which provides high availability through physical isolation, software-level protection is also essential. Circuit breaking and throttling are widely recognized application protection methods. Applying circuit breaking and throttling can significantly improve the overall availability of applications, control the blast radius of local problems, and effectively prevent cascading failures.

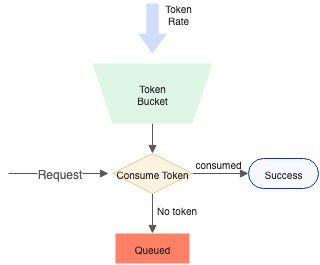

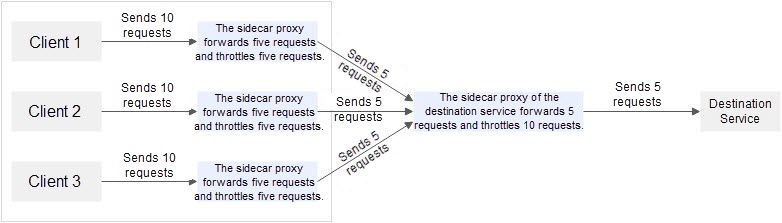

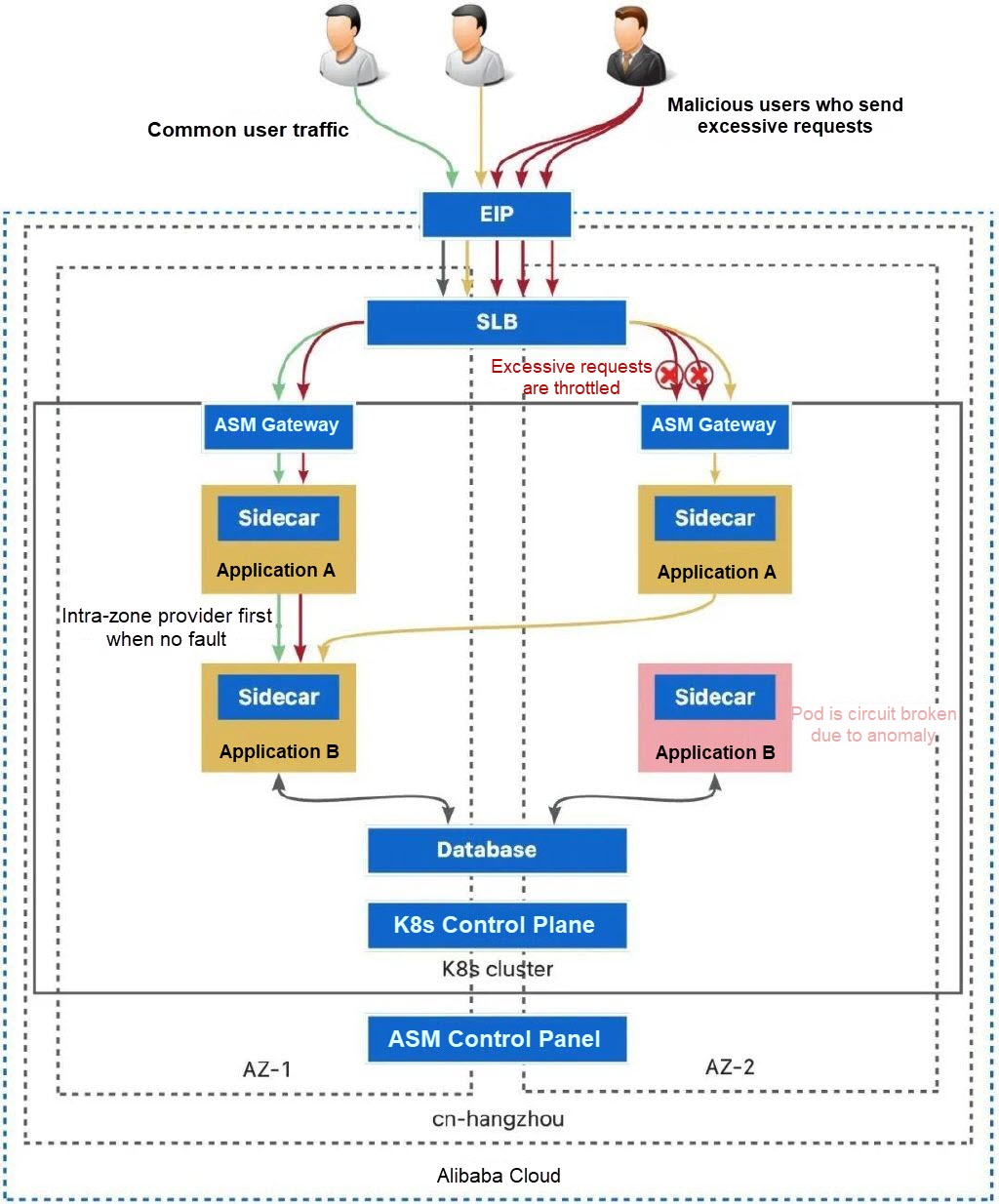

Throttling protects applications on the server side. It prevents the server from being overwhelmed by excessive traffic and automatically degrades the service when the load exceeds its capacity. ASM supports local and global throttling, as well as higher-level custom throttling rules, such as per-user QPS throttling.
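A common way to implement throttling is a token bucket (Envoy's local rate limit filter, for example, is configured as one). The following Python sketch illustrates per-user QPS throttling with a token bucket; it is not ASM's implementation, and the class names `TokenBucket` and `PerUserLimiter` and their `rate`, `burst`, and `qps` parameters are hypothetical. The clock is injectable so the behavior can be checked deterministically.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Refills at `rate` tokens per second and holds at most `burst` tokens."""

    def __init__(self, rate: float, burst: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self.tokens = burst  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # throttled: the caller would reject, e.g. with HTTP 429


class PerUserLimiter:
    """Hypothetical per-user QPS limiter: one independent bucket per user ID."""

    def __init__(self, qps: float, burst: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.qps = qps
        self.burst = burst
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, user_id: str) -> bool:
        if user_id not in self.buckets:
            self.buckets[user_id] = TokenBucket(self.qps, self.burst, self.clock)
        return self.buckets[user_id].allow()


# Usage with a fake clock: 2 QPS per user, burst of 2.
now = [0.0]
limiter = PerUserLimiter(qps=2, burst=2, clock=lambda: now[0])
print([limiter.allow("alice") for _ in range(3)])  # [True, True, False]
print(limiter.allow("bob"))                        # True: bob has his own bucket
now[0] = 0.5                                       # half a second later
print(limiter.allow("alice"))                      # True: one token refilled
```

A bucket that starts full lets short bursts through while holding the long-run rate to `qps` requests per second, and keeping one bucket per user stops a single heavy caller from consuming everyone's quota. A global limiter would keep its counters in a shared service rather than in process memory.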

Circuit breaking is applied on the client side: when the upstream (server) fails or is overloaded, abnormal endpoints are temporarily cut off as soon as possible to minimize the impact on overall system performance. In traditional microservice applications, some development frameworks provide circuit breaking. Compared with that approach, the circuit breaking feature of ASM does not need to be integrated into the application code of each service, and it protects the target service from excessive requests without interrupting the business.
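In Istio-based meshes, client-side ejection of abnormal endpoints is expressed as outlier detection, which ejects an endpoint after consecutive errors (Istio's `consecutive5xxErrors` and `baseEjectionTime` fields). The Python sketch below shows the mechanism in simplified form, assuming a fixed ejection time: Envoy actually lengthens the ejection each time a host is ejected again and caps ejections with `maxEjectionPercent`, which this sketch does not model. The class `OutlierEjector` and its parameters are hypothetical.

```python
import random
import time
from typing import Callable, Dict, List, Optional


class OutlierEjector:
    """Simplified client-side circuit breaker: eject an endpoint after
    `max_consecutive_errors` consecutive failures for `ejection_seconds`."""

    def __init__(self, endpoints: List[str], max_consecutive_errors: int = 5,
                 ejection_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.endpoints = list(endpoints)
        self.max_errors = max_consecutive_errors
        self.ejection_seconds = ejection_seconds
        self.clock = clock
        self.errors: Dict[str, int] = {ep: 0 for ep in self.endpoints}
        self.ejected_until: Dict[str, float] = {}

    def healthy(self) -> List[str]:
        """Endpoints that are not currently ejected."""
        now = self.clock()
        return [ep for ep in self.endpoints
                if self.ejected_until.get(ep, float("-inf")) <= now]

    def pick(self) -> Optional[str]:
        """Choose a random non-ejected endpoint, or None if all are ejected."""
        candidates = self.healthy()
        return random.choice(candidates) if candidates else None

    def report(self, endpoint: str, success: bool) -> None:
        """Record the outcome of a request sent to `endpoint`."""
        if success:
            self.errors[endpoint] = 0
            return
        self.errors[endpoint] += 1
        if self.errors[endpoint] >= self.max_errors:
            # Trip the breaker for this endpoint only; the others keep serving.
            self.ejected_until[endpoint] = self.clock() + self.ejection_seconds
            self.errors[endpoint] = 0


# Usage with a fake clock and hypothetical pod IPs.
now = [0.0]
ejector = OutlierEjector(["10.0.0.1", "10.0.0.2"], max_consecutive_errors=3,
                         ejection_seconds=30, clock=lambda: now[0])
for _ in range(3):
    ejector.report("10.0.0.2", success=False)
print(ejector.healthy())  # ['10.0.0.1']
now[0] = 31.0
print(ejector.healthy())  # ['10.0.0.1', '10.0.0.2']: ejection expired
```

Because the breaker trips per endpoint, one failing pod is isolated quickly while requests keep flowing to the healthy ones, which is what limits the blast radius.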

Compared with open-source Istio, ASM offers more powerful circuit breaking and throttling that support richer conditions (for more information, see the section on circuit breaking and throttling in the ASM documentation), helping applications achieve the best overall performance.

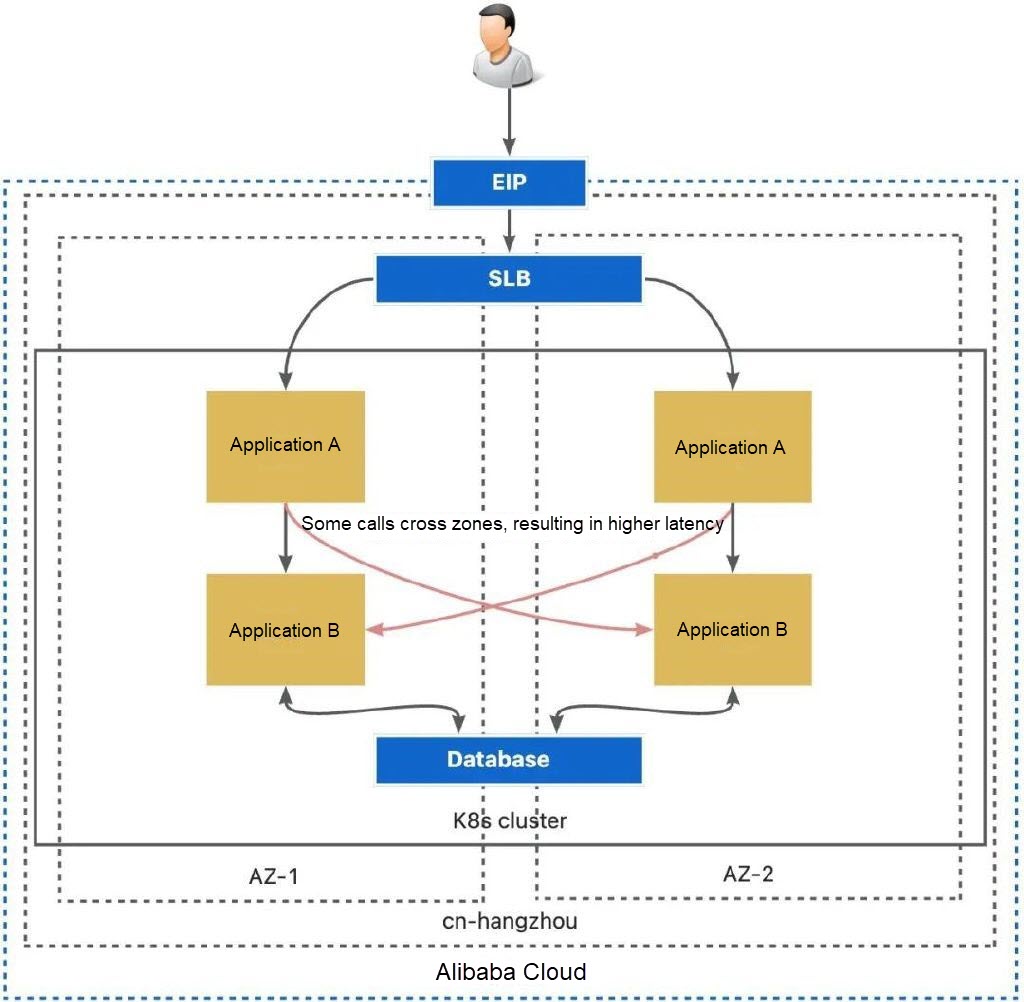

In multi-zone deployment scenarios, workloads are distributed across multiple zones, so load balancing through a Kubernetes Service distributes traffic evenly to pods in all zones.

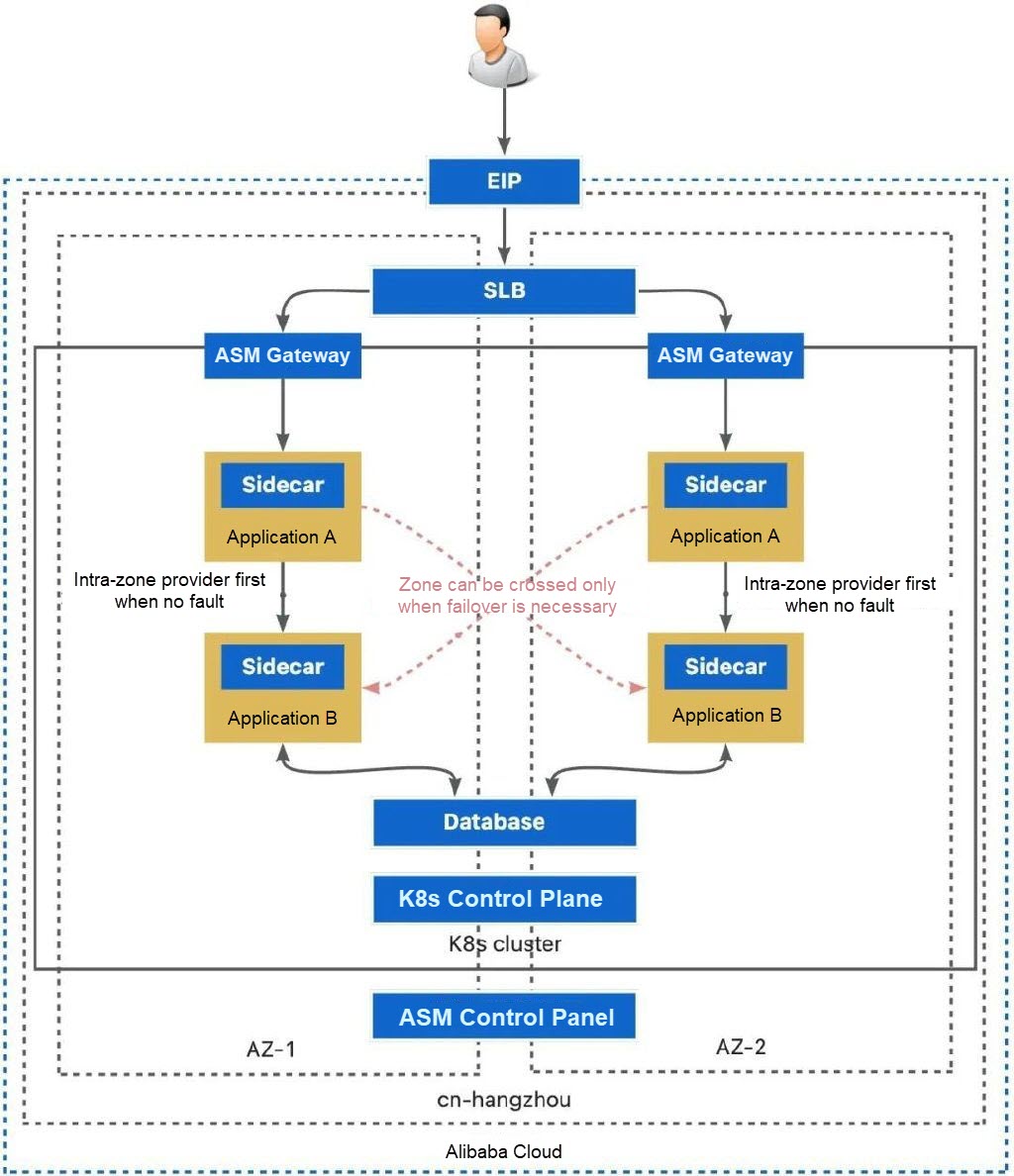

Cross-zone calls inevitably increase service latency, so ideally calls should stay within the same zone when no failure occurs. With the location priority capability of ASM, you can keep each call within the same zone whenever possible. Traffic fails over to other zones only when an application in the call chain fails.
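Location priority can be pictured as tiered endpoint selection: healthy endpoints in the caller's zone first, then other zones in the same region, then other regions. The following Python sketch is simplified and uses hypothetical names (`Endpoint`, `pick_endpoint`); the zone IDs and IPs are sample values only. Envoy, which the Istio-based data plane uses, shifts traffic between priority tiers gradually according to the healthy percentage of each tier, whereas this sketch switches a tier off only when it has no healthy endpoint left.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Endpoint:
    address: str
    region: str
    zone: str
    healthy: bool = True


def priority(ep: Endpoint, region: str, zone: str) -> int:
    """0 = caller's zone, 1 = same region but another zone, 2 = another region."""
    if ep.region == region and ep.zone == zone:
        return 0
    if ep.region == region:
        return 1
    return 2


def pick_endpoint(endpoints: List[Endpoint], region: str, zone: str) -> Optional[Endpoint]:
    """Pick a healthy endpoint from the closest tier that has one."""
    healthy = [ep for ep in endpoints if ep.healthy]
    if not healthy:
        return None
    best = min(priority(ep, region, zone) for ep in healthy)
    # Only the closest healthy tier receives traffic; farther tiers are failover.
    tier = [ep for ep in healthy if priority(ep, region, zone) == best]
    return random.choice(tier)


# Usage: a caller in zone cn-hangzhou-h (sample zone IDs and IPs).
eps = [
    Endpoint("10.0.1.10", "cn-hangzhou", "cn-hangzhou-h"),
    Endpoint("10.0.2.10", "cn-hangzhou", "cn-hangzhou-i"),
]
print(pick_endpoint(eps, "cn-hangzhou", "cn-hangzhou-h").address)  # 10.0.1.10
eps[0].healthy = False  # the same-zone endpoint fails
print(pick_endpoint(eps, "cn-hangzhou", "cn-hangzhou-h").address)  # 10.0.2.10
```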

By default, the ASM managed control plane is distributed across multiple zones, so it can keep serving the data plane when a single zone fails. In addition, the ASM data plane caches its configuration: even if the control plane is unavailable in all zones for a period of time, the data plane can keep working with the cached configuration.

The advantage of disaster recovery in a single multi-zone cluster is its simple architecture and relatively low O&M costs. However, a single cluster cannot handle regional failures, such as the failure of an entire region due to uncontrollable factors or natural disasters. To meet higher availability requirements, geographically isolated deployment is therefore unavoidable, as discussed in the rest of this article.

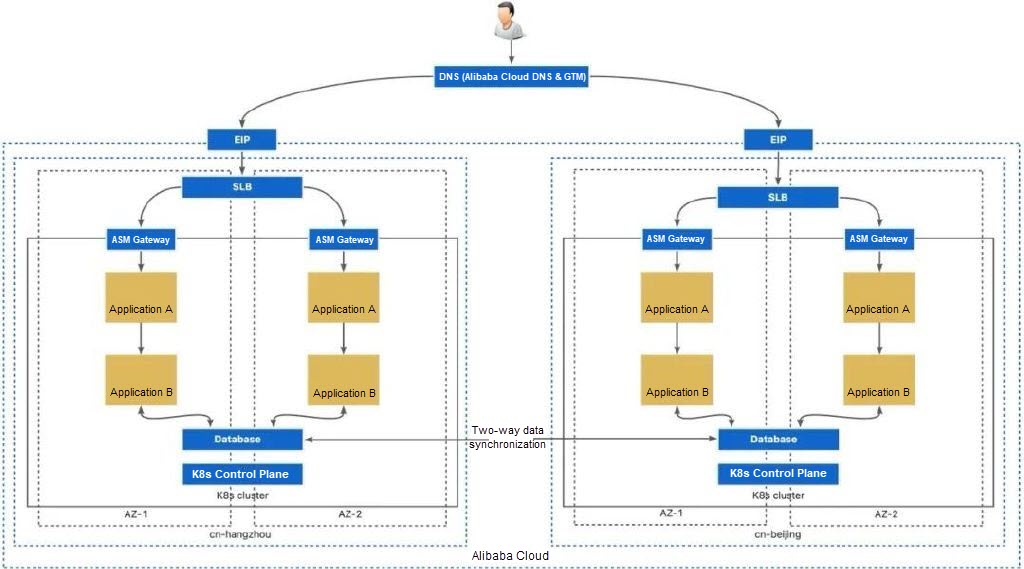

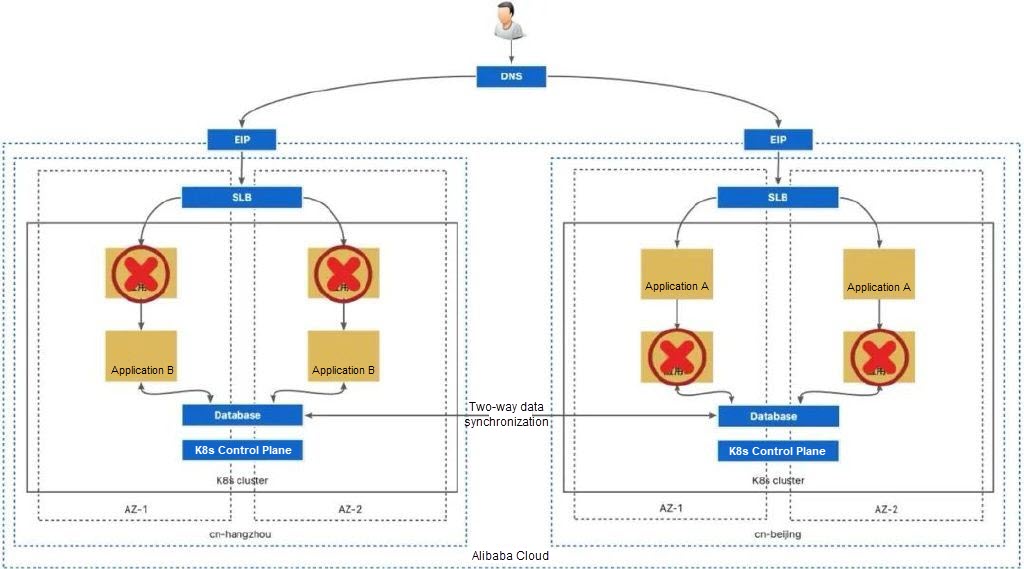

To deploy applications in multiple regions, you must use multiple clusters. In a multi-cluster deployment, each cluster has its own ingress, so the single ingress is split into two. Under normal (non-failure) conditions, you can use the Domain Name System (DNS) to distribute traffic to the two cluster ingresses based on geographical location or other policies. In this scenario, you can use Alibaba Cloud Intelligent DNS or Alibaba Cloud Global Traffic Manager (GTM) to switch traffic at the DNS level, and use GTM with health checks to automatically remove unhealthy ingresses. You should still use ASM gateways as the cluster ingresses so that they provide more advanced circuit breaking and throttling capabilities to protect the businesses in each cluster.
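Health-check-based DNS switching, as done with GTM, can be modeled as weighted resolution over only the ingresses that currently pass their health checks. This Python sketch is a conceptual model, not the GTM API; the `Ingress` type, the `resolve` function, the weights, the `cn-shanghai` ingress, and the documentation-range IP addresses are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Ingress:
    name: str
    ip: str
    weight: int
    healthy: bool = True  # result of the most recent health check


def resolve(ingresses: List[Ingress], rng: random.Random) -> Optional[str]:
    """Answer a DNS query with one ingress IP, weighted among healthy ingresses."""
    pool = [ing for ing in ingresses if ing.healthy and ing.weight > 0]
    if not pool:
        return None
    chosen = rng.choices(pool, weights=[ing.weight for ing in pool], k=1)[0]
    return chosen.ip


# Usage: two cluster ingresses with a 50/50 split (documentation-range IPs).
rng = random.Random(0)
ingresses = [
    Ingress("cn-hangzhou", "203.0.113.10", weight=50),
    Ingress("cn-shanghai", "203.0.113.20", weight=50),
]
ingresses[0].healthy = False  # the health check for cn-hangzhou fails
print({resolve(ingresses, rng) for _ in range(100)})  # {'203.0.113.20'}
```

DNS answers are cached by resolvers and clients for their TTL, so in practice traffic moves away from a failed ingress only after the cached records expire.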

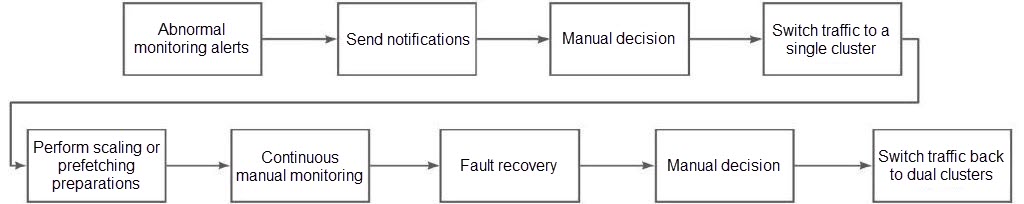

In the current solution, traffic is shifted to healthy clusters mainly by switching ingresses. Although this approach is simple and effective, some scenarios remain tricky:

1. A global switchover involves more than just switching traffic.

A global switchover moves all traffic, which means the target cluster must instantly handle a much higher load than usual. This poses a major challenge for scaling speed and cache reconstruction. If these tasks are not handled well, the extra load can overwhelm an otherwise healthy data center. For these reasons, data center switchovers often require manual operation and decision-making.

2. The solution is unable to cope with complex failures.

For example, if application A fails in the cn-hangzhou cluster and application B fails in the other cluster, switching ingress traffic cannot avoid the failure, because neither cluster has all of its applications healthy.
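A quick calculation shows why a global switchover is risky. Assuming traffic is split evenly across N clusters that each run at utilization u, losing one cluster pushes each survivor to u * N / (N - 1). The function name and the 60% utilization figure below are hypothetical, and the calculation ignores the extra cost of cold caches.

```python
def utilization_after_failover(clusters: int, utilization: float, failed: int = 1) -> float:
    """Per-cluster utilization after `failed` clusters go down, assuming traffic
    was split evenly and is redistributed evenly across the survivors."""
    if failed >= clusters:
        raise ValueError("at least one cluster must survive")
    return utilization * clusters / (clusters - failed)


# Hypothetical numbers: every cluster normally runs at 60% utilization.
for n in (2, 3):
    print(n, f"{utilization_after_failover(n, 0.6):.0%}")
# 2 120%
# 3 90%
```

With two clusters, the survivor would need 120% of its capacity the moment traffic moves, which is why a switchover can take down a data center that had no fault of its own.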

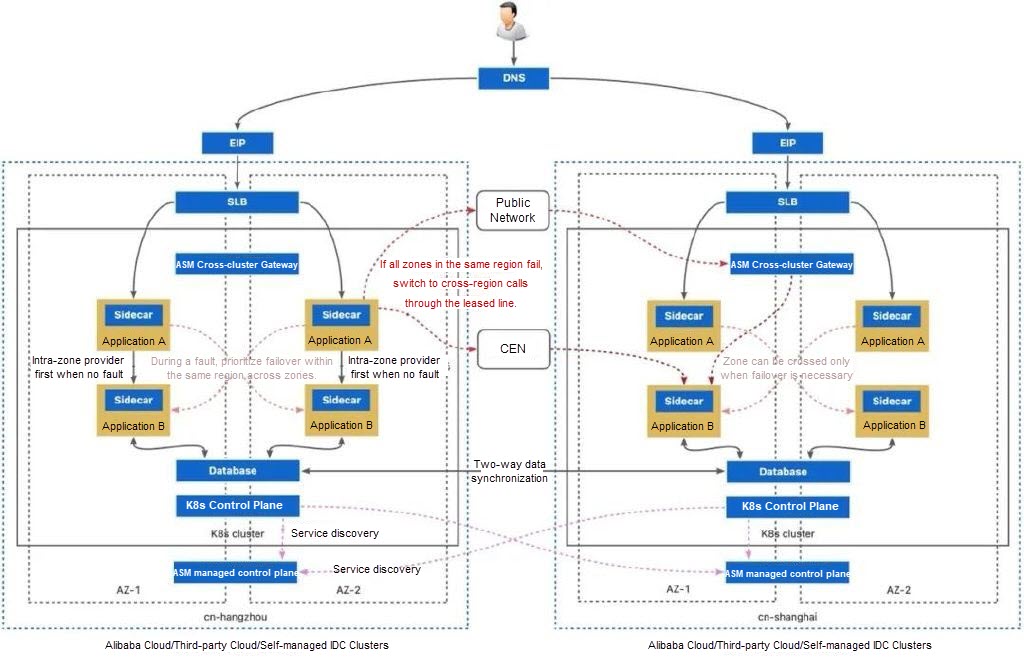

Then, in the multi-cluster scenario, is there any method other than a global traffic switchover? The answer is to use ASM for non-global failover. ASM provides a variety of multi-cluster solutions, among which the multi-instance mutual service discovery solution is designed for customers with the highest availability requirements. By sharing service discovery information among clusters, the clusters become fully connected. This allows failover within seconds when minor faults occur (at the service, node, or zone level). Even when faults occur on both sides, an application remains globally available as long as it is healthy in the cluster on at least one side.
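The difference between ingress-level switchover and service-level failover can be modeled with the complex failure described earlier: application A is down in cn-hangzhou and application B is down in the other cluster. In this hypothetical Python sketch, `other-region` is a placeholder cluster name and the health table is invented for illustration; it models only the routing decision, not how ASM actually shares service discovery data.

```python
from typing import Dict, Optional

Health = Dict[str, Dict[str, bool]]

# Hypothetical health table for the complex failure: application A is down in
# cn-hangzhou, and application B is down in the other cluster.
HEALTH: Health = {
    "cn-hangzhou": {"A": False, "B": True},
    "other-region": {"A": True, "B": False},
}


def cluster_level_target(health: Health) -> Optional[str]:
    """Ingress switching: a cluster qualifies only if every application is healthy."""
    for cluster, apps in health.items():
        if all(apps.values()):
            return cluster
    return None


def service_level_target(health: Health, app: str, local: str) -> Optional[str]:
    """Mutual service discovery: prefer the caller's cluster, otherwise fail over
    to any cluster where this particular application is healthy."""
    if health[local].get(app, False):
        return local
    for cluster, apps in health.items():
        if apps.get(app, False):
            return cluster
    return None


print(cluster_level_target(HEALTH))                       # None
print(service_level_target(HEALTH, "A", "cn-hangzhou"))   # other-region
print(service_level_target(HEALTH, "B", "other-region"))  # cn-hangzhou
```

A request entering cn-hangzhou can therefore reach a healthy copy of A in the other cluster, and that copy's call to B can fail over back to cn-hangzhou, so the call chain succeeds even though neither cluster is fully healthy.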

ASM can manage multiple clusters. In this scenario, you can create an ASM instance in the region where each cluster is located (for clusters on third-party clouds, choose the closest region) and add the cluster from the other region to each ASM instance in service-only mode. The ASM instances on both sides can then discover all services in the other region. As a result, a multi-cluster deployment gets the same automatic failover capability as a single cluster, as well as fault tolerance in complex failure scenarios.

To enable calls between clusters, pods in cluster A must be routable to pods in cluster B. In multi-region scenarios, you can use Cloud Enterprise Network (CEN) to connect the physical networks. In complex network scenarios, such as connecting Alibaba Cloud clusters with non-Alibaba Cloud clusters, non-Alibaba Cloud clusters with each other, or clusters on the cloud with clusters in a data center, the physical networks cannot be connected. For these scenarios, ASM provides a way to establish connectivity over the public Internet: by using the ASM cross-cluster gateway, you can establish an mTLS-secured channel between clusters over the public Internet for the necessary cross-cluster communication.

To achieve high availability that can withstand regional failures, multi-cluster deployment is necessary. Multiple clusters introduce multiple ingresses, and switching traffic only at the ingress level is not enough in some scenarios. Using ASM to connect multiple clusters significantly improves disaster recovery capabilities in multi-region, multi-cluster scenarios. Because ASM can manage any Kubernetes cluster, any cluster can gain these enhanced disaster recovery capabilities.
