By digoal

It is critical for businesses to maintain databases accurately. If there are a set of read-only nodes in a cluster with an HA architecture based on streaming replication, it is crucial to determine whether these read-only nodes can be in sync with the new primary node after primary/standby switchover occurs in the HA cluster.

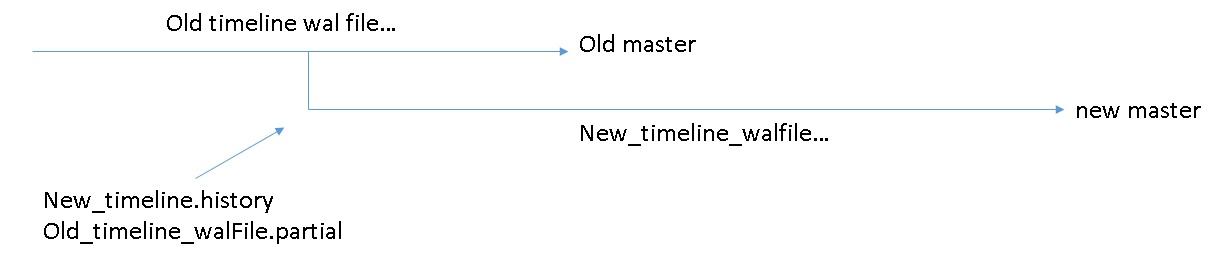

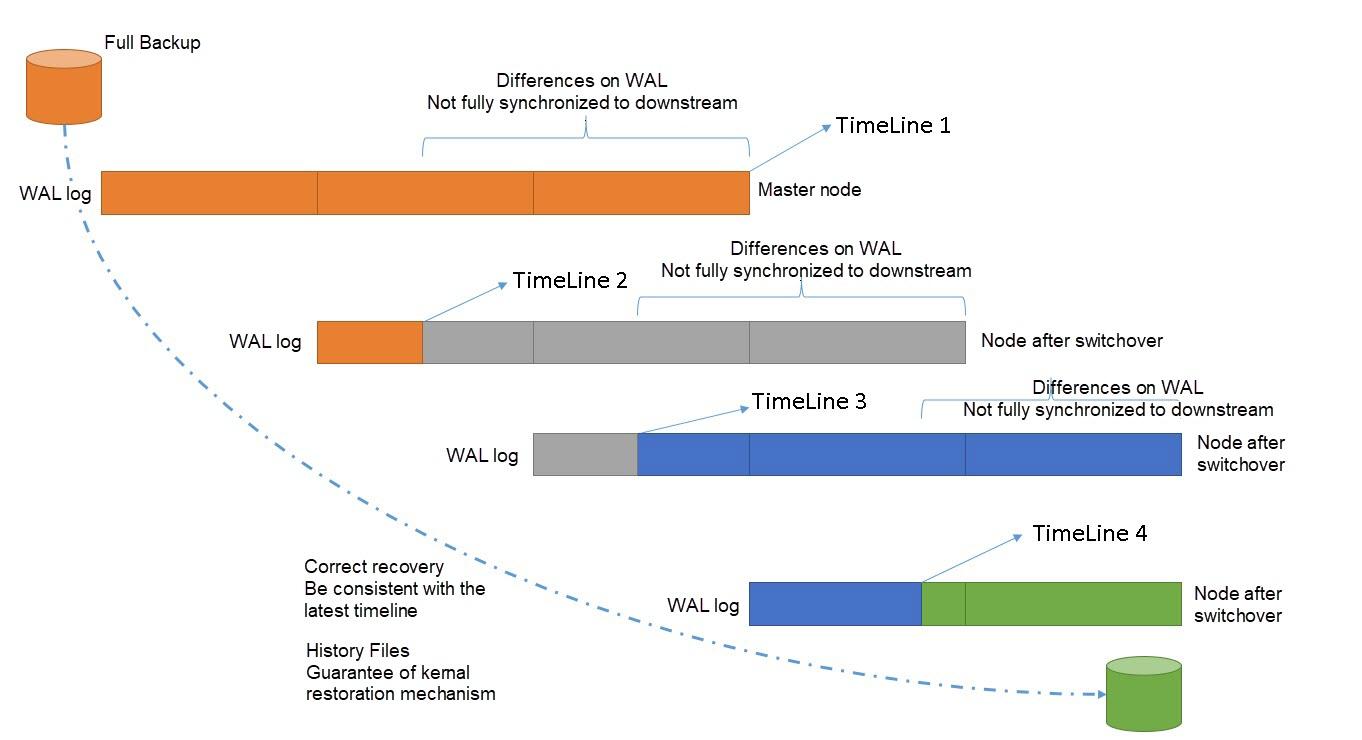

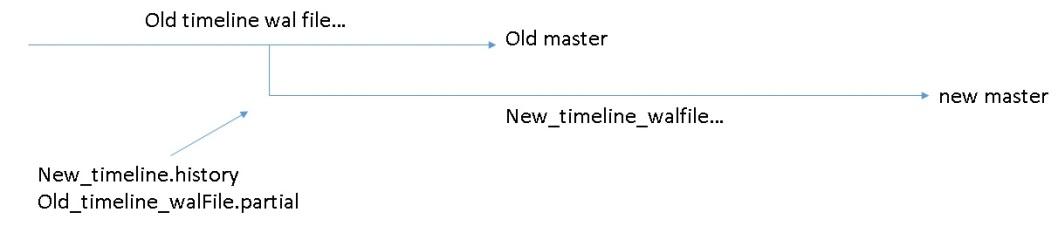

In the primary/standby database mode based on streaming replication, both the primary and the standby databases can archive WAL logs. If you use asynchronous replication, there may be some differences on WAL logs between the primary and standby databases. If the standby database becomes the primary database, a new timeline is enabled.

This implies that not all the WAL logs on the old timeline are synchronized to the new timeline, and the archiving is also different.

So, how to configure the point-in-time recovery (PITR) to keep up with the new timeline?

The solution lies in whether the history files of the new timeline are discovered first before the wrong WAL.

Examine the figure below.

For a single host, different instances use different listening ports.

1) Port 8001 for the primary database (TL = 1)

/data01/digoal/pg_root8001

2) Port 8000 for the standby database 1 (TL = 2 after switchover)

/data01/digoal/pg_root8000

3) Port 8002 for the PITR instance (use the restore_command to restore to the latest TL)

/data01/digoal/pg_root8002

4) Port 8003 for the standby database 2 that is created based on the standby database 1 after it is activated (TL = 3 after switchover)

/data01/digoal/pg_root8003

5) Archive directory

/data01/digoal/wal

.bash_profile

export PS1="$USER@`/bin/hostname -s`-> "

export PGPORT=8001

export PGDATA=/data01/digoal/pg_root$PGPORT

export LANG=en_US.utf8

export PGHOME=/home/digoal/pgsql11.1

export LD_LIBRARY_PATH=$PGHOME/lib:/lib64:/usr/lib64:/usr/local/lib64:/lib:/usr/lib:/usr/local/lib:$LD_LIBRARY_PATH

export DATE=`date +"%Y%m%d%H%M"`

export PATH=$PGHOME/bin:$PATH:.

export MANPATH=$PGHOME/share/man:$MANPATH

export PGHOST=$PGDATA

export PGUSER=postgres

export PGDATABASE=postgres

alias rm='rm -i'

alias ll='ls -lh'

unalias vi initdb -D $PGDATA -U postgres -E UTF8 --lc-collate=C --lc-ctype=en_US.utf8 postgresql.conf

本例测试场景的关键配置

listen_addresses = '0.0.0.0'

port = 8001

max_connections = 1000

unix_socket_directories = '.,/tmp'

max_worker_processes = 128

wal_level = replica

archive_mode = on # 如果配置为always 则从库也归档日志

archive_command = 'cp -n %p /data01/digoal/wal/%f' # 拷贝WAL到指定目录, 包括激活时产生的 partial,history 文件,-n 避免重复拷贝

max_wal_senders = 8

hot_standby = on

wal_receiver_status_interval = 1s pg_hba.conf

确保流复制可用

# "local" is for Unix domain socket connections only

local all all trust

# IPv4 local connections:

host all all 127.0.0.1/32 trust

# IPv6 local connections:

host all all ::1/128 trust

# Allow replication connections from localhost, by a user with the

# replication privilege.

local replication all trust

host replication all 127.0.0.1/32 trust

host replication all ::1/128 trust recovery.done

restore_command = 'cp /data01/digoal/wal/%f %p'

recovery_target_timeline = 'latest'

standby_mode = on

primary_conninfo = 'host=localhost port=8001 user=postgres' pg_basebackup -D /data01/digoal/pg_root8000 -F p -c fast -h 127.0.0.1 -p 8001 -U postgres Configure the parameter.

postgresql.conf

port = 8000 Modify the recovery.done file name.

mv recovery.done recovery.conf Start standby database 1.

pg_ctl start -D /data01/digoal/pg_root8000 Ensure that primary/standby synchronization starts normally.

查询主库

postgres=# select * from pg_stat_replication ; Primary and standby instances archive WAL logs to the same FTP directory or NAS directory, so that files can be retrieved during PITR.

pg_basebackup -D /data01/digoal/pg_root8002 -F p -c fast -h 127.0.0.1 -p 8001 -U postgres pgbench -i -s 100

pgbench -M prepared -n -r -P 1 -c 16 -j 16 -T 30 查询主库,确保主从WAL完全已同步

postgres=# select * from pg_stat_replication ; After activating the standby database 1, it becomes readable and writable and is not in sync with the primary database.

pg_ctl promote -D /data01/digoal/pg_root8000 The standby database 1 adopts the new timeline, which is TL 2.

digoal@pg11-test-> pg_controldata -D /data01/digoal/pg_root8000|grep -i timeline

Latest checkpoint's TimeLineID: 2

Latest checkpoint's PrevTimeLineID: 2

Min recovery ending loc's timeline: 0 The original primary database is still using TL 1.

digoal@pg11-test-> pg_controldata -D /data01/digoal/pg_root8001|grep -i timeline

Latest checkpoint's TimeLineID: 1

Latest checkpoint's PrevTimeLineID: 1

Min recovery ending loc's timeline: 0 Files generated during switchover:

cd /data01/digoal/pg_root8000/pg_wal

三个文件:

patial

history

新时间线WAL文件

如下:

-rw------- 1 digoal digoal 42 Jan 20 18:11 00000002.history

-rw------- 1 digoal digoal 16M Jan 20 18:11 000000010000000200000058.partial

-rw------- 1 digoal digoal 16M Jan 20 18:11 000000020000000200000058 On the primary database, there is a file 000000010000000200000058, which is complete. When the standby database 1 is activated here, writing the 000000010000000200000058 file has not finished, thus generating a 000000010000000200000058.partial file. The name of this file is different from that of the file on the master database. Meanwhile, a new timeline file 00000002.history is generated, indicating the timestamp of WAL logs from which this timeline was generated.

In addition, the WAL logs under the new timeline will copy the written content from 000000010000000200000058.partial and generate a new file. The name of the new file is based on the new timeline. The first eight characters are inline with the new timeline, which does not conflict with that of the file on the original primary database due to different timelines.

Content of timeline files:

digoal@pg11-test-> cat 00000002.history

1 2/58FE0B80 no recovery target specified The .partial file under the old timeline and the WAL file under the new timeline:

digoal@pg11-test-> md5sum 000000010000000200000058.partial

163531112a802bb152e7a57c9b06d62d 000000010000000200000058.partial

digoal@pg11-test-> md5sum 000000020000000200000058

1b4e44ffa9e3f5025ddb260a1d117f5e 000000020000000200000058 Data is still written to the original primary database. Therefore, other WAL files are generated on TL 1, which are different from those on TL 2.

digoal@pg11-test-> md5sum 000000010000000200000058

e0193410858e5f0440d1a3f9404edcd4 000000010000000200000058 Note that when activating the standby database, the WAL file and partial content (the WAL content generated on TL1) are consistent in the three files.

Standby database 1:

digoal@pg11-test-> pg_waldump 000000010000000200000058.partial|head -n 5

rmgr: Heap2 len (rec/tot): 58/ 58, tx: 0, lsn: 2/58000030, prev 2/57FFFFB8, desc: CLEAN remxid 130039429, blkref #0: rel 1663/13285/16515 blk 26035

rmgr: Heap len (rec/tot): 72/ 72, tx: 130184523, lsn: 2/58000070, prev 2/58000030, desc: HOT_UPDATE off 114 xmax 130184523 ; new off 115 xmax 130184523, blkref #0: rel 1663/13285/16516 blk 48

rmgr: Heap len (rec/tot): 74/ 74, tx: 130184538, lsn: 2/580000B8, prev 2/58000070, desc: HOT_UPDATE off 9 xmax 130184538 ; new off 88 xmax 0, blkref #0: rel 1663/13285/16515 blk 26035

rmgr: Heap len (rec/tot): 72/ 72, tx: 130184530, lsn: 2/58000108, prev 2/580000B8, desc: HOT_UPDATE off 107 xmax 130184530 ; new off 116 xmax 0, blkref #0: rel 1663/13285/16516 blk 48

rmgr: Heap len (rec/tot): 72/ 72, tx: 130184531, lsn: 2/58000150, prev 2/58000108, desc: HOT_UPDATE off 113 xmax 130184531 ; new off 117 xmax 0, blkref #0: rel 1663/13285/16516 blk 48

digoal@pg11-test-> pg_waldump 000000020000000200000058|head -n 5

rmgr: Heap2 len (rec/tot): 58/ 58, tx: 0, lsn: 2/58000030, prev 2/57FFFFB8, desc: CLEAN remxid 130039429, blkref #0: rel 1663/13285/16515 blk 26035

rmgr: Heap len (rec/tot): 72/ 72, tx: 130184523, lsn: 2/58000070, prev 2/58000030, desc: HOT_UPDATE off 114 xmax 130184523 ; new off 115 xmax 130184523, blkref #0: rel 1663/13285/16516 blk 48

rmgr: Heap len (rec/tot): 74/ 74, tx: 130184538, lsn: 2/580000B8, prev 2/58000070, desc: HOT_UPDATE off 9 xmax 130184538 ; new off 88 xmax 0, blkref #0: rel 1663/13285/16515 blk 26035

rmgr: Heap len (rec/tot): 72/ 72, tx: 130184530, lsn: 2/58000108, prev 2/580000B8, desc: HOT_UPDATE off 107 xmax 130184530 ; new off 116 xmax 0, blkref #0: rel 1663/13285/16516 blk 48

rmgr: Heap len (rec/tot): 72/ 72, tx: 130184531, lsn: 2/58000150, prev 2/58000108, desc: HOT_UPDATE off 113 xmax 130184531 ; new off 117 xmax 0, blkref #0: rel 1663/13285/16516 blk 48 Original primary database:

digoal@pg11-test-> pg_waldump 000000010000000200000058|head -n 5

rmgr: Heap2 len (rec/tot): 58/ 58, tx: 0, lsn: 2/58000030, prev 2/57FFFFB8, desc: CLEAN remxid 130039429, blkref #0: rel 1663/13285/16515 blk 26035

rmgr: Heap len (rec/tot): 72/ 72, tx: 130184523, lsn: 2/58000070, prev 2/58000030, desc: HOT_UPDATE off 114 xmax 130184523 ; new off 115 xmax 130184523, blkref #0: rel 1663/13285/16516 blk 48

rmgr: Heap len (rec/tot): 74/ 74, tx: 130184538, lsn: 2/580000B8, prev 2/58000070, desc: HOT_UPDATE off 9 xmax 130184538 ; new off 88 xmax 0, blkref #0: rel 1663/13285/16515 blk 26035

rmgr: Heap len (rec/tot): 72/ 72, tx: 130184530, lsn: 2/58000108, prev 2/580000B8, desc: HOT_UPDATE off 107 xmax 130184530 ; new off 116 xmax 0, blkref #0: rel 1663/13285/16516 blk 48

rmgr: Heap len (rec/tot): 72/ 72, tx: 130184531, lsn: 2/58000150, prev 2/58000108, desc: HOT_UPDATE off 113 xmax 130184531 ; new off 117 xmax 0, blkref #0: rel 1663/13285/16516 blk 48 Keep the original primary database on TL1 and the new primary database on TL2 to generate WAL files on both timelines.

Restore data from the backup set of TL1 until it is consistent with that on TL2.

Mechanism: if restore_command detects a history file, it first copies the partial file and starts the new timeline branch. It will adopt 000000010000000200000058.partial instead of 000000010000000200000058.

When configuring recovery.conf, primary_conninfo is not allowed to connect to the original primary database. Otherwise, the TL1 will be enabled instead of TL2 after the difference occurs. Here, the solution is to use the restore_command to enable the TL2.

The reason for this is straightforward: The entire WAL file is received in streaming mode, thus falling into the same old issue. In fact, you should use the WAL file that starts from the switchover timestamp. Part of the content in this WAL file is on TL1, and the rest is generated after switchover.

recovery.conf

restore_command = 'cp /data01/digoal/wal/%f %p'

recovery_target_timeline = 'latest' # 这一条一定要配置,才会走新时间线

standby_mode = on

# primary_conninfo = 'host=localhost port=8001 user=postgres' # 注释这一条即可,或者改成指向新的主库,但是建议不要改,直接注释最好,因为主备可能经常切换。 If it only needs to restore to the old timeline, set the recovery_target_timeline parameter.

Under what circumstances will the wrong timeline be taken?

1) Streaming recovery is adopted, and the original primary database is connected.

2) The WAL file during the switchover of the original primary database is copied to the pg_wal directory of the target set, for example, the 000000010000000200000058 file in this case.

cd /data01/digoal/pg_root8002 Configure the parameter.

postgresql.conf

port = 8002 Modify the recovery.done file name.

mv recovery.done recovery.conf Modify the content.

recovery.conf

restore_command = 'cp /data01/digoal/wal/%f %p'

recovery_target_timeline = 'latest' # 这一条一定要配置,才会走新时间线

standby_mode = on

# primary_conninfo = 'host=localhost port=8001 user=postgres' # 注释这一条即可,或者改成指向新的主库,但是建议不要改,直接注释最好,因为主备可能经常切换。 Start a PITR instance.

pg_ctl start -D /data01/digoal/pg_root8002 You can see that the PITR instance is on TL2.

Create a standby database 2.

pg_basebackup -D /data01/digoal/pg_root8003 -F p -c fast -h 127.0.0.1 -p 8000 -U postgres Configure the parameter.

postgresql.conf

port = 8003 Modify the recovery.done file name.

mv recovery.done recovery.conf

vi recovery.conf

restore_command = 'cp /data01/digoal/wal/%f %p'

recovery_target_timeline = 'latest' # 这一条一定要配置,才会走新时间线

standby_mode = on

primary_conninfo = 'host=localhost port=8000 user=postgres' Start the standby database 2.

pg_ctl start -D /data01/digoal/pg_root8003 Ensure that primary/standby synchronization starts normally.

查询主库8000

postgres=# select * from pg_stat_replication ; Perform stress testing on the new primary database (original standby database 1).

pgbench -M prepared -n -r -P 1 -c 16 -j 16 -T 120 -h 127.0.0.1 -p 8000 Ensure that the standby database of the new primary database is synchronized normally.

查询主库8000

postgres=# select * from pg_stat_replication ; Activate the standby database of the new primary database, namely standby database 2.

pg_ctl promote -D /data01/digoal/pg_root8003

时间线现在是TL3 Perform stress testing on the standby database of the new primary database.

pgbench -M prepared -n -r -P 1 -c 16 -j 16 -T 120 -h 127.0.0.1 -p 8003 After completing the stress test, switch the WAL file to ensure that PITR can restore to the last WAL logs by using restore_command.

psql -h 127.0.0.1 -p 8003 -U postgres

checkpoint;

select pg_switch_wal();

checkpoint;

select pg_switch_wal(); Check whether PITR restores WAL logs to that on the new primary database (the original standby database).

digoal@pg11-test-> psql -h 127.0.0.1 -p 8003

psql (11.1)

Type "help" for help.

postgres=# select count(*) from pgbench_history ;

count

---------

9319998

(1 row)

postgres=# \q

digoal@pg11-test-> psql -h 127.0.0.1 -p 8002

psql (11.1)

Type "help" for help.

postgres=# select count(*) from pgbench_history ;

count

---------

9319998

(1 row) The results are completely consistent.

TL has also become 3.

digoal@pg11-test-> pg_controldata -D /data01/digoal/pg_root8002|grep -i timeline

Latest checkpoint's TimeLineID: 2

Latest checkpoint's PrevTimeLineID: 2

Min recovery ending loc's timeline: 3

digoal@pg11-test-> pg_controldata -D /data01/digoal/pg_root8003|grep -i timeline

Latest checkpoint's TimeLineID: 3

Latest checkpoint's PrevTimeLineID: 3

Min recovery ending loc's timeline: 0

PITR实例,做完检查点就打通了

digoal@pg11-test-> psql -h 127.0.0.1 -p 8002

psql (11.1)

Type "help" for help.

postgres=# checkpoint;

CHECKPOINT

postgres=# \q

digoal@pg11-test-> pg_controldata -D /data01/digoal/pg_root8002|grep -i timeline

Latest checkpoint's TimeLineID: 3

Latest checkpoint's PrevTimeLineID: 3

Min recovery ending loc's timeline: 3 1) Run the restore command in recovery.conf instead of stream.

2) After switchover, archive the history file as soon as possible.

1) Streaming recovery is adopted, and the original primary database is connected.

2) The WAL file during the switchover of the original primary database is copied to the pg_wal directory of the target set, for example, the 000000010000000200000058 file in this case.

Set the recovery_target_timeline parameter.

PostgreSQL: How libpq Helps Configure Multi-Backend Connection Preferences

How to Prevent Downstream Timeline Errors Caused by Primary/Standby Switchover

digoal - June 24, 2021

ApsaraDB - May 29, 2025

Alibaba Cloud Native Community - October 26, 2023

ApsaraDB - January 16, 2026

ApsaraDB - November 12, 2024

digoal - April 26, 2021

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More Application High Availability Service

Application High Availability Service

Application High Available Service is a SaaS-based service that helps you improve the availability of your applications.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by digoal