By Chen Qiwei.

As soon as you open the Tmall app, it displays content tailored to your online shopping experience by the intelligent recommendation algorithms running in the background. In this article, Chen Qiwei, an algorithm engineer from the Alibaba Search and Recommendation Department, introduces how personalized search recommendations are implemented on Tmall, specifically on the Tmall homepage, to help you understand the nitty-gritty of what goes on in the background.

The Tmall homepage, driven by recommendation algorithms, is the first impression users receive when they open the Tmall app. Products recommended on the homepage can shape the user's subsequent behavior in the app. The homepage plays a vital role in receiving and distributing user traffic, improving the user's overall shopping experience, and showcasing the cost-effectiveness, quality, and branding power of Tmall products. In other words, the Tmall homepage has become a key piece in improving the user experience on Tmall.

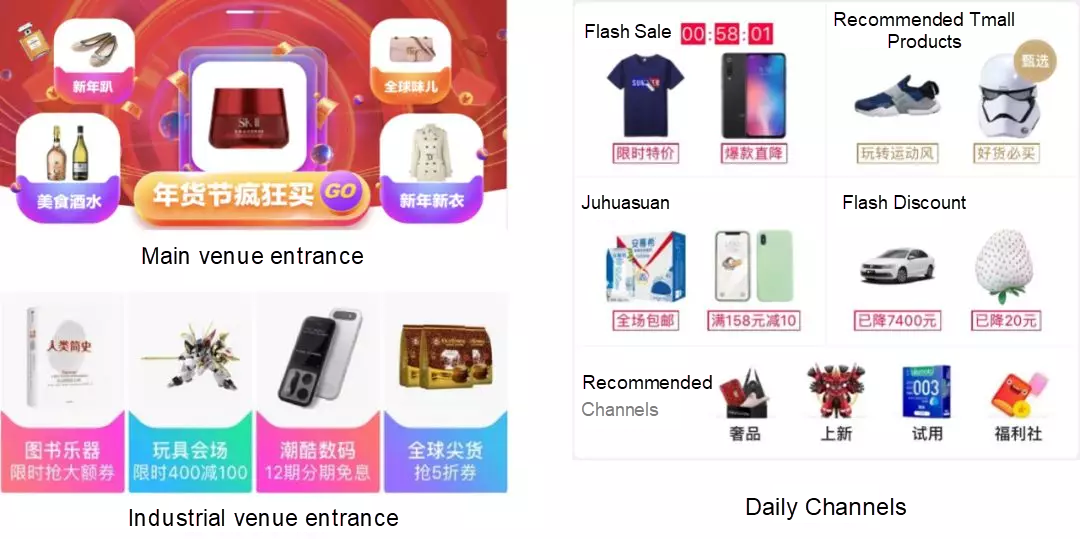

The user may come across two types of Tmall homepage, or homepage scenarios, which differ markedly in structure. One is the entrance homepage for promotion venues, which the user sees during major shopping events such as the Double 11 and Double 12 shopping festivals. The other is the daily channels homepage, which is what the user typically sees when opening the mobile app. Examples of both types are shown in Figure 1. The figure on the left shows entrances to the main and industrial venues. The main venue entrance recommends seven products to users, with a dynamic carousel of three products in the middle, and distributes traffic to the main venue. Tens of millions of unique visitors (UVs) have been redirected to this kind of main venue page. The industrial venue entrance (shown below) recommends four personalized venues to distribute traffic to tens of thousands of venues. The figure on the right (below) shows the daily channels, which include Flash Sales, Recommended Tmall Products, Juhuasuan (聚划算, Group Buys), Flash Discounts, and Recommended Channels. Through personalized product recommendations, the homepage distributes traffic to the various channels to increase user loyalty and encourage users to shop more on Tmall.

Figure 1. Tmall Homepage Types.

In the past, the recommendation algorithms for the Tmall homepage focused on optimizing the relevance of recommendations. Now, the recommendation system considers not only the relevance of recommendation results but also their discovery and diversity. Efficiency and user experience are now the two main optimization objectives of the Tmall homepage. New technologies such as graph embeddings, transformers, deep learning, and knowledge graphs have been applied to the recommendation system for the Tmall homepage. Together, these changes have delivered double-digit click-through rate (CTR) growth and a double-digit fatigue reduction in different scenarios.

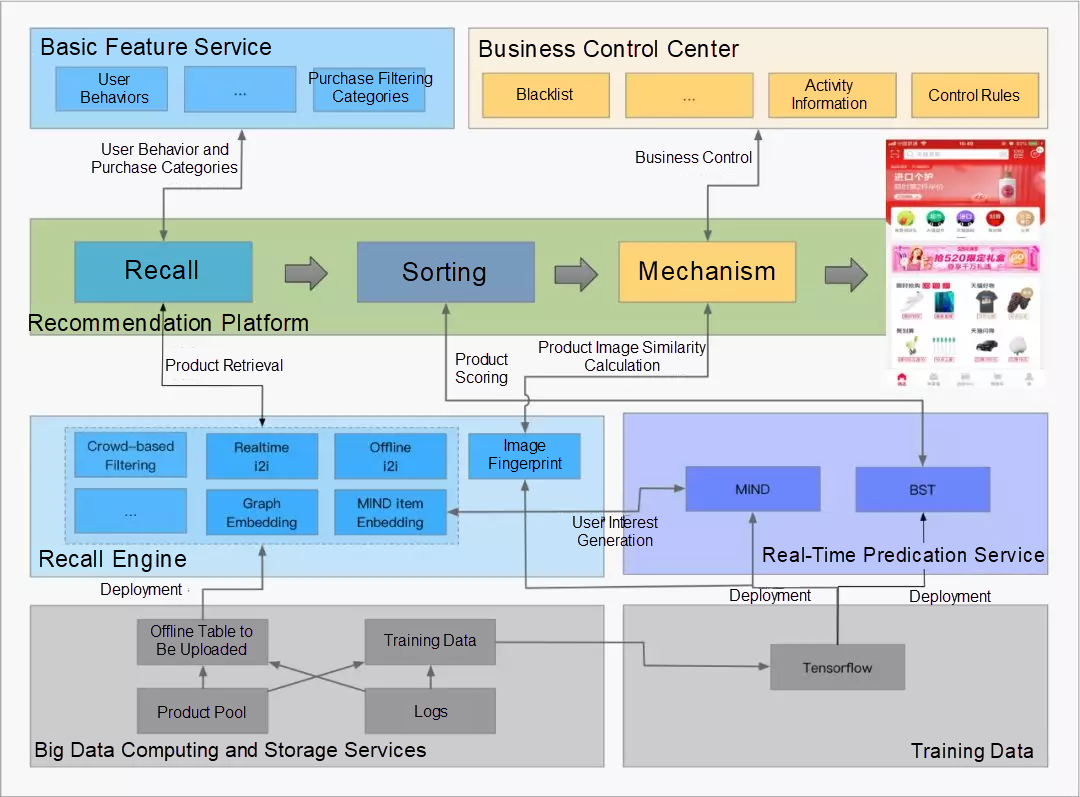

The personalized recommendation system for the Tmall homepage consists of (1) the recall module, (2) the sorting module, and (3) the mechanism module, each of which is discussed in more detail in the following sections. In short, the recall module retrieves from all products the top-k candidate products that users may be interested in. The sorting module focuses on estimating each product's click-through rate (CTR). The mechanism module controls traffic, optimizes user experience, adjusts the strategies of the system algorithm, and sorts products. The recommendation system also uses a number of new technologies, as mentioned above. The following sections describe these key technologies in detail.

Figure 2. The Framework of the Recommendation System for Tmall Homepages.

Item-CF is the most widely used recall algorithm. It calculates the similarity (simScore) between products based on how often the two products are clicked together, which yields an i2i table. Then, it queries the i2i table with the user's triggers to expand the set of products that the user is interested in. Although the Item-CF algorithm is simple, it must be tuned to the actual business scenario for better results. The following optimizations can significantly improve it: clearing noise data such as crawler traffic and fake orders, choosing a reasonable time window for calculating the similarity between products, introducing time attenuation, considering only product pairs of the same category, normalization, truncation, and discretization.
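To make the i2i idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the production algorithm: the session input format, the cosine-style normalization, and the helper names `build_i2i` and `recall` are all hypothetical, and none of the optimizations listed above (time attenuation, same-category pairs, noise clearance) are included apart from truncation.

```python
from collections import defaultdict
from itertools import combinations
from math import sqrt


def build_i2i(sessions, top_n=2):
    """Build an item-to-item table from co-click counts.

    sessions: iterable of lists of item IDs clicked together (assumed format).
    simScore is assumed to be co_clicks / sqrt(clicks_a * clicks_b).
    """
    clicks = defaultdict(int)
    co_clicks = defaultdict(int)
    for session in sessions:
        items = sorted(set(session))
        for item in items:
            clicks[item] += 1
        for a, b in combinations(items, 2):
            co_clicks[(a, b)] += 1

    rows = defaultdict(list)
    for (a, b), n in co_clicks.items():
        score = n / sqrt(clicks[a] * clicks[b])
        rows[a].append((b, score))
        rows[b].append((a, score))
    # Truncation: keep only the top_n most similar items per row.
    return {
        item: sorted(row, key=lambda p: (-p[1], p[0]))[:top_n]
        for item, row in rows.items()
    }


def recall(i2i, triggers, k=3):
    """Expand a user's trigger items through the i2i table."""
    scores = defaultdict(float)
    for trigger in triggers:
        for item, score in i2i.get(trigger, []):
            if item not in triggers:
                scores[item] += score
    return sorted(scores, key=lambda i: (-scores[i], i))[:k]


sessions = [["a", "b"], ["a", "b", "c"], ["b", "c"], ["a", "d"]]
i2i = build_i2i(sessions)
print(recall(i2i, ["a"]))  # ['b', 'd']
```

Item `b` ranks first for trigger `a` because the two are co-clicked in two of the four sessions.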

Ranki2i is an optimized Item-CF algorithm. It multiplies the similarity (simScore) between products obtained by Item-CF by the CTR of the target item recalled by the trigger item in the past period to correct simScore in the i2i table. Therefore, the i2i table considers not only the click co-occurrence of both products, but also the CTR of recalled products.
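The correction step can be sketched in a few lines of Python. The table layout, the `pair_ctr` dictionary keyed by (trigger, target), and the function name `rank_i2i` are assumptions made for illustration only.

```python
def rank_i2i(i2i, pair_ctr, default_ctr=0.0):
    """Multiply each simScore by the historical CTR of the (trigger, target) pair.

    i2i: trigger -> list of (target, simScore), as produced by Item-CF.
    pair_ctr: (trigger, target) -> CTR observed when target was recalled by trigger.
    """
    table = {}
    for trigger, row in i2i.items():
        rescored = [
            (target, sim * pair_ctr.get((trigger, target), default_ctr))
            for target, sim in row
        ]
        table[trigger] = sorted(rescored, key=lambda p: (-p[1], p[0]))
    return table


i2i = {"a": [("b", 0.8), ("c", 0.6)]}
pair_ctr = {("a", "b"): 0.02, ("a", "c"): 0.05}
ranked = rank_i2i(i2i, pair_ctr)
print([target for target, _ in ranked["a"]])  # ['c', 'b']
```

In this toy example `c` overtakes `b` even though its co-click similarity is lower, because users clicked it more often when it was recalled.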

Based on the network-wide CTR data and Tmall homepage scenario logs, we calculate the Ranki2i table and deploy it on the basic engine of the retrieval system. When a user accesses the Tmall homepage, the basic engine retrieves the user's trigger from the Ali Basic Feature Server (ABFS) and queries the Ranki2i table to recall products that the user is interested in.

The classic Item-CF algorithm calculates the similarity between products based on how often the two products are clicked together. It is good at identifying the similarity and correlation between the products a user has clicked and their matching products, and it is simple and performs well, which is why it has become the most widely used recall algorithm. However, in the classic Item-CF algorithm, the candidate sets of recall results are based only on the categories of the user's historical behavior and not on product-side information. Consequently, the recommendation results have weak discovery capabilities and poor effects on long-tail products. Over time, the system recommends fewer products from a narrower scope, which restricts its sustainable development. To precisely recommend favorite products to users, maintain the sustainable development of the recommendation system, and resolve the problems of weak discovery and poor effects on long-tail products, we proposed the S3 graph embedding and MIND algorithms.

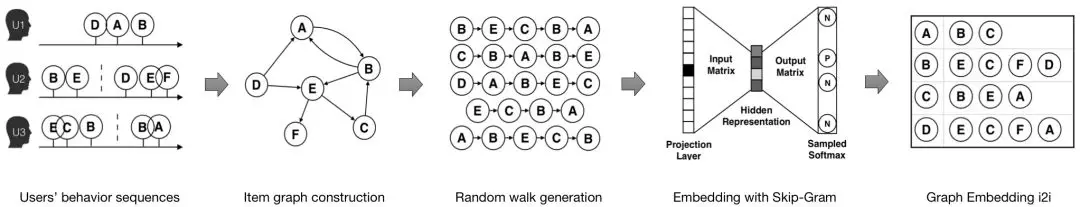

Graph embedding is a machine learning technology that projects complex networks to low-dimensional space. Typically, this technology vectorizes network nodes and ensures that the vector similarity between nodes is close to the multi-dimensional similarity between original nodes in terms of the network structure, neighborship, and metadata.

The S3 graph embedding algorithm builds a graph on the scale of hundreds of billions from billions of users' serialized clicks on billions of products. It uses the deep random walk technology to perform virtual sampling on user behavior, introduces product-side information to improve its generalization capability, and builds embeddings for all products so that they are vectorized in the same dimension. These vectors are used directly in the Item-CF algorithm to calculate product similarity. Unlike the classic Item-CF algorithm, the S3 graph embedding algorithm can calculate the similarity between two products even if they have never been clicked together. The side information also helps it better handle long-tail and cold-start products.
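The internals of S3 are not described here, so the following Python sketch only illustrates the general random-walk sampling idea in the style of DeepWalk. The graph construction rule (an edge from each clicked item to the next one), the walk length, and the function names are assumptions. The sampled walks would then be fed to a skip-gram style trainer together with side information, which is not shown.

```python
import random
from collections import defaultdict


def build_item_graph(click_sequences):
    """Directed weighted graph: edge a -> b counts how often b was clicked right after a."""
    graph = defaultdict(lambda: defaultdict(int))
    for seq in click_sequences:
        for a, b in zip(seq, seq[1:]):
            if a != b:
                graph[a][b] += 1
    return graph


def random_walk(graph, start, length, rng):
    """Sample one weighted random walk; stops early at a node with no outgoing edge."""
    walk = [start]
    while len(walk) < length:
        neighbors = graph.get(walk[-1])
        if not neighbors:
            break
        items, weights = zip(*neighbors.items())
        walk.append(rng.choices(items, weights=weights, k=1)[0])
    return walk


rng = random.Random(0)
graph = build_item_graph([["a", "b", "c"], ["b", "c", "d"], ["a", "c"]])
walks = [random_walk(graph, node, 4, rng) for node in list(graph)]
for walk in walks:
    print(walk)
```

Each walk is a synthetic behavior sequence, which is how items that were never clicked together can still end up close in the embedding space.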

Figure 3. Graph Embedding.

Based on the Behemoth X2Vec platform, we use the CTR data and product side information on the network to build the embedding of all products, calculate the graph embedding i2i table, and deploy the table on the basic engine. When a user accesses the Tmall homepage, the basic engine retrieves the user's trigger from the ABFS and queries the graph embedding i2i table to recall products that the user is interested in.

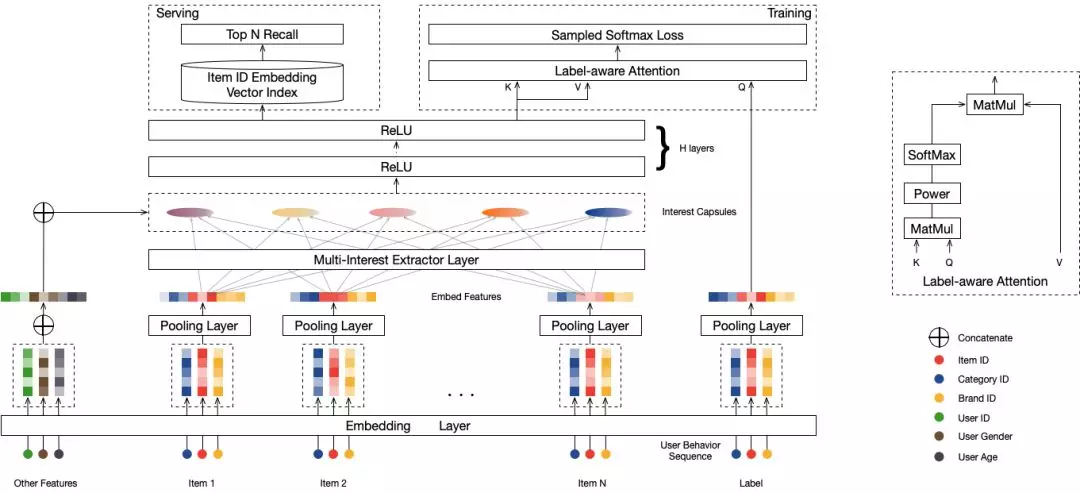

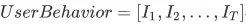

Multi-Interest Network with Dynamic Routing (MIND) is a vector recall method proposed by our team. It constructs multiple user interest vectors in the same vector space as the product vectors to represent a user's interests. Then, it retrieves the top K neighboring product vectors based on these interest vectors to obtain the top K products that the user is interested in.

The traditional DeepMatch method generates a single interest vector for each user. However, in actual shopping scenarios, a user's interests are varied and may even be unrelated to each other. For example, a user may want to buy clothing, cosmetics, and snacks at the same time, and a single vector of limited length can hardly represent all of these interests well. Our MIND model uses the dynamic routing method to dynamically learn vectors that indicate a user's interests from user behaviors and attribute information, which better captures the user's diversified interests and improves the richness and accuracy of recall.
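The benefit of several interest vectors over one can be shown with a small brute-force Python sketch. This covers only the retrieval step, not dynamic routing. The vectors, the item names, and the rule of scoring each item by its best-matching interest are illustrative assumptions; a production system would use an approximate nearest-neighbor index instead of scanning all items.

```python
def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def multi_interest_recall(interest_vectors, item_vectors, k):
    """Score each item by its best-matching interest vector, then keep the top k."""
    scores = {
        item: max(dot(interest, vec) for interest in interest_vectors)
        for item, vec in item_vectors.items()
    }
    return sorted(scores, key=lambda i: (-scores[i], i))[:k]


# Toy 2-d item embeddings (hypothetical).
items = {
    "dress": [1.0, 0.0],
    "lipstick": [0.0, 1.0],
    "chips": [0.7, 0.7],
    "drill": [-1.0, -1.0],
}

# One averaged interest vector versus two separate interest vectors.
single = multi_interest_recall([[0.5, 0.5]], items, k=2)
multi = multi_interest_recall([[1.0, 0.0], [0.0, 1.0]], items, k=2)
print(single)  # ['chips', 'dress']
print(multi)   # ['dress', 'lipstick']
```

The averaged vector favors an item that sits between the two interests, while the two separate vectors each retrieve their own best match.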

Figure 4. The MIND Model.

We have developed a complete MIND online service system based on the real-time prediction (RTP) service and the recall basic engine. In each access scenario, the multi-interest extractor layer of the MIND model deployed on the RTP service extracts multiple user interest vectors. The basic engine then uses these vectors to recall products that users are interested in through the AITheta search engine.

Retargeting is a strategy that recommends products that users have clicked, added to favorites, or purchased before. In e-commerce recommendation systems, users' behaviors include browsing, clicking, adding to favorites, adding to cart, and ordering. Even though we hope that users' behaviors can eventually be converted to transactions, the reality is a different story. When a user performs behaviors that precede ordering, the user may not complete the transaction for various reasons. This does not mean that the user is not interested in the product. When the user visits Tmall again, we identify the user's real intention based on the user's prior behaviors, recommend products that meet that intention again, and ultimately prompt the user to place an order.

The retargeting strategy is often used to promote transactions in promotional venues, and its recall volume must be controlled strictly.

The preceding recall strategies recall products that users are interested in based on the user's historical behavior. However, the number of recalled products is limited for offline and cold-start users. Crowd-based filtering is a substitute recall strategy that recommends products based on coarse-grained attributes of user groups, such as gender, age, and recipient city. Based on the behavioral data of each group, it selects the top K products with the highest CTR as the products that the group is interested in.
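A minimal Python sketch of this fallback is shown below. The log format `(group, item, clicked)`, the group key of (gender, age band, city), and the `min_exposures` threshold are assumptions for illustration.

```python
from collections import defaultdict


def top_k_by_group(logs, k, min_exposures=1):
    """logs: iterable of (group, item, clicked) exposure records.

    Returns group -> list of the k items with the highest CTR in that group.
    """
    stats = defaultdict(lambda: [0, 0])  # (group, item) -> [clicks, exposures]
    for group, item, clicked in logs:
        stats[(group, item)][0] += int(clicked)
        stats[(group, item)][1] += 1

    per_group = defaultdict(list)
    for (group, item), (clicks, exposures) in stats.items():
        if exposures >= min_exposures:
            per_group[group].append((clicks / exposures, item))

    return {
        group: [item for _, item in sorted(rows, key=lambda r: (-r[0], r[1]))[:k]]
        for group, rows in per_group.items()
    }


young_women = ("F", "18-24", "Hangzhou")
men = ("M", "25-34", "Beijing")
logs = [
    (young_women, "lipstick", True),
    (young_women, "lipstick", False),
    (young_women, "dress", True),
    (young_women, "drill", False),
    (men, "drill", True),
]
table = top_k_by_group(logs, k=2)
print(table[young_women])  # ['dress', 'lipstick']
```

A new user with no history is then served the list for the group that matches his or her attributes.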

To combine the advantages of different recall strategies and improve the diversity and coverage of candidate sets, we merge the products that are recalled by the preceding recall strategies. During merging, we adjust the recall ratio of each recall algorithm based on its historical recall results and throttling requirements.
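One simple way to picture ratio-based merging is a quota per strategy, as in the Python sketch below. The strategy names, the ratios, and the quota rule `round(ratio * total)` are assumptions; the actual modulation logic is not described in this article.

```python
def merge_recalls(recalls, ratios, total):
    """Merge ranked candidate lists, giving each strategy round(ratio * total) slots.

    recalls: strategy name -> ranked list of item IDs.
    ratios: strategy name -> share of the final candidate set.
    Items already taken by an earlier strategy are skipped.
    """
    merged, seen = [], set()
    for name, candidates in recalls.items():
        quota = round(ratios.get(name, 0.0) * total)
        taken = 0
        for item in candidates:
            if taken >= quota:
                break
            if item not in seen:
                seen.add(item)
                merged.append((item, name))
                taken += 1
    return merged[:total]


recalls = {
    "rank_i2i": ["a", "b", "c"],
    "graph_embedding": ["b", "d"],
    "mind": ["e", "a", "f"],
}
ratios = {"rank_i2i": 0.5, "graph_embedding": 0.25, "mind": 0.25}
candidates = merge_recalls(recalls, ratios, total=4)
print(candidates)
# [('a', 'rank_i2i'), ('b', 'rank_i2i'), ('d', 'graph_embedding'), ('e', 'mind')]
```

Keeping the source strategy next to each item makes it easy to measure each strategy's historical results and adjust its ratio later.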

Sorting features greatly affect the sorting effect. The sorting module of the recommendation system for the Tmall homepage has the following types of features:

Sorting samples also affect the sorting effect. Sorting samples come from the exposure and click logs generated in each scenario. The following methods are used to improve the sorting effect: cleaning scenario logs and removing noise data from them; accurately calculating scenario-specific active and blacklisted users in quasi real time and retaining users who are interested in the scenarios; filtering out data related to cheating behaviors, such as crawling and scalping; and filtering out abnormal behavior logs in special time segments, for example, orders placed at 00:00:00 and red packet rain periods.

The Wide and Deep Learning (WDL) model proposed by Google builds the basic framework of the deep sorting model.

Deep Factorization-Machine (DeepFM), Product-based Neural Networks (PNN), Deep & Cross Network (DCN), Deep Residual Networks (Deep ResNet), and similar models bring the feature engineering experience of traditional discrete LR into deep learning (DL). They use manually constructed algebraic priors to give the model a preset cognitive structure that facilitates modeling. Deep Interest Network (DIN) and similar models introduce user behavior data and use the attention mechanism to capture users' diversified interests and their local correlation with the target item, which makes it possible to model large-scale discrete user behavior data.

Models such as DeepFM, PNN, DCN, and Deep ResNet focus on how to make better use of ID and bias features to approach the upper limit that the model can reach. These models seldom explore how to use sequence features effectively. DIN and similar models go further in sequence feature modeling. They use the target item to attend over the sequence features and then perform weighted sum pooling. Even though this captures the correlation between the scoring item and the user behavior sequence, the correlations among the behaviors within the sequence are not modeled.

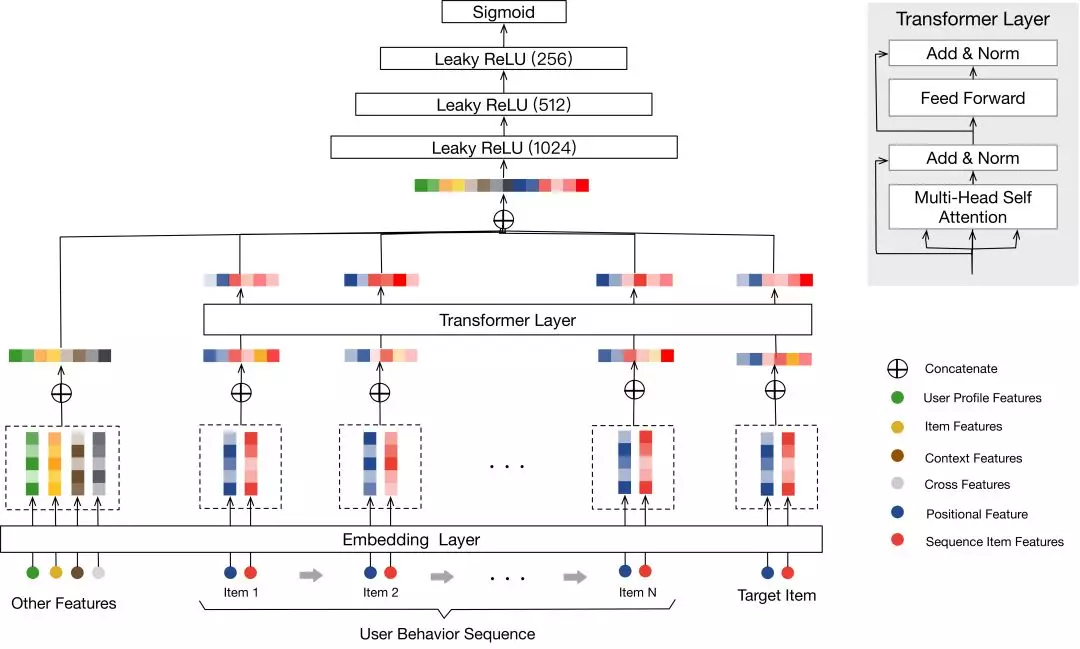

To resolve the preceding problems of the Wide and Deep Learning (WDL) and DIN models, and inspired by how effectively the transformer processes word sequences in NLP tasks, we proposed the behavior sequence transformer (BST) model. This model uses the transformer to model users' behavior sequences and to learn both the correlations within a user behavior sequence and their correlation with the scoring item.

Figure 5. The Behavior Sequence Transformer (BST) Model.

Figure 5 shows the BST model structure. The model takes as inputs the user behavior sequence (including the scoring item), user features, product features, contextual features, and crossing features. First, the model embeds these inputs into low-dimensional dense vectors. To better learn the correlations within the user behavior sequence and their correlation with the scoring item, the transformer learns a deep representation of each item in the sequence. Then, the model combines the user features, product features, contextual features, crossing features, and the transformer layer output to obtain the sample's feature representation vector, and uses a three-layer Multi-Layer Perceptron (MLP) network to learn more abstract feature representations and the relationships between features. Finally, the model outputs the prediction through a sigmoid function.

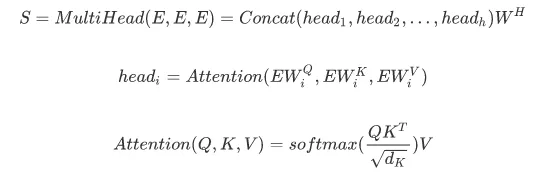

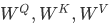

The model uses the transformer to model user behavior sequences. It uses self-attention to learn the correlations within a user behavior sequence and their correlation with the scoring item. Considering the physical meaning of the vectors, the inner product is used to calculate the attention: the more similar two products are, the larger their inner product and the higher the attention gain. In addition, multi-head attention is used to calculate sequential features in multiple parallel spaces, which improves the fault tolerance and accuracy of the model.

Here, $W^Q$, $W^K$, and $W^V$ are the projection matrices, E is the embedding representation obtained after the user behavior sequence is spliced with the current scoring item, and h is the number of heads.

To further enhance the non-linear representation capability of the network, a point-wise feed-forward network (FFN) is applied to the self-attention output.

Based on the RTP service, we deploy the quantized BST model in the GPU cluster. The RTP service splits the list of scoring items and scores each item to predict its CTR in a timely manner.
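The inner-product self-attention step can be illustrated with a small pure-Python sketch. To keep it short, the learned projection matrices are replaced by identity projections and each head simply works on its own slice of the embedding, so this is a simplification rather than the BST implementation. The scaling by the square root of the dimension follows the standard transformer and is also an assumption here. The point-wise FFN is omitted.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(E):
    """Scaled inner-product self-attention over a sequence of d-dim embeddings.

    Identity projections are assumed; a real model learns W^Q, W^K, and W^V.
    """
    d = len(E[0])
    out = []
    for q in E:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in E]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, E)) for j in range(d)])
    return out


def multi_head_self_attention(E, h):
    """Split each embedding into h slices, attend per slice, and concatenate."""
    d = len(E[0])
    if d % h != 0:
        raise ValueError("embedding size must be divisible by the number of heads")
    size = d // h
    heads = [self_attention([v[i * size:(i + 1) * size] for v in E]) for i in range(h)]
    return [sum((head[t] for head in heads), []) for t in range(len(E))]


# Two similar clicked items followed by an unrelated scoring item (toy embeddings).
E = [[1.0, 0.0, 0.0, 0.0], [0.9, 0.1, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
single_head = self_attention(E)
two_heads = multi_head_self_attention(E, h=2)
print([round(x, 3) for x in single_head[0]])
```

In the single-head output, the first item draws most of its new representation from itself and the similar second item, because their inner products are larger than the inner product with the unrelated third item.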

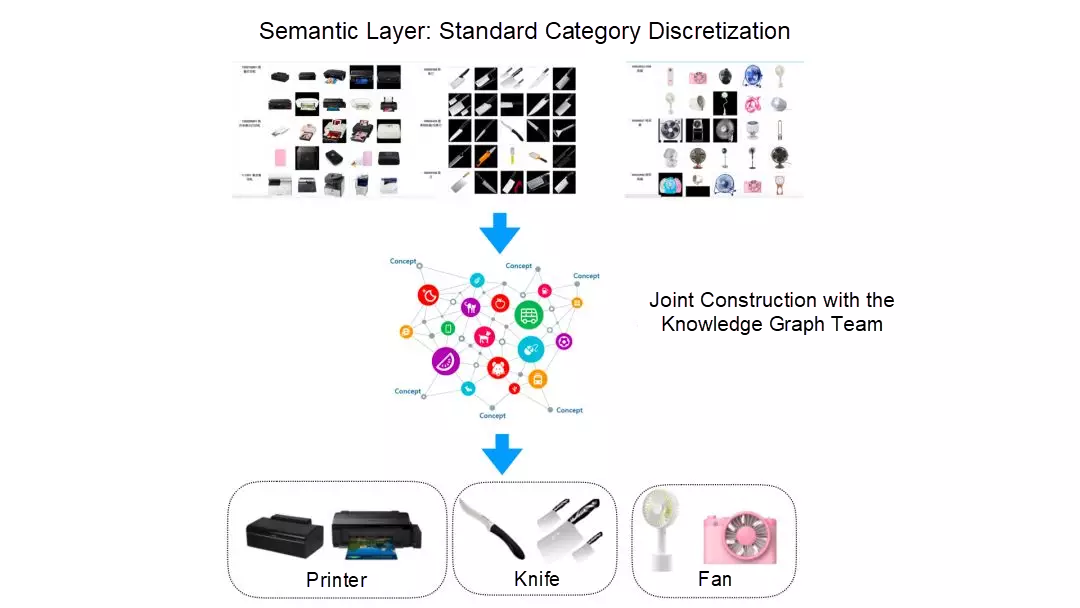

Restricted by various factors, product categories on Taobao and Tmall are very fine-grained and do not match how users subjectively classify products in recommendation scenarios. In collaboration with the knowledge graph team, we have established a standard category system that aggregates similar leaf categories based on semantics and scenario features, and applied it to filter purchase categories and expand categories when the categories are scattered.

Figure 6. Standard category system.

Taobao has a huge number of product materials, and countless products use similar pictures. This similarity is not a product attribute and cannot be identified through semantic information, such as the title and category of the products. We have developed a similar image detection system to detect the similarity between product images.

The similar image detection system uses a Convolutional Neural Network (CNN) as a classifier to identify the leaf categories to which product images belong, takes the vectors of the last hidden layer as image feature vectors, and calculates the similarity between products based on vector similarity. To accelerate the similarity calculation, it uses the SimHash algorithm to convert the image feature vectors to binary image fingerprints. The Hamming distance between image fingerprints is then calculated instead of the distance between feature vectors. This greatly reduces the computational complexity within an acceptable range of precision loss.
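The fingerprinting step can be sketched in Python with the random-hyperplane form of SimHash. The fingerprint length of 64 bits, the Gaussian hyperplanes, and the function names are assumptions, and the CNN that would produce the feature vectors is replaced by hand-written toy vectors.

```python
import random


def make_hyperplanes(dim, bits, seed=0):
    """Draw one random hyperplane (normal vector) per fingerprint bit."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(bits)]


def simhash(vector, hyperplanes):
    """Pack the sign of the projection onto each hyperplane into an integer."""
    fingerprint = 0
    for plane in hyperplanes:
        bit = sum(p * x for p, x in zip(plane, vector)) >= 0
        fingerprint = (fingerprint << 1) | int(bit)
    return fingerprint


def hamming(a, b):
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


planes = make_hyperplanes(dim=4, bits=64)
image = [0.2, -1.3, 0.7, 0.5]
rescaled = [2 * x for x in image]   # same direction, different magnitude
opposite = [-x for x in image]      # opposite direction

print(hamming(simhash(image, planes), simhash(rescaled, planes)))  # 0
print(hamming(simhash(image, planes), simhash(opposite, planes)))  # 64
```

Comparing two fingerprints costs one XOR and a bit count, regardless of the dimension of the original feature vectors, which is where the speedup comes from.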

Figure 7. Similar-Image Detection System.

The Tmall homepage consists of venue entrances and daily channels. Venue entrances include entrances to the main and industrial venues, while daily channels include Flash Sales, Recommended Tmall Products, Juhuasuan, Flash Discounts, and Recommended Channels. Each channel has its own pool of product materials, but the pools are similar and overlap to some extent. If this similarity is not restricted, the channels may show similar recommendation results. As a result, the limited and precious homepage space is not fully utilized, the user experience is degraded, and the cultivation of users' interest in the scenarios is negatively affected. We have designed a variety of dispersal solutions that jointly spread out the materials recommended by the various channels on the homepage across multiple dimensions (such as products, standard categories, brands, venues, and similar images) to ensure diverse recommendation results.
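As a rough illustration of joint de-duplication across channels, the Python sketch below fills the channels in order and skips any candidate that repeats a value already shown in one of the chosen dimensions. The greedy rule, the candidate record layout, and the dimension names are assumptions; the actual solutions are not detailed in this article.

```python
def diversify_channels(channel_candidates, slots_per_channel,
                       dimensions=("item_id", "category", "brand")):
    """Greedily fill each channel while avoiding repeats across all channels.

    channel_candidates: channel name -> ranked list of candidate dicts.
    A candidate is skipped if any of its dimension values was already shown.
    """
    seen = {dim: set() for dim in dimensions}
    result = {}
    for channel, candidates in channel_candidates.items():
        picked = []
        for cand in candidates:
            if len(picked) == slots_per_channel:
                break
            if any(cand[dim] in seen[dim] for dim in dimensions):
                continue
            for dim in dimensions:
                seen[dim].add(cand[dim])
            picked.append(cand["item_id"])
        result[channel] = picked
    return result


channel_candidates = {
    "flash_sales": [
        {"item_id": 1, "category": "shoes", "brand": "A"},
        {"item_id": 2, "category": "shoes", "brand": "B"},
        {"item_id": 3, "category": "bags", "brand": "C"},
    ],
    "juhuasuan": [
        {"item_id": 1, "category": "shoes", "brand": "A"},
        {"item_id": 4, "category": "bags", "brand": "D"},
        {"item_id": 5, "category": "snacks", "brand": "E"},
    ],
}
layout = diversify_channels(channel_candidates, slots_per_channel=2)
print(layout)  # {'flash_sales': [1, 3], 'juhuasuan': [5]}
```

A strict rule like this can leave slots empty, so a real system would likely relax some dimensions when too few candidates remain.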

Because the Tmall homepage is the first screen in the Tmall app, it is exposed each time a user opens the app. However, many of these exposures are invalid. For example, users may go directly to the search channel or shopping cart, or grab red packets or coupons during promotional periods. In these invalid exposures, users are not interested in the content of the Tmall homepage. The common method of recording exposed products and filtering them out in real time is far too strict for a scenario such as the homepage, where the invalid exposure rate is high, and would greatly weaken the recommendation effect. To address this issue, we have designed a template-based, real-time exposure filtering method. This method recommends multiple templates to a user at a time, records the ith template previously viewed by the user, and displays the (i+1)th template on the next visit. If the user has new behaviors, the recommended content in the templates is also updated.
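The rotation logic can be sketched as a small Python class. The class name, the in-memory storage, and the wrap-around back to the first template after the last one are assumptions made for illustration.

```python
class TemplateRotator:
    """Keeps several pre-ranked templates per user and serves the next one on each visit."""

    def __init__(self):
        self._templates = {}   # user_id -> list of templates (each a list of items)
        self._last_index = {}  # user_id -> index of the template shown last

    def refresh(self, user_id, templates):
        """Replace a user's templates, for example after new behavior; rotation restarts."""
        self._templates[user_id] = list(templates)
        self._last_index[user_id] = -1

    def next_template(self, user_id):
        """Return template i+1 given that template i was shown last (wraps around)."""
        templates = self._templates[user_id]
        i = (self._last_index[user_id] + 1) % len(templates)
        self._last_index[user_id] = i
        return templates[i]


rotator = TemplateRotator()
rotator.refresh("u1", [["a", "b"], ["c", "d"], ["e", "f"]])
first = rotator.next_template("u1")
second = rotator.next_template("u1")
print(first, second)  # ['a', 'b'] ['c', 'd']
```

Because whole templates rotate, a product from an earlier template can still reappear later, which is gentler than permanently filtering every exposed product.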

In the past, users often complained that the recommendation system recommended products they had already purchased. To resolve this problem, the user's purchased categories need to be filtered properly. Because leaf categories have different purchase cycles and users have different purchase cycles for the same category, the user's personalized requirements for filtering different purchase categories need to be considered. Purchase filtering is a common problem in all recommendation scenarios. Together with the engineering team, we have launched a unified global purchase filtering service that customizes a purchase blocking period for each category. Based on the user's recent purchase behavior, a real-time list of filtered purchase categories is maintained for each user. If a user clicks a category multiple times during the purchase blocking period, this indicates that the user is still interested in the category and may purchase another product from it. In this case, the category is unblocked. Applying the purchase filtering service to the Tmall homepage has greatly mitigated the problem of recommending already purchased products.
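The blocking and unblocking rules can be sketched as follows in Python. The class name, the per-category periods, the default period of 7 days, and the threshold of 3 clicks are hypothetical values chosen for illustration, not the settings of the actual service.

```python
from datetime import datetime, timedelta


class PurchaseFilter:
    """Blocks a purchased category for a per-category period, unless the user keeps clicking it."""

    def __init__(self, block_days, default_days=7, unblock_clicks=3):
        self.block_days = block_days        # category -> blocking period in days
        self.default_days = default_days
        self.unblock_clicks = unblock_clicks
        self._blocked_until = {}            # (user, category) -> datetime
        self._clicks = {}                   # (user, category) -> clicks since purchase

    def record_purchase(self, user, category, when):
        days = self.block_days.get(category, self.default_days)
        self._blocked_until[(user, category)] = when + timedelta(days=days)
        self._clicks[(user, category)] = 0

    def record_click(self, user, category, when):
        key = (user, category)
        if self.is_blocked(user, category, when):
            self._clicks[key] += 1
            if self._clicks[key] >= self.unblock_clicks:
                del self._blocked_until[key]  # repeated interest: unblock early

    def is_blocked(self, user, category, when):
        until = self._blocked_until.get((user, category))
        return until is not None and when < until


t0 = datetime(2019, 11, 11)
purchase_filter = PurchaseFilter({"phone": 90, "snacks": 3})
purchase_filter.record_purchase("u1", "phone", t0)
print(purchase_filter.is_blocked("u1", "phone", t0 + timedelta(days=1)))  # True
for day in (2, 3, 4):
    purchase_filter.record_click("u1", "phone", t0 + timedelta(days=day))
print(purchase_filter.is_blocked("u1", "phone", t0 + timedelta(days=5)))  # False
```

A long period suits durable goods such as phones, while a short one suits frequently repurchased goods such as snacks.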

In this article, we have looked into the Tmall homepage recommendation system in terms of the various algorithms it uses. Specifically, we have looked at how Tmall uses graph embedding, transformer, deep learning, knowledge graph, and user experience modeling technologies to build an advanced recommendation system across recall, sorting, and the recommendation mechanism. A complete recommendation system is complex. To build a Tmall homepage tailored to you, we need to cooperate with product, engineering, and operations personnel. At Alibaba, we will continue to accumulate experience, improve our solutions, and deepen our technologies to provide better personalized recommendation services in the future.
