
As Taobao's content-based strategy has developed, the demand for real-time product selection has grown. For some products (standard product units, or SPUs) with demanding real-time requirements, operations staff want the product pools they define to take effect in real time so that article writers can use them immediately. Stream computing can meet this need. This article describes how Alibaba's Blink real-time stream computing technology is used to implement real-time product selection, an approach that has achieved satisfying results in trials.

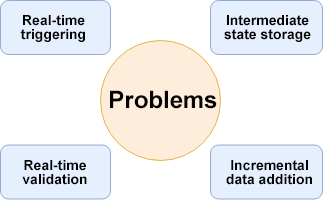

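The following problems must be resolved to implement real-time product selection: real-time triggering, intermediate state storage, real-time validation, and incremental data addition.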

The "TT + Blink + HBase + Swift" solution addresses these problems. Specifically, TimeTunnel (TT) can resolve the real-time triggering problem, HBase can resolve the intermediate state storage problem, and Swift can resolve the real-time validation and incremental data addition problems. TT, HBase, and Swift are described as follows:

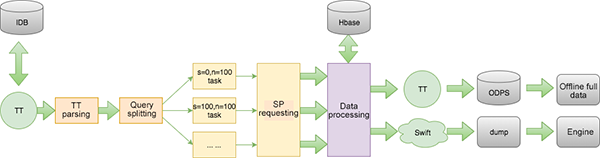
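The article does not show how the intermediate state in HBase and the incremental messages in Swift fit together. As a minimal sketch, assuming that the SPUs already selected for each product pool are stored under the pool ID and that only newly added SPUs need to be pushed downstream, the idea can be expressed in plain Java. `StateStore`, `InMemoryStore`, and `IncrementalPool` are hypothetical names: the in-memory map stands in for HBase and the returned set stands in for a Swift message, so no real HBase or Swift client API is used.

```java
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical sketch: keep per-pool intermediate state and return only the
 * SPUs that are new since the last update. The in-memory map stands in for an
 * HBase table keyed by pool ID (assumption); the returned set stands in for
 * the incremental message that would be sent through Swift (assumption).
 */
public class IncrementalPool {

    /** Stand-in for the external state store. */
    interface StateStore {
        Set<String> load(String poolId);
        void save(String poolId, Set<String> spuIds);
    }

    /** In-memory replacement for HBase, for illustration only. */
    static final class InMemoryStore implements StateStore {
        private final Map<String, Set<String>> table = new HashMap<>();

        @Override
        public Set<String> load(String poolId) {
            // Return a mutable copy so callers cannot modify the stored state directly.
            return new LinkedHashSet<>(table.getOrDefault(poolId, Set.of()));
        }

        @Override
        public void save(String poolId, Set<String> spuIds) {
            table.put(poolId, new LinkedHashSet<>(spuIds));
        }
    }

    private final StateStore store;

    IncrementalPool(StateStore store) {
        this.store = store;
    }

    /** Merges newly selected SPUs into the pool state and returns only the additions. */
    Set<String> addAndDiff(String poolId, List<String> selected) {
        Set<String> current = store.load(poolId);
        Set<String> added = new LinkedHashSet<>();
        for (String spu : selected) {
            if (current.add(spu)) {
                added.add(spu); // not yet in this pool: part of the increment
            }
        }
        if (!added.isEmpty()) {
            store.save(poolId, current); // persist the updated intermediate state
        }
        return added;
    }

    public static void main(String[] args) {
        IncrementalPool pool = new IncrementalPool(new InMemoryStore());
        System.out.println(pool.addAndDiff("pool-1", List.of("s1", "s2")));
        System.out.println(pool.addAndDiff("pool-1", List.of("s2", "s3")));
    }
}
```

One reason to keep such state in an external store rather than in the job's memory is that it survives job restarts, so SPUs that were already added are not re-sent.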

The Blink job is divided into six nodes: the log resolution node, the query splitting node, the SP requesting node, the data processing node, the TT writeback node, and the Swift messaging node. The real-time computing process is as follows:

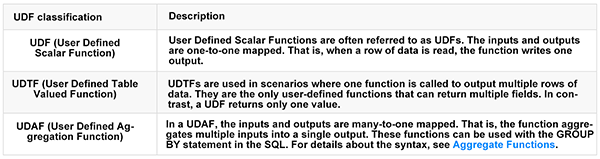
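Outside Blink, the six-node flow can be approximated as a chain of one-to-many transformations. The sketch below is a hypothetical illustration only: the `poolId|cond;cond` log format, the stubbed `fakeSearch` engine call, the reshaping done in the data processing step, and the two in-memory lists standing in for the TT and Swift targets are all assumptions, not the production job.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

/**
 * Hypothetical, framework-free sketch of the six-node flow. Every node is
 * modeled as a one-to-many function over string records; the log format,
 * the stubbed search call, and the sinks are illustrative assumptions.
 */
public class SelectionPipeline {

    /** Applies one node (a one-to-many function) to every input record. */
    static <A, B> List<B> node(List<A> in, Function<A, List<B>> fn) {
        List<B> out = new ArrayList<>();
        for (A a : in) {
            out.addAll(fn.apply(a));
        }
        return out;
    }

    /** Stub for the SP search engine: returns two fake SPU IDs per query. */
    static List<String> fakeSearch(String poolAndCond) {
        String[] p = poolAndCond.split("\\|", 2);
        String value = p[1].substring(p[1].indexOf('=') + 1);
        return List.of(p[0] + "|spu-" + value + "-1", p[0] + "|spu-" + value + "-2");
    }

    static void run(List<String> rawLogs, List<String> ttSink, List<String> swiftSink) {
        // 1. Log resolution: keep only well-formed "poolId|cond;cond" lines.
        List<String> events = node(rawLogs, l -> l.contains("|") ? List.of(l) : List.<String>of());
        // 2. Query splitting: one selection rule -> one query per condition.
        List<String> queries = node(events, e -> {
            String[] p = e.split("\\|", 2);
            List<String> out = new ArrayList<>();
            for (String cond : p[1].split(";")) {
                out.add(p[0] + "|" + cond);
            }
            return out;
        });
        // 3. SP requesting: one query -> zero or more SPU rows.
        List<String> spus = node(queries, SelectionPipeline::fakeSearch);
        // 4. Data processing: reshape each row to "poolId,spuId" (assumed format).
        List<String> rows = node(spus, s -> List.of(s.replace('|', ',')));
        // 5. TT writeback and 6. Swift messaging: both consume the processed rows (assumed).
        ttSink.addAll(rows);
        swiftSink.addAll(rows);
    }

    public static void main(String[] args) {
        List<String> tt = new ArrayList<>();
        List<String> swift = new ArrayList<>();
        run(List.of("pool-1|cat=dress;cat=shoes", "malformed"), tt, swift);
        System.out.println(tt);
    }
}
```

Modeling each node as a one-to-many function shows why the query splitting and SP requesting nodes map naturally onto UDTFs: each input row can fan out into several output rows.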

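The product selection function is implemented mainly by developing Blink tasks. Before developing Blink tasks, you must understand three kinds of user-defined functions: the user-defined scalar function (UDF), which maps one input row to one output value; the user-defined table-valued function (UDTF), which maps one input row to zero or more output rows; and the user-defined aggregate function (UDAF), which maps many input rows to one output value.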
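The three function types differ in the ratio of input rows to output rows. In Flink, on which Blink is based, they correspond to `ScalarFunction`, `TableFunction`, and `AggregateFunction`. The following framework-free sketch models those shapes with plain Java methods so that it runs without a cluster; `normalizeTitle`, `splitTags`, and `CountAcc` are hypothetical examples, while the `createAccumulator`/`accumulate`/`getValue` naming mirrors the convention of Flink's `AggregateFunction`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/**
 * Conceptual sketch of the three user-defined function shapes, written as
 * plain Java so the row-count semantics can be run without Blink or Flink.
 */
public class UdfShapes {

    // UDF (scalar): one input row -> exactly one output value.
    static String normalizeTitle(String title) {
        return title == null ? "" : title.trim().toLowerCase();
    }

    // UDTF (table function): one input row -> zero or more output rows.
    // The Consumer plays the role of TableFunction#collect.
    static void splitTags(String tags, Consumer<String> collect) {
        if (tags == null || tags.isEmpty()) {
            return; // emits no rows
        }
        for (String tag : tags.split(",")) {
            collect.accept(tag.trim());
        }
    }

    // UDAF (aggregate function): many input rows -> one accumulated result.
    static final class CountAcc {
        long count;
    }

    static CountAcc createAccumulator() {
        return new CountAcc();
    }

    static void accumulate(CountAcc acc, String value) {
        if (value != null) {
            acc.count++;
        }
    }

    static long getValue(CountAcc acc) {
        return acc.count;
    }

    public static void main(String[] args) {
        System.out.println(normalizeTitle("  Red Dress "));

        List<String> rows = new ArrayList<>();
        splitTags("a, b,c", rows::add);
        System.out.println(rows);

        CountAcc acc = createAccumulator();
        for (String r : rows) {
            accumulate(acc, r);
        }
        System.out.println(getValue(acc));
    }
}
```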

A major task in Blink development is implementing user-defined functions. First, divide the stream computing process into compute nodes; for example, the query splitting node and the SP requesting node are implemented as independent compute nodes. Then, determine which function classes each node needs and implement them according to that node's logic. The following uses the SP requesting node as an example to explain the implementation process in detail.

The following code implements the UDTF for the SP requesting node. It sends a request to the SP engine, parses the response, and emits each returned result as a separate row to the next node.

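```java
import java.util.List;

// Assumed library imports: Blink's Flink-compatible TableFunction,
// SLF4J logging, and Alibaba fastjson for JSONObject.
import org.apache.flink.table.functions.TableFunction;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import com.alibaba.fastjson.JSONObject;

// SpuSearchEngineUtil, SpuSearchResult, EngineFields, and Constant are
// project-internal classes; their imports are omitted.

/**
 * UDTF for the SP requesting node: sends one request to the SP search engine
 * and emits every returned item as a separate row for the downstream node.
 */
public class SearchEngineUdtf extends TableFunction<EngineFields> {

    private static final Logger logger = LoggerFactory.getLogger(SearchEngineUdtf.class);

    /**
     * Requests the engine to retrieve the recall fields.
     *
     * @param params query parameters for the SP request
     */
    public void eval(String params) {
        SpuSearchResult<String> spuSearchResult = SpuSearchEngineUtil.getFromSpuSearch(params);

        if (spuSearchResult == null || !spuSearchResult.getSuccess()) {
            logger.error(String.format("[%s],%s", Constant.ERR_REQ_SP, "request SpuEngine failed!"));
            return;
        }

        // Parse the "sp_kxuan" section of the engine response.
        JSONObject kxuanObj = SpuSearchEngineUtil.getSpResponseJson(spuSearchResult, "sp_kxuan");
        if (kxuanObj == null || kxuanObj.isEmpty()) {
            logger.error("sp query: " + spuSearchResult.getSearchURL());
            logger.error(String.format("[%s],%s", Constant.ERR_PAR_SP_RESULT, "get key:sp_kxuan data failed! "));
            return;
        }

        List<EngineFields> engineFieldsList = SpuSearchEngineUtil.getSpAuction(kxuanObj);
        if (engineFieldsList == null) {
            return; // nothing to emit
        }

        // Emit each result as its own row: one input row fans out to many output rows.
        for (EngineFields engineFields : engineFieldsList) {
            collect(engineFields);
        }
    }
}
```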
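Because `SpuSearchEngineUtil` and the SP service are internal, the branching in `eval` can be exercised locally by replacing the engine call with a stub. In the hypothetical harness below, `CollectingFunction` imitates `TableFunction#collect` by buffering rows, and `StubbedSearchUdtf` reproduces the success and failure paths with an injected engine; none of these names come from the original project.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.function.Function;

/**
 * Hypothetical local harness for the UDTF pattern. No Blink cluster or SP
 * service is needed: rows are buffered in memory and the engine is a stub.
 */
public class UdtfHarness {

    /** Minimal stand-in for TableFunction<T>: records every collected row. */
    abstract static class CollectingFunction<T> {
        final List<T> emitted = new ArrayList<>();

        protected void collect(T row) {
            emitted.add(row);
        }
    }

    /** Same control flow as SearchEngineUdtf.eval, with a pluggable engine. */
    static final class StubbedSearchUdtf extends CollectingFunction<String> {
        // Returns Optional.empty() to simulate a failed engine request.
        private final Function<String, Optional<List<String>>> engine;

        StubbedSearchUdtf(Function<String, Optional<List<String>>> engine) {
            this.engine = engine;
        }

        public void eval(String params) {
            Optional<List<String>> result = engine.apply(params);
            if (!result.isPresent()) {
                return; // request failed: the real UDTF logs an error and emits nothing
            }
            for (String spu : result.get()) {
                collect(spu); // one output row per returned item
            }
        }
    }

    public static void main(String[] args) {
        StubbedSearchUdtf ok = new StubbedSearchUdtf(p -> Optional.of(List.of("spu-1", "spu-2")));
        ok.eval("q=dress");
        System.out.println(ok.emitted);

        StubbedSearchUdtf failing = new StubbedSearchUdtf(p -> Optional.empty());
        failing.eval("q=dress");
        System.out.println(failing.emitted);
    }
}
```

Injecting the engine as a function keeps the emit logic testable in isolation; whether the production class can be refactored this way depends on how `SpuSearchEngineUtil` is used elsewhere, which the article does not show.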

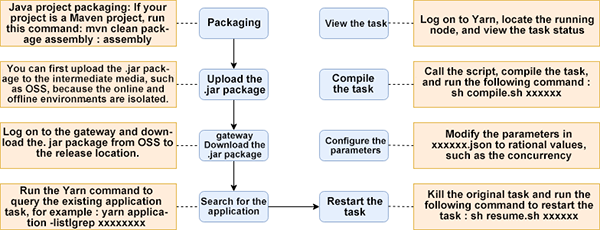

Currently, launching a Blink task in a cluster is not fully automated. After developing a Blink task, you need to launch it by following the procedure shown in the preceding figure. After the task is launched, you can log on to YARN to check the running status of the task nodes.

After the function is launched, a selection pool with over 10,000 SPUs can take effect in minutes, greatly improving the product selection efficiency for creators.

Author: Cui Qinglei (nickname: Chenxin), Senior Development Engineer of the Search System Service Platform, Alibaba Search Business Unit

Having joined Alibaba in 2015, Cui Qinglei is mainly engaged in the development of content-based product selection servers and is familiar with technologies related to search engine services and stream computing.
