By Jianbo Sun

Helm charts are so popular that you can find almost 10,000 software packages distributed this way. In today's multi-cluster and hybrid-cloud environments, however, we often encounter these typical requirements: distributing to multiple specific clusters, distributing to specific groups of clusters according to business needs, and applying differentiated configurations across clusters.

In this blog, we'll introduce how to use KubeVela to do multi-cluster delivery for Helm charts.

If you don't have multiple clusters, don't worry: we'll start from scratch, and only Docker or a Linux system is required. You can also refer to the basic Helm chart delivery guide for a single cluster.

This section prepares the multi-cluster environment; for convenience, we start from scratch. If you are already a KubeVela user and have multiple clusters joined, you can skip this section.

1) Install KubeVela control plane

velad install

2) Export the KubeConfig for the newly created cluster

export KUBECONFIG=$(velad kubeconfig --name default --host)

Now you have successfully installed KubeVela. You can join your cluster to KubeVela by:

vela cluster join <path-to-kubeconfig-of-cluster> --name foo

VelaD can also provide K3s clusters for convenience.

3) Create a cluster named foo with velad and join it

velad install --name foo --cluster-only
vela cluster join $(velad kubeconfig --name foo --internal) --name foo

As a fully extensible control plane, most of KubeVela's capabilities are pluggable. The following steps will guide you to install some addons for different capabilities.

4) Enable the velaux addon, which provides a UI console for KubeVela

vela addon enable velaux

5) Enable the fluxcd addon for Helm component delivery

vela addon enable fluxcd

If you enabled the fluxcd addon before joining the new cluster, you need to enable the addon for the newly joined cluster by:

vela addon enable fluxcd --clusters foo

Finally, all preparation is finished. You can check the clusters joined:

$ vela cluster ls
CLUSTER   ALIAS   TYPE              ENDPOINT                  ACCEPTED   LABELS
local             Internal          -                         true
foo               X509Certificate   https://172.20.0.6:6443   true

One cluster named local is the KubeVela control plane; the other, named foo, is the cluster we just joined.

We can use a topology policy to specify the delivery topology for a Helm chart, as in the following command:

cat <<EOF | vela up -f -
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: helm-hello
spec:
  components:
    - name: hello
      type: helm
      properties:
        repoType: "helm"
        url: "https://jhidalgo3.github.io/helm-charts/"
        chart: "hello-kubernetes-chart"
        version: "3.0.0"
  policies:
    - name: foo-cluster-only
      type: topology
      properties:
        clusters: ["foo"]
EOF

The clusters field of the topology policy is a list, so you can specify multiple cluster names here. You can also use a label selector or specify a namespace; refer to the reference docs for more details.
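As a rough illustration of those options, the fragment below sketches two alternative topology policies that could replace the `policies` section of the Application above. It assumes the `clusterLabelSelector` and `namespace` properties described in the topology policy reference; the policy names and the `region: hangzhou` label are hypothetical, and the label would first have to be attached to a joined cluster.

```yaml
# Sketch only: alternative topology policies for the helm-hello Application.
policies:
  # Deliver to several clusters by listing their names.
  - name: both-clusters            # hypothetical policy name
    type: topology
    properties:
      clusters: ["local", "foo"]
  # Select clusters by label instead of by name.
  - name: by-label                 # hypothetical policy name
    type: topology
    properties:
      clusterLabelSelector:
        region: hangzhou           # hypothetical label
      # Optionally deliver into a specific namespace of each selected cluster.
      namespace: "default"
```

Only one of the two policies would normally be used for a given deploy step; they are shown together here purely for comparison.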

After deploying, you can check the application by:

vela status helm-hello

The expected output should be as follows if deployed successfully:

About:

  Name:       helm-hello
  Namespace:  default
  Created at: 2022-06-09 19:14:57 +0800 CST
  Status:     running

Workflow:

  mode: DAG
  finished: true
  Suspend: false
  Terminated: false
  Steps
  - id:vtahj5zrz4
    name:deploy-foo-cluster-only
    type:deploy
    phase:succeeded
    message:

Services:

  - Name: hello
    Cluster: foo  Namespace: default
    Type: helm
    Healthy Fetch repository successfully, Create helm release successfully
    No trait applied

You can check the deployed resource by:

$ vela status helm-hello --tree
CLUSTER       NAMESPACE     RESOURCE             STATUS
foo       ─── default   ─┬─ HelmRelease/hello    updated
                         └─ HelmRepository/hello updated

You can also check the deployed resource by VelaUX.

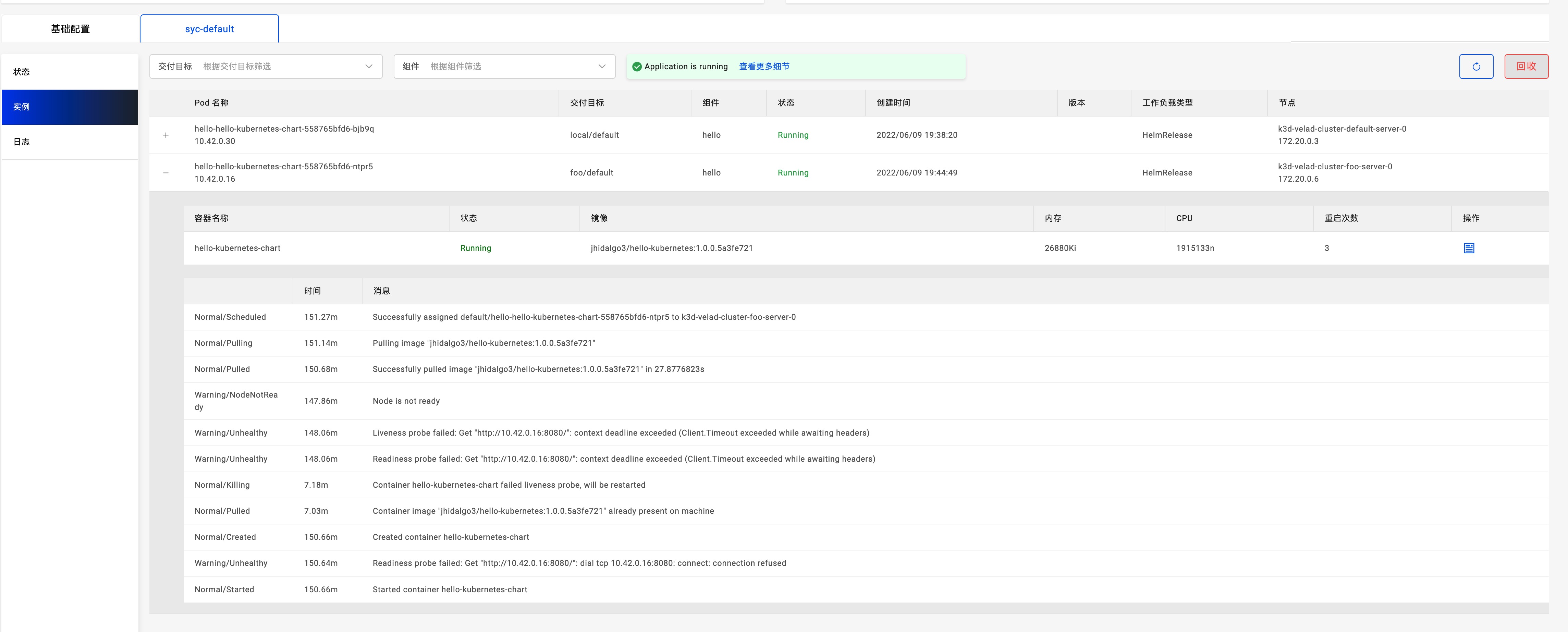

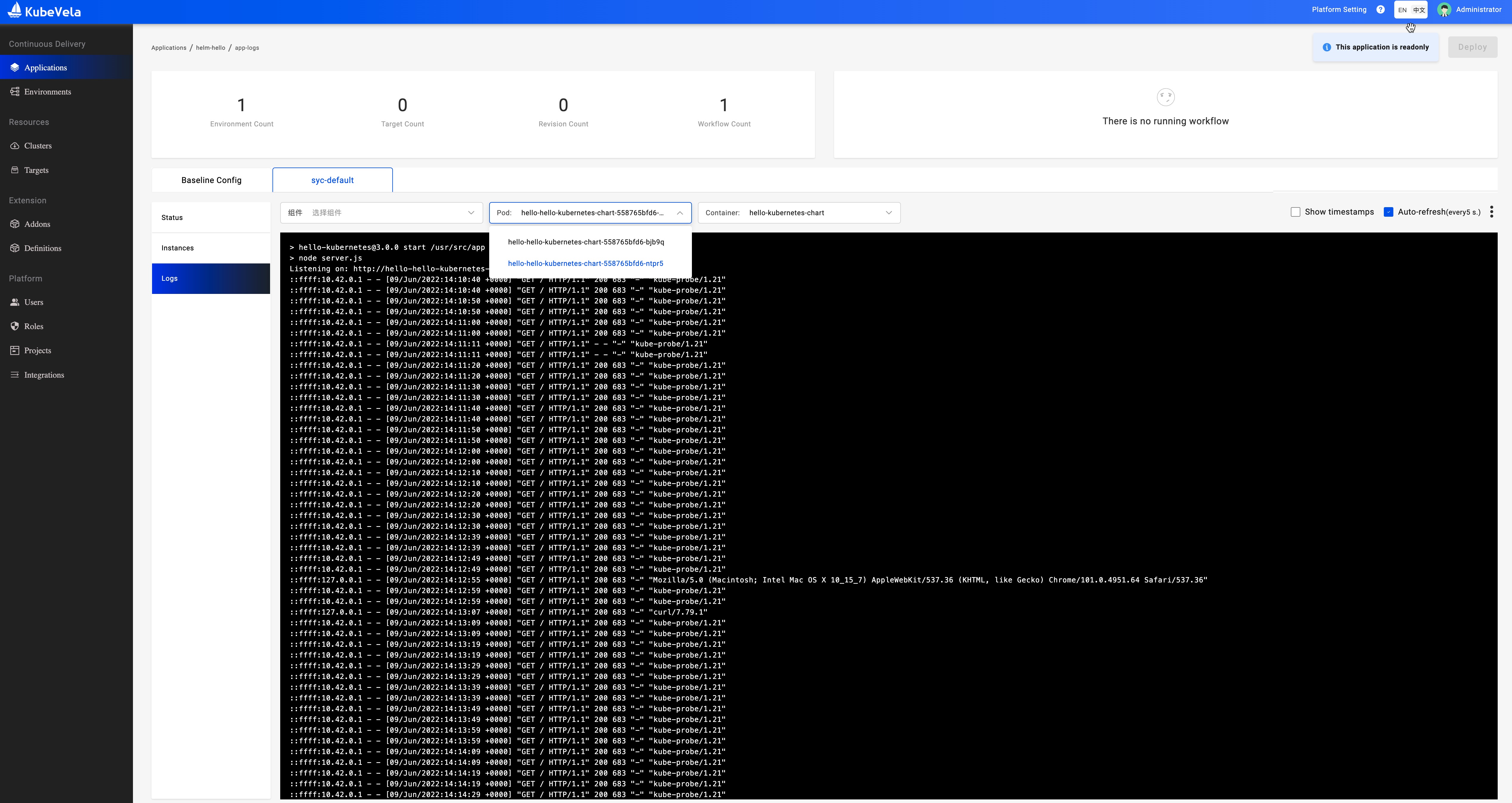

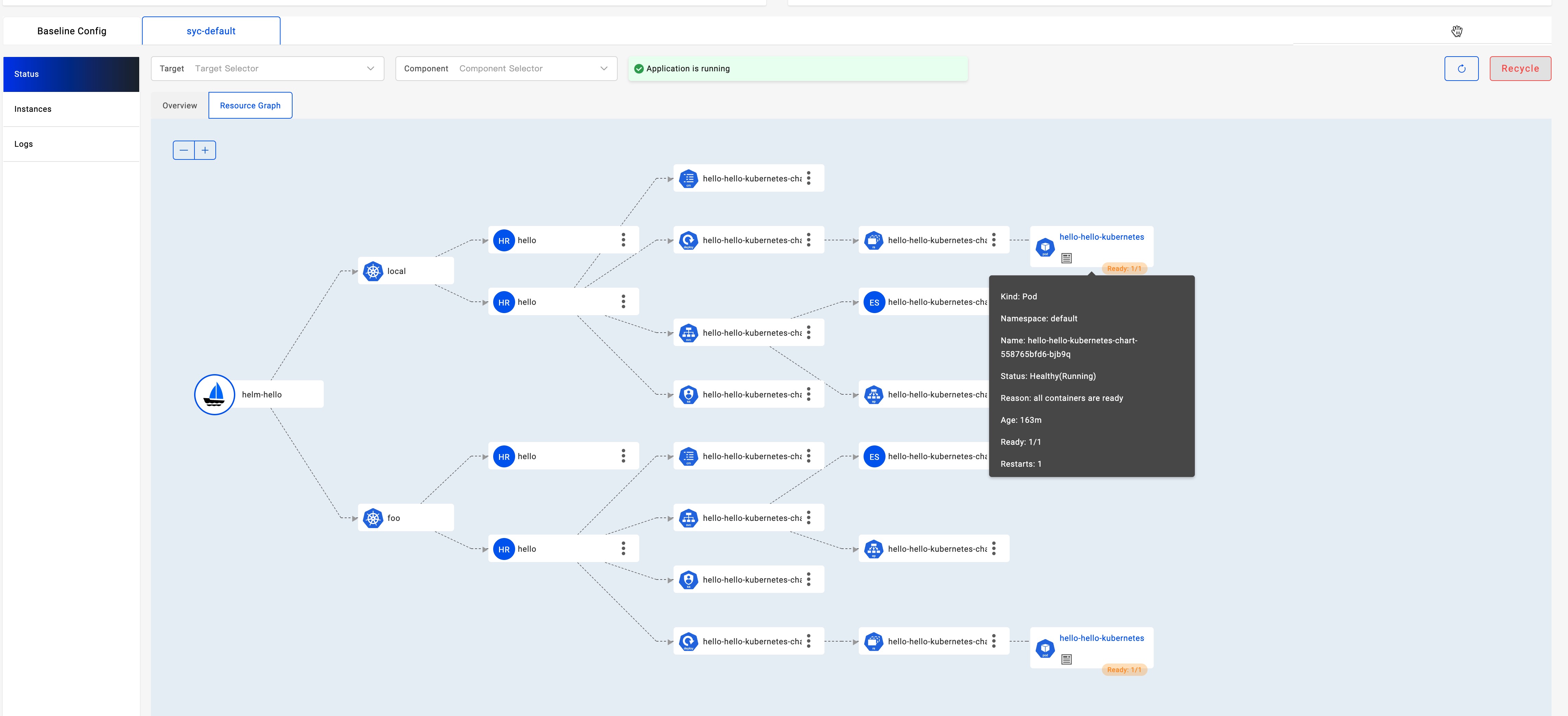

With the VelaUX console, you get even more information and a unified experience across multiple clusters. Refer to the VelaUX documentation to learn how to access it.

With the help of UI, you can:

In some cases, we need to deploy a Helm chart into different clusters with different values. For that, we can use the override policy.

Below is a more complex example in which we deploy one Helm chart into two clusters and specify different values for each cluster. Let's deploy it:

cat <<EOF | vela up -f -
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: helm-hello
spec:
  components:
    - name: hello
      type: helm
      properties:
        repoType: "helm"
        url: "https://jhidalgo3.github.io/helm-charts/"
        chart: "hello-kubernetes-chart"
        version: "3.0.0"
  policies:
    - name: topology-local
      type: topology
      properties:
        clusters: ["local"]
    - name: topology-foo
      type: topology
      properties:
        clusters: ["foo"]
    - name: override-local
      type: override
      properties:
        components:
          - name: hello
            properties:
              values:
                configs:
                  MESSAGE: Welcome to Control Plane Cluster!
    - name: override-foo
      type: override
      properties:
        components:
          - name: hello
            properties:
              values:
                configs:
                  MESSAGE: Welcome to Your New Foo Cluster!
  workflow:
    steps:
      - name: deploy2local
        type: deploy
        properties:
          policies: ["topology-local", "override-local"]
      - name: manual-approval
        type: suspend
      - name: deploy2foo
        type: deploy
        properties:
          policies: ["topology-foo", "override-foo"]
EOF

Note: If the policies and workflow feel a bit complex, you can define them as external objects and just reference those objects; the usage is the same as with container delivery.
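The following is a minimal sketch of what such external objects might look like, assuming the standalone `Policy` and `Workflow` kinds in the `core.oam.dev/v1alpha1` API group and the `workflow.ref` field available in recent KubeVela versions. The object names `topology-foo` and `deploy-to-foo` are illustrative, and only the foo-cluster step is shown.

```yaml
# Sketch only: a topology policy defined as a standalone object.
apiVersion: core.oam.dev/v1alpha1
kind: Policy
metadata:
  name: topology-foo
type: topology
properties:
  clusters: ["foo"]
---
# A standalone workflow that refers to the external policy by name.
apiVersion: core.oam.dev/v1alpha1
kind: Workflow
metadata:
  name: deploy-to-foo              # illustrative name
steps:
  - name: deploy2foo
    type: deploy
    properties:
      policies: ["topology-foo"]
---
# The Application keeps only its components and references the workflow.
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: helm-hello
spec:
  components:
    - name: hello
      type: helm
      properties:
        repoType: "helm"
        url: "https://jhidalgo3.github.io/helm-charts/"
        chart: "hello-kubernetes-chart"
        version: "3.0.0"
  workflow:
    ref: deploy-to-foo
```

Splitting the objects this way lets several applications in the same namespace share one delivery process, at the cost of having more objects to keep track of.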

The deploy process has three steps:

1) deploy to local cluster;

2) wait for manual approval;

3) deploy to foo cluster.

So you will find that the workflow is suspended after the first step, as follows:

$ vela status helm-hello

About:

Name: helm-hello

Namespace: default

Created at: 2022-06-09 19:38:13 +0800 CST

Status: workflowSuspending

Workflow:

mode: StepByStep

finished: false

Suspend: true

Terminated: false

Steps

- id:ww4cydlvee

name:deploy2local

type:deploy

phase:succeeded

message:

- id:xj6hu97e1e

name:manual-approval

type:suspend

phase:succeeded

message:

Services:

- Name: hello

Cluster: local Namespace: default

Type: helm

Healthy Fetch repository successfully, Create helm release successfully

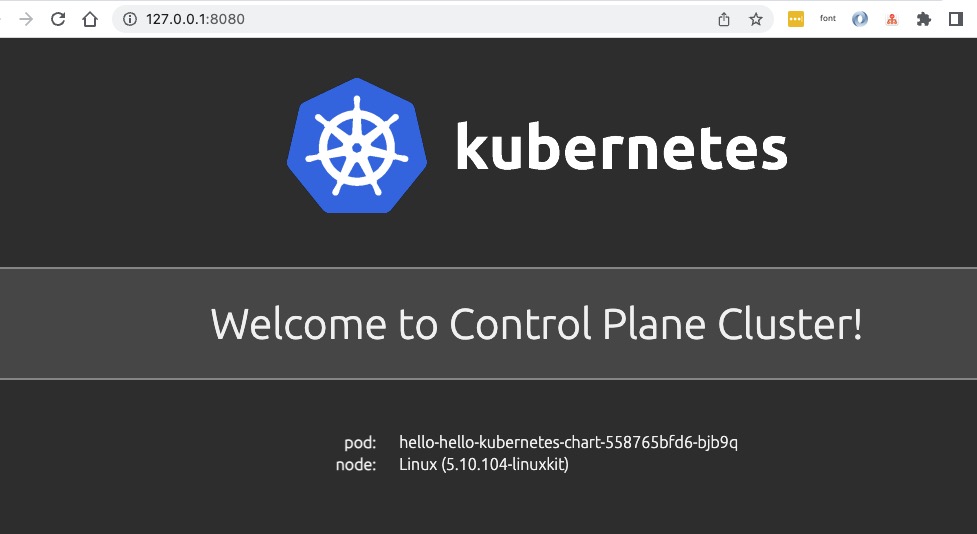

No trait appliedYou can check the helm chart deployed in control plane with the value "Welcome to Control Plane Cluster!".

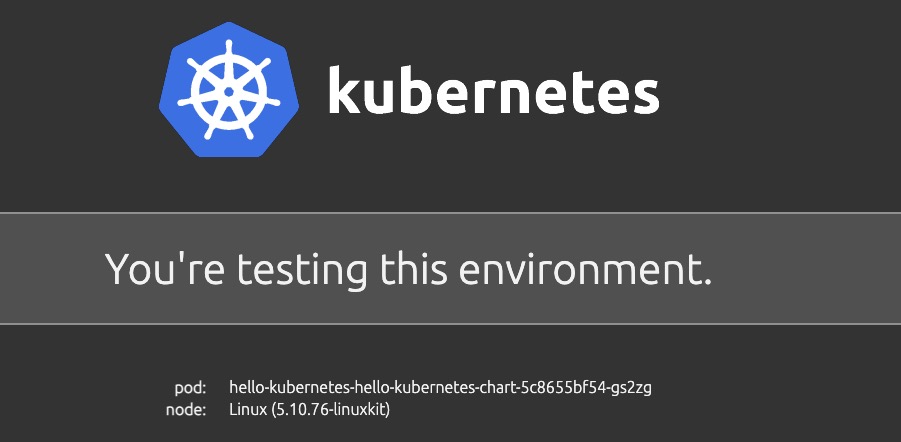

vela port-forward helm-helloIt will automatically prompt with your browser with the following page:

Let's continue the workflow now that we have checked that the deployment succeeded.

vela workflow resume helm-hello

Then it will deploy to the foo cluster. You can check the resources with detailed information:

$ vela status helm-hello --tree --detail
CLUSTER       NAMESPACE     RESOURCE             STATUS    APPLY_TIME          DETAIL
foo       ─── default   ─┬─ HelmRelease/hello    updated   2022-06-09 19:38:13 Ready: True  Status: Release reconciliation succeeded  Age: 64s
                         └─ HelmRepository/hello updated   2022-06-09 19:38:13 URL: https://jhidalgo3.github.io/helm-charts/  Age: 64s  Ready: True
                                                                               Status: stored artifact for revision 'ab876069f02d779cb4b63587af1266464818ba3790c0ccd50337e3cdead44803'
local     ─── default   ─┬─ HelmRelease/hello    updated   2022-06-09 19:38:13 Ready: True  Status: Release reconciliation succeeded  Age: 7m34s
                         └─ HelmRepository/hello updated   2022-06-09 19:38:13 URL: https://jhidalgo3.github.io/helm-charts/  Age: 7m34s  Ready: True
                                                                               Status: stored artifact for revision 'ab876069f02d779cb4b63587af1266464818ba3790c0ccd50337e3cdead44803'

Use port forward again:

vela port-forward helm-hello

Then it will prompt you to choose a resource:

? You have 2 deployed resources in your app. Please choose one: [Use arrows to move, type to filter]
> Cluster: foo | Namespace: default | Kind: HelmRelease | Name: hello
  Cluster: local | Namespace: default | Kind: HelmRelease | Name: hello

Choose the option with cluster foo, and you'll see that the message has been overridden with the new value.

$ curl http://127.0.0.1:8080/
...snip...
<div id="message">
  Welcome to Your New Foo Cluster!
</div>
...snip...

You can also choose a different values file present in a Helm chart for each environment.

Please make sure your local cluster has two namespaces, "test" and "prod", which represent the two environments in our example.

We use the chart hello-kubernetes-chart as an example. This chart has two values files. You can pull the chart and look at all the files it contains:

$ tree ./hello-kubernetes-chart
./hello-kubernetes-chart
├── Chart.yaml
├── templates
│   ├── NOTES.txt
│   ├── _helpers.tpl
│   ├── config-map.yaml
│   ├── deployment.yaml
│   ├── hpa.yaml
│   ├── ingress.yaml
│   ├── service.yaml
│   ├── serviceaccount.yaml
│   └── tests
│       └── test-connection.yaml
├── values-production.yaml
└── values.yaml

As we can see, this chart contains two values files: values.yaml and values-production.yaml.

cat <<EOF | vela up -f -
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: hello-kubernetes
spec:
  components:
    - name: hello-kubernetes
      type: helm
      properties:
        repoType: "helm"
        url: "https://wangyikewxgm.github.io/my-charts/"
        chart: "hello-kubernetes-chart"
        version: "0.1.0"
  policies:
    - name: topology-test
      type: topology
      properties:
        clusters: ["local"]
        namespace: "test"
    - name: topology-prod
      type: topology
      properties:
        clusters: ["local"]
        namespace: "prod"
    - name: override-prod
      type: override
      properties:
        components:
          - name: hello-kubernetes
            properties:
              valuesFiles:
                - "values-production.yaml"
  workflow:
    steps:
      - name: deploy2test
        type: deploy
        properties:
          policies: ["topology-test"]
      - name: deploy2prod
        type: deploy
        properties:
          policies: ["topology-prod", "override-prod"]
EOF

Access the endpoints of the application:

vela port-forward hello-kubernetes

If you choose Cluster: local | Namespace: test | Kind: HelmRelease | Name: hello-kubernetes you will see:

If you choose Cluster: local | Namespace: prod | Kind: HelmRelease | Name: hello-kubernetes you will see:

If you're using velad for this demo, you can clean up very easily by:

velad uninstall -n foo
velad uninstall

With the help of KubeVela and its addons, you can also get Canary Rollout capability for your Helm charts!

Go and ship Helm charts with KubeVela, which makes deploying and operating applications across today's hybrid, multi-cloud environments easier, faster, and more reliable.
