By Alun from Alibaba Cloud Storage Team

Single points of failure are common in the real world, and keeping the business running when failures occur is the core capability of a highly available system. How can we guarantee 24/7 high availability in critical industries such as finance, insurance, and government affairs? Generally speaking, a business system consists of computing, network, and storage. On the cloud, network multipathing and distributed storage already ensure stability and high availability. However, single points of failure on the computing and business side must also be eliminated to achieve end-to-end high availability. Take the common database as an example: a business interruption caused by a single point of failure is hard to accept. How can we quickly recover the business when an instance stops providing services because of power loss, a crash, or a hardware failure?

Solutions vary by scenario:

MySQL deployments usually adopt an active/standby or primary/secondary architecture to achieve high availability. When the primary database fails, the service switches to the secondary database, which continues to provide external services.

However, how can data consistency between the primary and secondary databases be guaranteed after a switchover? Depending on how much data loss the business can tolerate, MySQL replicates data in either synchronous or asynchronous mode. Either choice causes additional problems.

Asynchronous replication can lose data in some scenarios, synchronous replication degrades system performance, scaling out requires adding a complete set of equipment and replicating the full data set, and long primary/secondary switchover times affect business continuity.

As a result, the architecture becomes complex, and it is difficult to balance availability, reliability, scalability, cost, and performance when building a highly available system. Is there a better solution that addresses all of these aspects? The answer is yes!

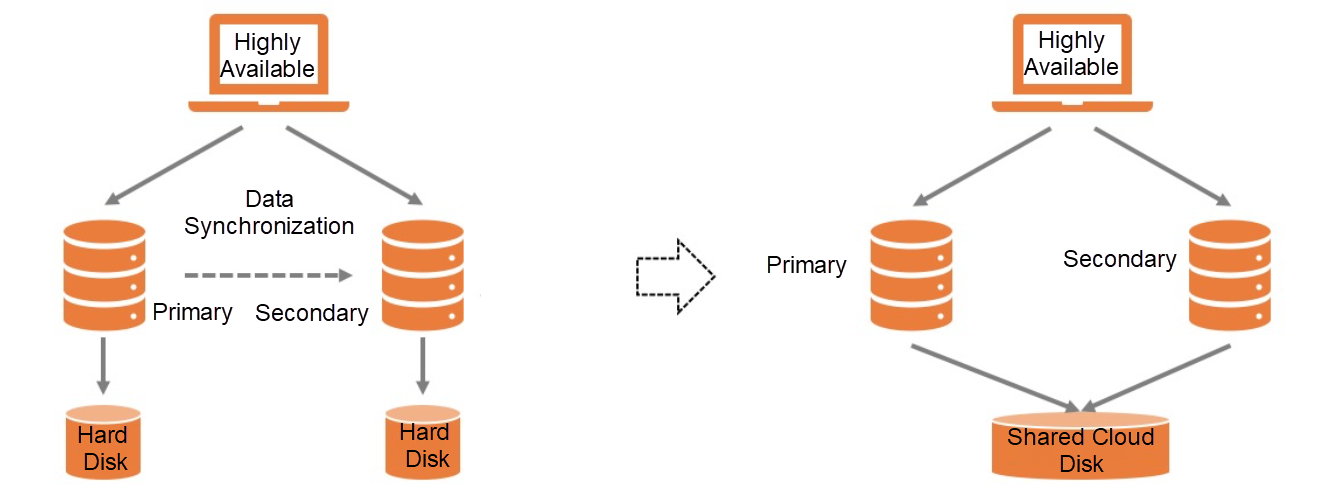

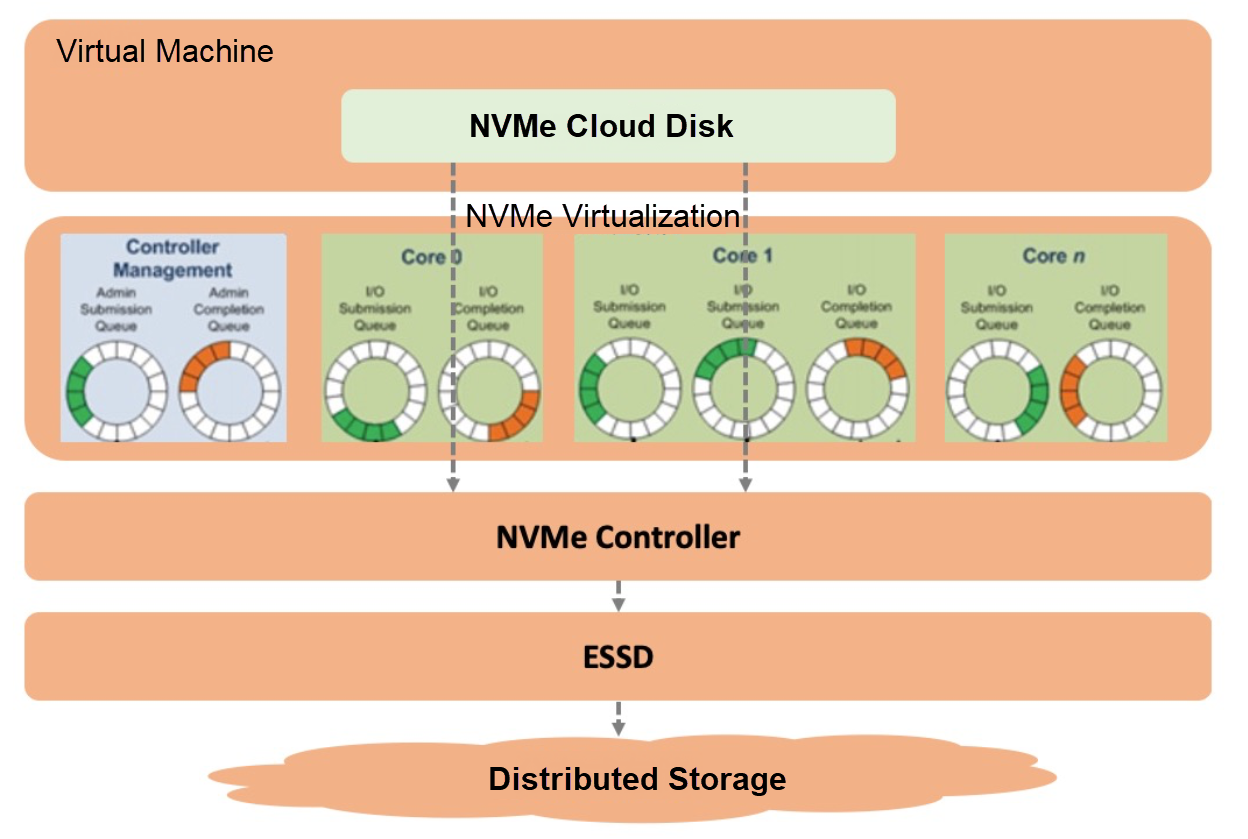

Figure 1: High-Availability Architecture of the Database

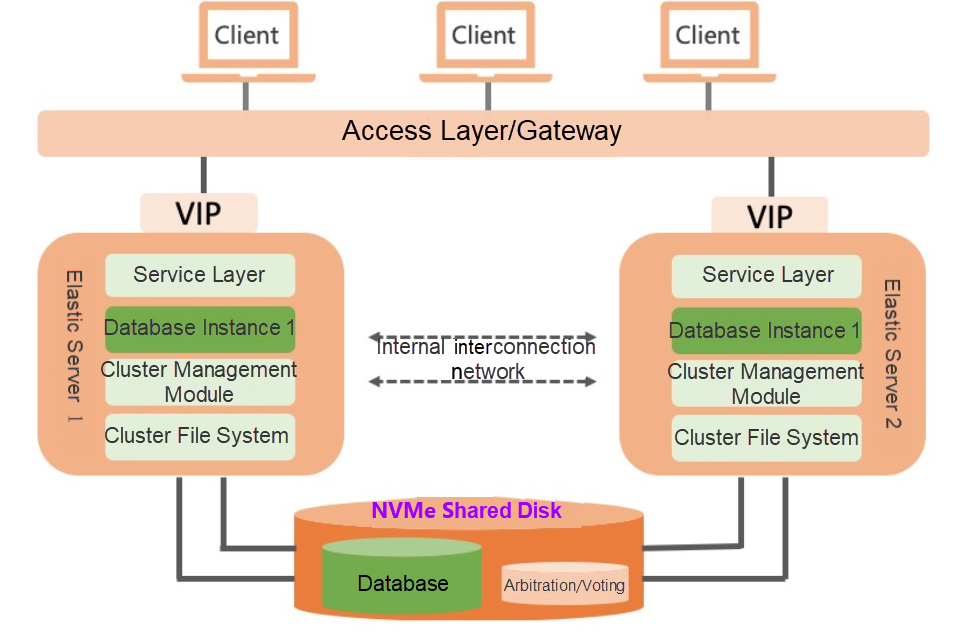

Different database instances can share the same data through shared storage and achieve high availability by quickly switching compute instances (Figure 1). Alibaba Cloud PolarDB is a representative example of this approach. The key lies in the shared storage. Traditional SAN is expensive and difficult to scale in and out, and its controller head easily becomes a bottleneck. This high barrier to entry is not user-friendly. Is there a better, faster, and more economical shared storage solution that addresses these pain points? The NVMe cloud disk and its sharing features, recently launched by Alibaba Cloud, meet these demands, and we introduce them in this article. First, here is a question to think about: after the instances switch over, if the original primary database is still writing data, how can we ensure that the data is correct?

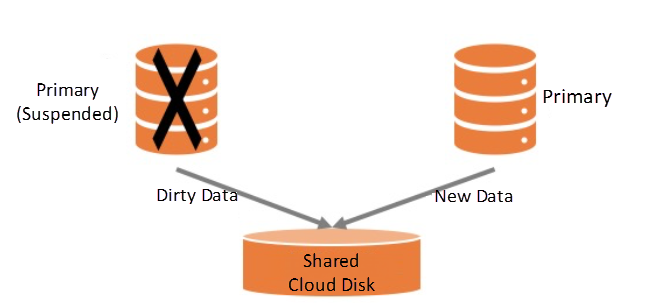

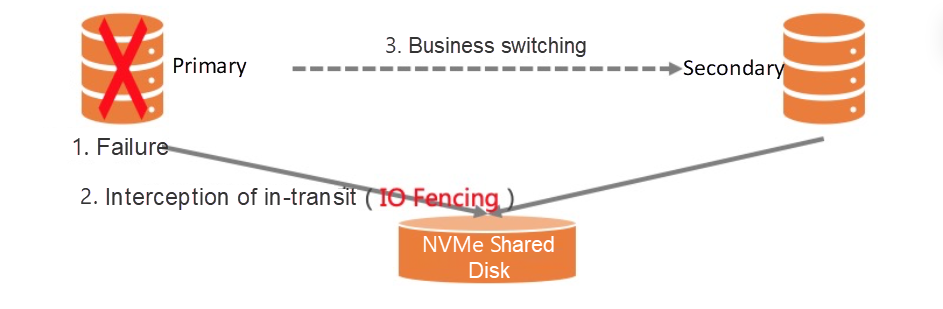

Figure 2: Data Correctness Problem in Primary-Secondary Switching Scenario

We have entered the era of the digital economy, in which data is enormously valuable. The rapid development of cloud computing, artificial intelligence, the Internet of Things, 5G, and other technologies has driven explosive data growth. According to the IDC 2020 report, the global data volume grows year by year and will reach 175 ZB in 2025, concentrated mainly in public clouds and enterprise data centers. This rapid growth brings both new momentum and new requirements to storage. Let's recall how block storage has evolved.

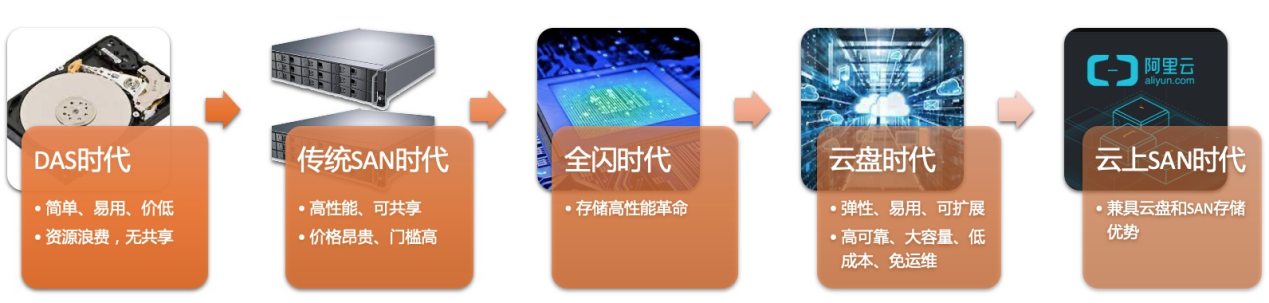

Figure 3: Evolution of Block Storage

Direct-Attached Storage (DAS): The storage device is attached directly to the host (using protocols such as SCSI, SAS, and FC). The system is simple, easy to configure and manage, and inexpensive. However, because storage resources cannot be fully utilized or shared, unified management and maintenance are difficult.

Storage Area Network (SAN): A dedicated network connects storage arrays to business hosts, solving the problems of unified management and data sharing and delivering high-performance, low-latency data access. However, SAN storage is expensive, complex to operate and maintain, and hard to scale, which raises the barrier to entry for users.

All Flash: Innovation in the underlying storage media, together with falling costs, ushered in the all-flash era. Since then, the storage performance bottleneck has shifted to the software stack. This has forced large-scale software changes, accelerated the development of user-mode protocols, RDMA, and other technologies, driven software-hardware integration, and brought a leap in storage performance.

Cloud Disk: With the rapid development of cloud computing, storage has moved to the cloud. Cloud disks have inherent advantages: flexibility, elasticity, ease of use, easy expansion, high reliability, large capacity, low cost, and no O&M burden. They have become a solid storage foundation for digital transformation.

Cloud SAN: Cloud SAN was created to support storage services of all kinds and to replace traditional SAN storage. It inherits many advantages of cloud disks while also offering traditional SAN capabilities, including shared storage, data protection, synchronous/asynchronous replication, and fast snapshots. It will continue to play an important role in the storage market.

Meanwhile, in the evolution of storage protocols, NVMe is becoming the protocol of choice for the new era.

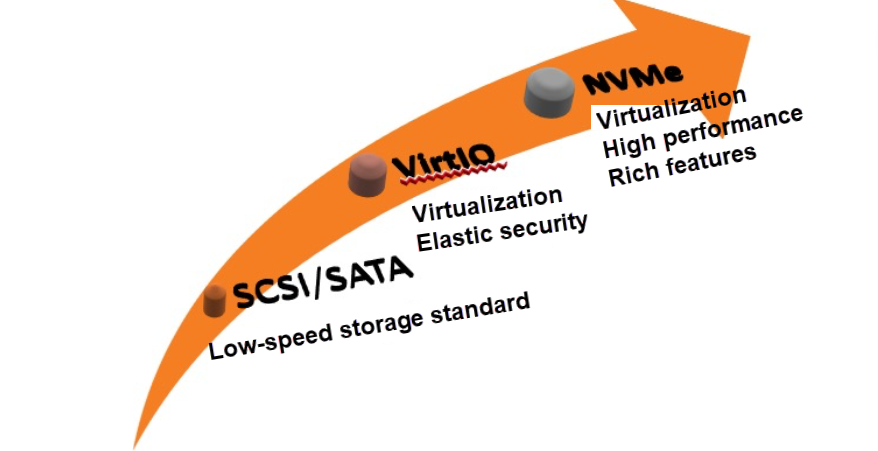

Figure 4: Evolution of Storage Protocols

SCSI/SATA: In the early days, hard disks were mostly low-speed devices, and data was transmitted through the SCSI layer and the SATA bus. Performance was limited by slow storage media such as mechanical hard disks, which masked the performance disadvantages of the single-channel SATA bus and the SCSI software layer.

VirtIO-BLK/VirtIO-SCSI: With the rapid development of virtualization technology and cloud computing, VirtIO-BLK/VirtIO-SCSI gradually became the mainstream storage protocol of cloud computing, making the use of storage resources more flexible, agile, secure, and scalable.

NVMe/NVMe-oF: The development and popularization of flash memory drove a revolution in storage technology. Once the storage medium was no longer the performance bottleneck, the software stack became the biggest one. This gave rise to various high-performance, lightweight protocols and technologies, such as NVMe/NVMe-oF, DPDK/SPDK, and user-mode networking. The NVMe protocol family offers high performance, advanced features, and high extensibility, and it is set to lead the future development of cloud computing.

In the near future, SAN and NVMe on the cloud will become the mainstream trend.

The rapid development and popularization of flash memory have shifted the performance bottleneck to software. Growing demands for storage performance and functionality brought NVMe onto the stage. NVMe defines a data access protocol designed specifically for high-performance devices. Compared with the traditional SCSI protocol, it is simpler and lighter, and its multi-queue design can significantly improve storage performance. NVMe also provides rich storage features. Since the standard was first released in 2011, NVMe has continuously improved its protocols and standardized many advanced functions, including multiple namespaces, multipathing, end-to-end data protection (T10 DIF), Persistent Reservation (PR) for access control, and atomic writes. These storage features defined by the NVMe standard will continue to create value for users.

Figure 5: Alibaba Cloud NVMe Cloud Disk

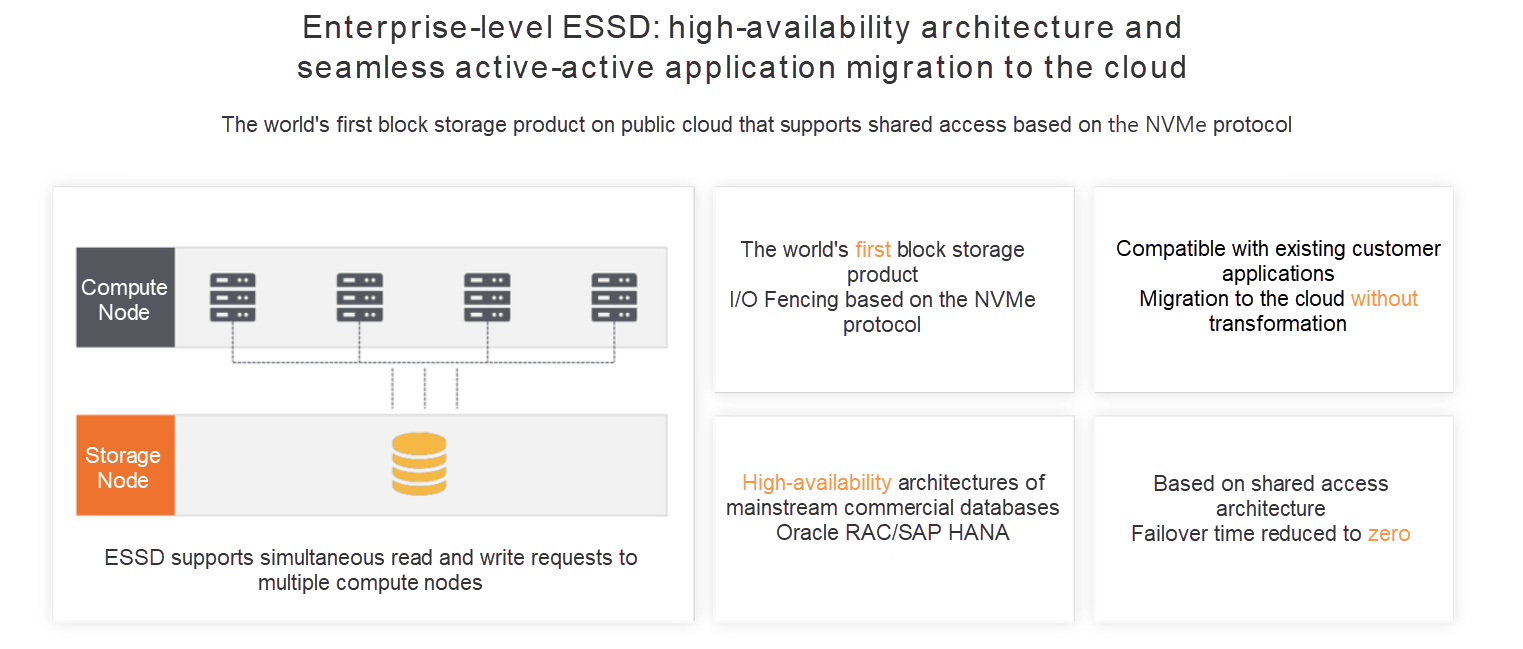

The high performance and rich features of NVMe provide a solid foundation for enterprise storage, and the protocol's extensibility and continued evolution make NVMe features and performance the core driving force behind NVMe cloud disks. The NVMe cloud disk is built on ESSD. It inherits ESSD's high reliability, high availability, high performance, and atomic writes, along with native ESSD enterprise features such as snapshot-based data protection, cross-region disaster recovery, encryption, and configuration changes that take effect within seconds. Combining ESSD with NVMe features meets the requirements of enterprise-level applications and allows most NVMe- and SCSI-based workloads to migrate to the cloud seamlessly. The shared storage technology covered in this article is implemented based on the NVMe Persistent Reservation standard. As one of the additional features of NVMe cloud disks, its multi-attach and I/O Fencing capabilities can help users reduce storage costs and improve business flexibility and data reliability. They can be widely applied in distributed business scenarios, especially high-availability database systems such as Oracle RAC and SAP HANA.

As mentioned earlier, shared storage can effectively solve the high-availability problem for databases. Its core capabilities are multi-attach and I/O Fencing. Taking databases as an example, let's look at how shared storage works.

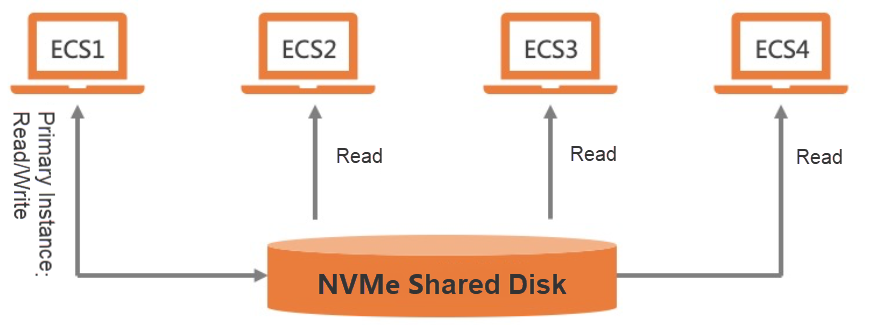

Multi-attach allows a cloud disk to be attached to multiple ECS instances at the same time (up to 16 instances), and all of these instances can read from and write to the cloud disk (Figure 6). Through multi-attach, multiple nodes share the same data, which reduces storage costs. When a single node fails, the business can quickly switch to a healthy node. Because no data needs to be replicated, this provides a basic building block for fast failure recovery. Highly available databases such as Oracle RAC and SAP HANA rely on this feature. Note: Shared storage provides consistency and recovery at the data layer. The business may need additional processing, such as database log replay, to reach final business consistency.
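To make the note about log replay concrete, here is a toy, in-memory sketch. Every name in it is hypothetical, and it is not how Oracle RAC, SAP HANA, or PolarDB implement recovery. It assumes a write-ahead-logging database whose data pages and redo log both live on the multi-attached disk: the primary crashes after logging a change but before flushing the page, and the secondary, which sees the same disk, redoes every log record that is newer than the page it covers.

```python
from dataclasses import dataclass, field

@dataclass
class LogRecord:
    lsn: int      # log sequence number, increases monotonically
    page_id: int
    value: str

@dataclass
class SharedDisk:
    # Both instances see the same pages and redo log (multi-attach).
    pages: dict = field(default_factory=dict)     # page_id -> (page_lsn, value)
    redo_log: list = field(default_factory=list)  # durable LogRecords

def primary_write(disk, lsn, page_id, value, flush_page):
    """Write-ahead logging: the log record is made durable before the page."""
    disk.redo_log.append(LogRecord(lsn, page_id, value))
    if flush_page:
        disk.pages[page_id] = (lsn, value)

def recover_on_takeover(disk):
    """Run by the secondary after failover: redo every logged change that
    the page does not yet reflect (page LSN older than the record's LSN)."""
    replayed = 0
    for rec in sorted(disk.redo_log, key=lambda r: r.lsn):
        page_lsn, _ = disk.pages.get(rec.page_id, (0, None))
        if page_lsn < rec.lsn:
            disk.pages[rec.page_id] = (rec.lsn, rec.value)
            replayed += 1
    return replayed

disk = SharedDisk()
primary_write(disk, 1, page_id=7, value="a", flush_page=True)
primary_write(disk, 2, page_id=7, value="b", flush_page=False)  # crash before page flush
print(recover_on_takeover(disk), disk.pages[7])  # 1 (2, 'b')
```

The data-layer guarantee from shared storage is that the secondary sees exactly what the primary persisted; turning that into a consistent database state is still the job of the database's own recovery logic, which is what the replay loop stands in for.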

Figure 6: Attachment to Multiple Instances

Generally, a standalone file system is not suitable for use on a multi-attached disk. File systems such as ext4 cache data and metadata to accelerate file access.

However, file modifications made on one node are not promptly synchronized to the other nodes, which leads to data inconsistency across nodes.

If metadata is not synchronized, nodes can also conflict when allocating disk space, for example by writing different files to the same blocks, which introduces data errors. Therefore, multi-attach is usually used with a cluster file system, such as OCFS2, GFS2, GPFS, Veritas CFS, or Oracle ACFS. Alibaba Cloud DBFS and PolarFS can also be used.
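A toy model shows why per-node metadata caching breaks on a multi-attached disk. The classes below are hypothetical and deliberately simplified (this is not ext4's real allocator): each node reads the block allocation bitmap once at mount time and then trusts its in-memory copy, so two nodes both pick the same "free" block and the second write silently overwrites the first. Cluster file systems avoid this by coordinating metadata across nodes; OCFS2 and GFS2, for example, use a distributed lock manager.

```python
class SharedDisk:
    def __init__(self, nblocks):
        self.bitmap = [False] * nblocks   # on-disk allocation bitmap
        self.blocks = [None] * nblocks    # on-disk data blocks

class LocalFsNode:
    """Hypothetical standalone file system that caches the allocation
    bitmap in memory at mount time and never re-reads it from disk."""
    def __init__(self, name, disk):
        self.name = name
        self.disk = disk
        self.cached_bitmap = list(disk.bitmap)  # read once at mount time

    def write_new_file(self, data):
        blk = self.cached_bitmap.index(False)   # first block free *in the cache*
        self.cached_bitmap[blk] = True
        self.disk.bitmap[blk] = True            # write metadata back to disk
        self.disk.blocks[blk] = (self.name, data)
        return blk

disk = SharedDisk(nblocks=8)
a = LocalFsNode("ecs-a", disk)   # both instances mount the same
b = LocalFsNode("ecs-b", disk)   # multi-attached disk
blk_a = a.write_new_file("orders")
blk_b = b.write_new_file("payments")
print(blk_a, blk_b, disk.blocks[0])  # 0 0 ('ecs-b', 'payments')
```

Node `ecs-a`'s file is lost without any error being reported, which is exactly the kind of silent corruption a cluster file system's coordinated metadata prevents.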

Can multi-attach solve all of the problems we face? Not quite: multi-attach does not handle permission management. Applications built on multi-attach generally rely on a cluster management system, such as Linux Pacemaker, to manage permissions. However, in some scenarios this permission management fails and causes serious problems. Recall the question raised at the beginning of the article. In a high-availability architecture, the primary instance fails over to the secondary instance after an exception occurs. If the primary instance is merely hung (for example, because of a network partition or a hardware failure), it may still believe it has write permission and write dirty data alongside the secondary instance. How can this risk be avoided? This is where I/O Fencing comes in.

One way to prevent dirty writes is to terminate the original instance's in-flight requests and reject its new ones, ensuring that no stale data is written before switching instances. The traditional solution based on this idea is STONITH (Shoot The Other Node In The Head), which remotely restarts the faulty machine so that stale data cannot be persisted. However, this solution has two problems. First, the restart takes too long, so business switchover is slow, typically causing an interruption of tens of seconds to minutes. Second, and more seriously, the I/O path on the cloud is long and involves many components, so component failures on the compute instance (such as hardware or network failures) can prevent in-flight I/O from being fully drained within a short period. As a result, data correctness cannot be 100% guaranteed.

To solve this problem fundamentally, NVMe standardizes Persistent Reservation (PR), which defines permission rules for NVMe disks and allows the permissions of a disk and its attached nodes to be modified flexibly. In this scenario, after the primary database fails, the secondary database issues a PR command that revokes the primary database's write permission and rejects all of its in-flight requests. The secondary database can then update data safely (Figure 7). I/O Fencing can usually help applications complete failover within milliseconds, which shortens fault recovery time. The migration is smooth and virtually imperceptible to upper-layer applications, which is far better than STONITH. Next, we take a closer look at the permission management technology behind I/O Fencing.
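The fencing step can be illustrated with a toy state machine. This is a sketch under simplifying assumptions, not Alibaba Cloud's implementation or the full NVMe state machine: a single namespace uses the "Write Exclusive - Registrants Only" reservation type, the class and method names are hypothetical, and "abort" is modelled simply as dropping the fenced host's queued writes. On a real Linux host the corresponding operations are the NVMe Reservation Register and Reservation Acquire (Preempt and Abort) commands, which nvme-cli exposes as its `resv-register` and `resv-acquire` subcommands.

```python
class ReservationConflict(Exception):
    """Stands in for the NVMe 'Reservation Conflict' command status."""

class SharedNamespace:
    """Toy namespace under 'Write Exclusive - Registrants Only': only
    registered hosts may write (the reservation is assumed to be held)."""
    def __init__(self):
        self.keys = {}       # host -> registration key
        self.holder = None
        self.in_flight = []  # (host, lba, data) accepted but not yet persisted
        self.data = {}

    def register(self, host, key):
        self.keys[host] = key

    def acquire(self, host):
        if host not in self.keys:
            raise ReservationConflict(host)
        self.holder = host

    def submit_write(self, host, lba, data):
        if host not in self.keys:  # fenced hosts are rejected immediately
            raise ReservationConflict(host)
        self.in_flight.append((host, lba, data))

    def preempt_and_abort(self, host, crkey, prkey):
        """`host` (registered with crkey) removes every registration using
        prkey, aborts those hosts' in-flight writes, and takes over the
        reservation if the holder was preempted."""
        if self.keys.get(host) != crkey:
            raise ReservationConflict(host)
        victims = {h for h, k in self.keys.items() if k == prkey}
        for h in victims:
            del self.keys[h]
        self.in_flight = [io for io in self.in_flight if io[0] not in victims]
        if self.holder in victims:
            self.holder = host

    def flush(self):
        for host, lba, data in self.in_flight:
            self.data[lba] = (host, data)
        self.in_flight.clear()

ns = SharedNamespace()
ns.register("primary", 0xA)
ns.register("secondary", 0xB)
ns.acquire("primary")
ns.submit_write("primary", lba=100, data="stale")        # primary hangs here
ns.preempt_and_abort("secondary", crkey=0xB, prkey=0xA)  # failover
try:
    ns.submit_write("primary", lba=100, data="late")
except ReservationConflict:
    print("primary fenced")
ns.submit_write("secondary", lba=100, data="fresh")
ns.flush()
print(ns.holder, ns.data[100])  # secondary ('secondary', 'fresh')
```

The key design point is that the permission check happens at the storage side on every command, so the hung primary cannot sneak a write in no matter how late its request arrives; this is what STONITH cannot guarantee.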

Figure 7: I/O Fencing during Failover

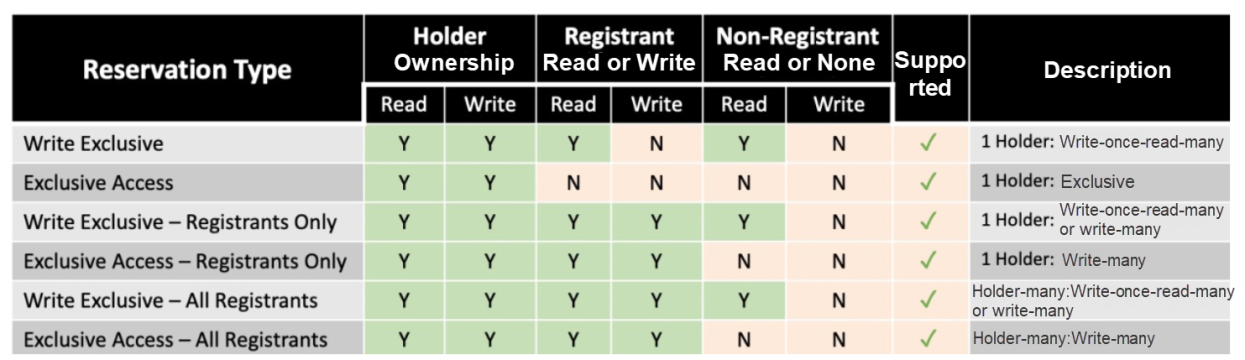

The NVMe PR protocol defines the permissions of cloud disks and clients. Combined with multi-attach, it enables efficient, secure, and smooth business switchover. An attached node has one of three identities in the PR protocol: Holder, Registrant, or Non-Registrant. A Holder has full permissions on the cloud disk, a Registrant has a subset of permissions that depends on the sharing mode, and a Non-Registrant has at most read permission. The disk supports six sharing modes, which provide exclusive, single-writer multi-reader, and multi-writer access. By configuring the sharing mode and each node's identity, you can flexibly manage the permissions of each node (Table 1) to meet the requirements of a wide range of business scenarios. NVMe PR inherits all of the capabilities of SCSI PR, so SCSI PR-based applications can run on NVMe shared disks with minor modifications.
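The six sharing modes correspond to the six reservation types in the NVMe specification. As a reference point, the sketch below encodes the access rules for each type and identity as we read them in the NVMe base specification (they mirror SCSI-3 PR). It is illustrative only; the specification and Alibaba Cloud's documentation remain authoritative, and the `access` helper is hypothetical. Note that under the Exclusive Access types a Non-Registrant loses even read access.

```python
from enum import IntEnum

class RType(IntEnum):
    """NVMe reservation types (RTYPE field values 1h-6h)."""
    WRITE_EXCLUSIVE = 1
    EXCLUSIVE_ACCESS = 2
    WRITE_EXCLUSIVE_REGISTRANTS_ONLY = 3
    EXCLUSIVE_ACCESS_REGISTRANTS_ONLY = 4
    WRITE_EXCLUSIVE_ALL_REGISTRANTS = 5
    EXCLUSIVE_ACCESS_ALL_REGISTRANTS = 6

WRITE_EXCLUSIVE_TYPES = {RType.WRITE_EXCLUSIVE,
                         RType.WRITE_EXCLUSIVE_REGISTRANTS_ONLY,
                         RType.WRITE_EXCLUSIVE_ALL_REGISTRANTS}

def access(rtype, role):
    """Return (can_read, can_write) for a host while a reservation of type
    `rtype` is held. `role` is 'holder', 'registrant' or 'non-registrant'."""
    if role == "holder":
        return (True, True)
    if role == "registrant" and rtype >= RType.WRITE_EXCLUSIVE_REGISTRANTS_ONLY:
        # Types 3-6 give every registrant full access
        # (types 5 and 6 treat every registrant as a holder).
        return (True, True)
    if role not in ("registrant", "non-registrant"):
        raise ValueError(f"unknown role: {role}")
    # Everyone else may only read under the Write Exclusive types and
    # has no access at all under the Exclusive Access types.
    return (rtype in WRITE_EXCLUSIVE_TYPES, False)

for rt in RType:
    row = [access(rt, r) for r in ("holder", "registrant", "non-registrant")]
    print(f"{rt.name:<34} {row}")
```

For the failover scenario described earlier, "Write Exclusive - Registrants Only" is a natural fit: every registered database node can write, and fencing a node is simply a matter of removing its registration.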

Table 1: NVMe PR Permissions

Together, multi-attach and I/O Fencing provide what is needed to build a highly available system. NVMe shared disks also support single-writer, multi-reader access, which is widely used in read/write-splitting databases, AI model training, stream processing, and other scenarios. In addition, shared disks make it easy to implement techniques such as image sharing, heartbeat detection, arbitration-based leader election, and locking mechanisms.
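Heartbeat detection is one of the simplest of these techniques to build on a shared disk. In the minimal sketch below, each node periodically writes a small record into its own fixed slot, and any node can read all slots to spot peers that have stopped updating. The slot layout, record format, and function names are assumptions for illustration, a temporary file stands in for the multi-attached block device, and the code relies on the POSIX-only `os.pwrite`/`os.pread`. On a real shared disk the device would also have to be opened with `O_DIRECT` (and aligned buffers) so that reads bypass each host's page cache, for the caching reasons discussed earlier.

```python
import os
import struct
import tempfile
import time

SLOT_SIZE = 512                    # hypothetical layout: one 512-byte slot per node
HEARTBEAT = struct.Struct("<IQd")  # node_id, sequence number, timestamp (seconds)

def write_heartbeat(fd, node_id, seq, now=None):
    """Each node writes only its own slot, so writers never contend."""
    now = time.time() if now is None else now
    buf = HEARTBEAT.pack(node_id, seq, now).ljust(SLOT_SIZE, b"\0")
    os.pwrite(fd, buf, node_id * SLOT_SIZE)
    os.fsync(fd)

def read_heartbeat(fd, node_id):
    buf = os.pread(fd, HEARTBEAT.size, node_id * SLOT_SIZE)
    return HEARTBEAT.unpack(buf)   # (node_id, seq, timestamp)

def dead_nodes(fd, node_ids, timeout, now=None):
    """Nodes whose last heartbeat is older than `timeout` seconds
    (never-written slots read as zeros and therefore count as dead)."""
    now = time.time() if now is None else now
    return [nid for nid in node_ids
            if now - read_heartbeat(fd, nid)[2] > timeout]

# A temporary file stands in for the multi-attached block device.
with tempfile.NamedTemporaryFile() as f:
    fd = f.fileno()
    os.ftruncate(fd, 4 * SLOT_SIZE)
    write_heartbeat(fd, 0, seq=1, now=100.0)
    write_heartbeat(fd, 1, seq=1, now=100.0)
    write_heartbeat(fd, 0, seq=2, now=103.0)  # node 1 has stopped beating
    print(dead_nodes(fd, [0, 1, 2], timeout=2.0, now=103.5))  # [1, 2]
```

Comparing wall-clock timestamps written by different hosts assumes their clocks are synchronized; a more robust variant would have the observer track whether each node's sequence number advances between its own reads. A node declared dead this way would then be fenced with PR before its resources are taken over.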

Figure 8: Application Scenarios of Single-Writer, Multi-Reader Access on NVMe Shared Disks

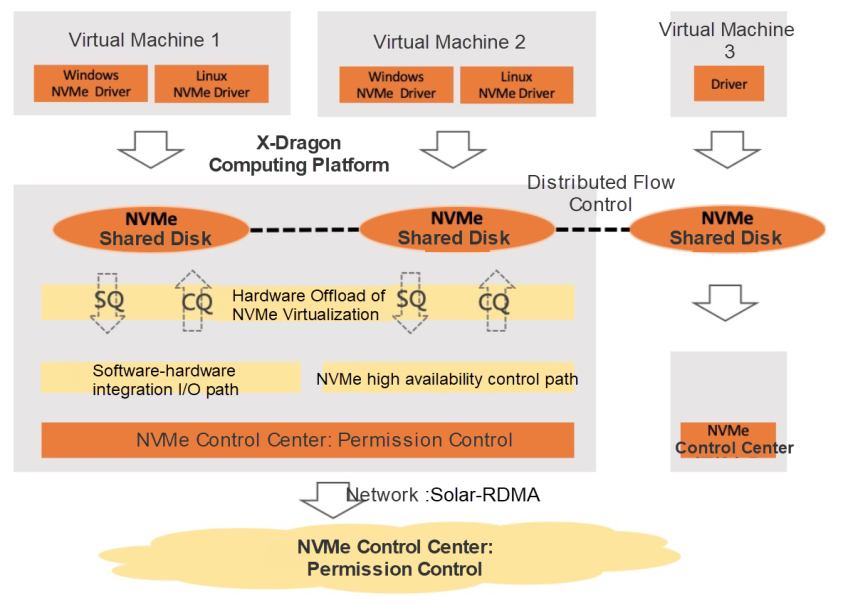

The NVMe cloud disk is built on a compute-storage separation architecture. Relying on the X-Dragon hardware platform, it implements efficient NVMe virtualization and a fast I/O path, and it uses Pangu 2.0 storage as its foundation to achieve high reliability, availability, and performance. Compute and storage are interconnected through a user-space network protocol and RDMA. The NVMe cloud disk brings together full-stack high-performance and high-availability technologies (Figure 9).

Figure 9: Technical Architecture of NVMe Shared Disks

NVMe Hardware Virtualization: NVMe hardware virtualization is built on the X-Dragon MOC platform. Submission Queues (SQ) and Completion Queues (CQ) provide efficient interaction for both the data flow and the control flow. The simple NVMe protocol and efficient design, combined with hardware offloading, reduce NVMe virtualization latency by 30%.
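The Submission Queue/Completion Queue pair is the heart of the NVMe protocol, and host drivers typically allocate one pair per CPU core, which is what NVMe's multi-queue design refers to. The sketch below models a single pair in plain Python to show the standard ring-buffer and phase-tag mechanism. X-Dragon MOC implements this in hardware, and nothing here reflects its internals; the class and method names are hypothetical, and doorbell registers are reduced to index updates.

```python
from dataclasses import dataclass

@dataclass
class Completion:
    cid: int     # command identifier echoed back to the host
    status: int  # 0 = success
    phase: int   # phase tag, flipped by the controller on every CQ wrap

class QueuePair:
    """Toy model of one NVMe submission/completion queue pair.

    Simplifications (assumptions of this sketch): doorbell writes are implied
    by updating the tail/head indices, and the controller advances sq_head
    directly instead of reporting it in each completion entry."""

    def __init__(self, depth):
        self.depth = depth
        self.sq = [None] * depth
        self.sq_head = self.sq_tail = 0
        self.cq = [Completion(0, 0, 0) for _ in range(depth)]
        self.cq_head = self.cq_tail = 0
        self.cq_phase = 1    # phase the controller writes on the current pass
        self.host_phase = 1  # phase the host expects for new entries

    def submit(self, cid):
        """Host: place a command in the SQ, then ring the SQ tail doorbell."""
        if (self.sq_tail + 1) % self.depth == self.sq_head:
            raise RuntimeError("submission queue full")
        self.sq[self.sq_tail] = cid
        self.sq_tail = (self.sq_tail + 1) % self.depth

    def controller_process(self):
        """Controller: fetch commands and post one completion per command."""
        while self.sq_head != self.sq_tail:
            if (self.cq_tail + 1) % self.depth == self.cq_head:
                break  # CQ full: wait until the host reaps completions
            cid = self.sq[self.sq_head]
            self.sq_head = (self.sq_head + 1) % self.depth
            self.cq[self.cq_tail] = Completion(cid, 0, self.cq_phase)
            self.cq_tail = (self.cq_tail + 1) % self.depth
            if self.cq_tail == 0:
                self.cq_phase ^= 1

    def poll(self):
        """Host: reap entries carrying the expected phase tag,
        then ring the CQ head doorbell."""
        done = []
        while self.cq[self.cq_head].phase == self.host_phase:
            done.append(self.cq[self.cq_head].cid)
            self.cq_head = (self.cq_head + 1) % self.depth
            if self.cq_head == 0:
                self.host_phase ^= 1
        return done

qp = QueuePair(depth=4)
for cid in (1, 2, 3):
    qp.submit(cid)
qp.controller_process()
print(qp.poll())  # [1, 2, 3]
```

The phase tag lets the host detect new completions by reading queue memory alone, without an extra register read per command; this kind of lightweight interaction is what makes NVMe well suited to hardware offloading.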

Rapid I/O Path: Built on the software-hardware integration of X-Dragon MOC, the rapid I/O path shortens the I/O channel and delivers extreme performance.

User-Space Protocol: NVMe uses Solar-RDMA, a new-generation user-space network communication protocol. Combined with Leap-CC, an in-house congestion control algorithm, it achieves reliable data transmission and reduces network tail latency. Jumbo frames on the 25GbE network enable efficient large-packet transmission. The network software stack is streamlined, and performance is improved by fully separating the data plane from the control plane. Network multipathing enables millisecond-level recovery from link failures.

Highly Available Control Plane: The NVMe control center is built on Pangu 2.0 distributed high-availability storage. NVMe control commands no longer pass through dedicated control nodes, so they achieve reliability and availability close to those of the I/O path, helping users switch services within milliseconds. The NVMe control center also enables accurate distributed flow control of I/O between multiple clients and multiple servers, applied at the sub-second level. I/O Fencing is kept consistent across multiple nodes in the distributed system: permission states are made consistent across cloud disk partitions through a two-phase update, which solves the split-brain problem of partition permissions.
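The article does not detail the two-phase update, so the sketch below uses plain two-phase commit as an assumed stand-in. A coordinator first asks every partition to stage the new permission state; only if all of them accept does it make the state effective, and otherwise it discards the staged state everywhere, so partitions never end up permanently disagreeing about who may write. `Partition`, `update_permissions`, and the `writers` set are hypothetical, and coordinator failure and commit retries are omitted.

```python
class Partition:
    """One cloud disk partition enforcing a (versioned) set of writers."""
    def __init__(self, name):
        self.name = name
        self.version = 0
        self.writers = {"primary"}  # hosts currently allowed to write
        self.pending = None         # staged (version, writers), if any
        self.available = True       # simulate an unreachable partition

    def prepare(self, version, writers):
        """Phase 1: stage the new state; refuse if unreachable or stale."""
        if not self.available or version <= self.version:
            return False
        self.pending = (version, frozenset(writers))
        return True

    def commit(self):
        """Phase 2: make the staged state effective."""
        self.version, writers = self.pending
        self.writers = set(writers)
        self.pending = None

    def abort(self):
        self.pending = None

def update_permissions(partitions, version, writers):
    """Apply the new writer set on all partitions or on none of them."""
    prepared = []
    for p in partitions:
        if p.prepare(version, writers):
            prepared.append(p)
        else:
            for q in prepared:
                q.abort()
            return False
    for p in prepared:
        p.commit()
    return True

parts = [Partition(f"p{i}") for i in range(3)]
parts[2].available = False
print(update_permissions(parts, 1, {"secondary"}))  # False: nothing changed
parts[2].available = True
print(update_permissions(parts, 1, {"secondary"}))  # True
print({p.name: sorted(p.writers) for p in parts})
```

The version number also lets a partition reject a delayed, older update, which matters when several failovers happen in quick succession.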

Large I/O Atomicity: The distributed system implements end-to-end atomic writes for large I/Os across compute, network, and storage. As long as an I/O does not cross a 128 KiB-aligned boundary, its data is never partially persisted. This matters for applications that rely on atomic writes, such as databases: it can optimize the database's doublewrite process and improve write performance.
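The 128 KiB guarantee reduces to a simple alignment check: a write qualifies when its first and last bytes fall in the same 128 KiB-aligned window. The helper below is a hypothetical illustration of that condition, not an Alibaba Cloud API. Because 128 KiB is a multiple of InnoDB's default 16 KiB page size, every page-aligned page write passes the check, which is the property that could let a database rely on the storage, rather than its doublewrite buffer, to prevent torn pages.

```python
ATOMIC_BOUNDARY = 128 * 1024   # 128 KiB, as stated in the text

def within_atomic_window(offset, length, boundary=ATOMIC_BOUNDARY):
    """True if the byte range [offset, offset + length) does not cross a
    boundary-aligned window, i.e. the write qualifies for the atomicity
    guarantee described above."""
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length > 0")
    first_window = offset // boundary
    last_window = (offset + length - 1) // boundary
    return first_window == last_window

PAGE = 16 * 1024  # InnoDB's default page size
print(within_atomic_window(5 * PAGE, PAGE))     # True: page-aligned 16 KiB write
print(within_atomic_window(120 * 1024, PAGE))   # False: spans the 128 KiB mark
print(within_atomic_window(0, 128 * 1024))      # True: exactly one window
```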

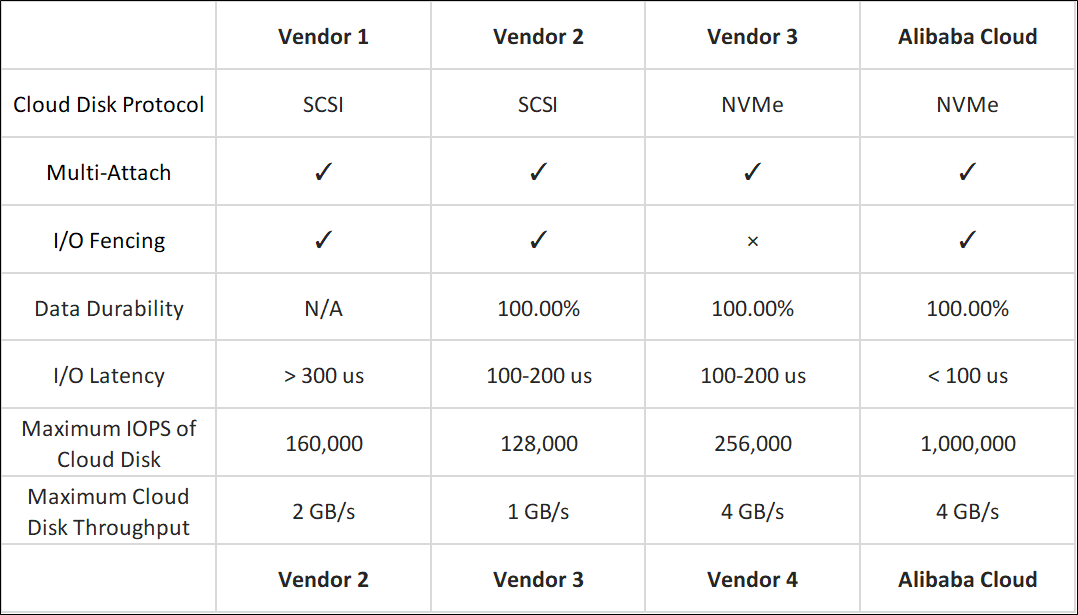

The NVMe cloud disk combines the most advanced software and hardware technologies in the industry. It is the first product in the cloud storage market to combine the NVMe protocol, shared access, and I/O Fencing. On top of ESSD's high reliability, availability, and performance, it implements a wide range of enterprise features based on the NVMe protocol, such as multi-attach, I/O Fencing, encryption, offline scale-out, native snapshots, and asynchronous replication.

Figure 10: The First Combination of NVMe + Shared Access + I/O Fencing Worldwide

Currently, the NVMe cloud disk and NVMe shared disk are in invitation-only beta and have been initially certified by Oracle RAC, SAP HANA, and internal database teams. They will next enter public beta and then become commercially available. We will continue to evolve the NVMe cloud disk to support advanced features such as online scale-out, end-to-end data protection (T10 DIF), and multiple namespaces per cloud disk, and thereby provide comprehensive cloud SAN capabilities. Stay tuned!

Table 2: Block Storage Overview of Mainstream Cloud Computing Vendors

