Download the "Cloud Knowledge Discovery on KDD Papers" whitepaper to explore 12 KDD papers and knowledge discovery from 12 Alibaba experts.

By Jianxin Ma, Chang Zhou, Hongxia Yang, Peng Cui, Xin Wang, Wenwu Zhu

The training processes of mainstream recommendation algorithms require models to fit a user's next behavior based on that user's historical behavior. However, fitting only the next behavior has the following limitations:

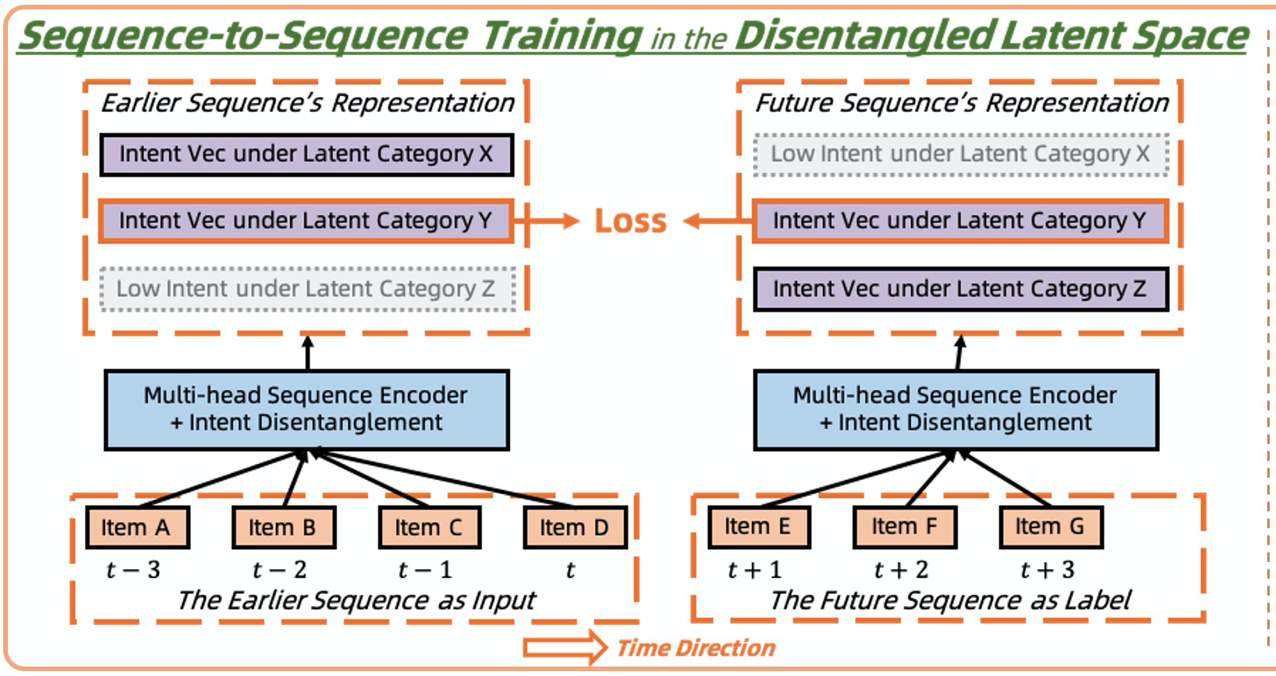

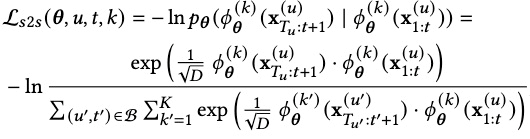

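This paper presents an auxiliary training objective that lets a model use a user's historical behaviors to fit the entire future behavior sequence, rather than only the next behavior. Our work combines disentangled representation learning and self-supervised contrastive learning to address two major challenges: (1) a future sequence contains many items, so fitting each item individually is costly, and (2) many future behaviors may be unrelated to the user's historical behavior, so treating all of them as training targets introduces noise.

We propose the following solutions to these challenges: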

For Challenge 1, we map each item in the future click sequence to a vector that represents the user's interest at that time. For example, more than 50 items are mapped to more than 50 vectors. We then cluster these vectors to obtain the user's main future interest vectors; for example, more than 50 vectors are clustered into 8 main vectors. During training, the model predicts these future interest vectors rather than the specific items that the user will click. This way, the model only needs to fit 8 future vectors instead of more than 50 items.
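The compression step can be sketched as follows. This is a minimal sketch, assuming plain k-means as the clustering method (the text only says the vectors are clustered) and assuming each future item has already been mapped to an interest vector; the function names are hypothetical.

```python
import random
from typing import List

Vector = List[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(vectors: List[Vector]) -> Vector:
    """Component-wise mean of a non-empty list of vectors."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def cluster_future_interests(
    item_vectors: List[Vector], k: int, iters: int = 20, seed: int = 0
) -> List[Vector]:
    """Compress per-item interest vectors into k future interest vectors.

    Hypothetical helper: plain k-means is used as a stand-in for the clustering step.
    """
    rng = random.Random(seed)
    # Initialize the centroids from k randomly chosen input vectors
    centroids = [list(v) for v in rng.sample(item_vectors, k)]
    for _ in range(iters):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        # Assignment step: attach each item vector to its nearest centroid
        for v in item_vectors:
            nearest = min(range(k), key=lambda c: squared_distance(v, centroids[c]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster,
        # keeping the old centroid if its cluster is empty
        centroids = [mean(cl) if cl else centroids[c] for c, cl in enumerate(clusters)]
    return centroids


# Example: 50 random 16-dimensional item interest vectors -> 8 future interest vectors
rng = random.Random(42)
future_items = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(50)]
future_interests = cluster_future_interests(future_items, k=8)
print(len(future_interests), len(future_interests[0]))  # prints "8 16"
```

The model would then be trained to predict these 8 centroids instead of the 50 individual items.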

For Challenge 2, we require the model to estimate the reliability of the samples in the training data, for example, the similarity between the eight future interest vectors and the eight historical interest vectors, and the probability that each training sample is noise. Most of the training time is spent on the more reliable samples. For example, the two or three most reliable future interest vectors are selected from the eight, and the model only needs to fit those. The remaining five or six future interest vectors may not be closely related to the user's historical behavior, so the model is not required to predict them.
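The selection step can be illustrated with a small sketch. It assumes, hypothetically, that a future interest vector's reliability is scored by its highest cosine similarity to any historical interest vector and that a fixed number of top-scoring vectors is kept; the function names and the `keep` parameter are illustrative, not the paper's exact formulation.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_reliable_futures(
    future: List[Vector], history: List[Vector], keep: int
) -> List[int]:
    """Return the indices of the `keep` future interest vectors closest to the history.

    Hypothetical scoring: each future vector is scored by its best match
    among the historical interest vectors.
    """
    scores = [max(cosine(f, h) for h in history) for f in future]
    ranked = sorted(range(len(future)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:keep])


history = [[1.0, 0.0], [0.0, 1.0]]
future = [[1.0, 0.1], [-1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
print(select_reliable_futures(future, history, keep=2))  # prints [0, 2]
```

Only the selected vectors would contribute to the sequence-to-sequence loss; the rest are treated as possibly unrelated to the user's history.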

Our new training objective, the sequence-to-sequence loss, is meant to complement, not replace, the traditional sequence-to-item loss; in other words, we minimize both losses jointly. The sequence-to-item loss is still necessary for recommending items, while the sequence-to-sequence loss is designed to improve the quality of the learned representations.
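To show how the two objectives fit together, here is a minimal sketch. It assumes a softmax cross-entropy sequence-to-item loss over a small candidate set, an InfoNCE-style contrastive term for the sequence-to-sequence loss (a user's historical interest vector should score that user's own future interest vector above other users' future interest vectors), and a hypothetical weight `weight` on the auxiliary term. These choices are illustrative assumptions, not the paper's exact loss.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax_cross_entropy(logits: List[float], target: int) -> float:
    """-log softmax(logits)[target], computed with the log-sum-exp trick."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[target]


def seq2item_loss(user_vec: Vector, item_embeddings: List[Vector], next_item: int) -> float:
    """Sequence-to-item: score every candidate item and fit the next clicked one."""
    logits = [dot(user_vec, e) for e in item_embeddings]
    return softmax_cross_entropy(logits, next_item)


def seq2seq_loss(
    history_vec: Vector, future_vecs: List[Vector], positive: int, temperature: float = 1.0
) -> float:
    """Sequence-to-sequence (contrastive, assumed form): the user's own future interest
    vector at index `positive` is the positive; other users' future vectors are negatives."""
    logits = [dot(history_vec, f) / temperature for f in future_vecs]
    return softmax_cross_entropy(logits, positive)


def total_loss(s2i: float, s2s: float, weight: float = 0.5) -> float:
    """Minimize both losses jointly; the auxiliary term complements the main one."""
    return s2i + weight * s2s


user = [1.0, 0.0]
items = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
futures = [[0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
loss = total_loss(seq2item_loss(user, items, next_item=0),
                  seq2seq_loss(user, futures, positive=0))
print(round(loss, 4))
```

In a real system both terms would be computed over mini-batches and backpropagated with an autodiff framework; plain Python is used here only to keep the arithmetic visible.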

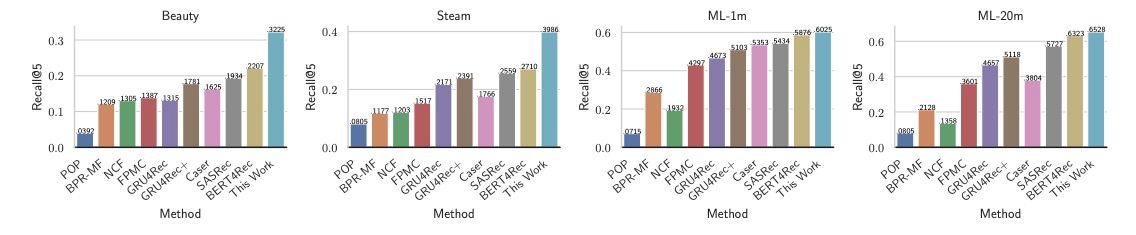

On public datasets in which users have intricate interests, the sequence-to-sequence loss significantly improves offline recommendation metrics.

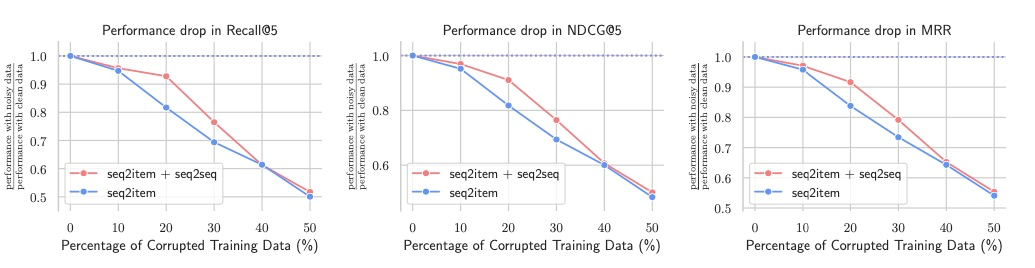

The sequence-to-sequence loss is also more robust than the sequence-to-item loss as noise samples are progressively added to the training set.

In extensive offline experiments on data from Taobao Mobile, adding the sequence-to-sequence loss to the sequence-to-item loss achieved an absolute improvement of 0.5 percentage points (12.6% vs. 12.1%) in HitRate@50 for predicting the next five clicks. After the initial online release, the sequence-to-sequence loss slightly improved exposure and discovery but barely increased the click-through rate. We will continue to iterate on this method to improve its online performance, building on our team's improvements to the multi-vector model MultCLR and to CLRec, a vector-recall loss function that supports exposure bias removal.
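For readers unfamiliar with the metric, a sketch of HitRate@K follows. It assumes one common definition (the fraction of a user's next few clicked items that appear in the top-K recommended list, averaged over users); the text does not spell out the exact definition used, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence


def hit_rate_at_k(recommended: Sequence[int], future_clicks: Sequence[int], k: int = 50) -> float:
    """Fraction of the future clicked items that appear in the top-k recommendations."""
    if not future_clicks:
        return 0.0
    top_k = set(recommended[:k])
    return sum(1 for item in future_clicks if item in top_k) / len(future_clicks)


def mean_hit_rate(
    recs: Dict[str, List[int]], futures: Dict[str, List[int]], k: int = 50, horizon: int = 5
) -> float:
    """Average HitRate@k over users, using only each user's next `horizon` clicks."""
    users = [u for u, clicks in futures.items() if clicks]
    if not users:
        return 0.0
    scores = [hit_rate_at_k(recs.get(u, []), futures[u][:horizon], k) for u in users]
    return sum(scores) / len(scores)


recs = {"u1": list(range(100)), "u2": list(range(100, 200))}
futures = {"u1": [3, 60, 7, 200, 49, 1], "u2": [150, 100, 999, 5, 6]}
print(mean_hit_rate(recs, futures))
```

Under this definition, moving from 12.1% to 12.6% means that, on average, about 0.5 more of every 100 future clicks are covered by the top-50 recall list.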

We present a new training method for vector-recall systems that aims to compensate for the lack of diversity and robustness caused by short-term training objectives. The core technical contribution is the combination of disentangled representation learning and self-supervised learning.

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
