By He Linbo (Xinsheng)

With the rapid development of 5G, IoT, and related technologies, edge computing is increasingly applied in industries such as telecommunications, media, transportation, logistics, agriculture, and retail, where it has become a key way to improve data transmission efficiency. Meanwhile, the form, scale, and complexity of edge computing keep growing, and the O&M methods and capabilities available at the edge are struggling to keep pace with edge business innovation. As a result, Kubernetes has become a key element of edge computing, helping enterprises run containers at the edge, maximize resource utilization, and shorten R&D cycles.

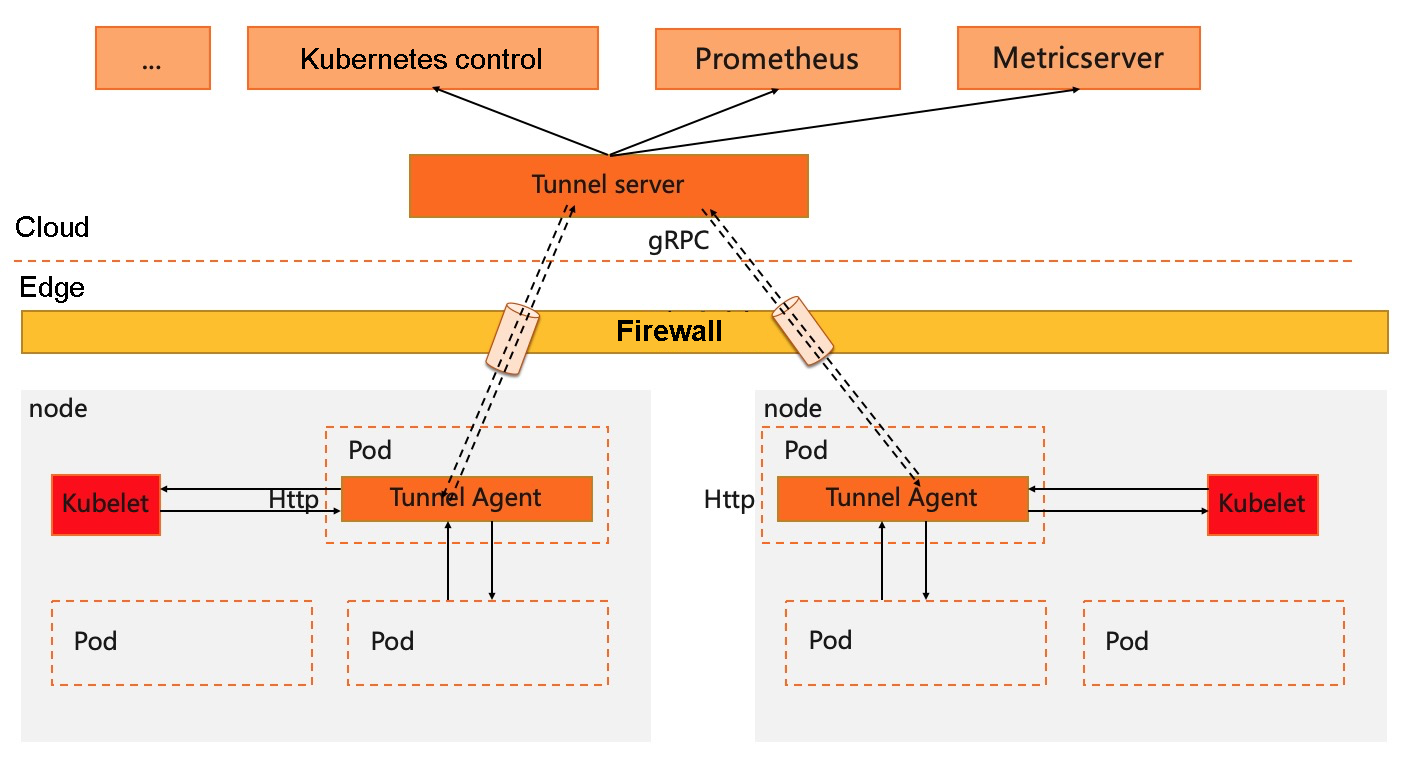

However, many problems remain if native Kubernetes is applied directly to edge computing scenarios. For example, the cloud and the edge are usually on different network planes, and edge nodes generally sit behind firewalls. Under a cloud (central)-edge collaboration framework, this challenges the O&M and monitoring capabilities of the native Kubernetes system, because cloud components cannot actively reach the edge nodes.

Enterprises need to solve the challenges that native Kubernetes faces in edge scenarios, such as application lifecycle management, cloud-edge network connection, cloud-edge O&M collaboration, and heterogeneous resource support. For this purpose, OpenYurt, an open-source, cloud-native edge computing platform based on Kubernetes, has emerged. It is also an important part of the CNCF edge cloud-native ecosystem. This article explains how Yurt-Tunnel, one of the core components of OpenYurt, extends the capabilities of the native Kubernetes system in edge scenarios.

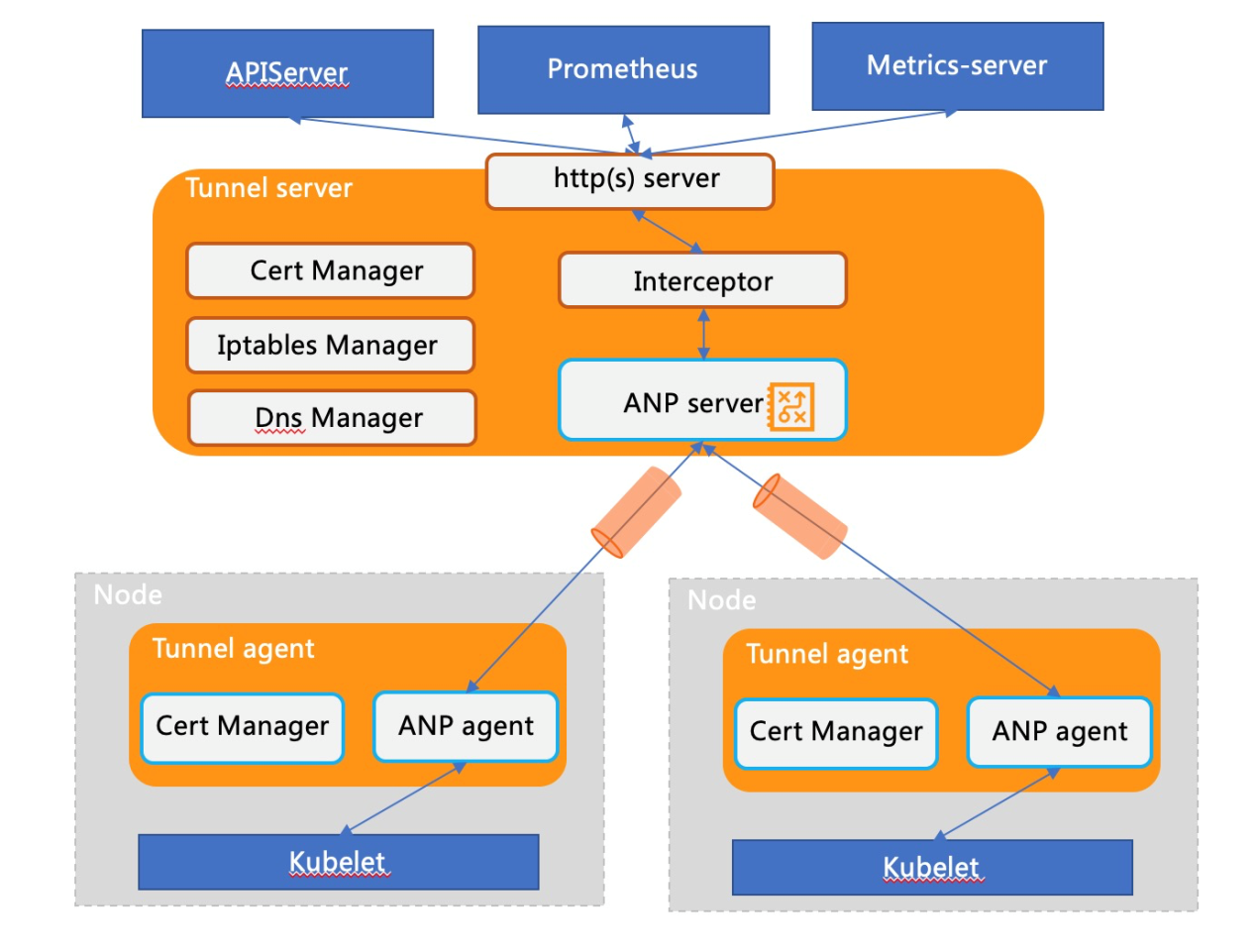

Since the edge can access the cloud, one option is to build a reverse tunnel from the edge to the cloud, through which the cloud (center) can actively access the edge. We investigated many open-source tunnel solutions in terms of capabilities and ecosystem compatibility, and chose to design and implement Yurt-Tunnel on top of ANP (apiserver-network-proxy) for its security, non-intrusiveness, scalability, and efficient transmission.

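A secure, non-invasive, and scalable reverse channel solution in the Kubernetes cloud-edge integrated framework must include at least the following capabilities:

- Building the tunnel between the cloud and the edge
- Managing the certificates at both ends of the tunnel by itself
- Forwarding requests from cloud components into the tunnel seamlessly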

The following figure shows the framework modules of Yurt-Tunnel:

# https://github.com/openyurtio/apiserver-network-proxy/blob/master/pkg/agent/client.go#L189

# The registration information of the yurt-tunnel-agent:

"agentID": {nodeName}

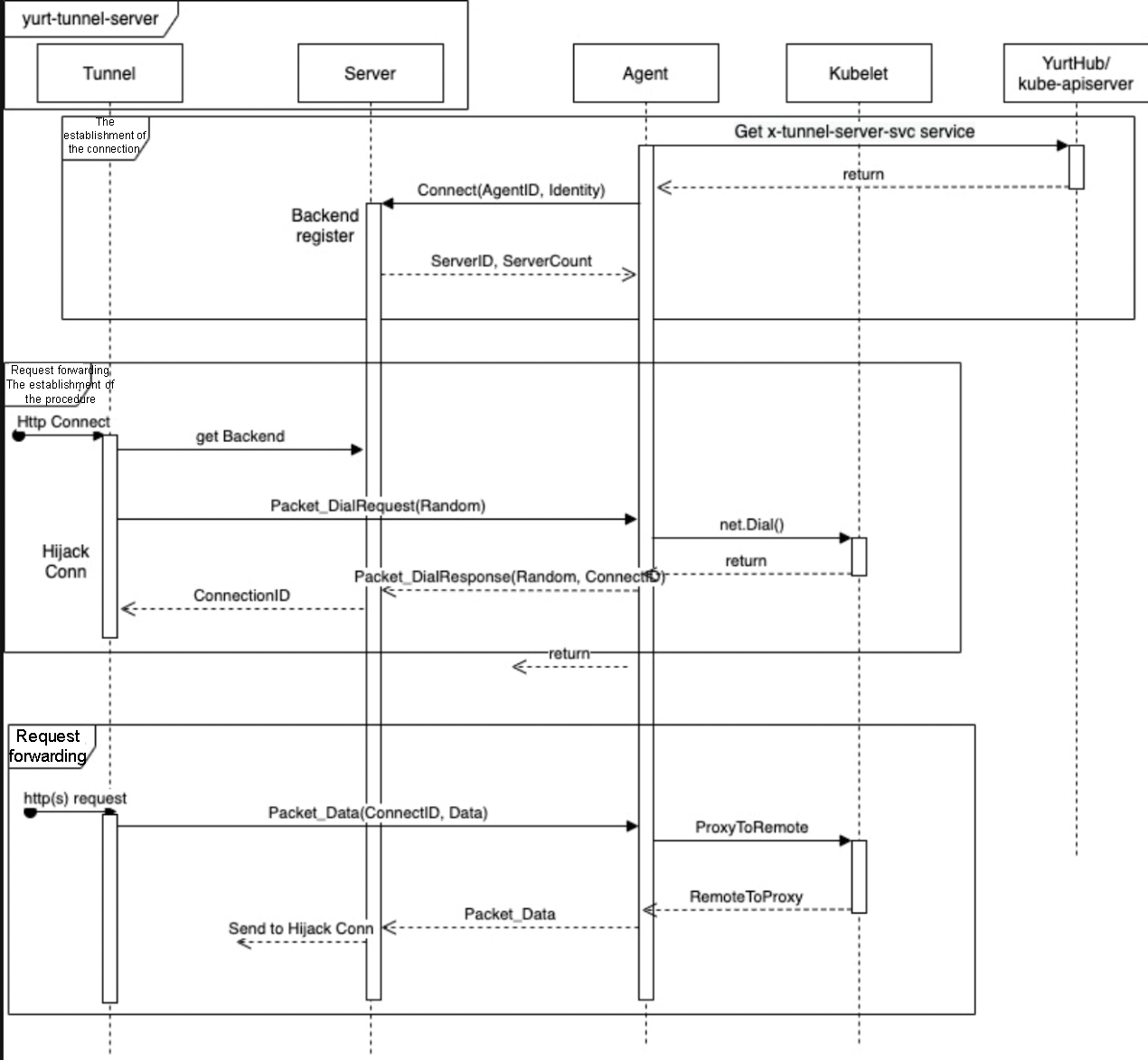

"agentIdentifiers": ipv4={nodeIP}&host={nodeName}"Scheme 1: The initial cloud-edge connection only notifies and forwards the request, and the tunnel-agent establishes a new connection with the cloud to process the request. The problem of the independent request identifier and concurrency can be solved easily through new connections. However, a connection needs to be established for each request, consuming a lot of resources.

Scheme 2: The initial cloud-edge connection itself forwards the requests. To reuse the same connection for a large number of requests, each request must be encapsulated and tagged with an independent identifier so that requests can be forwarded concurrently. Because the connection is reused, connection management and request lifecycle management must be decoupled. In other words, the state transitions of request forwarding must be managed independently. This solution is more complex: it involves encapsulation, unpacking, and a state machine for request processing.

    // https://github.com/openyurtio/apiserver-network-proxy/blob/master/konnectivity-client/proto/client/client.pb.go#L98
    // The data format and data type of cloud-edge communication
    type Packet struct {
        Type PacketType `protobuf:"varint,1,opt,name=type,proto3,enum=PacketType" json:"type,omitempty"`
        // Types that are valid to be assigned to Payload:
        //    *Packet_DialRequest
        //    *Packet_DialResponse
        //    *Packet_Data
        //    *Packet_CloseRequest
        //    *Packet_CloseResponse
        Payload isPacket_Payload `protobuf_oneof:"payload"`
    }

The forwarding procedure for a request is established by Packet_DialRequest and Packet_DialResponse. Packet_DialResponse.ConnectID identifies the request and is equivalent to a requestID in the tunnel. The request and its associated data are encapsulated in Packet_Data. Packet_CloseRequest and Packet_CloseResponse are used to reclaim the resources of the forwarding procedure. For details, please refer to the following sequence diagram:
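The same lifecycle can also be pictured as a small state machine keyed by the connection identifier. The sketch below is a simplified illustration in Go, not ANP's implementation: the `packet` struct, the `tunnel` type, and its `handle` method are hypothetical names, and the payloads are reduced to plain fields instead of protobuf messages.

```go
package main

import (
	"errors"
	"fmt"
)

// packetType mirrors the five payload kinds listed in the Packet struct.
type packetType int

const (
	dialRequest packetType = iota
	dialResponse
	data
	closeRequest
	closeResponse
)

// packet is a simplified stand-in for the protobuf Packet message.
type packet struct {
	Type      packetType
	ConnectID int64
	Data      []byte
}

// tunnel tracks per-request state independently of the shared connection.
type tunnel struct {
	nextID  int64
	streams map[int64][]byte // connectID -> bytes received so far
}

func newTunnel() *tunnel {
	return &tunnel{streams: make(map[int64][]byte)}
}

// handle processes one incoming packet and returns the reply packet, if any.
func (t *tunnel) handle(p packet) (*packet, error) {
	switch p.Type {
	case dialRequest:
		// Allocate a new identifier and report it in the dial response.
		t.nextID++
		t.streams[t.nextID] = nil
		return &packet{Type: dialResponse, ConnectID: t.nextID}, nil
	case data:
		if _, ok := t.streams[p.ConnectID]; !ok {
			return nil, errors.New("data for unknown connectID")
		}
		t.streams[p.ConnectID] = append(t.streams[p.ConnectID], p.Data...)
		return nil, nil
	case closeRequest:
		// Reclaim the resources held for this request.
		delete(t.streams, p.ConnectID)
		return &packet{Type: closeResponse, ConnectID: p.ConnectID}, nil
	default:
		return nil, fmt.Errorf("unexpected packet type %d", p.Type)
	}
}

func main() {
	t := newTunnel()

	resp, err := t.handle(packet{Type: dialRequest})
	if err != nil {
		panic(err)
	}
	id := resp.ConnectID

	if _, err := t.handle(packet{Type: data, ConnectID: id, Data: []byte("GET /metrics")}); err != nil {
		panic(err)
	}
	fmt.Printf("request %d buffered %q\n", id, t.streams[id])

	if _, err := t.handle(packet{Type: closeRequest, ConnectID: id}); err != nil {
		panic(err)
	}
	fmt.Println("open requests after close:", len(t.streams))
}
```

Keeping the per-request state in a map, separate from the connection, is one simple way to decouple request lifecycle management from connection management as Scheme 2 requires.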

Request Interceptor module

The preceding analysis shows that before yurt-tunnel-server forwards a request, the requester must send an HTTP CONNECT request to set up the forwarding procedure. However, it is difficult to add this handling to open-source components such as Prometheus and metrics-server. Therefore, a request interception module, Interceptor, is added to yurt-tunnel-server to initiate the HTTP CONNECT requests on their behalf. The code is listed below:

    // https://github.com/openyurtio/openyurt/blob/master/pkg/yurttunnel/server/interceptor.go#L58-82

    // Dial the proxy server over its Unix domain socket.
    proxyConn, err := net.Dial("unix", udsSockFile)
    if err != nil {
        return nil, fmt.Errorf("dialing proxy %q failed: %v", udsSockFile, err)
    }

    // Copy the supported headers of the original request into the CONNECT request.
    var connectHeaders string
    for _, h := range supportedHeaders {
        if v := header.Get(h); len(v) != 0 {
            connectHeaders = fmt.Sprintf("%s\r\n%s: %s", connectHeaders, h, v)
        }
    }

    // Send the HTTP CONNECT request that sets up forwarding to addr.
    fmt.Fprintf(proxyConn, "CONNECT %s HTTP/1.1\r\nHost: %s%s\r\n\r\n", addr, "127.0.0.1", connectHeaders)
    br := bufio.NewReader(proxyConn)
    res, err := http.ReadResponse(br, nil)
    if err != nil {
        proxyConn.Close()
        return nil, fmt.Errorf("reading HTTP response from CONNECT to %s via proxy %s failed: %v", addr, udsSockFile, err)
    }

    // Any status other than 200 means the tunnel could not be established.
    if res.StatusCode != 200 {
        proxyConn.Close()
        return nil, fmt.Errorf("proxy error from %s while dialing %s, code %d: %v", udsSockFile, addr, res.StatusCode, res.Status)
    }

Yurt-tunnel must generate its own certificates and rotate them automatically to keep cloud-edge tunnel communication secure over the long term and to support the forwarding of HTTPS requests. The details and the relevant code are listed below:

    # 1. Yurt-tunnel-server certificate:
    # https://github.com/openyurtio/openyurt/blob/master/pkg/yurttunnel/pki/certmanager/certmanager.go#L45-90
    - Certificate storage location: /var/lib/yurt-tunnel-server/pki
    - CommonName: "kube-apiserver-kubelet-client" // webhook verification for kubelet server
    - Organization: {"system:masters", "openyurt:yurttunnel"} // webhook verification for kubelet server and auto approve for yurt-tunnel-server certificate
    - Subject Alternative Name values: {ips and dns names of x-tunnel-server-svc and x-tunnel-server-internal-svc}
    - KeyUsage: "any"

    # 2. Yurt-tunnel-agent certificate:
    # https://github.com/openyurtio/openyurt/blob/master/pkg/yurttunnel/pki/certmanager/certmanager.go#L94-112
    - Certificate storage location: /var/lib/yurt-tunnel-agent/pki
    - CommonName: "yurttunnel-agent"
    - Organization: {"openyurt:yurttunnel"} // auto approve for yurt-tunnel-agent certificate
    - Subject Alternative Name values: {nodeName, nodeIP}
    - KeyUsage: "any"

    # 3. The certificate signing request (CSR) of yurt-tunnel is approved by yurt-tunnel-server
    # https://github.com/openyurtio/openyurt/blob/master/pkg/yurttunnel/pki/certmanager/csrapprover.go#L115
    - Listen to csr resources
    - Filter out csr that does not belong to yurt-tunnel (no "openyurt:yurttunnel" in Organization)
    - Approve csr that has not been approved

    # 4. Automatic certificate rotation
    # https://github.com/kubernetes/kubernetes/blob/master/staging/src/k8s.io/client-go/util/certificate/certificate_manager.go#L224

Requests from cloud components need to be forwarded to yurt-tunnel-server seamlessly, meaning that the cloud components themselves require no modification. Therefore, we must analyze the requests that cloud components send. Currently, there are two main types of component O&M requests: those that address an edge node by its IP (type 1) and those that address it by its nodeName (type 2).

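Each type of request is diverted in a different way: requests addressed to a node IP are redirected by iptables DNAT rules, and requests addressed to a nodeName are redirected through DNS resolution.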
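As a rough illustration of this split, the sketch below assumes that the two request types differ in whether the target host is a node IP or a nodeName. It classifies a request target accordingly and looks up the yurt-tunnel-server port for the two default ports (10250 to 10263 and 10255 to 10264, the mappings listed in this article). The function names `diversion` and `tunnelPort` and the node name `edge-node-1` are hypothetical; in Yurt-Tunnel the diversion itself is done by iptables and DNS, not by application code.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// tunnelPorts maps an edge port to the yurt-tunnel-server port that serves it.
var tunnelPorts = map[int]int{10250: 10263, 10255: 10264}

// diversion reports which mechanism would divert a request sent to hostport.
func diversion(hostport string) (string, error) {
	host, _, err := net.SplitHostPort(hostport)
	if err != nil {
		return "", err
	}
	if net.ParseIP(host) != nil {
		return "iptables-dnat", nil // target is a node IP
	}
	return "dns", nil // target is a nodeName
}

// tunnelPort returns the yurt-tunnel-server port for the port in hostport.
func tunnelPort(hostport string) (int, error) {
	_, portStr, err := net.SplitHostPort(hostport)
	if err != nil {
		return 0, err
	}
	port, err := strconv.Atoi(portStr)
	if err != nil {
		return 0, err
	}
	tp, ok := tunnelPorts[port]
	if !ok {
		return 0, fmt.Errorf("port %d is not mapped to the tunnel", port)
	}
	return tp, nil
}

func main() {
	for _, target := range []string{"172.16.6.156:10255", "edge-node-1:10250"} {
		mechanism, err := diversion(target)
		if err != nil {
			panic(err)
		}
		port, err := tunnelPort(target)
		if err != nil {
			panic(err)
		}
		fmt.Printf("%s -> diverted by %s to tunnel port %d\n", target, mechanism, port)
	}
}
```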

    # Maintenance code for related iptables rules: https://github.com/openyurtio/openyurt/blob/master/pkg/yurttunnel/iptables/iptables.go
    # The iptables dnat rules maintained by yurt-tunnel-server are as follows:
    [root@xxx /]# iptables -nv -t nat -L OUTPUT
    TUNNEL-PORT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* edge tunnel server port */

    [root@xxx /]# iptables -nv -t nat -L TUNNEL-PORT
    TUNNEL-PORT-10255 tcp -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:10255 /* jump to port 10255 */
    TUNNEL-PORT-10250 tcp -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:10250 /* jump to port 10250 */

    [root@xxx /]# iptables -nv -t nat -L TUNNEL-PORT-10255
    RETURN tcp -- * * 0.0.0.0/0 127.0.0.1 /* return request to access node directly */ tcp dpt:10255
    RETURN tcp -- * * 0.0.0.0/0 172.16.6.156 /* return request to access node directly */ tcp dpt:10255
    DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* dnat to tunnel for access node */ tcp dpt:10255 to:172.16.6.156:10264

For requests of type 2, DNS resolves each nodeName to the address of yurt-tunnel-server, so that these requests can also be forwarded to yurt-tunnel seamlessly.

    # The different uses of x-tunnel-server-svc and x-tunnel-server-internal-svc:
    - x-tunnel-server-svc: Mainly exposes ports 10262/10263 so that yurt-tunnel-server can be accessed from the Internet, for example by yurt-tunnel-agent.
    - x-tunnel-server-internal-svc: Mainly used by cloud components, such as Prometheus and metrics-server, to access yurt-tunnel-server from internal networks.

    # The principles of dns domain name resolution:
    1. Yurt-tunnel-server creates or updates the yurt-tunnel-nodes configmap in kube-apiserver. The format of each tunnel-nodes record is {x-tunnel-server-internal-svc clusterIP} {nodeName}, so that the mapping from every nodeName to the yurt-tunnel-server service is recorded.
    2. Mount the yurt-tunnel-nodes configmap into the coredns pod and use the hosts plug-in to serve the dns records from the configmap.
    3. Configure the port mapping in x-tunnel-server-internal-svc: 10250 to 10263, and 10255 to 10264.
    4. With the above configuration, http://{nodeName}:{port}/{path} requests are forwarded to yurt-tunnel-server seamlessly.

If a user needs to access other ports on the edge (other than 10250 and 10255), add the corresponding dnat rules to iptables or add the corresponding port mapping in x-tunnel-server-internal-svc, as shown below:

    # For example, to access the 9051 port at the edge
    # Add the iptables dnat rule:
    [root@xxx /]# iptables -nv -t nat -L TUNNEL-PORT
    TUNNEL-PORT-9051 tcp -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:9051 /* jump to port 9051 */

    [root@xxx /]# iptables -nv -t nat -L TUNNEL-PORT-9051
    RETURN tcp -- * * 0.0.0.0/0 127.0.0.1 /* return request to access node directly */ tcp dpt:9051
    RETURN tcp -- * * 0.0.0.0/0 172.16.6.156 /* return request to access node directly */ tcp dpt:9051
    DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* dnat to tunnel for access node */ tcp dpt:9051 to:172.16.6.156:10264

    # Add port mapping in the x-tunnel-server-internal-svc
    spec:
      ports:
      - name: https
        port: 10250
        protocol: TCP
        targetPort: 10263
      - name: http
        port: 10255
        protocol: TCP
        targetPort: 10264
      - name: dnat-9051 # Add mapping
        port: 9051
        protocol: TCP
        targetPort: 10264

In practice, yurt-tunnel-server updates the preceding iptables dnat rules and service port mappings automatically. Users only need to add the port configuration to the yurt-tunnel-server-cfg configmap. The details are listed below:

    # Note: Due to uncontrollable factors of certificates, new ports currently only support forwarding from 10264 in the yurt-tunnel-server.
    apiVersion: v1
    data:
      dnat-ports-pair: 9051=10264 # New port=10264 (forwarding that is not from 10264 is not supported)
    kind: ConfigMap
    metadata:
      name: yurt-tunnel-server-cfg
      namespace: kube-system

EgressSelector function of the kube-apiserver

As the kernel of Alibaba Cloud ACK@Edge, OpenYurt has been applied in dozens of industries, such as CDN, audio and video livestreaming, IoT, and logistics, at a scale of millions of CPU cores. We are pleased to see that more developers, open-source communities, enterprises, and academic institutions recognize the ideas behind OpenYurt and are joining the effort to build it. We welcome more users to build the OpenYurt community together, help the cloud-native edge computing ecosystem prosper, and enable cloud-native technology to create value in more edge scenarios.
