By Gongzhi

With the emergence of data computing and machine intelligence algorithms in recent years, applications based on big data and AI algorithms have become increasingly popular, and big data applications have also emerged in various industries. Testing technologies, as a part of engineering technologies, are also evolving with the times. In the Data Technology (DT) era, how to test and guarantee the quality of a big data application has become a puzzle for the testing field.

This article describes the testing technologies and quality system of the Alibaba AI mid-end, search, recommendation, and advertising applications. I hope this article will give you some guidance. Your comments and feedback are welcome.

As the mobile Internet and smart devices spread over the past decade, more and more data containing user characteristics and behavior logs has accumulated on the application platforms of different companies. Through statistical analysis and feature sample extraction, this data is used to train business algorithm models. Like smart robots, these models can accurately identify and predict users' behavior and intentions.

If data is regarded as a resource, Internet companies are fundamentally different from traditional companies because they are not resource consumers but resource producers. They constantly create resources while operating their platforms, and the resources increase exponentially as the duration and frequency of platform use increase. Platforms use the data and models to provide a better user experience and more business value. In 2016, AlphaGo, an AI Go program based on deep neural networks, defeated the Go world champion Lee Sedol for the first time. This algorithm model, developed by DeepMind, a company owned by Google, was trained on historical game records of human Go players.

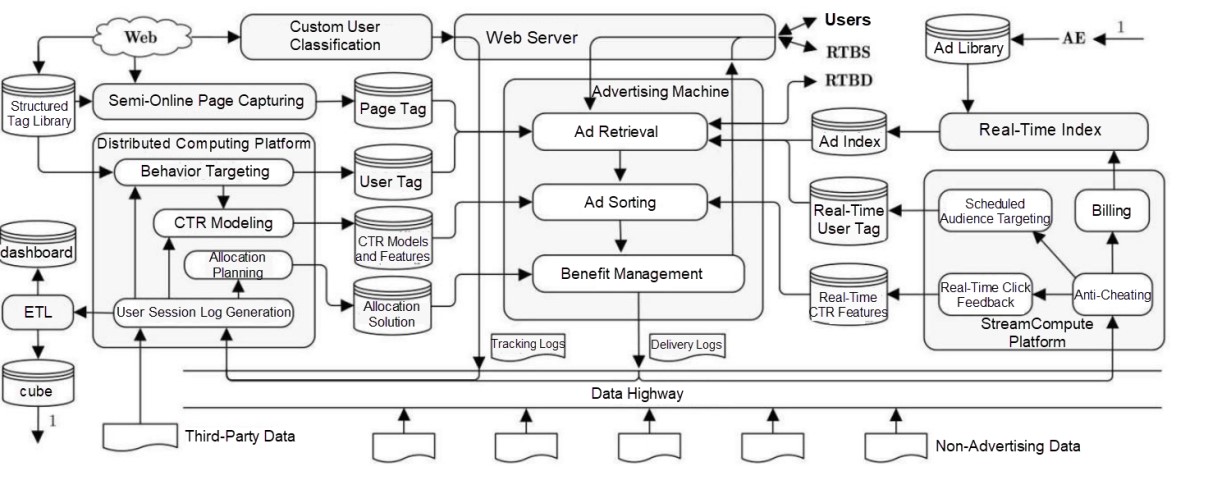

Alibaba's search, recommendation, and advertising systems are typical big data application scenarios (high-dimensional sparse business scenarios). Before talking about how to test them, we need to understand the engineering technologies that the platform uses to process data. The search, recommendation, and advertising systems have similar engineering architectures and data processing flows, and each is typically divided into an online system and an offline system. Figure 1 shows the common architecture of an online advertising system, which is taken from Computational Advertising by Liu Peng. The offline system processes data and builds and trains algorithm models, and the online system processes users' real-time requests. The online system uses models trained by the offline system for real-time online prediction, for example, click-through rate (CTR) prediction.

When users visit Taobao Mobile or other apps, they generate a large amount of behavior data, including browsing, searching, clicking, purchasing, commenting, and stay duration. This behavior data, sellers' commodity data, and, if ads are involved, advertisers' data are collected, filtered, and processed, and features are extracted to generate the sample data required by models. Training on this sample data offline on the machine learning and training platform produces various algorithm models for online services, such as Deep Interest Evolution Network (DIEN), tree-based deep models, representation learning on large graphs, and dynamic similar user vector recall models based on user classification and interests. Typically, the online system uses information retrieval technologies, such as forward and inverted indexes and time series storage, to provide data retrieval and online prediction services.

After deep learning on big data across the preceding dimensions, the search, recommendation, and advertising systems deliver personalized results, often described as "a thousand faces for a thousand people." They present different commodities and recommend different natural and commercial results based on users' requests. The same user may obtain different results at different points in time if the user's behavior changes. All of this is attributed to data and algorithm models.

Figure 1: Common Architecture of an Online Advertising System

Before thinking about how to test the search, recommendation, and advertising systems, we must first define the problem domain, that is, what are the test problems that need to be solved? Our thoughts are summarized in the following areas.

In addition to normal requests and responses, we need to check big data integrity and the richness of the search, recommendation, or advertising system. The performance of a search or recommendation engine depends on whether it provides rich content and diversified recall methods. The algorithm causes uncertain search and recommendation results, which also brings trouble for testing and verification. Therefore, data integrity and uncertainty verification are key points for functional testing.

The online computing engine of a search or advertising system updates its internal data continuously because sellers change commodity information and advertisers change ad creatives or delivery plans. These updates need to be synced to the delivery engine in a timely manner. Otherwise, the information will be inconsistent or incorrect. We need to focus on how to test and verify the timeliness of these changes, that is, whether data updates are applied promptly under a given level of concurrent update traffic.

All online services require low latency. The server end needs to respond to each query within dozens of milliseconds. However, the whole server topology consists of about 30 different modules. Therefore, it is important to test the performance and capacity of backend services.

The search, recommendation, and advertising results need to match users' requirements and interests to ensure a high CTR and conversion rate. However, how to verify the correlation between such requirements and results and how to test the algorithm effect is an interesting and challenging topic.

The purpose of offline testing before a release is to inspect and accept the code. In this process, defects are found and fixed to improve the code quality. The purpose of online stability operations is to keep the running system stable. Technical O&M methods can improve the availability and robustness of a system even when its code quality is only average, and reduce the frequency and impact of online faults. This domain is also called the online technical risk field.

This domain supplements the preceding ones by addressing the efficiency of the entire engineering R&D system. Quality and efficiency are two sides of the same coin, and it is difficult to balance the two. Which one takes priority varies across product development phases. Our engineering efficiency work aims to solve DevOps toolchain issues to improve R&D productivity.

These are the six major problem domains for big data application testing. Some domains have exceeded the traditional scope of testing and quality. However, those are the unique quality challenges that come with big data applications. Next, I will talk about these six problem domains.

Functional testing consists of three parts: end-to-end user interaction testing, online engineering functional testing, and offline algorithm system functional testing.

1. End-to-End User Interaction Testing

This aspect tests and verifies the user interaction of the search, recommendation, or advertising system, including verification of the user experience and logical features on the buyer side of Taobao Mobile, Tmall, Youku, and other apps, verification of the business process logic on the Business Platform of advertisers and sellers, and testing of advertisers' creative advertising creation, delivery plan formulation, billing, and settlement. End-to-end testing guarantees the quality of products that we provide to users and customers. Client testing technologies and tools mainly involve end-to-end (native or h5) app or web UI automation, security, performance stability (monkey test or crash rate), traffic (weak network), power consumption, compatibility, and adaptation. We have made some improvements and innovations based on the testing technologies and open-source technical systems of other teams in the group. For example, we have introduced intelligent rich media verification into automated client testing to complete image comparison, text OCR, local feature matching, edge detection, key frame-based video verification (component animations and pre-movie adverts), and other features. This verifies all the presentation forms of advertising and recommendations on the client. In addition to common API Service-level tests, we used the comparative test method based on data traffic playback for testing Java APIs and the client SDK. This method has a good effect on the quality of the API, database, file, and traffic comparison. For end-to-end testing and verification, we focus on API automation testing and only perform simple logic verification for UI automation due to a fast UI iteration speed. Full UI verification regression (functional logic and style experience) is tested manually. We used some outsourcing test services as needed.

2. Online Engineering System Testing

This aspect is the focus of the functional testing of a system. The search, recommendation, and advertising systems are essentially data management systems that manage data in the commodity, user, seller, and advertiser dimensions. The online engineering system needs to store a large amount of data to the machine's memory in a certain data structure to provide the recall, evaluation, and convergence services. Here, the basic principle of functional testing is to send a request or query string and verify the response results. On this basis, multiple technologies that improve the efficiency of test case generation and execution are used. We have integrated visualization and intelligence technologies, including intelligent case generation, intelligent regression, intelligent attribution of failures, precise test coverage, and functional A/B testing into the testing methodology to solve problems, such as high writing costs, difficult debugging, and low regression efficiency of large-scale heterogeneous functional test cases of the online engineering system. The online service engineering of the search, recommendation, or advertising system consists of 20 to 30 different online modules. It takes a lot of time to test these online service modules. Our primary optimization objectives are case writing efficiency and regression running efficiency. We have used case expansion and recommendation technologies and the genetic algorithm to dynamically generate valid test cases and dynamically orchestrated regression technologies in the case execution phase, dramatically improving the functional testing coverage rate of online modules.
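The article does not describe how its genetic algorithm is set up, so the following is only a minimal sketch of the general idea of evolving test cases toward uncovered behavior. The parameter space, the `toy_coverage` function (a stand-in for real coverage instrumentation), and all names are hypothetical.

```python
import random

# Hypothetical query parameters of an online module under test.
PARAM_SPACE = {
    "category": ["shoes", "phone", "book"],
    "sort": ["price", "sales", "relevance"],
    "page": [1, 2, 50],
    "has_ad": [True, False],
}

def toy_coverage(case):
    """Stand-in for coverage instrumentation: branch labels hit by a case."""
    hit = {f"category:{case['category']}", f"sort:{case['sort']}"}
    if case["page"] > 10:
        hit.add("deep_paging")
    if case["has_ad"] and case["sort"] == "relevance":
        hit.add("ad_blending")
    return hit

def random_case(rng):
    return {k: rng.choice(v) for k, v in PARAM_SPACE.items()}

def crossover(a, b, rng):
    # Each parameter is inherited from one of the two parents.
    return {k: (a if rng.random() < 0.5 else b)[k] for k in PARAM_SPACE}

def mutate(case, rng, rate=0.2):
    # Each parameter is re-drawn from its domain with probability `rate`.
    return {k: (rng.choice(PARAM_SPACE[k]) if rng.random() < rate else v)
            for k, v in case.items()}

def generate_cases(coverage, generations=30, pop_size=12, seed=0):
    """Evolve cases; keep each generation's best case if it adds coverage."""
    rng = random.Random(seed)
    population = [random_case(rng) for _ in range(pop_size)]
    covered, suite = set(), []
    for _ in range(generations):
        # Fitness: number of not-yet-covered branches a case would add.
        scored = sorted(population,
                        key=lambda c: len(coverage(c) - covered),
                        reverse=True)
        best = scored[0]
        if coverage(best) - covered:
            suite.append(best)
            covered |= coverage(best)
        parents = scored[: pop_size // 2]
        population = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size)
        ]
    return suite, covered
```

Calling `generate_cases(toy_coverage)` returns a small suite in which every case contributed at least one new branch, which is the property that keeps a generated suite from growing with redundant cases.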

In addition, we use online queries for comparative tests to verify the differences introduced by functional changes, analyzing result consistency and data distribution between the release candidate and the online system. This helps us discover functional issues in the release candidate. Beyond comparative tests, we regularly inspect and monitor the recent top N online queries. When the number of queries reaches a certain level, for example, 80% of long-tail queries in the last week, this also makes it easy to verify the integrity and diversity of engine data. Finally, we need to emphasize the test policy in this area. The algorithm logic, for example, the service logic of recall and sorting, is complex and involves different business and segmentation models. Algorithm engineers design this logic, and only they know the functional logic of the models and how it has changed, so they also design and execute the test cases for it. With the online debugging system, algorithm engineers can see the logic differences between the algorithms running online and the offline algorithms to be released, so they can easily write test cases. Test engineers build the overall test framework and tool environment and write and run the basic test cases. The last chapter of this article also discusses this test policy adjustment.
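As an illustration of the comparative test described above, the following sketch replays the same queries against the online system and the release candidate and flags queries whose top-N results diverge. The Jaccard overlap metric, the threshold, and the function names are assumptions made for this example, not the metrics used by the authors.

```python
from typing import Dict, List

def topn_overlap(baseline: List[str], candidate: List[str], n: int = 10) -> float:
    """Jaccard overlap of the top-n result IDs returned by two systems."""
    a, b = set(baseline[:n]), set(candidate[:n])
    if not a and not b:
        return 1.0  # both systems returned nothing: treat as identical
    return len(a & b) / len(a | b)

def compare_systems(
    queries: List[str],
    baseline_results: Dict[str, List[str]],
    candidate_results: Dict[str, List[str]],
    n: int = 10,
    threshold: float = 0.8,
) -> Dict[str, float]:
    """Return the queries whose top-n overlap falls below the threshold."""
    suspicious = {}
    for q in queries:
        score = topn_overlap(
            baseline_results.get(q, []), candidate_results.get(q, []), n
        )
        if score < threshold:
            suspicious[q] = score
    return suspicious
```

Because ranking changes are often intentional, a low overlap is a signal for review rather than an automatic failure.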

3. Offline System Testing or Algorithm Engineering Testing

In terms of the data process, algorithm engineering includes algorithm model building, training, and release. We need to know how to verify the quality of feature samples and models of the whole pipeline, from the offline process to the online process, including feature extraction, sample generation, model training, and online prediction. Therefore, the algorithm test focus includes the following:

The first two focus areas involve the data quality and feature efficacy and will be introduced in section 4. Various data quality metrics are used to evaluate quality.

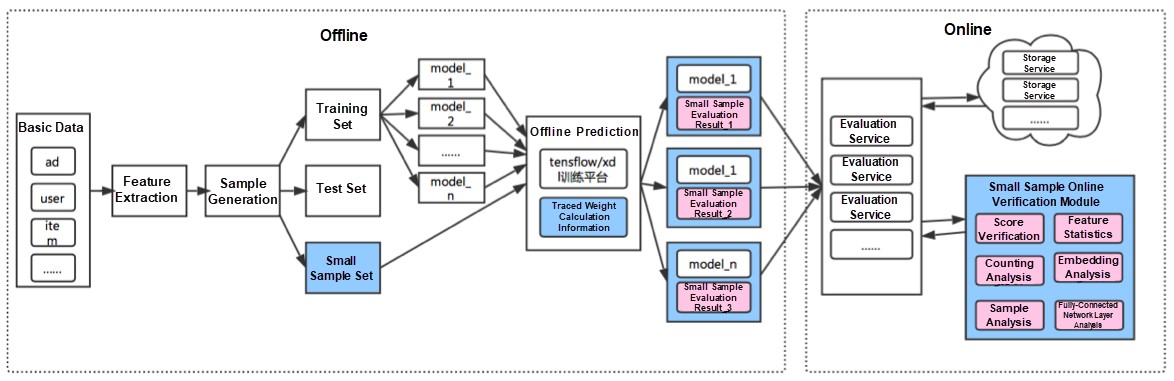

Here we introduce the last focus: testing before the online algorithm evaluation service is released. It determines the final service quality of the model and is therefore important. Before a model is released to provide services, we need to test and verify it. In addition to common test data sets, we set aside a separate part of the sample sets, which is called a small sample data set. We compare the online and offline scores of this small sample data set to comprehensively verify the online service quality of the model. Small sample scoring provides capabilities similar to grayscale verification. Figure 2 shows the small sample testing process in detail.

Figure 2: Small Sample Testing
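The core check in small sample testing, comparing the offline score and the online score of each sample, can be sketched as follows. The tolerance, the allowed mismatch rate, and the data layout (a dictionary from sample ID to score) are assumptions for illustration only.

```python
def compare_scores(offline, online, abs_tol=1e-4, max_mismatch_rate=0.001):
    """Compare per-sample scores from offline training and online serving.

    offline, online: dict mapping sample_id -> predicted score.
    A sample fails if the online service did not score it or if the two
    scores differ by more than abs_tol.
    """
    missing = [sid for sid in offline if sid not in online]
    mismatched = [
        sid for sid in offline
        if sid in online and abs(offline[sid] - online[sid]) > abs_tol
    ]
    rate = (len(missing) + len(mismatched)) / len(offline) if offline else 0.0
    return {
        "missing": missing,
        "mismatched": mismatched,
        "mismatch_rate": rate,
        "passed": rate <= max_mismatch_rate,
    }
```

A non-zero tolerance is used because online and offline inference may differ slightly in numeric precision even when the model and features are identical.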

For offline system testing, we have performed some intensive research to guarantee the quality of the deep learning and training platform, and have encountered the following challenges:

For the preceding challenges, we provide the following solutions:

This section mainly includes the following:

1. Testing the Real-Time Update Link of Engine Data

In a real-time update link, data is first read from the upstream data source or data table (Alibaba message middleware and offline data tables, such as TT, MetaQ, and ODPS). Then, the data is processed by a real-time computing task on the StreamCompute platform (Alibaba real-time computing platforms, such as Bayes engine and Blink) to generate update messages that the engine can accept. After receiving messages of this type, the engine updates its data. The link verification points include:

To verify data correctness and consistency, we used real-time stream data comparison and full data comparison. Data timeliness depends on the underlying resources of the computing platform to ensure a millisecond-level data update speed. To verify update timeliness, we record update timestamps. To verify the response time and concurrent processing capability of the whole link, we simulate upstream data and copy traffic.
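Timeliness verification from recorded timestamps can be sketched as below: each update carries the time it was produced upstream and the time it became visible in the engine, and the latency distribution is checked against a target. The event layout, the nearest-rank percentile, and the SLA value are assumptions for this example.

```python
import math

def percentile(values, p):
    """Nearest-rank percentile of a non-empty list, with p in (0, 100]."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

def update_latency_report(events, sla_ms, p=99):
    """events: iterable of (source_ts_ms, engine_visible_ts_ms) pairs."""
    latencies = [engine_ts - source_ts for source_ts, engine_ts in events]
    p_value = percentile(latencies, p)
    return {
        "percentile_ms": p_value,
        "max_ms": max(latencies),
        "within_sla": p_value <= sla_ms,
    }
```

For example, `update_latency_report([(0, 20), (10, 45), (20, 50), (30, 130)], sla_ms=100)` evaluates the 99th percentile of the four recorded update latencies against a 100 ms target.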

2. Testing the Real-Time Update Link of Models (Online Deep Learning)

In the last two years, Online Deep Learning (ODL) has emerged to capture the algorithm benefits of real-time behavior. Users' real-time behavior feature data needs to be trained into models. Online service models are updated and replaced at 10- to 15-minute intervals, which leaves at most 10 minutes for model verification. This is the quality challenge that ODL brings. To solve this problem, we use two methods.

The main method is to establish a quality metrics monitoring system for the full ODL link, that is, the link from sample building to online prediction, including verification of sample domain metrics and training domain metrics.

The metrics are selected based on whether they are associated with the effect. For CTR evaluation, we can calculate metrics, such as the AUC, GAUC, and score_avg, and check whether the difference between train_auc and test_auc and between pctr and actual_ctr is within a reasonable scope. For the difference between train_auc and test_auc, check whether overfitting occurs. For the difference between pctr and actual_ctr, check the accuracy of the score. Another key point is the test set selection. We recommend that you select data in the next time window and data randomly sampled from data in a past period of time, for example, one week, as the test set. Future data is used to test the model generalization performance, and existing and comprehensive data is used to test whether the model deviates. This reduces the adverse impact of partial exceptional test samples on evaluation metrics.
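The metrics named above can be computed with a few lines of plain Python. This is a sketch under stated assumptions: AUC is computed from the rank-sum (Mann-Whitney) statistic, GAUC is taken to be the impression-weighted average of per-user AUC (one common definition; the article does not define it), and the pctr versus actual_ctr check is expressed as a ratio.

```python
from collections import defaultdict

def auc(labels, scores):
    """AUC from the rank-sum statistic, using average ranks for ties.

    Returns None when only one class is present.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        return None
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    pos_rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (pos_rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)

def gauc(user_ids, labels, scores):
    """Impression-weighted mean of per-user AUC; one-class users are skipped."""
    groups = defaultdict(lambda: ([], []))
    for u, y, s in zip(user_ids, labels, scores):
        groups[u][0].append(y)
        groups[u][1].append(s)
    total, weight = 0.0, 0
    for ys, ss in groups.values():
        user_auc = auc(ys, ss)
        if user_auc is not None:
            total += user_auc * len(ys)
            weight += len(ys)
    return total / weight if weight else None

def calibration_ratio(labels, scores):
    """Mean predicted CTR divided by actual CTR; close to 1.0 is good."""
    actual_ctr = sum(labels) / len(labels)
    return (sum(scores) / len(scores)) / actual_ctr
```

With these helpers, the overfitting check is the gap `auc(train_labels, train_scores) - auc(test_labels, test_scores)`, and the score accuracy check is how far `calibration_ratio` deviates from 1.0.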

In addition to the metrics system, we designed an offline simulation system to simulate scoring in a simulated environment before a model is released.

In the offline test environment, the component scoring module of the online service scores models that need to be released based on online traffic. If any errors occur during the scoring of a model, the model fails verification and cannot be released. For models whose scores are normal, the average score value and score distribution are verified. The preceding two solutions, together with sample and model monitoring and interception, can significantly reduce the ODL quality risks.
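A release gate of this kind can be sketched as follows. The article only states that scoring errors, the average score, and the score distribution are verified; the use of the population stability index (PSI) as the distribution check, the thresholds, and the comparison against scores from the currently served model are assumptions made for this example.

```python
import math

def histogram(scores, bins=10):
    """Fraction of scores in each equal-width bin over [0, 1]."""
    counts = [0] * bins
    for s in scores:
        idx = max(0, min(int(s * bins), bins - 1))
        counts[idx] += 1
    return [c / len(scores) for c in counts]

def psi(expected, actual, eps=1e-6):
    """Population stability index between two binned distributions."""
    return sum(
        (a - e) * math.log((a + eps) / (e + eps))
        for e, a in zip(expected, actual)
    )

def verify_model_scores(baseline_scores, candidate_scores,
                        max_mean_shift=0.05, max_psi=0.2):
    """Return (passed, reason) for a candidate model scored on replayed traffic."""
    if not baseline_scores or not candidate_scores:
        return False, "no scores"
    for s in candidate_scores:
        if s is None or math.isnan(s) or not 0.0 <= s <= 1.0:
            return False, "invalid score"
    base_mean = sum(baseline_scores) / len(baseline_scores)
    cand_mean = sum(candidate_scores) / len(candidate_scores)
    if abs(cand_mean - base_mean) > max_mean_shift:
        return False, "mean shift"
    if psi(histogram(baseline_scores), histogram(candidate_scores)) > max_psi:
        return False, "distribution shift"
    return True, "ok"
```

A model that returns `(False, ...)` here would be blocked from release, which matches the interception behavior described above.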

For AI systems that consist of online and offline systems, the online system needs to respond to users' real-time access requests in a timely manner. Therefore, the performance of the online system is the focus of this section. The performance of the offline system depends greatly on the scheduling and use of computing resources on the training platform, and we typically use simple source data duplication to verify it. Online system performance testing consists of read and write performance tests. The write performance test was introduced in the discussion of real-time update link timeliness. Here, we introduce the read performance and capacity tests of the online system.

An online system typically consists of 20 to 30 different engine modules. The performance test result varies greatly depending on the data and test queries in the engine modules. In addition, it costs a lot to synchronize data between the offline and online performance test environments. Therefore, we selected an online production cluster for performance and capacity tests. For an online system that can process hundreds of thousands of queries per second, it is difficult to accurately control concurrent queries, and a simple multi-thread or multi-process control method can no longer solve the problem. We used a hill climbing algorithm (gradient multi-iteration hill climbing) to accurately control the traffic, with hundreds of stress test machines running in the backend, to incrementally detect the system performance level.
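The incremental probing idea can be illustrated with a simple step-and-back-off search: traffic is raised step by step while the latency target holds, and the step is halved whenever the target is violated. This is one possible reading of hill climbing for load control, not the authors' implementation; `measure_p99_ms` is a hypothetical callback that applies a given QPS and returns the observed p99 latency.

```python
def find_capacity(measure_p99_ms, sla_ms, start_qps, step, min_step):
    """Find the highest QPS whose p99 latency stays within sla_ms.

    Raises QPS by `step` while the SLA holds; on a violation the step is
    halved, so the search converges toward the capacity limit.
    """
    qps = start_qps
    if measure_p99_ms(qps) > sla_ms:
        return 0  # the system does not meet the SLA even at the start load
    while step >= min_step:
        candidate = qps + step
        if measure_p99_ms(candidate) <= sla_ms:
            qps = candidate  # SLA holds: keep climbing
        else:
            step //= 2  # SLA violated: stay at qps and probe more finely
    return qps

def fake_system_p99(qps):
    """Toy latency model used only to exercise the search."""
    return 10 + max(0, qps - 8000) // 100
```

With the toy model, `find_capacity(fake_system_p99, sla_ms=50, start_qps=1000, step=4000, min_step=250)` climbs through 5000, 9000, and 11000 QPS and settles on 12000, the largest load at which the simulated p99 is still 50 ms.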

Another direction is automatic stress testing and unattended implementation to automatically and smoothly complete the whole stress testing process from automatic scenario-based query selection online, to stress generation, to automatic mean shift verification. Together with traffic switchover between clusters, routine stress tests during the day and night can be performed, which makes online performance level and bottleneck analysis much more convenient.

Effect testing and evaluation is an important part of the algorithms of big data applications because the algorithm effect affects the benefits (revenue and GMV) of search and advertising businesses. We have invested heavily in this, which is classified into the following areas:

1. Quality and Efficacy Evaluation of Features and Samples

In terms of feature quality (whether data is present and how it is distributed) and feature effect (value to an algorithm), we identified some important metrics for feature metrics computing, such as the missing rate, high-frequency values, distribution change, and value correlation. During training and evaluation, a large number of intermediate metrics are causally related to the model effect. Systematic analysis of the modeling tensor, gradient, weight, and update can help optimize algorithms and locate problems.
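Three of the feature metrics named above can be sketched for a single categorical feature as follows. Measuring distribution change with the total variation distance is an assumption for this example; the article does not say which distance is used.

```python
from collections import Counter

def feature_stats(values, top_k=3):
    """Missing rate and most frequent values of one feature column."""
    total = len(values)
    missing = sum(1 for v in values if v is None)
    counts = Counter(v for v in values if v is not None)
    return {
        "missing_rate": missing / total if total else 0.0,
        "top_values": counts.most_common(top_k),
    }

def distribution_change(old_values, new_values):
    """Total variation distance between two non-empty samples, in [0, 1]."""
    old_counts, new_counts = Counter(old_values), Counter(new_values)
    keys = set(old_counts) | set(new_counts)
    return 0.5 * sum(
        abs(old_counts[k] / len(old_values) - new_counts[k] / len(new_values))
        for k in keys
    )
```

Tracking these values per feature and per day makes it possible to alert when a feature suddenly goes missing upstream or its value distribution drifts between training windows.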

In addition, AUC algorithm optimization and analysis of more evaluation metrics, such as the ROC, PR, and distribution can comprehensively evaluate the model effect. In the past two years, with increasing amounts of data, we used hundreds of billions of parameters and trillions of samples during modeling and training. Graph Deep Learning also entered the phase of tens of billions of nodes and hundreds of billions of edges. In the data sea, how to visualize feature samples and the preceding metrics has become a puzzle. We have made a lot of optimizations and improvements based on Google's open-source TensorBoard, which helps algorithm engineers support data metrics visualization, training process debugging, and deep learning model interpretability.

2. Online Traffic Experiment

Before an algorithm project is formally released, the model needs to receive real online traffic in the experimental environment so that its effect can be tested and optimized. Starting from our first-generation online hierarchical experiment platform, which was built on the Google hierarchical experiment architecture ("Overlapping Experiment Infrastructure: More, Better, Faster Experimentation," Google), we have made many improvements to concurrent experiments, parameter management, inter-parameter coverage, experiment quality assurance, experiment debugging capability, and experiment expansion capability. This significantly improves concurrent traffic reuse and security, helping reach the real goal of the production experiment. Verifying real traffic on the online experimental platform helps prove the effect and quality of the model.
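The traffic reuse that a layered experiment design provides can be sketched with per-layer hashing: each layer hashes the user independently, so the same user can take part in one experiment per layer at the same time. The layer names, experiment names, bucket count, and the choice of MD5 as the hash are illustrative assumptions.

```python
import hashlib

def bucket(user_id: str, layer: str, num_buckets: int = 100) -> int:
    """Deterministically map a user to a bucket within one layer.

    Including the layer name in the hash input makes bucket assignments
    in different layers independent of each other.
    """
    digest = hashlib.md5(f"{layer}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % num_buckets

def assign(user_id, layers):
    """Pick at most one experiment per layer for a user.

    layers: dict mapping layer name -> list of
            (experiment_name, start_bucket, end_bucket), end exclusive.
    """
    result = {}
    for layer, experiments in layers.items():
        b = bucket(user_id, layer)
        for name, start, end in experiments:
            if start <= b < end:
                result[layer] = name
                break
    return result

# Hypothetical configuration: two layers that reuse the same traffic.
LAYERS = {
    "recall": [("recall_v1", 0, 50), ("recall_v2", 50, 100)],
    "ranking": [("rank_base", 0, 90), ("rank_new", 90, 100)],
}
```

`assign("user-42", LAYERS)` always returns the same experiments for the same user, which keeps the user experience stable for the duration of an experiment.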

3. Data Effect Evaluation

Data effect evaluation includes relevance evaluation and effect evaluation. Relevance is an important evaluation metric of the relevance model. We evaluate the search result metrics to obtain the relevance score of each search result. Specific metrics include the Customer Satisfaction Score (CSAT), Net Promoter Score (NPS), Customer Effort Score (CES), and HEART framework. CSAT values include very satisfied, moderately satisfied, slightly satisfied, moderately dissatisfied, and very dissatisfied. NPS was proposed in 2003 by Fred Reichheld, the founder of the customer loyalty business of Bain & Company. NPS measures users' willingness to recommend a product and is used to understand users' loyalty. CES indicates how difficult it is for users to resolve an issue by using a product or service. The HEART framework comes from Google and consists of happiness, engagement, adoption, retention, and task success.

To evaluate the data effects, we use data statistics and analysis. Before an algorithm model is used to provide services, we need to accurately verify its service effect. In addition to the online and offline comparison described in the first section, we need more objective data metrics. Here, we use A/B testing with real traffic. We route 5% of online traffic to the release-candidate model. Then, we compare the effect of this 5% of traffic with the benchmark bucket and analyze metrics in the user experience (relevance), platform benefit, and customer value dimensions. Based on users' relevance evaluation results, platform revenue or GMV, and customer ROI, we check the potential impact of the new model on buyers, the platform, and sellers and provide the data needed to support the final business decision. As the traffic ratio increases from 5% to 10%, 20%, and 50%, functional, performance, and effect issues can be detected step by step, which helps further reduce major risks. Unlike the other technical methods mentioned in this document, this method combines statistical data analysis with engineering techniques, and in our experience it gives better results.
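When the experiment bucket and the benchmark bucket are compared on a rate metric such as CTR, a significance test helps separate a real effect from noise. The following sketch uses a standard two-proportion z-test; the article does not say which statistical test the authors use, so this choice is an assumption.

```python
import math

def two_proportion_z(clicks_a, views_a, clicks_b, views_b):
    """Two-sided z-test for the difference between two click-through rates.

    A is the benchmark bucket and B is the experiment bucket.
    Returns (z, p_value); a positive z means B has the higher rate.
    """
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value
```

For example, `two_proportion_z(1000, 20000, 600, 10000)` compares a 5% benchmark CTR with a 6% experiment CTR. The same comparison would be repeated for each metric dimension and at each traffic ratio as the rollout expands.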

Similar to the stability construction of other BUs, we use three release measures (phased release, monitoring, and rollback) to solve quality issues in the code, system, and model release process. We also use online disaster recovery drills, fault injection and drills, and Attack and Defense Drills (ADD) to improve the stability and availability of online systems. We provide the C++ version of Monkey King for Alibaba's open-source chaos engineering. For O&M control based on AI Ops and service mesh, we have tried to provide intelligent O&M, transparent data analysis, automatic traffic splitting, and auto scaling. With the evolution of service mesh technology in C++ online services, we predict that the service system will be able to perceive the traffic changes of business applications without changes to code or configuration, which enables traffic scheduling and isolation. ADD will be further developed, automated, and made process-based to become a standard format for implementing chaos engineering. This part is still in its infancy, so I will not introduce content that has not yet been implemented. However, we believe that this is a promising direction for development. It is different from traditional O&M and is close to Google's Site Reliability Engineering (SRE).

This section aims to improve the efficiency in the test and R&D phases. In this direction, we build the DevOps tool chain and tools used in the whole R&D closed loop, including development, testing, engineering release, model release (model debugging and positioning), and customer feedback (experience evaluation, crowdsourced testing, and customer issue debugging). In envisaged DevOps scenarios, development personnel can use these tools to independently complete requirement development, testing, and release and process customer feedback. However, this direction has little to do with testing, so I will not introduce it in detail.

Solutions to several major problem domains in big data application testing have been introduced. In this chapter, I will introduce some of my preliminary judgments about the future of big data application testing.

We believe that backend service testing will no longer require dedicated test personnel in the future. Development engineers can use suitable test tools to complete test tasks efficiently. Dedicated test teams will focus more on frontend product quality related to user interactions. Like product managers, dedicated test teams need to consider product quality issues from the users' perspective. Product delivery and interaction verification are the focuses in this area. Most server-end tests can be automated, and many service-level verification tasks can only be performed automatically. Development personnel have stronger capabilities in API-level automated code development than test personnel. In addition, if development personnel perform testing, the cost of communication between test and development personnel, which accounts for a large percentage of the whole release link, can be reduced. Moreover, as described in the first chapter, algorithm engineers have a clearer understanding of the service logic.

Therefore, we hope that backend testing can be completed independently by engineering personnel or algorithm engineers. In this new production model, test personnel focus more on test tool R&D, including the automated test framework, test environment deployment tools, test data construction and generation, release smoke-testing tools, and continuous integration and deployment. Google uses this model, and we also tried a transformation in this direction this year. The effect on quality and efficiency has been good. As the first test transformation roadmap led by a Chinese Internet company, we believe that it can give you some guidance. Even though the test team has transformed in this direction, backend testing is still required; only the people who execute the test tasks have changed to development engineers. The backend testing technologies and directions mentioned in this document still apply. In addition to efficiency tools, the online system stability construction described in section 5 of the preceding chapter is a good direction for the transformation of the backend service test team.

The concept of Testing in Production (TIP) was proposed by Microsoft engineers about 10 years ago. We see TIP as a future testing method because of the following factors:

As shown in Figure 3, intelligent testing has models with different maturity levels, including manual testing, automation, auxiliary intelligent testing, and highly intelligent testing, similar to autonomous driving classification. Machine intelligence is a tool that applies to different test phases. Different algorithms and models may be used in the test data and case design phase, test case regression implementation phase, test result inspection phase, and multiple technical risk fields, such as online exceptional metric detection. Intelligent testing is a product of development and requires digitalization. Automated testing is a simple digitalization. Without digitalization or automation, there are no requirements for intelligent analysis or optimization.

For testing, some simple algorithms may achieve better results than complex deep learning or reinforcement learning algorithms. When a complex deep learning or reinforcement learning algorithm is used, it is difficult to extract features and build models and samples, and feedback from running tests is often unavailable. However, using the latest algorithm technology to optimize efficiency in different test phases is a direction for the future. Just like autonomous driving, highly intelligent testing is not yet mature because of insufficient test data rather than insufficient algorithms or models.

Figure 3: Intelligent Test Technologies

Nearly 10 years of continuous development, with an extensive effort by many predecessors, has enabled Alibaba's search, recommendation, and advertising systems to achieve good quality in many segmented fields. The methods described in this article are concentrated on internal tools. In the future, we will gradually make these tools publicly available, to give back to the community. Due to space limitations, I have not elaborated on many technical details here. If you want to learn more, follow the Alibaba technical quality team's test book, "Alibaba's Way of Testing" (provisional title) published by the Publishing House of the Electronics Industry. The testing of big data applications based on AI algorithms described in this article is recorded in Chapter 6 of this book. If you want more information, you are welcome to join our team or open-source community for further research and building on the preceding areas.

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
