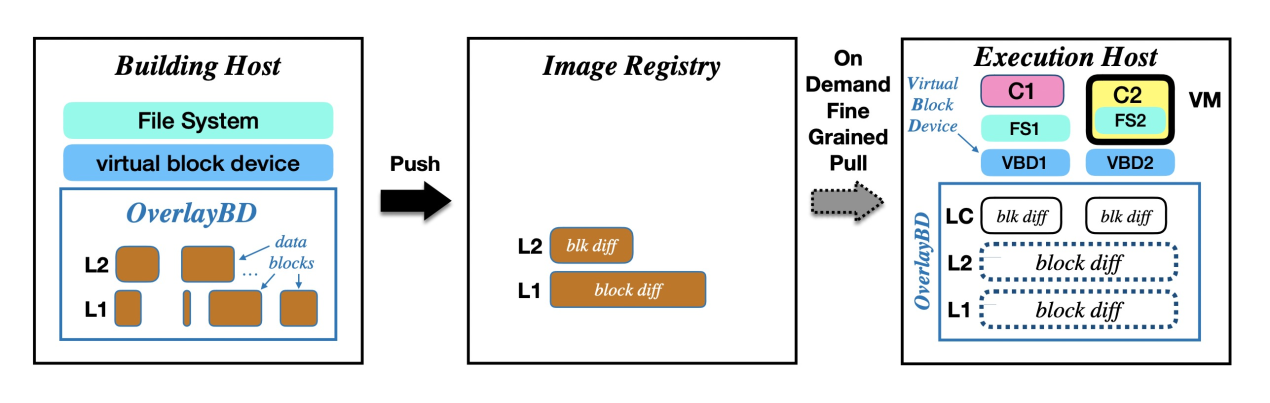

Alibaba Cloud recently open-sourced its cloud-native accelerated container image technology. Compared with the traditional layered tar file format, its OverlayBD image format reads data on demand over the network, so containers can start quickly.

This solution was originally part of the Alibaba Cloud Data Accelerator for Disaggregated Infrastructure (DADI). DADI is designed to provide data access acceleration technologies for architectures that separate computing from storage. Accelerated image delivery was DADI's breakthrough in the container and cloud-native fields. Since its launch in 2019, DADI has been used to deploy a large number of containers in production and has started more than one billion containers in total. It supports multiple business lines of Alibaba Group and Alibaba Cloud and has significantly improved the efficiency of application release and scaling. In 2020, the DADI team published the paper "DADI: Block-Level Image Service for Agile and Elastic Application Deployment" at USENIX ATC'20 [1] and then launched an open-source project. The team aims to contribute this technology to the community and, by establishing standards and building an ecosystem, to attract more developers to container and cloud-native performance optimization.

With the rise of Kubernetes and cloud-native computing, containers have been widely adopted in enterprises. Fast deployment and startup are core advantages of containers. Fast startup here means that instantiating a container from a local image takes very little time; that is, the "hot startup" time is short. During a cold start, however, no image is available locally, and a container can only be created after its image has been downloaded from the registry. After a business image has been maintained and updated for a long time, its number of layers and overall size can become very large, reaching hundreds of megabytes or several gigabytes. As a result, the cold startup of containers in production usually takes several minutes. As scale increases, network congestion within the cluster also keeps images from being downloaded from the registry quickly.

For example, during a previous Double 11 Global Shopping Festival, an Alibaba application triggered a scale-out because of insufficient capacity. However, the scale-out took a long time because of the high concurrency, and the user experience was affected during this period. After DADI was released in 2019, the total time that containers using the new image format spent on "image pull and container startup" was five times shorter than for common containers, and the p99 long-tail time was 17 times shorter.

Accessing image data remotely is the key to solving the slow cold startup of containers. The industry has tried to solve this problem in various ways, such as storing container images on block storage or NAS to enable on-demand reading, or using network-based distribution technologies, including peer-to-peer (P2P). Other approaches download images from multiple sources or preload images onto hosts in advance to avoid single-point network bottlenecks. In recent years, new image formats have attracted growing discussion. According to the research of Harter et al. [2], pulling images accounts for 76% of container startup time, while only 6.4% of the pulled data is actually read. Therefore, images that support on-demand reading have become the trend. Google's Seekable tar.gz (Stargz) [3] can selectively locate and extract specific files from the archive without scanning or decompressing the entire image. Stargz is designed to improve the performance of image pulling. Its lazy-pull technology does not pull the entire image file, which achieves on-demand reading. Stargz also introduced a containerd snapshotter plug-in that optimizes I/O at the storage layer to improve runtime efficiency.

During the lifecycle of a container, an image needs to be mounted once it is ready. The core technology for mounting a layered image is the overlay filesystem (OverlayFS). It merges multiple lower-layer directories in a stacked form and exposes them as a unified read-only file system. The block storage and NAS solutions mentioned above can generally stack layers in the form of snapshots. CRFS, which is associated with Stargz, can also be regarded as another implementation of an overlay file system.

DADI does not use OverlayFS directly. Instead, it borrows the idea of OverlayFS and earlier union filesystems and proposes a brand-new block device-based layered stacking technology called OverlayBD, which provides a series of block-based merged data views for container images. The implementation of OverlayBD is very simple, which makes many features practical to build. By contrast, implementing a fully POSIX-compatible file system interface is full of challenges and prone to bugs, as the history of various mainstream file systems shows.

OverlayBD has the following advantages over OverlayFS:

To understand the principles of OverlayBD, you first need to understand the layering mechanism of container images. A container image consists of multiple incremental layer files, which are stacked when the image is used. As a result, the system only needs to distribute the layer files when distributing an image. Each layer is a compressed package that is essentially the difference from the previous layer, including file additions, modifications, and deletions. The container engine stacks these differences using its storage driver and mounts the result to a specified directory in read-only mode. This directory is called lower_dir. For a writable layer mounted in read/write mode, the mount directory is generally called upper_dir.
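The stacking of file-level differences described above can be illustrated with a minimal sketch. It is not the storage driver's actual code: each layer is modeled as a hypothetical dictionary from path to content, and a deletion is modeled with a `DELETED` marker (real OCI layers record deletions with whiteout files inside the tar archive).

```python
# Hypothetical model: a layer is a dict {path: content}; DELETED marks a removal.
DELETED = None


def apply_layers(layers):
    """Stack layer diffs from bottom to top and return the merged file view."""
    view = {}
    for diff in layers:
        for path, content in diff.items():
            if content is DELETED:
                view.pop(path, None)   # file removed by this layer
            else:
                view[path] = content   # file added or modified by this layer
    return view


base = {"/etc/app.conf": "v1", "/bin/app": "binary-1"}
update = {"/etc/app.conf": "v2", "/tmp/cache": "x"}
cleanup = {"/tmp/cache": DELETED}

merged = apply_layers([base, update, cleanup])
```

Here `merged` holds the view a container would see in its read-only lower directory: the modified configuration file, the unchanged binary, and no cache file.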

Note: OverlayBD itself has no concept of files. It simply abstracts the image into a virtual block device, on which a regular file system is mounted. When a user application reads data, the read request is first processed by the regular file system, which converts it into one or more reads of the virtual block device. These read requests are forwarded to a receiving program in user space, which is the runtime carrier of OverlayBD. Finally, they are converted into random reads of one or more layers.

Like traditional images, OverlayBD retains the layer structure internally, but the content of each layer is a series of data blocks that correspond to the file system changes. OverlayBD provides a merged view to the layer above it. The stacking rule is very simple: for any data block, the most recent change always wins, and blocks that were never changed in any layer are treated as zero blocks. To the layer below it, OverlayBD provides the ability to export a series of data blocks into a layer file that is dense, non-sparse, and indexable. As a result, reading a contiguous logical block address (LBA) range on the block device may return small pieces of data that originally belonged to different layers. These pieces are called segments. The layer number stored in a segment's attributes is used to map the read to the file of that layer. Because the layer files of traditional container images can be stored in a registry or in OSS, OverlayBD images can be stored the same way.
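The stacking rule can be sketched in a few lines. This is an illustration under simplifying assumptions, not the OverlayBD index format: each layer is a hypothetical dictionary from LBA to block data, `BLOCK_SIZE` is shrunk to 4 bytes for readability, and the names `Segment`, `resolve`, and `read_blocks` are invented for this example.

```python
from dataclasses import dataclass

BLOCK_SIZE = 4  # hypothetical, tiny on purpose so the example is easy to follow


@dataclass(frozen=True)
class Segment:
    offset: int  # first LBA covered by the segment
    length: int  # number of contiguous blocks
    layer: int   # index of the layer that owns the data; -1 means zero blocks


def resolve(layers, start, count):
    """Split the LBA range [start, start + count) into segments.

    `layers` is ordered from bottom to top; the topmost layer that wrote
    a block wins, and blocks written by no layer are zero blocks.
    """
    segments = []
    for lba in range(start, start + count):
        owner = -1
        for idx in range(len(layers) - 1, -1, -1):
            if lba in layers[idx]:
                owner = idx
                break
        last = segments[-1] if segments else None
        if last and last.layer == owner and last.offset + last.length == lba:
            segments[-1] = Segment(last.offset, last.length + 1, owner)
        else:
            segments.append(Segment(lba, 1, owner))
    return segments


def read_blocks(layers, start, count):
    """Read a contiguous LBA range through the merged view."""
    out = bytearray()
    for seg in resolve(layers, start, count):
        for lba in range(seg.offset, seg.offset + seg.length):
            out += layers[seg.layer][lba] if seg.layer >= 0 else bytes(BLOCK_SIZE)
    return bytes(out)


layers = [
    {0: b"a0a0", 1: b"a1a1", 2: b"a2a2", 3: b"a3a3"},  # bottom layer
    {1: b"b1b1", 2: b"b2b2"},                          # middle layer
    {2: b"c2c2"},                                      # top layer
]
segments = resolve(layers, 0, 6)
```

A single six-block read here touches all three layers plus a run of zero blocks, which is why a contiguous LBA range can fan out into several per-layer reads.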

For better compatibility, OverlayBD wraps each layer file in a tar header and footer, so the layer file is disguised as a tar file. Because the tar file contains only one file, on-demand reading is not affected. By default, Docker, Containerd, and BuildKit all decompress or compress images when downloading or uploading them, and this step cannot be bypassed without modifying their code. The tar disguise therefore helps compatibility and keeps the workflow uniform. For example, users only need to provide plug-ins, without modifying code, to convert, build, or download images.

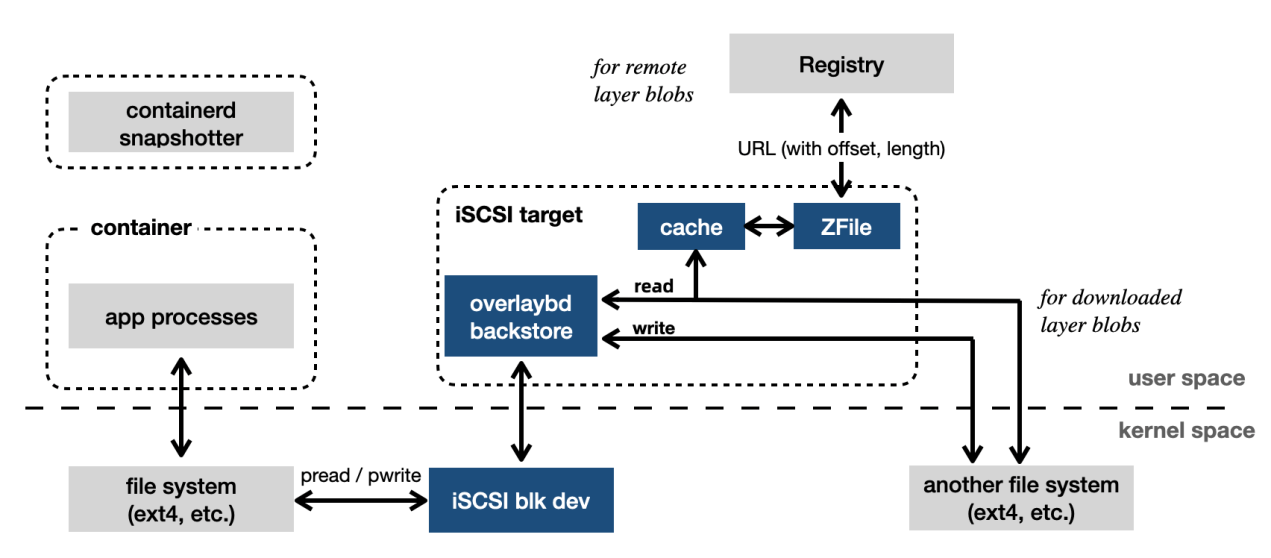

The figure above shows the overall architecture of DADI. All components are described below:

Containerd has provided initial support for starting remote images since version 1.4, and Kubernetes has explicitly deprecated Docker as a runtime. Therefore, the open-source version of DADI supports the Containerd ecosystem first and Docker second.

The core function of a snapshotter is to abstract the service interfaces for mounting and unmounting the container root filesystem (rootfs). It is designed to replace the graphdriver module of earlier Docker versions, simplifying the storage driver while remaining compatible with both block device snapshots and OverlayFS.

The OverlayBD snapshotter provided by DADI enables the container engine to support OverlayBD images, that is, to mount virtual block devices to the corresponding directories. It is also compatible with traditional Open Container Initiative (OCI) tar images, so users can continue to run normal containers on OverlayFS.

iSCSI is a widely supported remote block device protocol that is stable and can recover from failures. The OverlayBD module serves as the storage backend of the iSCSI protocol. If the program crashes unexpectedly, service can be restored by restarting the module. By contrast, file system-based image acceleration solutions, such as Stargz, cannot recover in this way.

The iSCSI target is the runtime carrier of OverlayBD. This project implements two target modules. The first is based on tgt [4]; thanks to tgt's backing store mechanism, the OverlayBD code can be compiled into a dynamic link library that is loaded at runtime. The second is based on the Linux kernel's LIO SCSI target (also known as TCMU) [5]. The entire target runs in the kernel, which makes it easier to expose a virtual block device.

ZFile is a data compression format that supports online decompression. It splits the source file into blocks of a fixed size, compresses each block individually, and maintains a jump table that records the physical offset of each compressed block in the ZFile. To read data from a ZFile, the reader looks up the jump table to find the position of the relevant blocks and decompresses only those blocks.
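The jump-table idea can be sketched as follows. This is an illustration only and does not reproduce the real ZFile on-disk layout: `zlib` from the Python standard library stands in for the actual compression algorithms, and `BLOCK_SIZE`, `compress_blocks`, and `read_range` are hypothetical names chosen for this example.

```python
import zlib

BLOCK_SIZE = 4096  # hypothetical fixed block size


def compress_blocks(data, block_size=BLOCK_SIZE):
    """Compress `data` block by block.

    Returns (payload, jump_table), where jump_table[i] is the physical
    offset of compressed block i and the final entry marks the end.
    """
    payload = bytearray()
    jump_table = [0]
    for pos in range(0, len(data), block_size):
        payload += zlib.compress(data[pos:pos + block_size])
        jump_table.append(len(payload))
    return bytes(payload), jump_table


def read_range(payload, jump_table, offset, length, block_size=BLOCK_SIZE):
    """Read `length` logical bytes at `offset`, decompressing only the
    blocks that overlap the range. The range must lie inside the file."""
    if length <= 0:
        return b""
    first = offset // block_size
    last = (offset + length - 1) // block_size
    out = bytearray()
    for i in range(first, last + 1):
        out += zlib.decompress(payload[jump_table[i]:jump_table[i + 1]])
    skip = offset - first * block_size
    return bytes(out[skip:skip + length])


source = bytes(range(256)) * 100           # 25,600 bytes of sample data
payload, table = compress_blocks(source)
piece = read_range(payload, table, 5000, 9000)
```

Because each block is compressed independently, a random read costs one table lookup plus the decompression of the few blocks that overlap the requested range, regardless of the total file size.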

ZFile supports various efficient compression algorithms, including LZ4 and Zstandard. Decompression is very fast and has low overhead, so ZFile effectively saves storage space and reduces the volume of data transmitted. Experimental data shows that reading a remote ZFile on demand with decompression performs better than loading uncompressed data, because the time saved on transmission is greater than the extra overhead of decompression.

OverlayBD supports exporting layer files in ZFile format.

As mentioned above, the layer files are stored in the registry, and the read I/O of containers on the block device is mapped to requests to the registry, which relies on the registry's support for HTTP 206 Partial Content. Thanks to the cache mechanism, however, this is not always the case. Some time after the container starts, the cache automatically downloads the layer files and persists them to the local file system. On a cache hit, read I/O is no longer mapped to the registry but is served locally.
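The read path described above can be sketched as follows. This is a simplified model, not DADI's cache implementation: `LayerReader` and its `fetch_remote` callback are hypothetical, and the callback is assumed to perform an HTTP GET against the registry with the given headers and return the body of the 206 response. Only the `Range` header syntax (`bytes=first-last`, both ends inclusive) is standard HTTP.

```python
def range_header(offset, length):
    """Build the HTTP Range header value for bytes [offset, offset + length)."""
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length must be > 0")
    return f"bytes={offset}-{offset + length - 1}"  # last byte is inclusive


class LayerReader:
    """Serve reads of one layer file from the local cache when possible,
    otherwise from the registry with a ranged request."""

    def __init__(self, fetch_remote):
        # Hypothetical callback: fetch_remote(headers: dict) -> bytes
        self.fetch_remote = fetch_remote
        self.local = None  # full layer content once the cache has downloaded it

    def fill_cache(self, blob):
        """Called when the background download of the layer has finished."""
        self.local = blob

    def read(self, offset, length):
        if self.local is not None:                       # cache hit: local read
            return self.local[offset:offset + length]
        headers = {"Range": range_header(offset, length)}
        return self.fetch_remote(headers)                # cache miss: registry


# A stand-in for the registry, used only to exercise the sketch.
layer_blob = bytes(range(200))
sent_requests = []


def fake_registry(headers):
    sent_requests.append(headers)
    first, last = headers["Range"][len("bytes="):].split("-")
    return layer_blob[int(first):int(last) + 1]


reader = LayerReader(fake_registry)
before_cache = reader.read(10, 5)   # goes to the (fake) registry
reader.fill_cache(layer_blob)
after_cache = reader.read(10, 5)    # served locally
```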

On March 25, 2021, the consulting firm Forrester released its Function-as-a-Service (FaaS) platforms evaluation report for the first quarter of 2021. Alibaba Cloud stood out for its product capabilities and received the highest score in eight evaluation dimensions, becoming a global FaaS leader alongside AWS. This was also the first time a technology enterprise from China had entered the FaaS leaders quadrant.

Containers are the foundation of a FaaS platform, and container startup speed determines the performance and response latency of the whole platform. Powered by DADI, Alibaba Cloud Function Compute (FC) reduces container startup time by 50% to 80% [6], delivering a new serverless experience.

Alibaba's open-source DADI and its OverlayBD image format help meet the demand for fast container startup. In the future, the project team will work with the community to speed up integration with mainstream toolchains. The team will also actively participate in formulating new image format standards, with the goal of making OverlayBD one of the standards for the OCI remote image format.

You are welcome to participate in the open-source project!

The format description capability of Manifest V1 of the OCI image is limited and cannot meet the requirements of remote images. The discussion of Manifest V2 has made no substantial progress so far, and overturning V1 is not realistic either. However, raw data can be described through the additional descriptors of the OCI Artifacts Manifest, which preserves compatibility and makes the format easier for users to accept. Artifacts is also a project that OCI and CNCF are promoting. In the future, DADI will adopt Artifacts and build a proof of concept (PoC).

DADI is designed to let users select an appropriate file system for building images based on their business needs. However, the corresponding interfaces are not open yet, and the ext4 file system is used by default. In the future, the team will improve these interfaces so that users can choose the file system that fits their needs.

Currently, users can build images by mounting the snapshotter to BuildKit. This workflow will be improved in the future to form a complete toolchain.

The I/O issued after a container starts is recorded, so the record can be replayed when the same image is started later to prefetch the data and avoid on-the-fly requests to the registry. This shortens the cold start time of containers by more than half. In theory, all stateless or idempotent containers can be recorded and replayed.
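The record-and-replay idea can be sketched as follows. It is an illustration, not DADI's trace format: the `IOTraceRecorder` class, the JSON encoding, and the `prefetch` callback are all assumptions made for this example.

```python
import json


class IOTraceRecorder:
    """Record the distinct reads issued during the first start of an image."""

    def __init__(self):
        self._seen = set()
        self.entries = []  # reads in the order they were first issued

    def on_read(self, layer, offset, length):
        key = (layer, offset, length)
        if key not in self._seen:  # repeated reads need only one prefetch
            self._seen.add(key)
            self.entries.append(key)

    def dumps(self):
        """Serialize the trace so it can be stored alongside the image."""
        return json.dumps(self.entries)


def replay(trace_json, prefetch):
    """Replay a stored trace by calling prefetch(layer, offset, length)
    for every recorded read. Returns the number of prefetches issued."""
    entries = json.loads(trace_json)
    for layer, offset, length in entries:
        prefetch(layer, offset, length)
    return len(entries)


# First start: record what the container actually reads.
recorder = IOTraceRecorder()
recorder.on_read("layer-a", 0, 4096)
recorder.on_read("layer-b", 8192, 4096)
recorder.on_read("layer-a", 0, 4096)  # duplicate, recorded only once

# Later start of the same image: prefetch before the container asks.
prefetched = []
count = replay(recorder.dumps(), lambda *read: prefetched.append(read))
```

Replaying in the recorded order means the data the container needs first is also fetched first, which is what lets the prefetch overlap with startup.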

[1] https://www.usenix.org/conference/atc20/presentation/li-huiba

[2] https://www.usenix.org/conference/fast16/technical-sessions/presentation/harter

[3] https://github.com/containerd/stargz-snapshotter
